How best to explain the importance of computer vision, and how it works, to businesses and consumers? Yolo, which stands for ‘you only look once’, is a good place to start. It is one of the main technologies at the heart of self-driving vehicles, helping them to protect drivers and pedestrians alike from collisions and to respond to traffic signs, road works and other hazards.

To do so, the vehicle must be able to detect and track objects in a single step, in real time. Put simply, Yolo overlays the field of view from the on-board camera with a regular grid. The algorithm identifies objects in each square and then uses this information to surround each object (which may cross multiple squares) with a single rectangle (a step known as bounding box regression).
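To make the grid idea concrete, here is a minimal sketch in Python. It assumes the convention from the original Yolo design, in which the square containing the centre of an object is the one responsible for it. The function name, the pixel coordinates and the 7 x 7 grid are illustrative choices, not part of any particular product.

```python
def responsible_cell(box, image_width, image_height, grid_size=7):
    """Return the (row, col) of the grid square that contains the box centre.

    `box` is (x_min, y_min, x_max, y_max) in pixels. The grid size is an
    illustrative default; real systems choose their own.
    """
    x_min, y_min, x_max, y_max = box
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    # Scale the centre into grid units. The clamp keeps a centre that sits
    # exactly on the far edge of the image inside the last square.
    col = min(int(center_x / image_width * grid_size), grid_size - 1)
    row = min(int(center_y / image_height * grid_size), grid_size - 1)
    return row, col


# A hypothetical lorry spanning several squares of a 700 x 700 camera frame.
lorry = (100, 200, 420, 380)
print(responsible_cell(lorry, image_width=700, image_height=700))  # (2, 2)
```

Although the lorry's rectangle crosses several squares, only one square "owns" it, which is what lets the algorithm describe the object with a single rectangle.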

Finally, the software compares all the candidate rectangles and measures how much they overlap (a metric known as intersection over union). Rectangles that overlap heavily are treated as duplicates of the same object, and only the most confident one is kept. In this way, a complex array of shapes is reduced to a smaller set of rectangles that surround unique objects and continuously track their movement.
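Intersection over union is simple enough to show in a few lines. The sketch below computes the overlap between two rectangles and then uses it to discard duplicates, a clean-up step commonly called non-maximum suppression. The 0.5 threshold and the sample detections are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlapping region (zero if the boxes are apart).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Keep the most confident box from each group of heavily overlapping boxes.

    `detections` is a list of (box, confidence) pairs.
    """
    kept = []
    # Visit boxes from most to least confident.
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap too much with one already kept.
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Two rectangles around the same car, plus one around a distant pedestrian.
detections = [
    ((0, 0, 10, 10), 0.9),
    ((1, 1, 11, 11), 0.8),
    ((50, 50, 60, 60), 0.7),
]
print(len(non_max_suppression(detections)))  # 2
```

The second rectangle overlaps the first by roughly two thirds, so it is dropped as a duplicate, while the pedestrian's rectangle survives.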

This real-time demand is also the reason why self-driving technology is so expensive compared with other forms of computer vision. As well as providing massive amounts of memory and processing power to detect objects in real time, you must also build full redundancy into the vehicle. If one system fails, a second must be ready to pick up the wheel instantaneously.

This is why Tesla describes itself as a technology company first and a car manufacturer second. For example, it recently replaced hardware from Nvidia with chips of its own design that contain six billion transistors each. It’s also the reason that global carmakers are in a race to reposition themselves in the same way, with VW aiming to become a ‘software driven mobility company’ by 2030.

Sky’s the limit with satellite AI

Yolo also works well in other scenarios where you need fast, accurate object recognition. Satellite imagery is a good example. Here computer vision is used to sift through massive volumes of static images and video to identify objects at ground level.

In France, the authorities use this approach to detect undeclared home improvements, including swimming pools, to shore up tax revenues. Big finance is in the game too. Hedge funds use aerial imagery to track economic activity, such as the movements of harbour cranes and shipping containers, to inform their financial bets.

Similarly, wildlife conservation organisations use technology to track populations of endangered species. In 2021, a team of scientists from the University of Bath, the University of Oxford, and the University of Twente in the Netherlands took advantage of satellite cameras to track the movements and populations of African elephants.

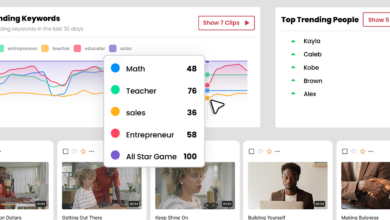

A further example of where Yolo delivers significant return on investment is sifting through content to spot product logos and prevent copyright theft. This makes perfect sense, especially to luxury brands whose revenues and reputation are threatened by fake goods distributed online.

From fast to flexible

All these examples have one thing in common: the systems involved are very fast and typically require a great deal of memory and processing power, but they are very rigid in their outputs. So the algorithms used by an autonomous vehicle will detect and classify cars, vans and lorries in a single step. But the software has little interest in the brand, age, or colour of the vehicle. Why would it, when the primary goal is to get the driver from A to B safely and do no harm to others?

But let’s say you’re a stock photography business that wants to promote its photo archive of 1960s American automobiles. You need to know the difference between a camper van, convertible and station wagon as well as the manufacturers in each category. A customer may also be searching for a vehicle with tailfins, convertible roof and a certain category of bumper.

In this case you are more likely to use a two-stage detection system, which separates the detection task from classification and offers a more nuanced analysis. Once the images are categorized, it becomes that much easier for customers to find and then pay for the image they require.
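The separation of the two stages can be sketched as follows. This is a structural illustration only: `propose_regions` and `classify_crop` are hypothetical stand-ins for whatever detector and classifier a business actually uses, and the image is represented as a plain grid of numbers.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Image = List[List[int]]          # rows of grey-level pixels, for illustration only


def crop(image: Image, box: Box) -> Image:
    """Cut the region described by `box` out of the image."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def two_stage_tags(
    image: Image,
    propose_regions: Callable[[Image], List[Box]],  # stage one: where are the objects?
    classify_crop: Callable[[Image], str],          # stage two: what exactly is each one?
) -> List[Tuple[Box, str]]:
    """Run detection first, then classify each detected region separately."""
    return [(box, classify_crop(crop(image, box))) for box in propose_regions(image)]


# Stand-in stages so the sketch runs; a real system would plug in trained models.
def fake_detector(image: Image) -> List[Box]:
    return [(0, 0, 2, 2)]


def fake_classifier(region: Image) -> str:
    return "station wagon" if sum(map(sum, region)) > 0 else "unknown"


photo = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
print(two_stage_tags(photo, fake_detector, fake_classifier))
```

Because the second stage is a separate component, the stock photography business could swap in a classifier that knows about tailfins and bumpers without touching the detector.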

Innovation at the edge

Two-stage detection has the added advantage that it often requires a lighter hardware footprint. Returning to our satellite imagery example, it’s very expensive to download images from orbit, especially when some 70% of such imagery is obscured by cloud cover and essentially useless.

But the latest generation of computer vision systems is compact and efficient enough to run on board the satellite itself. By filtering out low-value content, these systems dramatically reduce the data that needs to be downloaded, as well as the cost.
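The filtering logic can be illustrated with a deliberately crude sketch. Here a pixel counts as cloud if it is very bright, and an image is sent to the ground only if cloud covers no more than half of it. Both thresholds and both function names are assumptions for illustration; an operational system would use a trained cloud-detection model rather than a brightness rule.

```python
def cloud_fraction(image, brightness_threshold=200):
    """Estimate the share of pixels covered by cloud.

    `image` is a list of rows of grey levels (0-255). Treating bright pixels
    as cloud is a simplifying assumption, not how production systems work.
    """
    pixels = [pixel for row in image for pixel in row]
    if not pixels:
        return 0.0
    cloudy = sum(1 for pixel in pixels if pixel >= brightness_threshold)
    return cloudy / len(pixels)


def worth_downlinking(image, max_cloud_fraction=0.5, brightness_threshold=200):
    """Decide on board whether an image is clear enough to send to the ground."""
    return cloud_fraction(image, brightness_threshold) <= max_cloud_fraction


clear_scene = [[40, 60, 80], [50, 70, 230]]       # one bright pixel in six
overcast_scene = [[240, 250, 245], [235, 90, 255]]  # five bright pixels in six

print(worth_downlinking(clear_scene))     # True
print(worth_downlinking(overcast_scene))  # False
```

Only the first image would be transmitted, which is where the saving in bandwidth and cost comes from.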

This so-called ‘computer vision at the edge’ is now so flexible that it can be deployed on laptops and even smartphones and smart devices. It also means that you can decouple the software from a specific task. At one extreme you have Tesla with its own hardware and software designed with the unique goal of safe, autonomous driving. At the other, we are now seeing a new wave of computer vision startups launching less expensive software development kits (SDKs) that can be applied in a variety of settings.

This software comes with hundreds of classifications pre-installed, but organizations can also train the algorithms to classify new objects with a relatively small amount of data, sometimes as few as 20 images. Critically, these systems also take advantage of ‘no-code’ interfaces so that business users, rather than software engineers, can undertake the training.

Artificial intelligence meets augmented reality

Right now, computer vision SDKs are most commonly used by media companies and stock photography agencies, but as costs continue to fall, the number of use cases will increase. This democratisation of computer vision will drive the technology further into our everyday lives, accelerated by the arrival of the metaverse, where computer vision plays a central role.

Computer vision is also a critical component of augmented reality. As the software footprint continues to shrink, we will see rapid growth in the number of wearable devices. These include lightweight smart glasses, which overlay the field of vision with layers of information or digital objects in shared hybrid workspaces.

Controversial? Possibly. Exciting? Definitely. In fact, it’s difficult to name any other technology that will have a greater impact on our lives in the coming decade. From Yolo and self-driving cars to SDKs running on smartphones and smart glasses, computer vision is here to stay.