When an autonomous AI agent takes on a task, it interprets the goal, figures out a plan, and executes it across whatever systems are necessary. One prompt could lead it to, say, pull data from Snowflake, update a Notion document, send a Slack alert, and spin up an AWS Lambda function for extra processing. None of those steps were pre-determined. The agent decided in the moment how to get the job done.

That kind of autonomy is exactly what makes agentic AI valuable – and what makes it disruptive for security. Most of our current safeguards are built on the assumption that software workloads behave in predictable ways, with their access needs defined ahead of time. When the sequence of actions can change on the fly, those assumptions collapse.

At the heart of this collapse is the way we authenticate software to the systems it needs. For decades, the default has been secrets – API keys, passwords, and other long-lived credentials – provisioned in advance and stored for reuse. Whether in a configuration file, an environment variable, or a dedicated vault, these static credentials have been the backbone of machine-to-machine trust.

Agentic AI is going to make the whole model of pre-provisioned, static secrets fundamentally obsolete. Just as passwordless authentication is replacing passwords for human users, identity-first, real-time access will replace the vault-centric approach for autonomous workloads. For enterprises, that means rethinking how every non-human connection in their environment is secured – and doing it before AI-driven processes make their existing models unmanageable.

Secrets managers like HashiCorp Vault or CyberArk Conjur were designed for a different time, when workloads were predictable, credentials were created and rotated at human speed, and there was always a person managing access. In that world, secrets could be provisioned once, stored securely, and injected into workloads when needed. The assumption was that tomorrow would look much like today, and the software you trusted this morning would behave the same way in the afternoon.

Over the past decade, that certainty has already been eroding. The rise of cloud-native infrastructure, ephemeral compute, and automated deployments has been shifting organizations toward short-lived credentials, workload identity federation, and real-time policy enforcement. If that shift was the first crack in the armor of long-lived secrets, agentic AI may be the blow that breaks it.

These agents can pivot mid-execution, reach into systems you didn’t anticipate, and assemble workflows on the fly. You can’t preload every credential an agent might need, because you don’t know what it will do until it does it. And you can’t give it sweeping, long-lived access “just in case” without creating a pool of over-permitted credentials waiting to be abused.

The deeper issue is attribution. In human workflows, every action can be tied to a single account, making it clear who did what. In an agentic workflow, identity is fragmented. Did the database change come from the human who started the process? From the orchestrator managing execution? From the reasoning engine deciding the next step? Or from the connector that called the API? Without distinct, verifiable identities for each component, audit trails blur and fine-grained permissions become guesswork.

Replacing static secrets means rebuilding access around identity from the ground up. Each part of an agent needs its own cryptographically attested identity, ideally asserted by the infrastructure it runs on. An AWS Lambda function should be able to prove its identity through its IAM execution role; a Kubernetes pod should be able to do the same through its service account token.

With that identity in place, access decisions can be made in real time, based not just on who is asking but also on context: where the agent is running, when the request is made, what the security posture of its environment is, and whether its behavior matches expectations. Credentials are issued only when needed, scoped to a single purpose, and set to expire within seconds or minutes. Every step – from the initial user instruction to the agent’s reasoning to the API calls it makes – is logged in detail.
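A minimal sketch of that flow, under stated assumptions: the `POLICY` table, the field names on `AccessRequest`, and the 60-second default lifetime are all hypothetical and chosen only for illustration. It shows one way a request could be checked against identity and runtime context, logged, and answered with a credential scoped to a single purpose and a short expiry.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AccessRequest:
    workload_id: str   # attested identity of the calling component
    resource: str      # what it wants to reach, e.g. "snowflake:sales_db"
    action: str        # e.g. "read"
    environment: str   # where the agent is running
    posture_ok: bool   # outcome of a (hypothetical) environment posture check


@dataclass(frozen=True)
class Credential:
    token: str
    scope: str
    expires_at: float  # Unix timestamp


# Hypothetical policy: (workload, resource, action) -> environments allowed
POLICY = {
    ("agent-connector", "snowflake:sales_db", "read"): {"prod-us-east"},
}

# In-memory stand-in for an audit trail; every decision is recorded
AUDIT_LOG = []


def issue_credential(req: AccessRequest, ttl_seconds: int = 60,
                     now: Optional[float] = None) -> Optional[Credential]:
    """Evaluate identity plus context, log the decision, and issue a
    single-purpose credential that expires after ttl_seconds."""
    now = time.time() if now is None else now
    allowed_envs = POLICY.get((req.workload_id, req.resource, req.action), set())
    granted = req.posture_ok and req.environment in allowed_envs
    AUDIT_LOG.append({
        "time": now,
        "workload_id": req.workload_id,
        "resource": req.resource,
        "action": req.action,
        "environment": req.environment,
        "granted": granted,
    })
    if not granted:
        return None
    return Credential(
        token=secrets.token_urlsafe(32),
        scope=f"{req.resource}:{req.action}",
        expires_at=now + ttl_seconds,
    )


def is_valid(cred: Credential, now: Optional[float] = None) -> bool:
    """A credential is usable only before its expiry time."""
    now = time.time() if now is None else now
    return now < cred.expires_at
```

In a real deployment the policy would live in a dedicated authorization service and the token would be signed rather than random, but the shape is the same: no credential exists until a request passes the checks, and it stops working shortly afterward.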

Some of this is starting to be codified in open standards. The Model Context Protocol (MCP) provides a structured way for agents to discover and interact with tools. Its authorization spec uses OAuth 2.1 with PKCE to protect token exchanges from interception. PKCE ensures an intercepted authorization code can’t be redeemed without the matching code verifier, a secret held only by the client that started the flow, but it doesn’t answer the “who is this client?” question. That’s where infrastructure-asserted identity closes the loop, allowing the authorization server to authenticate the workload itself before issuing a token.
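To make the PKCE step concrete, here is a small sketch of the S256 method defined in RFC 7636, using only the Python standard library. The function names are illustrative and not taken from any MCP SDK. The client keeps the verifier secret and sends only its hash (the challenge) with the authorization request; the server later recomputes the hash from the verifier presented at the token endpoint.

```python
import base64
import hashlib
import hmac
import secrets


def _b64url(data: bytes) -> str:
    # Base64url without padding, as RFC 7636 requires
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_code_verifier() -> str:
    # 32 random bytes encode to a 43-character string
    # (RFC 7636 allows verifiers of 43 to 128 characters)
    return _b64url(secrets.token_bytes(32))


def make_code_challenge(verifier: str) -> str:
    # S256: challenge = BASE64URL(SHA256(ASCII(verifier)))
    return _b64url(hashlib.sha256(verifier.encode("ascii")).digest())


def verify_pkce(stored_challenge: str, presented_verifier: str) -> bool:
    # Server side: recompute the challenge and compare in constant time
    return hmac.compare_digest(stored_challenge,
                               make_code_challenge(presented_verifier))
```

Nothing in this exchange identifies the client: any party that generates its own verifier and challenge passes the check. That is the gap the paragraph above describes.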

This shift is already visible in practice. In multi-cloud orchestration, an agent might deploy resources in AWS, adjust configurations in Azure, and run analytics in GCP – authenticating dynamically in each environment through federated workload identities instead of pulling long-lived credentials from a secrets manager. Hybrid-identity agents are also emerging: a scheduling assistant might use delegated OAuth access to manage a user’s calendar, then switch to its own non-human identity to update internal systems, with an identity broker deciding which to use at each step. In AI-assisted CI/CD, pipelines can generate new stages on the fly, with credentials created only for those stages and destroyed immediately afterward.
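The hybrid-identity case can be sketched as a small routing decision. Everything here is hypothetical: the `USER_OWNED_TARGETS` set, the `Step` fields, and the rule itself are assumptions made for illustration, not a description of any particular broker product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class IdentityKind(Enum):
    DELEGATED_USER = "delegated_user"  # act with the user's delegated OAuth grant
    WORKLOAD = "workload"              # act as the agent's own non-human identity


@dataclass(frozen=True)
class Step:
    target: str                  # system the agent is about to call
    on_behalf_of: Optional[str]  # user who delegated access, if any


# Hypothetical set of resources that belong to the user rather than the organization
USER_OWNED_TARGETS = {"calendar", "email"}


def choose_identity(step: Step) -> IdentityKind:
    """Pick which identity the agent should present for this step."""
    if step.target in USER_OWNED_TARGETS:
        if step.on_behalf_of is None:
            # No delegation on record: refuse instead of falling back
            # to the agent's own, possibly broader, identity
            raise PermissionError(f"no user delegation for {step.target}")
        return IdentityKind.DELEGATED_USER
    return IdentityKind.WORKLOAD
```

For the scheduling assistant, `choose_identity(Step("calendar", "alice"))` selects the delegated user identity, while `choose_identity(Step("internal-ticketing", "alice"))` selects the workload identity. Keeping the decision in one place also gives the audit trail a single point at which to record which identity was used and why.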

Static secrets will still exist in certain corners – for example, legacy systems, manual processes, and integrations that can’t yet support real-time identity. But their role will diminish from being the default to being the fallback. The primary mechanism will be workload authentication backed by infrastructure, conditional access based on runtime context, and ephemeral credentials that expire before they can be reused.

The pace of agentic AI adoption makes this shift urgent. These systems are already stress-testing security models designed for slower, more predictable environments. Organizations that move now to verifiable workload identity, context-aware authorization, and short-lived access will be ready to scale agents safely.

Those that wait will be forced to retrofit identity discipline into systems already shaped by autonomous decision-makers – a far more costly and challenging task.

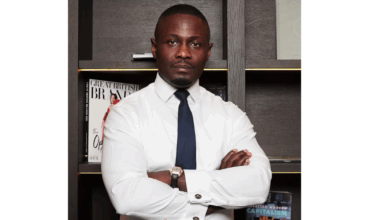

Kevin Sapp is the co-founder and CTO of Aembit, the Workload IAM company. He’s an entrepreneur and technology executive with extensive experience in developing and commercializing new products to serve large, high-growth markets. With a strong background in startups (including New Edge Labs, which he co-founded and which was acquired by Netskope in 2019), his focus is on enterprise security, cloud, and mobile computing. He also holds several patents in information systems security.