74.3 million AI prompts reveal something unexpected: we’re not just using AI to create. We’re using it to talk to other AI.

Here’s something we didn’t expect to find.

Paligo.net analyzed 74.3 million AI prompt uses from AIPRM, one of the largest repositories where people share ready-made ChatGPT templates. We categorized them into 56 work tasks, looking for patterns in what people actually automate.

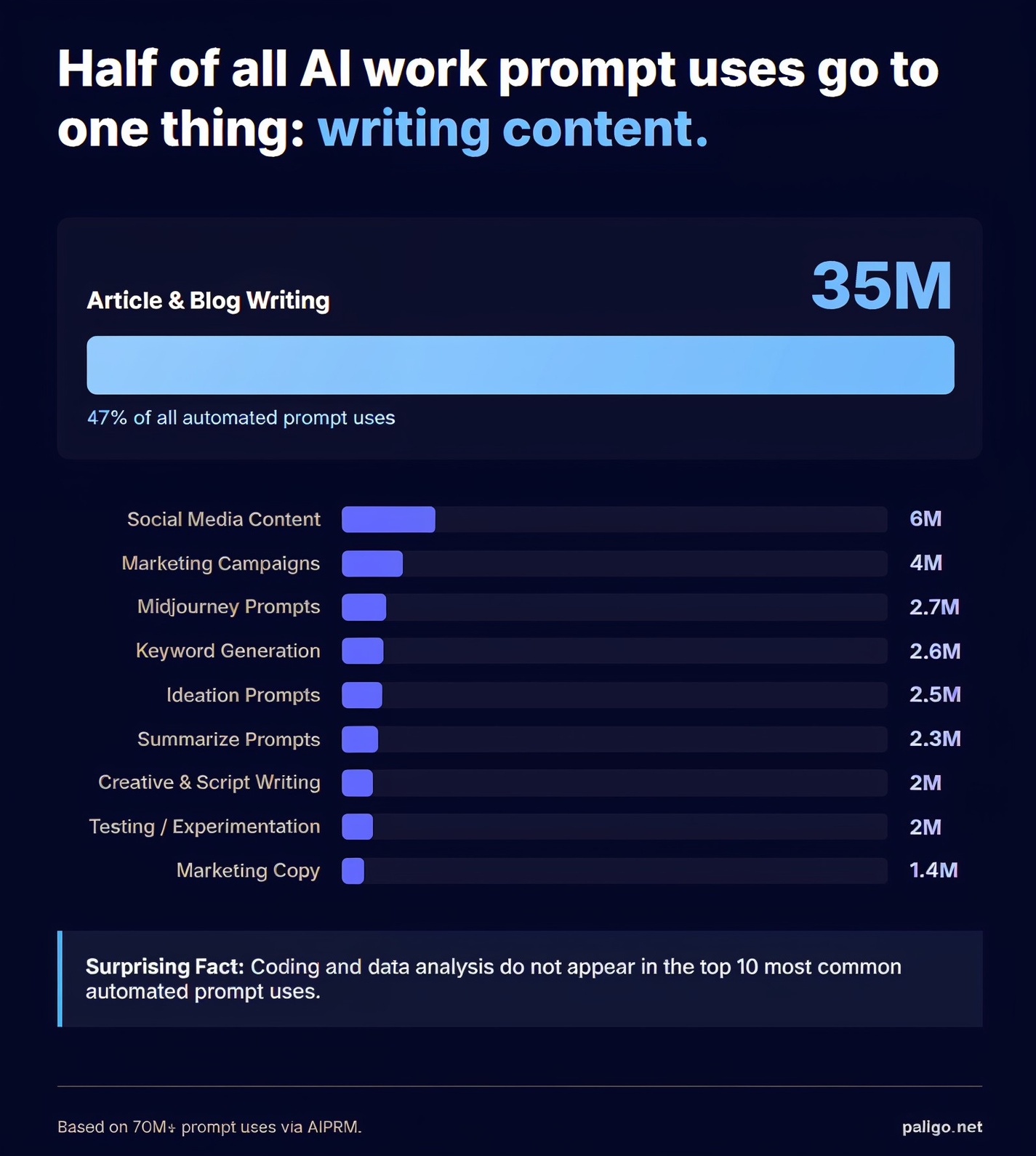

The obvious finding: article writing dominates everything. 35.3 million uses—47% of all activity. Five times more than the second-place task.

But the interesting finding was something else entirely.

The rise of the AI middleman

2.7 million prompt uses were designed to do one thing: generate better prompts for Midjourney.

Not to create images. To create the instructions that create images.

Workers are using one AI to talk to another AI, because language models describe visual style, detail, and nuance better than most humans can. We’ve become translators between machines.

Take DishPrompt, a template that generates food photography prompts from recipe descriptions. You feed it “spaghetti carbonara” and it outputs the lighting direction, depth of field, plating style, and mood that Midjourney needs to produce something usable. It’s been used 4,700 times.

Similar patterns appear across image generation: Stable Diffusion prompt helpers (439,545 uses), Leonardo AI templates (372,762 uses). Current estimates put AI-generated images at over 17 billion since 2022, with 34 million more created daily.

The production pipeline has gained a new layer: humans in the middle, orchestrating conversations between systems.

This isn’t just happening with images

Look at the marketing data.

Social media prompts: 6 million uses. Broader marketing tasks: 4 million. That’s 10 million automations—roughly 13% of all activity in our dataset.

But here’s what caught our attention: link building and outreach (372,350 uses), audience segmentation (163,215 uses), and media channel selection (69,612 uses).

These aren’t mechanical tasks.

Audience segmentation requires understanding customer psychology—who responds to what message, and why. Media channel selection demands market knowledge—where your audience actually is versus where you assume they are. Link-building involves relationship judgment—which connections are worth pursuing and how to approach them.

These are the tasks that used to define marketing expertise. The judgment calls. The nuance.

Across hundreds of thousands of prompt uses, workers are now outsourcing them to AI.

What happens when judgment becomes automated?

There’s an optimistic version of this story. AI handles repetitive analysis; humans make the final calls; everyone wins.

But that’s not what our data shows.

Of the 35 million writing automations we analyzed, prompts for fact-checking and governance appeared only rarely. Content production has massively outpaced content verification. The human-in-the-loop is increasingly optional.

“We expected writing to be a top priority for automation, but the data is staggering—it’s not just leading, it’s dominating. Content creation has fundamentally shifted from a manual task to a repeatable workflow.”

— Rahul Yadav, CEO, Paligo

A Terzo and Visual Capitalist survey of 1,700 businesses found “inaccuracy” to be the top AI risk identified—above job displacement, above cybersecurity. Companies are already rehiring positions they previously cut to fix errors introduced by AI-generated content.

We’re not just automating work. We’re automating the parts of work that made it distinctly human—taste, nuance, strategic thinking. And we’re doing it by default, not by design.

When everything sounds the same

Here’s the pattern we’re seeing emerge.

When everyone uses the same tools to generate the same kinds of outputs, you get convergence. The marketing strategy that AI suggests for a SaaS startup starts to look remarkably like the strategy it suggests for a law firm. The audience segments flatten into familiar archetypes. The “creative” becomes predictable.

Merriam-Webster chose “slop” as its 2025 word of the year. Our data shows exactly where it’s coming from—and it’s not just the 35 million articles. It’s the judgment calls behind them.

A Graphite study from October 2025 estimates that 50% of new online content is now AI-generated. This happened in roughly two years.

Google’s algorithm updates have hit AI-heavy sites hard. Search visibility increasingly rewards demonstrated expertise and accuracy—signals that prompt-generated content struggles to provide. The market is already correcting for the flattening.

But the factory keeps running.

What comes next

The patterns in our data point toward a specific future.

Agentic AI—systems that can plan, execute multi-step tasks, and operate autonomously—represents the next phase. These won’t just assist workflows. They’ll run them. The current wave is about speed: generate more, faster. The next wave will be about delegation: hand off entire processes.

That makes the question of judgment more urgent, not less.

If we’re already outsourcing audience segmentation and media strategy to AI, what happens when the AI can execute on its own recommendations? When the human translator between machines becomes optional?

The real question

The question isn’t whether to use AI. That ship has sailed—74.3 million times over, just in our dataset.

The question is whether you’re building systems that preserve human judgment where it matters—or just hoping the prompts work out.

Right now, most organizations are choosing the latter. Not deliberately. Just by default, one automation at a time.

The tools that will matter in the next phase aren’t the ones that generate faster. They’re the ones that help you decide what should be generated at all—and maintain the structure to know whether it worked.

Somewhere right now, someone is using ChatGPT to write a prompt for Midjourney to generate an image for an article that was also written by ChatGPT.

The machines are talking to each other. The question is whether we still have something to say.

Methodology

This analysis is based on 74.3 million prompt uses from AIPRM, manually categorized into 56 work task categories. The full study, including detailed methodology and category definitions, is available at Paligo.
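
As a rough sanity check on the shares quoted in this article, the arithmetic can be sketched in a few lines of Python. The figures are the rounded numbers from the text above; the dictionary labels and the `share_of_total` helper are illustrative shorthand, not the study's official category names or tooling.

```python
# Rounded prompt-use figures as quoted in the article.
# Labels are illustrative shorthand, not official category names.
TOTAL_USES = 74_300_000

quoted_uses = {
    "article writing": 35_300_000,
    "social media": 6_000_000,
    "broader marketing": 4_000_000,
    "Midjourney prompt generation": 2_700_000,
}


def share_of_total(uses: int, total: int = TOTAL_USES) -> float:
    """Return `uses` as a percentage of `total`."""
    return 100 * uses / total


# Print each quoted category with its share, largest first.
for label, uses in sorted(quoted_uses.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{label:30s} {uses:>12,d} {share_of_total(uses):5.1f}%")

# Social media plus broader marketing, the combined figure cited in the text.
marketing_combined = quoted_uses["social media"] + quoted_uses["broader marketing"]
print(f"{'marketing (combined)':30s} {marketing_combined:>12,d} "
      f"{share_of_total(marketing_combined):5.1f}%")
```

With these rounded inputs, article writing comes out at about 47.5% of all uses and the two marketing categories at about 13.5% combined, in line with the 47% and roughly 13% cited above.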