Artificial intelligence is being adopted rapidly across regulated industries. From quality monitoring and deviation trending to risk scoring and decision support, AI systems are increasingly influencing outcomes that truly matter: product quality, patient safety, and regulatory compliance.

Organizations are investing heavily in model development, validation activities, and performance metrics. Yet alongside this progress, a quieter issue often goes unaddressed.

There is a growing compliance gap in how AI systems are governed once they move beyond experimentation and into real operational use.

This gap is not about whether AI can work.

It is about whether organizations can demonstrate sustained control over systems that learn, adapt, and evolve over time.

Why AI exposes weaknesses in traditional compliance models

Most compliance frameworks in regulated industries were built around deterministic software. These systems behave predictably: the same input produces the same output, and changes are introduced deliberately through controlled releases.

AI systems do not behave this way.

Machine learning models can shift subtly as data patterns change, operational contexts evolve, or models are retrained. Even when the underlying code remains unchanged, outputs may drift in ways that are difficult to detect using traditional validation and change control mechanisms.

As a result, organizations often apply familiar software validation practices to AI systems, only to realize later that those practices were never designed to manage adaptive behavior.

The compliance gap emerges not because governance is ignored, but because existing controls were built for a different class of system.

The overlooked middle: what happens after AI is “approved”

In many organizations, AI governance focuses heavily on two points in time:

- Before deployment – model development, testing, and initial validation

- After failure – investigation, remediation, and corrective action

What is frequently missing is sustained attention to the period in between.

Once an AI system is approved and placed into operation, it may run for months or even years. During that time, subtle but meaningful changes can accumulate:

- Input data distributions shift

- Operational use expands beyond the original intent

- Human reliance on AI recommendations increases

- Model retraining occurs with limited downstream visibility

Individually, these changes may not trigger formal revalidation. Collectively, however, they can significantly alter how the system behaves and how much risk it introduces.

This is the compliance gap: AI systems continue to influence regulated decisions without continuous evidence that they remain fit for purpose.
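Of the changes listed above, a shift in input data distributions is one of the easier ones to make observable. As a minimal sketch, assuming a single numeric input feature and a stored sample from validation time, a Population Stability Index (PSI) can compare that baseline against recent production data. The function name, the bin count, and the 0.25 alert level are illustrative assumptions (0.25 is a commonly quoted rule of thumb, not a regulatory limit), and a real deployment would choose them per feature and per risk class.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; 0.0 means identical binned shares."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: values seen at validation time vs. shifted production values.
validation_sample = [float(v) for v in range(100)]
production_sample = [v + 50.0 for v in validation_sample]

ALERT_LEVEL = 0.25  # assumed rule-of-thumb threshold, to be set per use case
drift_detected = population_stability_index(validation_sample, production_sample) > ALERT_LEVEL
```

A check like this does not decide whether revalidation is needed. It only produces dated evidence that someone, or something, was looking.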

Why documentation alone cannot close the gap

A common response to AI governance challenges is to increase documentation—model descriptions, validation reports, risk assessments, and standard operating procedures.

Documentation is necessary, but it is not sufficient.

Static records cannot capture how an AI system behaves in real operational conditions. They cannot reveal performance drift as it occurs, nor can they show whether human oversight is functioning as intended on a day-to-day basis.

In regulated environments, trust must be grounded in observable control, not just documented intent.

Without mechanisms to continuously monitor, assess, and respond to AI behavior, compliance becomes theoretical rather than demonstrable.

The role of risk in meaningful AI governance

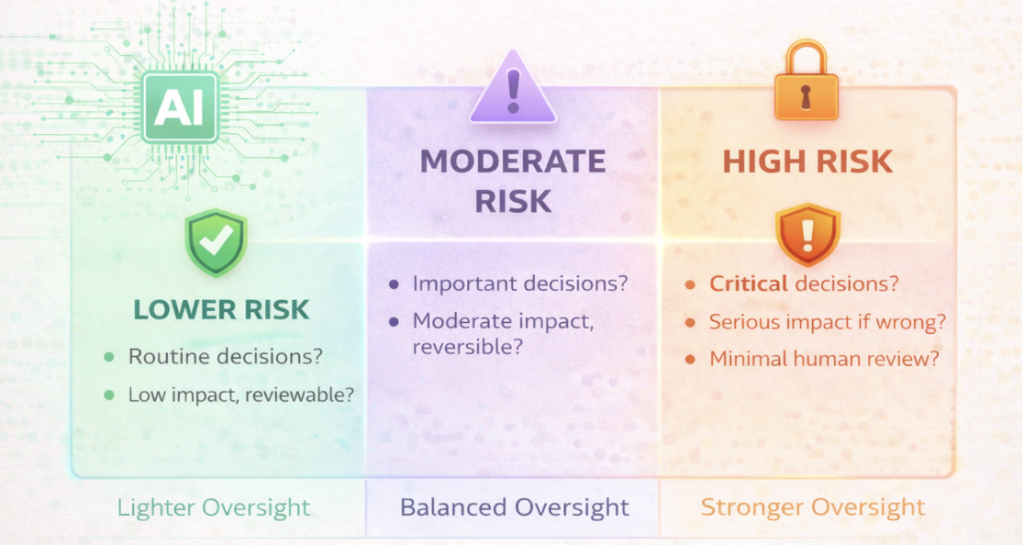

Not every AI system carries the same level of risk, and not every output deserves the same level of scrutiny.

Effective AI governance begins with risk-based classification, including questions such as:

- What decisions does the AI influence?

- What is the potential impact of an incorrect or biased output?

- How reversible are those decisions?

- How much human judgment remains in the loop?

High-risk AI systems require stronger safeguards: tighter oversight, clearer accountability, and more frequent monitoring. Lower-risk systems can often be managed with lighter, more automated controls.

A common mistake is applying a uniform governance model across all AI use cases. This either overwhelms teams with unnecessary controls or leaves critical risks insufficiently managed.
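To make the idea of risk-based classification concrete, the four questions above can be turned into a simple scoring sketch. Everything here is an assumption for illustration: the 1-to-3 scales, the field names, the cut-off values, and the rule that a severe error combined with full autonomy is always high risk. An actual scheme would be defined and justified by the organization's quality and risk functions.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class UseCase:
    """Hypothetical 1-3 scores, one per classification question."""
    name: str
    decision_impact: int   # 1 = informational, 3 = drives a regulated decision
    error_severity: int    # 1 = minor, 3 = could affect patient safety or product quality
    irreversibility: int   # 1 = easily undone, 3 = cannot be undone
    autonomy: int          # 1 = human decides, 3 = AI acts without review


def classify(use_case: UseCase) -> Tier:
    scores = (
        use_case.decision_impact,
        use_case.error_severity,
        use_case.irreversibility,
        use_case.autonomy,
    )
    if any(s not in (1, 2, 3) for s in scores):
        raise ValueError("each score must be 1, 2, or 3")
    total = sum(scores)
    # Assumed override rule: severe consequences with no human review is always high risk.
    if total >= 10 or (use_case.error_severity == 3 and use_case.autonomy == 3):
        return Tier.HIGH
    if total >= 7:
        return Tier.MEDIUM
    return Tier.LOW
```

The value of a scheme like this lies less in the arithmetic than in forcing each use case to be scored explicitly, so that the level of control applied to it can be explained later.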

Human oversight is not optional – it is structural

One of the most misunderstood aspects of AI governance is human oversight.

Human oversight does not mean occasionally reviewing AI outputs. It means designing systems with explicit accountability pathways, including:

- Who is responsible for approving AI-influenced decisions?

- When should AI recommendations be challenged or overridden?

- How are deviations from expected behavior escalated?

- What evidence shows that oversight is actually being exercised?

Without clear answers to these questions, “human-in-the-loop” becomes a slogan rather than a control.

In regulated environments, accountability must be explicit, auditable, and sustained over time.
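The last question, what evidence shows that oversight is actually being exercised, lends itself to a small sketch. Assuming each AI-influenced decision is logged with the recommendation, the reviewer, and the final outcome, a periodic summary can show how many decisions went unreviewed and how often reviewers disagreed with the AI. The record fields and the function name are hypothetical, not taken from any particular system.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class DecisionRecord:
    """One AI-influenced decision; reviewer/final_decision are None if never reviewed."""
    decision_id: str
    ai_recommendation: str
    reviewer: Optional[str]
    final_decision: Optional[str]


def oversight_summary(records: Sequence[DecisionRecord]) -> dict:
    reviewed = [r for r in records if r.reviewer and r.final_decision is not None]
    overridden = [r for r in reviewed if r.final_decision != r.ai_recommendation]
    return {
        "total": len(records),
        "unreviewed": len(records) - len(reviewed),
        # Share of reviewed decisions where the human departed from the AI.
        "override_rate": len(overridden) / len(reviewed) if reviewed else 0.0,
    }


# Hypothetical log entries for one review period.
log = [
    DecisionRecord("D-001", "accept", "qa.lead", "accept"),
    DecisionRecord("D-002", "accept", "qa.lead", "reject"),
    DecisionRecord("D-003", "reject", None, None),
    DecisionRecord("D-004", "accept", "qa.analyst", "accept"),
]
summary = oversight_summary(log)
```

Neither number is a verdict on its own. A non-zero unreviewed count shows where the accountability pathway was skipped, and an override rate that stays at exactly zero for a long period may be worth a closer look, since it can mean either that the model is reliable or that review has become a formality.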

Closing the compliance gap requires a lifecycle mindset

The compliance gap in AI systems cannot be closed through one-time validation or post-incident reviews. It requires a fundamental shift in how organizations think about control.

AI governance must be treated as a lifecycle discipline, not a deployment milestone.

This includes:

- Ongoing monitoring of model performance and data quality

- Clear thresholds for triggering review or intervention

- Structured management of retraining and model updates

- Periodic reassessment of intended use and risk classification

- Continuous verification that controls remain effective

When these practices are embedded into daily operations, compliance becomes something that is continuously demonstrated—not something reconstructed during inspections.
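As one illustration of "clear thresholds for triggering review or intervention", the sketch below maps monitored metrics to an action. The metric names, the two-level scheme (review, then intervene), and every numeric limit are assumptions for the example. In practice they would come from the validated performance of the specific model and its risk classification.

```python
from dataclasses import dataclass
from typing import Mapping, Sequence


@dataclass(frozen=True)
class Threshold:
    metric: str
    review_at: float      # crossing this triggers a documented review
    intervene_at: float   # crossing this triggers intervention (e.g. suspend use)
    higher_is_worse: bool = True


def evaluate(metrics: Mapping[str, float], thresholds: Sequence[Threshold]) -> dict:
    """Return an action per configured metric: ok, review, intervene, or missing."""
    results = {}
    for t in thresholds:
        if t.metric not in metrics:
            # A metric that stopped being reported is itself a control failure.
            results[t.metric] = "missing"
            continue
        value, review_at, intervene_at = metrics[t.metric], t.review_at, t.intervene_at
        if not t.higher_is_worse:
            # Flip signs so that "larger means worse" holds for every metric.
            value, review_at, intervene_at = -value, -review_at, -intervene_at
        if value >= intervene_at:
            results[t.metric] = "intervene"
        elif value >= review_at:
            results[t.metric] = "review"
        else:
            results[t.metric] = "ok"
    return results


# Hypothetical configuration and one monitoring snapshot.
thresholds = [
    Threshold("input_drift_psi", review_at=0.10, intervene_at=0.25),
    Threshold("accuracy", review_at=0.90, intervene_at=0.85, higher_is_worse=False),
    Threshold("missing_value_rate", review_at=0.02, intervene_at=0.05),
]
snapshot = {"input_drift_psi": 0.12, "accuracy": 0.84}
actions = evaluate(snapshot, thresholds)
```

Treating an unreported metric as its own outcome is a deliberate choice in this sketch: it is one small way to verify that the controls themselves remain effective, rather than assuming silence means everything is fine.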

Why this matters now

Regulators may not always use the term “AI assurance,” but expectations are clearly moving in that direction. Authorities increasingly look for evidence that organizations understand their systems, manage risk proactively, and maintain control throughout the system lifecycle.

Organizations that cannot explain how their AI systems remain trustworthy over time may struggle, not because AI is prohibited, but because its governance is insufficient.

The compliance gap is still manageable. But it is widening as AI adoption accelerates.

Final thought

AI does not introduce risk because it is intelligent.

It introduces risk because it changes how decisions are made and how accountability is distributed.

Closing the AI compliance gap requires more than better models or thicker documentation. It requires governance frameworks that recognize AI as a living system, one that must be continuously understood, monitored, and controlled.

In regulated industries, trust in AI is not something you approve once.

It is something you earn every day.