The promise and the potholes

Enterprises everywhere are experimenting with “AI agents” that can plan, decide, and act across marketing, sales, support, finance, and operations. The demos sparkle. Reality is messier. Projects slow down once agents must make real decisions with real stakes. Teams discover that decision rights are fuzzy, data paths are either overbuilt or underbaked, and the measurements executives care about aren’t wired up.

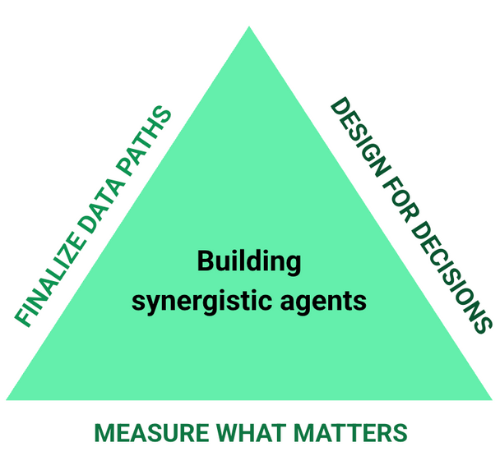

This article proposes a practical operating model leaders can put to work within a quarter. The flow is simple and sequenced for speed: design for decisions, not demos; finalize data paths; measure what matters.

Design for decisions

The problem. Most agents are showcased as if they were magic. In production, magic fades. People are unsure what the agent is allowed to do, when a human must step in, and how to explain an action to affected stakeholders. Without clarity, adoption stalls and risk creeps in.

How this helps. Treat the agent as a collaborator. Its job is to propose, explain, and act within well‑defined bounds. Humans retain control and accountability. The rhythm is straightforward: the agent summarizes context and recommends an action; it surfaces the signals, assumptions, and confidence behind that recommendation; then it either executes small, reversible steps automatically or routes material decisions to an approver. Everything is logged so that anyone can reconstruct “who did what, when, and why.”

Governance, elevated. Governance is not red tape; it’s how leaders buy down risk and buy into scale. The business case is clear. First, governance contains downside: a bad automated decision can cost money, harm a brand, or trigger a compliance incident. Second, it builds trust: when rationales are readable and logs are complete, skeptical executives become sponsors. Third, it accelerates learning: structured human overrides create the cleanest training data you will ever get. And fourth, it keeps you audit‑ready: finance, legal, and security can trace actions in minutes, not weeks.

Putting it into practice. Start by defining decision classes. Low‑risk, reversible actions, such as sending a reminder or raising a non‑critical priority, can be auto‑executed. Material actions that carry financial or brand impact, such as budget shifts or pricing tweaks, require approval. High‑risk or irreversible actions, such as changes to contract terms or compliance‑sensitive communications, never auto‑execute. Pair those classes with two reliability levers: an override discipline that tracks how often humans intervene and why, and auditability by default, where inputs, prompts, model versions, and outputs are versioned and recoverable. Finally, insist on rationale surfaces. If an action cannot be explained in plain English with expected impact and confidence, it is not ready for production.
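The decision classes above can be sketched in a few lines of Python. This is an illustrative sketch, not a reference implementation: the action names, the `Proposal` fields, the outcome labels, and the rule that unknown actions fall into the most restrictive class are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class DecisionClass(Enum):
    AUTO_EXECUTE = "auto_execute"            # low-risk, reversible
    REQUIRES_APPROVAL = "requires_approval"  # material financial or brand impact
    NEVER_AUTO = "never_auto"                # high-risk or irreversible


# Hypothetical mapping from action name to decision class.
ACTION_CLASSES = {
    "send_reminder": DecisionClass.AUTO_EXECUTE,
    "raise_noncritical_priority": DecisionClass.AUTO_EXECUTE,
    "shift_budget": DecisionClass.REQUIRES_APPROVAL,
    "adjust_pricing": DecisionClass.REQUIRES_APPROVAL,
    "change_contract_terms": DecisionClass.NEVER_AUTO,
    "send_compliance_communication": DecisionClass.NEVER_AUTO,
}


@dataclass
class Proposal:
    action: str
    rationale: str        # plain-English explanation
    expected_impact: str
    confidence: float     # 0.0 to 1.0
    model_version: str


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, proposal, outcome):
        # Capture enough to reconstruct what was done, when, and why.
        self.entries.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "action": proposal.action,
            "rationale": proposal.rationale,
            "expected_impact": proposal.expected_impact,
            "confidence": proposal.confidence,
            "model_version": proposal.model_version,
            "outcome": outcome,
        })


def route(proposal, log):
    """Decide how a proposal is handled and log the decision."""
    # Assumption: actions missing from the mapping get the most restrictive class.
    decision_class = ACTION_CLASSES.get(proposal.action, DecisionClass.NEVER_AUTO)
    if not proposal.rationale.strip():
        outcome = "rejected_no_rationale"  # unexplained actions are not production-ready
    elif decision_class is DecisionClass.AUTO_EXECUTE:
        outcome = "executed"
    elif decision_class is DecisionClass.REQUIRES_APPROVAL:
        outcome = "pending_approval"
    else:
        outcome = "human_only"
    log.record(proposal, outcome)
    return outcome
```

Keeping the mapping in one place makes it easy to review with risk owners, and promoting an action from approval to auto‑execution becomes a one‑line, auditable change.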

Finalize data paths (lightest viable, purpose‑built)

The problem. Agents starve or bloat. Some teams under‑spec data access, and the agent cannot make good decisions or be measured. Others over‑engineer pipelines that are expensive to build and slow to change. In both cases, time‑to‑value suffers. The task is to identify the right set of datasets for the agent to access: no more and no less than the decision requires.

How this helps. Giving the agent the minimum viable, policy‑compliant information it needs to decide lets you prove value quickly, safely, and cheaply. Work backward from the decision you want the agent to make, then capture only the signals that materially change that decision.

A simple checklist keeps teams honest. Begin with purpose: articulate the decision and map each data element to it. Lock down access with least‑privilege roles. Tighten scope by minimizing to the columns and rows that truly matter. Set timeliness by asking what latency the use case really needs. Real time is rare; hourly or daily often suffices. Manage exposure with masking, hashing, tokenization, and comprehensive read logs. And steward the lifecycle with retention, deletion, and versioning for datasets, prompts, and policies.
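As a rough illustration of the scope and exposure items in that checklist, the sketch below trims a record to an allow‑list of fields, replaces a sensitive field with a keyed hash, and appends each field it hands over to a read log. The field names, the `minimize` helper, and the choice of HMAC‑SHA256 are assumptions for the example; a keyed hash is pseudonymization, not anonymization, and a real deployment would follow its own masking policy.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields that materially change the decision.
ALLOWED_FIELDS = {"account_id", "email", "days_overdue", "plan_tier"}
# Hypothetical set of fields the agent may match on but should not read in clear.
HASHED_FIELDS = {"email"}


def minimize(record, key, read_log):
    """Return a scoped, masked view of `record` and log each field exposed."""
    view = {}
    # Fields outside the allow-list are dropped silently.
    for name in sorted(record.keys() & ALLOWED_FIELDS):
        value = record[name]
        if name in HASHED_FIELDS:
            # Keyed hash: stable for joins, unreadable without the key.
            value = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
        view[name] = value
        read_log.append(name)
    return view


read_log = []
raw = {"account_id": "A-1", "email": "pat@example.com", "days_overdue": 7, "notes": "call back"}
scoped = minimize(raw, b"demo-key", read_log)
# `scoped` has no "notes" key, and "email" is a 64-character hex digest.
```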

With those principles, climb a restrained “data path ladder,” selecting the lowest rung that works. Prefer derived features from tables you already govern. If you must broaden access, expose read‑only, scoped warehouse views. Reach for event streams or webhooks only when latency is material to the decision. Where teams benefit from clear contracts, use a feature service or aggregation API that returns pre‑computed scores and segments. Pull in partner feeds only if external data is essential. Throughout, maintain a one‑page Data Bill of Materials that lists datasets, fields, owners, lawful basis, latency targets, retention, and audit notes. Review it once with legal and security; evolve it like code thereafter.
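If the Data Bill of Materials is to evolve like code, one option is to keep it as structured data that a simple check can validate. The sketch below is one possible shape, not a standard: the `BomEntry` class, its attribute names, and the rule that only audit notes may be left blank are assumptions for the example.

```python
from dataclasses import dataclass, fields


@dataclass
class BomEntry:
    dataset: str
    fields_used: list
    owner: str
    lawful_basis: str
    latency_target: str
    retention: str
    audit_notes: str = ""  # assumption: optional, everything else is required


def missing_items(entry):
    """Return the names of required attributes that were left empty."""
    return [
        f.name
        for f in fields(entry)
        if f.name != "audit_notes" and not getattr(entry, f.name)
    ]


entry = BomEntry(
    dataset="billing.invoices_view",    # hypothetical read-only warehouse view
    fields_used=["account_id", "days_overdue"],
    owner="",                           # left blank on purpose
    lawful_basis="contract",
    latency_target="daily",
    retention="13 months",
)
gaps = missing_items(entry)  # flags "owner" as the only gap
```

A check like this can run in the same review pipeline as the agent's prompts and policies, so a new dataset cannot be added without an owner or a retention rule.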

Measure what matters: the three‑layer scorecard

The problem. Teams often celebrate proxy metrics that look great in dashboards but don’t move the business. Executives remain unconvinced and expansion stalls.

How this helps. Tie agent activity to business value with a scorecard that is simple, defensible, and predictive. It has three layers, each clarifying a different question: did the business benefit; does behavior suggest we will continue to benefit; and are we governing the system well enough to scale?

At the top sit business outcomes, the only metrics that close the loop for finance: revenue, pipeline, win‑rate, cost‑to‑serve, retention, and cycle time. In the middle are leading indicators of quality—signals that plausibly precede those outcomes, such as customer engagement, response latency, backlog clearance, or SLA adherence. At the bottom are governance metrics that earn the right to automate more: explanation quality, override and appeal rates, safety and privacy checks, on‑policy percentages, and audit completeness.
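To make the governance layer concrete, the sketch below computes two of its metrics from a list of logged decisions and places them in a three‑layer structure. The record layout, the `Scorecard` class, and the definition of "complete" (inputs, prompt, model version, and output all present) are assumptions for illustration; the numbers in the usage lines are made‑up sample values, not results.

```python
from dataclasses import dataclass

# Assumption: an audit record is complete when all of these are present.
REQUIRED_AUDIT_KEYS = ("inputs", "prompt", "model_version", "output")


@dataclass
class Scorecard:
    business_outcomes: dict   # e.g. win-rate, cost-to-serve
    leading_indicators: dict  # e.g. response latency, SLA adherence
    governance: dict          # e.g. override rate, audit completeness


def override_rate(decisions):
    """Share of agent decisions that a human overrode."""
    if not decisions:
        return 0.0
    return sum(1 for d in decisions if d.get("overridden")) / len(decisions)


def audit_completeness(decisions):
    """Share of decisions whose audit record has every required key filled in."""
    if not decisions:
        return 0.0
    complete = sum(
        1 for d in decisions if all(d.get(k) for k in REQUIRED_AUDIT_KEYS)
    )
    return complete / len(decisions)


# Made-up sample log: four decisions, one overridden, one missing its prompt.
base = {"inputs": "ctx", "prompt": "p", "model_version": "v1", "output": "o"}
decisions = [
    {**base, "overridden": False},
    {**base, "overridden": True},
    {**base, "overridden": False},
    {**base, "prompt": "", "overridden": False},
]

card = Scorecard(
    business_outcomes={"cycle_time_days": 12},        # sample value
    leading_indicators={"sla_adherence": 0.96},       # sample value
    governance={
        "override_rate": override_rate(decisions),            # 0.25
        "audit_completeness": audit_completeness(decisions),  # 0.75
    },
)
```

Tracking the reason for each override alongside the rate is what turns the metric into the training signal described earlier; the rate alone only says how often humans disagreed.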

What good looks like at 90 days

By the end of a quarter, outcomes should be moving and traceable to agent‑driven decisions. Rationales are improving, and reviewers spend less time asking for missing context. Data paths remain right‑sized and do not grow heavier than the purpose demands. Most importantly, you have a plan for scaled optimization: a documented list of actions that are now safe to auto‑execute and another that will always require approval.