Many people today run brick-and-mortar locations, such as retail stores, grocery stores, or factory floors, and want to deploy AI there. However, AI at the edge is hard to manage, hard to monitor, and hard to keep under control. In this article, I’ll talk about how to manage your Edge AI with relative ease while staying in control of it.

Why Edge AI?

First up, edge AI lets you reduce latency dramatically by bringing computation closer to the data source. As they say, “Bandwidth is cheap, latency is expensive”.

However, in the case of Vision AI, bandwidth isn’t cheap either. Let’s run some quick numbers. A typical fisheye camera produces anywhere from 20 to 30 megabits per second (Mbps) of compressed video. A medium-sized store needs about 8 cameras, for a total of roughly 160 to 240 Mbps, or about 200 Mbps of upstream bandwidth if you stream everything off-site. That means you need a fiber internet connection, and fiber coverage in the US is sorely lacking; where it is available, it is prohibitively expensive.
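The arithmetic is easy to check. The short Python sketch below multiplies the per-camera bitrate by the camera count and also converts the result into daily data volume, assuming the streams run around the clock and using decimal units. The function names are just for illustration.

```python
def store_uplink_mbps(cameras: int, per_camera_mbps: float) -> float:
    """Sustained upstream bandwidth needed to ship every camera stream off-site."""
    return cameras * per_camera_mbps


def daily_terabytes(mbps: float) -> float:
    """Data volume per day for a constant stream, in decimal terabytes."""
    megabits_per_day = mbps * 86_400          # seconds per day
    return megabits_per_day / 8 / 1_000_000   # bits -> bytes, MB -> TB


CAMERAS = 8  # a medium-sized store, per the estimate above

for bitrate in (20, 25, 30):  # compressed fisheye bitrates, in Mbps
    total = store_uplink_mbps(CAMERAS, bitrate)
    print(f"{CAMERAS} cameras x {bitrate} Mbps = {total:.0f} Mbps "
          f"(~{daily_terabytes(total):.2f} TB/day)")
```

It prints 160, 200, and 240 Mbps, or roughly 1.73, 2.16, and 2.59 TB of video per day for a single store.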

Running on the edge also lets you control your privacy and security posture: your data, and your customers’ data, never leave your premises.

Finally, it lets you keep AI systems in your control. We don’t want a Skynet situation, do we?

Pressure of AI adoption

According to a recent KPMG study, investor pressure for AI adoption jumped from 68% to 90% in just the last quarter.

Over half of S&P 500 companies mentioned “AI investments” in their latest earnings calls.

This surge reflects not just elevated chatter but intense pressure from investors, analysts, and board members. Everyone and their grandmother is demanding an “AI strategy”.

The conclusion is pretty clear: to keep your “edge”, you need to adopt AI.

State of AI Adoption

Let’s talk about how the industry is adopting AI right now. Here are some reports from industry leaders.

“95% of AI pilot programs fail to deliver P&L impact.” – MIT NANDA, July 2025

“Orgs abandon 46% of AI PoCs prior to production.” – S&P Global Market Intelligence 2025 Generative AI Outlook

“42% of execs said AI adoption was tearing their company apart.” – Writer, March 2025

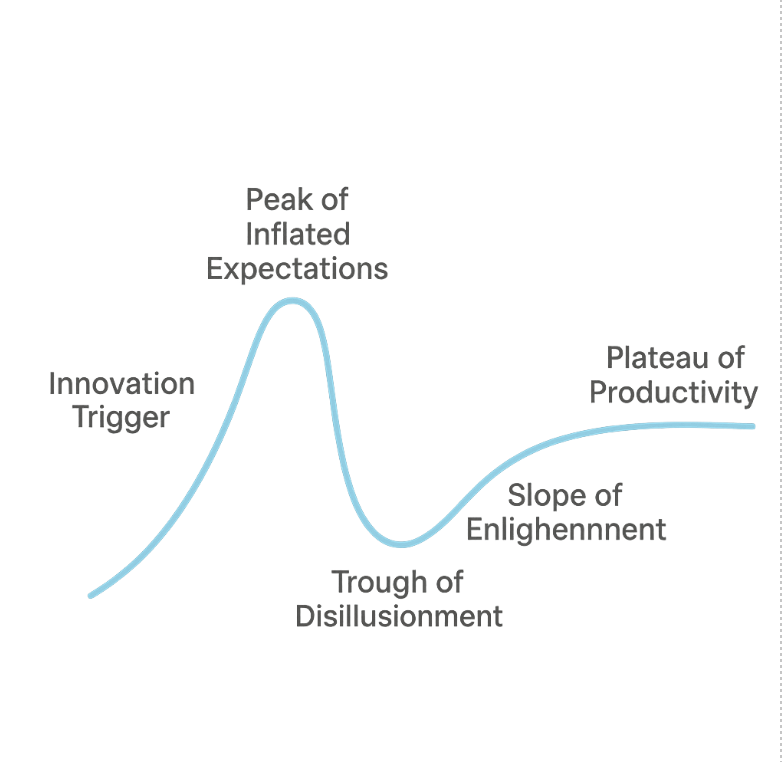

Consider the typical adoption curve for a new technology, shown above. All the signals indicate that we’re on the downward slope, before productivity stabilizes.

The Pilot Problem

A lot of retailers are facing what I call “The Pilot Problem”. Let’s say you’re running some AI pilots.

Vendor pilots in this space often arrive as a black box.

They have custom hardware, custom software, and custom telemetry.

For IT teams, this means dealing with heterogeneous deployments: GPUs in cameras, cooler screens, displays, or POS systems. There are also smart cameras now that let you run some inference on-device. On the one hand, it’s interesting tech. On the other hand, it’s one more complication: How do you deploy to it? How do you monitor it?

You have limited or no visibility into these vendor systems. There’s an uncomfortable security exposure: multiple authentication implementations and PII concerns throughout.

IT teams are forced to support, patch, and secure each pilot as if it were production-grade. What looks like innovation to the business side often looks like chaos to IT.

How?

So, we’ve established you need Edge AI. Now, let’s talk about how you would manage it with ease. If you’re a retailer, this knowledge would let you judge how mature your vendors really are.

AI Apps

Let’s start with the first piece: the applications themselves. Are AI applications really special? Short answer: no, not really.

AI applications are basically just software running on semi-specialized hardware.

We can and should use open, standard terms to describe AI apps. What are the inputs and outputs? What filesystem access does the app need? What are its network requirements? What sort of compute load does it generate? What kind of monitoring does it require? And so on. Any question you would ask of an API app, you should ask of an AI app.
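One way to make these questions concrete is a machine-readable descriptor that every app, vendor-supplied or in-house, has to fill in. The sketch below is hypothetical: `AppDescriptor`, its field names, and the example app are illustrations, not an established schema. `None` means a question is still unanswered, while an empty list means "explicitly none".

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class AppDescriptor:
    """Answers to the standard questions you would ask of any service (illustrative schema)."""
    name: str
    inputs: Optional[list[str]] = None      # e.g. camera streams
    outputs: Optional[list[str]] = None     # e.g. events on a message bus
    filesystem: Optional[list[str]] = None  # paths read or written; [] means none needed
    network: Optional[list[str]] = None     # endpoints the app must reach
    compute: Optional[str] = None           # expected load, e.g. "1 GPU, ~4 GiB memory"
    monitoring: Optional[str] = None        # how the app exposes its health

    def unanswered(self) -> list[str]:
        """Return the questions this descriptor still leaves open."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


# Hypothetical vision app whose vendor has not said how health is exposed.
shelf_scanner = AppDescriptor(
    name="shelf-gap-detector",
    inputs=["rtsp camera streams"],
    outputs=["out-of-stock events"],
    filesystem=[],  # stateless: explicitly needs no filesystem access
    network=["message bus"],
    compute="1 GPU, ~4 GiB memory",
)
print(shelf_scanner.unanswered())  # ['monitoring']
```

The distinction between `None` and `[]` matters: an app that needs no filesystem access is fully described, while a blank answer is a gap worth raising before a pilot reaches a store.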

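AI apps must be containerized. When Standard started, like a lot of young startups, our deploy strategy was immature. All software dependencies were installed by hand, and deploys involved ssh’ing onto the production server and running “git pull”. A container packages the app together with its dependencies, so every deploy ships the same versioned artifact instead of whatever happens to be on the server.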

In conclusion, don’t put your AI on a pedestal: version it, monitor it, and move on.

Orchestration

So, you’ve built your AI apps, but you need a tool that can orchestrate how and when to run those applications. The best tool for orchestration of edge applications is Kubernetes.

You might ask: there’s no auto-scaling on-prem, so why take on the complexity of Kubernetes? It’s a fair question. Kubernetes used to be hard, but that’s not really the case anymore, even on-prem. There are lightweight Kubernetes distributions built for low-power edge devices; you can even run Kubernetes on a Raspberry Pi at this point.

Kubernetes runs containerized applications with ease and recovers automatically. If a server fails, your app is moved to another node in the cluster with minimal downtime and no manual intervention. If you’re already using Kubernetes in the cloud, having the same infrastructure across cloud and edge is super valuable.
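For illustration, here is a minimal Kubernetes Deployment for a GPU-backed inference app, built as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts just like YAML. The app name, image, and `/healthz` endpoint are hypothetical, and the `nvidia.com/gpu` resource assumes the NVIDIA device plugin (or an equivalent) is installed on the node.

```python
import json


def deployment(name: str, image: str, gpus: int = 1, memory: str = "4Gi") -> dict:
    """Build a minimal apps/v1 Deployment for a GPU-backed inference service."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # The Deployment controller keeps this many pods running; if a node
            # dies, replacement pods are scheduled onto a healthy node.
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {
                            # Assumes the NVIDIA device plugin advertises GPUs
                            # under this resource name.
                            "limits": {"nvidia.com/gpu": str(gpus), "memory": memory},
                        },
                        # The kubelet restarts the container if this check keeps failing.
                        "livenessProbe": {
                            "httpGet": {"path": "/healthz", "port": 8080},
                            "periodSeconds": 10,
                        },
                    }],
                },
            },
        },
    }


manifest = deployment("shelf-gap-detector", "registry.example.com/shelf-gap-detector:1.4.2")
print(json.dumps(manifest, indent=2))  # e.g. pipe into: kubectl apply -f -
```

The self-healing comes from two places: the Deployment controller replaces pods lost with a failed node, and the kubelet restarts a container whose liveness probe keeps failing.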

You can also easily deploy supporting services, such as a message bus, a database, or a cache.

Finally, my favorite part is that Kubernetes lets me treat physical servers as disposable. You don’t want to get attached to the metal. I install a vanilla OS and my favorite Kubernetes distribution, and that’s it. Kubernetes handles everything else, including GPU drivers. If a server fails, I replace it with another one without skipping a beat.

Application Specification

An application specification encapsulates all the infrastructure requirements for an app. What’s the stable version for each environment? How much memory does it need? What permissions does it need? And so on. My favorite tool for managing app specs and deploys is ArgoCD.
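As a rough sketch, the spec for one app could look like the Python dataclass below. The field names, permission strings, and image registry are hypothetical; in practice this information typically lives in YAML or Helm values in a git repository rather than in Python.

```python
from dataclasses import dataclass, field


@dataclass
class AppSpec:
    """Hypothetical app spec: infrastructure needs plus a stable version per environment."""
    name: str
    image: str                    # image repository, without a tag
    memory: str                   # memory limit, in Kubernetes quantity notation
    permissions: tuple[str, ...]  # capabilities the app is allowed to use
    versions: dict[str, str] = field(default_factory=dict)  # environment -> pinned tag

    def image_for(self, env: str) -> str:
        """Return the fully tagged image that is considered stable in `env`."""
        if env not in self.versions:
            raise ValueError(f"{self.name} has no pinned version for {env!r}")
        return f"{self.image}:{self.versions[env]}"


spec = AppSpec(
    name="shelf-gap-detector",
    image="registry.example.com/shelf-gap-detector",
    memory="4Gi",
    permissions=("read:camera-streams", "publish:events"),
    versions={"staging": "1.5.0-rc1", "production": "1.4.2"},
)
print(spec.image_for("production"))  # registry.example.com/shelf-gap-detector:1.4.2
```

Pinning an explicit version per environment means promoting a release is a one-line change to `versions`, which fits naturally with a git-based review process.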

It’s declarative: Argo follows what’s called the gitOps framework (more on that below). It automatically rolls out your application changes, and you can manage multiple environments from a single instance.

There’s support for various forms of manifests – Helm, Kustomize, plain YAML files.
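To show what "declarative" means here, the sketch below generates one ArgoCD `Application` per environment; the fields follow ArgoCD's `argoproj.io/v1alpha1` Application resource. The manifests repo URL, cluster endpoints, and the `<env>/<app>` directory layout are hypothetical, and each destination cluster would need to be registered with ArgoCD first.

```python
import json

# Hypothetical manifests repo and cluster API endpoints.
MANIFESTS_REPO = "https://git.example.com/retail/manifests.git"
CLUSTERS = {
    "staging": "https://staging-store.example.com:6443",
    "production": "https://store-0042.example.com:6443",
}


def argocd_application(app: str, env: str) -> dict:
    """Build an ArgoCD Application that syncs one app into one environment's cluster."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"{app}-{env}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": MANIFESTS_REPO,
                "targetRevision": "HEAD",
                # ArgoCD detects plain YAML/JSON, Kustomize, or Helm in this directory.
                "path": f"{env}/{app}",
            },
            "destination": {"server": CLUSTERS[env], "namespace": app},
            # Automated sync: apply changes from git, delete resources removed
            # from git, and revert manual drift on the cluster.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


for env in CLUSTERS:
    print(json.dumps(argocd_application("shelf-gap-detector", env), indent=2))
```

With `automated` sync enabled, ArgoCD applies whatever the repo says; `prune` removes resources deleted from git, and `selfHeal` reverts manual changes made directly on the cluster.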

Finally, it’s free and open source with a beautiful UI and UX. It’s truly a joy to use.

gitOps

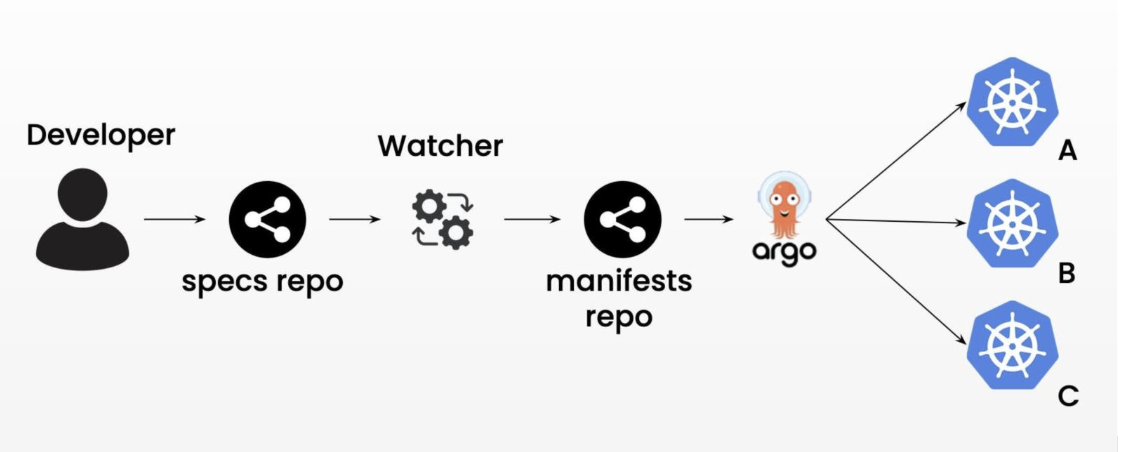

By far, my favorite deploy framework is gitOps: the practice of using git repositories as the source of truth for your infrastructure, including application specifications. gitOps lets you apply software development lifecycle best practices to your manifests. You can do code reviews, audit your deploys, version them, and trivially roll back.

Here’s a workflow for adopting gitOps:

- A developer commits application specification changes to a specs repo.

- A watcher component renders Kubernetes manifests from those specs and commits them to the manifests repo.

- ArgoCD polls the manifests repo for changes and applies the changed manifests to the appropriate clusters.
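The watcher step could be sketched as follows. This is a hypothetical implementation, not a specific tool: the spec format, the `<env>/<app>/deployment.json` layout, and the function names are assumptions, and the final git commit and push are left out. A CI job triggered by commits to the specs repo could run it.

```python
import json
import tempfile
from pathlib import Path


def render(spec: dict, env: str) -> dict:
    """Turn one app spec into a Deployment manifest for one environment."""
    name = spec["name"]
    labels = {"app": name}
    container = {
        "name": name,
        "image": f"{spec['image']}:{spec['versions'][env]}",
        "resources": {"limits": {"memory": spec["memory"]}},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": {"containers": [container]}},
        },
    }


def sync_manifests(specs: list[dict], manifests_root: Path) -> list[Path]:
    """Write each rendered manifest to <root>/<env>/<app>/deployment.json.

    Returns only the files whose contents changed. Keys are sorted so that an
    unchanged spec renders byte-for-byte identically and produces no git diff.
    """
    changed = []
    for spec in specs:
        for env in spec["versions"]:
            path = manifests_root / env / spec["name"] / "deployment.json"
            text = json.dumps(render(spec, env), indent=2, sort_keys=True) + "\n"
            if not path.exists() or path.read_text() != text:
                path.parent.mkdir(parents=True, exist_ok=True)
                path.write_text(text)
                changed.append(path)
    # A real watcher would now commit and push `changed` to the manifests repo;
    # that git step is intentionally left out of this sketch.
    return changed


# Hypothetical spec, as it might be read from the specs repo.
specs = [{
    "name": "shelf-gap-detector",
    "image": "registry.example.com/shelf-gap-detector",
    "memory": "4Gi",
    "versions": {"staging": "1.5.0-rc1", "production": "1.4.2"},
}]

with tempfile.TemporaryDirectory() as root:
    print(len(sync_manifests(specs, Path(root))))  # 2: one manifest per environment
    print(len(sync_manifests(specs, Path(root))))  # 0: nothing changed, nothing to commit
```

Serializing with sorted keys makes rendering deterministic, so an untouched spec produces no diff in the manifests repo and ArgoCD has nothing to apply.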

All of this takes about a minute or two, so your deploys are really quick.

Manage Compute (Cluster)

So, we’ve established that you should use a standard application specification format and Kubernetes in combination with gitOps. However, since we’re running at the edge, there are potentially thousands of independent compute clusters, and we need some way to manage all of them.

There are several solutions in this space. One of my favorites is Google Distributed Cloud (GDC). It’s based on Kubernetes, so you don’t have to change anything about application packaging or orchestration. There’s an integrated hardware and software offering, which lets you offload some of the physical work of maintaining machines in the wild. GDC has first-class support for cutting-edge AI, including running some LLMs on the edge!

There’s also Rancher from SUSE, open-source software built on Kubernetes. Installation is straightforward, it’s stable and battle-tested, and extended (paid) support options are available.

Talos takes an interesting approach: it’s a new Linux distribution built specifically for Kubernetes, with first-class support for running Kubernetes on bare metal. It’s managed entirely through an API, so there’s no need to ssh onto a production server, ever! The operating system is immutable, which increases the resiliency and reliability of the system. There are also related offerings, like Omni, which let you control all your clusters from a single dashboard.

If none of these fit the bill, you could also develop a “home-grown” solution based on Docker Compose.

Monitoring

Now that we’ve deployed all this software, you obviously need some way to monitor its health. My recommendation is a standard stack of OpenTelemetry, Prometheus, and Grafana; you can’t go wrong with it.

Every application must expose its health through metrics or HTTP endpoints that can be scraped. That health signal must capture everything that makes the application healthy. Is it able to reach its database? Is it keeping up with the incoming throughput? Is it stuck in a crash loop? In addition, you want custom metrics that expose detailed health status.
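As a dependency-free sketch of such an endpoint, the Python below serves a JSON health report on `/healthz` and the same information in Prometheus text exposition format on `/metrics`. The two checks, the queue-depth threshold, and the metric names are hypothetical placeholders; in production you might use the Prometheus or OpenTelemetry client libraries instead of hand-formatting metrics.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

MAX_QUEUE_DEPTH = 100  # hypothetical: beyond this backlog we are "not keeping up"


def database_reachable() -> bool:
    """Placeholder check; replace with a cheap query against the real database."""
    return True


def queue_depth() -> int:
    """Placeholder: number of frames waiting for inference."""
    return 3


def health_report() -> dict:
    """Run every check and summarize them into one health verdict."""
    checks = {
        "database_reachable": database_reachable(),
        "keeping_up": queue_depth() <= MAX_QUEUE_DEPTH,
    }
    return {"healthy": all(checks.values()), "checks": checks}


def prometheus_metrics() -> str:
    """Render the same health data in Prometheus text exposition format."""
    report = health_report()
    lines = [
        "# HELP app_healthy Whether every health check passes (1) or not (0).",
        "# TYPE app_healthy gauge",
        f"app_healthy {int(report['healthy'])}",
        "# HELP app_check_passed Result of each individual health check.",
        "# TYPE app_check_passed gauge",
    ]
    for name, ok in report["checks"].items():
        lines.append(f'app_check_passed{{check="{name}"}} {int(ok)}')
    lines += [
        "# HELP app_queue_depth Frames waiting for inference.",
        "# TYPE app_queue_depth gauge",
        f"app_queue_depth {queue_depth()}",
    ]
    return "\n".join(lines) + "\n"


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/healthz":
            report = health_report()
            body = json.dumps(report).encode()
            status = 200 if report["healthy"] else 503  # 503 lets probes react
            ctype = "application/json"
        elif self.path == "/metrics":
            body = prometheus_metrics().encode()
            status, ctype = 200, "text/plain; version=0.0.4"
        else:
            body, status, ctype = b"not found\n", 404, "text/plain"
        self.send_response(status)
        self.send_header("Content-Type", ctype)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Block forever, answering health and metrics scrapes on `port`."""
    HTTPServer(("", port), HealthHandler).serve_forever()
```

Call `serve()` to listen on port 8080. Returning 503 lets the same endpoint back a Kubernetes probe; whether a failed database check should restart the container (liveness) or only stop traffic to it (readiness) is a design choice. A crash loop is hard for an app to report about itself, so it is better observed from outside, for example through container restart counts.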

Conclusion: Your Edge AI Journey Starts Now

The pressure to adopt AI is real, yet as we’ve seen, 95% of AI pilots fail to deliver P&L impact. The difference between success and failure? Control and standardization. Edge AI isn’t just about latency reduction or bandwidth savings, it’s about maintaining sovereignty over your AI infrastructure. By treating AI applications as what they truly are—containerized software on specialized hardware—you can escape the pilot purgatory that traps so many organizations.

The path forward is clear: containerize everything, embrace Kubernetes, adopt gitOps, monitor relentlessly, and demand transparency. The retail landscape is littered with abandoned AI pilots and unfulfilled promises. But armed with the right approach, treating AI as disciplined software engineering rather than magic, you can join the 5% who deliver real business value.

Remember: The goal isn’t to have AI. The goal is to own your edge, literally and figuratively. Start with one properly architected deployment. Prove the model. Then scale with confidence.

Your customers are waiting. Your competitors are moving. And now you have the blueprint to do Edge AI right.