Trust has always been the foundation of cooperation and commerce. From the barter systems of ancient times to today’s complex global markets, trust enables individuals and institutions to engage confidently in transactions and collaborations. Research has found that a 10-percentage-point increase in social trust is associated with measurably higher GDP growth. Yet today trust is under unprecedented pressure, as AI-generated fraud erodes confidence at a rapid pace.

Trust Through History

Ask AI itself, and it defines trust as confidence that someone or something will act reliably, honestly, and as expected: the belief that people, systems, or data will perform as promised. As technology evolves, our understanding of trust, and the mechanisms we use to establish and maintain it, must evolve with it for this new digital landscape.

Ancient written travel documents dating back to around 450 BC served as early trust mechanisms that enabled safe passage. These documents did not include any portraits; identity verification relied on written descriptions, official seals, and the authority of the issuer.

The modern concept of the passport emerged much later, with standardized passports introduced in the 19th and 20th centuries to regulate cross-border movement. British passports of the late 19th century, for example, were simple paper documents without photos, intended primarily to certify identity and permission to travel.

It was only in the 20th century, particularly after World War I, that passports began incorporating photographs and more detailed identification data to strengthen security and trust. These developments laid the groundwork for today’s identity verification systems and have underpinned trust in human-to-human interactions for more than a century.

These identity documents established trust as a societal cornerstone, enabling people and goods to move securely across regions. Today we face a similar turning point: the digital realm needs an updated “cornerstone” of its own.

Is Trust a Timeless Problem?

According to Sequoia Capital, the era of the AI agent economy will arrive within a few years. The AI sector’s market size today is around $15 billion, just 0.15% of the global IT software and services market, valued at approximately $10 trillion. If AI follows the path of SaaS, which grew from about 2% to 60% of the software market in just 14 years, it could become a multitrillion-dollar industry by 2040: 60% of today’s $10 trillion market alone would be roughly $6 trillion.
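The projection above is simple share arithmetic. The Python sketch below reproduces it using only the approximate figures quoted in this section. As a simplifying assumption, it holds the overall software and services market fixed at $10 trillion, so any growth in that market by 2040 would push the result higher.

```python
# Approximate figures quoted in the text, in US dollars.
GLOBAL_SOFTWARE_AND_SERVICES = 10e12  # ~$10 trillion
AI_MARKET_TODAY = 15e9                # ~$15 billion


def share(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return part / whole * 100


def projected_size(total_market: float, target_share_pct: float) -> float:
    """Size of a segment that captures target_share_pct of total_market.

    Assumption for illustration only: the total market stays at its current size.
    """
    return total_market * target_share_pct / 100


current_share = share(AI_MARKET_TODAY, GLOBAL_SOFTWARE_AND_SERVICES)
saas_like_size = projected_size(GLOBAL_SOFTWARE_AND_SERVICES, 60)

print(f"AI share today: {current_share:.2f}%")                   # 0.15%
print(f"At a SaaS-like 60% share: ${saas_like_size / 1e12:.0f} trillion")  # $6 trillion
```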

This exponential growth offers huge opportunities but also significant risks. Trust has already expanded beyond human-to-human interactions into digital ecosystems where autonomous AI agents transact, communicate, and make decisions independently. The fundamental problem is persistent identity: how can we be sure who or what is behind every digital interaction?

As AI rapidly spreads across industries, criminals are mastering it too, using it to commit synthetic fraud and subvert trust systems. The rise of autonomous AI agents therefore requires a new kind of trust that extends beyond traditional human verification.

Expanding Trust Layers in Digital Ecosystems

Trust originally existed between humans and was verified through government-issued documents. Passports and official IDs created the foundational trust infrastructure that society relied upon for identity verification.

Digital human profiles now augment these traditional identities. They compile biometrics, social behaviors, transaction histories, and other data points to provide a fuller and more dynamic view of identity. Companies like Social Links develop these digital profiles to enhance trust in online interactions, bridging physical and digital identity verification.

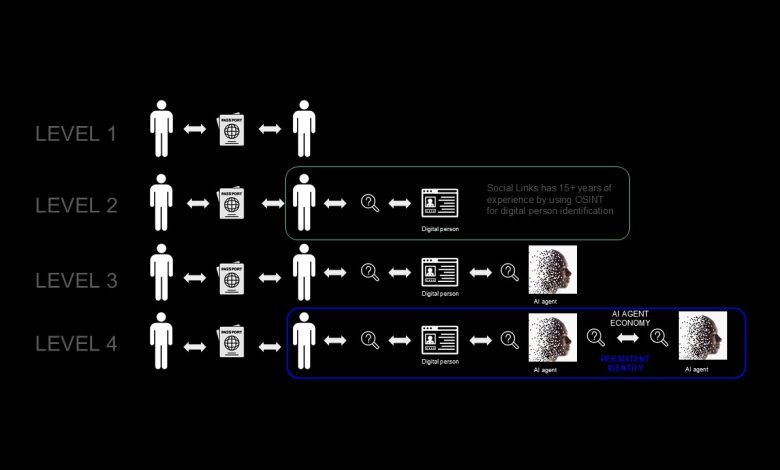

The next trust layer incorporates AI agents that act on behalf of humans or organizations. These AI agents must be verifiable and trustworthy themselves to ensure that interactions within the digital ecosystem maintain integrity. This creates a four-tiered trust model:

– Level 1: Human-to-human trust verified by passports and official government documents.

– Level 2: Human-to-human trust enhanced with rich digital human profiles.

– Level 3: Trust extended to a human’s AI agents, confirming and maintaining previous trust layers.

– Level 4: Trust between AI agents themselves, representing different individuals or companies.
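One way to make the four-tier model concrete is to treat the levels as cumulative, so that an interaction between agents is trusted only if every lower layer holds. That reading is an interpretation of Level 3’s “confirming and maintaining previous trust layers,” not a specification of the model. The minimal Python sketch below encodes it; `TrustLevel`, `Evidence`, and its fields are hypothetical placeholders for real verification results.

```python
from dataclasses import dataclass
from enum import IntEnum


class TrustLevel(IntEnum):
    NONE = 0
    GOVERNMENT_ID = 1     # Level 1: passport or official ID verified
    DIGITAL_PROFILE = 2   # Level 2: rich digital human profile verified
    DELEGATED_AGENT = 3   # Level 3: the human's AI agent verified as acting for them
    AGENT_TO_AGENT = 4    # Level 4: the counterparty's AI agent verified in turn


@dataclass
class Evidence:
    # Hypothetical flags; a real system would fill these from actual checks.
    government_id_verified: bool = False
    digital_profile_verified: bool = False
    agent_bound_to_owner: bool = False
    counterparty_agent_verified: bool = False


def trust_level(e: Evidence) -> TrustLevel:
    """Return the highest level reached, requiring every lower level first."""
    checks = [
        (TrustLevel.GOVERNMENT_ID, e.government_id_verified),
        (TrustLevel.DIGITAL_PROFILE, e.digital_profile_verified),
        (TrustLevel.DELEGATED_AGENT, e.agent_bound_to_owner),
        (TrustLevel.AGENT_TO_AGENT, e.counterparty_agent_verified),
    ]
    level = TrustLevel.NONE
    for candidate, passed in checks:
        if not passed:
            break  # a gap in a lower layer caps trust at the level below it
        level = candidate
    return level


print(trust_level(Evidence(True, True, True, False)).name)  # DELEGATED_AGENT
print(trust_level(Evidence(False, True, True, True)).name)  # NONE
```

The second call shows the design choice: a verified agent cannot make up for an unverified human behind it.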

The Path Forward

The complexity grows as AI agents begin interacting autonomously on behalf of different humans or companies. This leads to the core challenge: how can the system ensure that AI agents can verify who or what stands behind one another?

One promising solution is a universal interaction protocol for AI agents, backed by a security service that assesses AI identities and fraud risks in real time using OSINT (open-source intelligence) and blockchain technologies. We are pioneering this approach, working to create a secure ecosystem where AI agents interact with a high degree of trustworthiness.
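The sketch below illustrates, under heavy assumptions, what a minimal real-time verification service of this kind might look like; it is not the protocol described here, whose details are not given. A hypothetical registry issues each agent a credential binding it to its owner (an HMAC-SHA256 tag from Python’s standard `hmac` module), and an agent can ask the registry to verify a counterparty before transacting. The risk scores merely stand in for OSINT-derived fraud signals, and no blockchain component is modeled. `VerificationService`, `AgentCredential`, and all method names are invented for illustration.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCredential:
    agent_id: str
    owner_id: str  # the human or company the agent acts for
    tag: str       # registry's HMAC-SHA256 tag over (agent_id, owner_id)


class VerificationService:
    """Hypothetical registry that issues agent credentials and verifies them on request."""

    def __init__(self, risk_threshold: float = 0.5) -> None:
        self._key = secrets.token_bytes(32)  # secret known only to the registry
        self._risk: dict[str, float] = {}    # agent_id -> fraud-risk score in [0, 1]
        self.risk_threshold = risk_threshold

    def _tag(self, agent_id: str, owner_id: str) -> str:
        # JSON encoding keeps the two fields unambiguous (no delimiter collisions).
        msg = json.dumps([agent_id, owner_id]).encode()
        return hmac.new(self._key, msg, hashlib.sha256).hexdigest()

    def issue(self, agent_id: str, owner_id: str) -> AgentCredential:
        # Assumes the owner has already been verified (Levels 1 and 2).
        return AgentCredential(agent_id, owner_id, self._tag(agent_id, owner_id))

    def report_risk(self, agent_id: str, score: float) -> None:
        # Stand-in for real fraud signals (e.g. OSINT); the caller supplies the score.
        self._risk[agent_id] = min(1.0, max(0.0, score))

    def verify(self, cred: AgentCredential) -> bool:
        expected = self._tag(cred.agent_id, cred.owner_id)
        if not hmac.compare_digest(expected, cred.tag):
            return False  # forged or altered credential
        # Agents with no reported risk default to zero risk in this sketch.
        return self._risk.get(cred.agent_id, 0.0) < self.risk_threshold


registry = VerificationService()
buyer = registry.issue("agent-buyer-01", "alice")
seller = registry.issue("agent-seller-07", "acme-corp")

# Each agent asks the registry about its counterparty before transacting.
print(registry.verify(seller))  # True
forged = AgentCredential("agent-seller-07", "mallory", seller.tag)
print(registry.verify(forged))  # False: tag does not match the claimed owner
registry.report_risk("agent-buyer-01", 0.9)
print(registry.verify(buyer))   # False: risk score above threshold
```

Because HMAC keys are symmetric, only the registry can check a credential, which fits the idea of a central service answering queries in real time. A decentralized variant would instead give each agent a public-key signature (for example Ed25519, available in Python through the third-party `cryptography` package rather than the standard library).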

The stakes could not be higher. As early as March 2024, Assaf Rappaport, CEO of Wiz, warned that “criminals are doing a better job leveraging AI for attacks than we are,” underscoring the urgent need for advanced trust frameworks. The global digital identity verification market is also expanding rapidly and is projected to reach approximately $29 billion by 2030, a compound annual growth rate of nearly 15%.

The AI software market itself remains modest by comparison: around $15 billion in 2024, a mere 0.15% of the $10 trillion IT software and services industry. However, if AI adoption accelerates the way SaaS did, rising from 2% to 60% of the total software market, the AI software sector could swell into a multitrillion-dollar industry. That expansion would underpin trillions of dollars in economic activity powered by AI agents.

Within such a vast and evolving landscape, developing trust protocols becomes critical. Ensuring that AI agents can identify, verify, and trust each other will not only unlock immense economic potential but will also safeguard against escalating threats, fraud and cyberattacks.

Looking ahead, trillions of AI agents – ranging from software bots to humanoid robots – are expected to populate the digital economy by 2040. Embedding trust protocols early, based on transparent verification and open data, will be essential to protecting this complex digital ecosystem and securing its sustainable growth.

Trust in digital identity is the latest chapter in a timeless human challenge, now intensified by new technological dimensions. By building on centuries-old trust foundations and extending them with universal protocols, digital profiles, and AI verification, society can create reliable and verifiable identities for humans and AI agents alike.