The semiconductor industry has been the critical infrastructure enabler of the artificial intelligence revolution. From 2023 to 2025, logic semiconductors such as GPUs, used primarily for model training, saw the greatest momentum, turning market leaders such as NVIDIA into household names. Then, starting in late 2025, the momentum shifted to memory semiconductors: as AI moved from training to inference, it demanded immense amounts of data storage and retrieval. The resulting memory shortage has boosted the share prices of leading memory chip makers such as Micron and SanDisk several-fold in a matter of months.

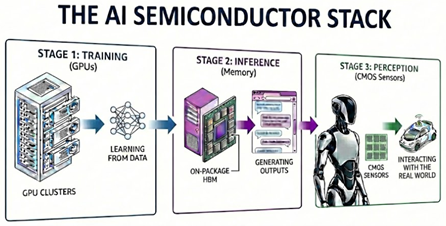

Investors are forward-looking, so the million-dollar question is: What comes after memory? The answer follows the three stages of AI development: first, model training; second, inference; and third, Physical AI. With GPUs and memory enabling the first two stages, it’s becoming increasingly evident that a still-overlooked chip—the CMOS sensor—will be the semiconductor driving the third.

CMOS Sensors Are the Eyes of AI

What is a CMOS sensor? CMOS stands for Complementary Metal-Oxide-Semiconductor, the chip technology used to build the image sensors that let Physical AI systems, such as autonomous vehicles and humanoid robots, see, interpret, and respond to the world around them. Simply put, if GPUs and memory are the “brains” of AI, then CMOS sensors are the “eyes.”

Technically, CMOS sensors, GPUs, and memory chips are all fabricated with the same underlying manufacturing process, which builds millions or billions of transistors onto chips cut from a silicon wafer. The key difference is how those transistors are used: a CMOS sensor pairs them with microscopic, light-sensitive photodiodes that convert incoming light into electrical signals, which are then digitized; a GPU arranges them for high-speed mathematical computation; and memory pairs them with capacitors (in DRAM) or floating gates (in flash) to store data.

Before the AI revolution, these chips all had mundane uses. GPUs rendered video games, and flash memory provided fast storage for personal computers and smartphones. Likewise, the CMOS sensor was best known for enabling smartphone cameras: every smartphone camera lens has a CMOS sensor directly behind it to capture and process incoming light. Yet its applications extend far beyond consumer electronics; it is also a foundational component of Physical AI.

Physical AI: The Next Frontier of AI

The era of Generative AI has been defined by the text generation abilities of large language models. But the next frontier is Physical AI—systems capable of perceiving, navigating, and acting autonomously in the real world.

Physical AI is broadly categorized into two areas: autonomous vehicles and robotics. Both require sophisticated sensors to interact with the environment safely and effectively. NVIDIA CEO Jensen Huang has described Physical AI as the “next frontier” of AI, with a $50 trillion market opportunity (NVIDIA). Similarly, Tesla CEO Elon Musk has said he expects up to 80% of Tesla’s future value to come from autonomous robots (NASDAQ), and Tesla is discontinuing several popular EV models in order to produce humanoid robots (Car & Driver).

And at the heart of enabling this shift are CMOS sensors.

Physical AI Will Significantly Increase Demand for CMOS Sensors

Unlike Generative AI, which is trained on pre-existing text and images, Physical AI must learn from real-world interactions. While some training can be done with synthetic data from digital-twin simulations, true autonomy requires a continuous, high-volume stream of data from live surroundings. This is where CMOS sensors step in: they are the core light-sensing technology behind both cameras and LiDAR sensors, turning raw environmental input into usable data for AI systems.

For autonomous vehicles, the difference is dramatic. Traditional cars typically have zero to three cameras. By contrast, the Level 2+ autonomous Sony-Honda Afeela carries 19 cameras plus LiDAR, and Waymo’s Level 4 autonomous vehicle employs a staggering 34 cameras plus LiDAR, which amounts to at least 34 CMOS sensors per vehicle.

The robotics sector presents an equally compelling case. Boston Dynamics’ Spot quadruped robot uses five optical cameras and five depth cameras, plus an optional LiDAR attachment: up to 11 CMOS sensors in a single robot (Boston Dynamics). As robots become more general-purpose and affordable (the cheapest Unitree quadrupeds are already priced under $3,000, and its humanoids start at $16,000), mass adoption will drive exponential demand for CMOS sensors.

CMOS Pricing Power: Oligopoly Meets Innovation

In addition to increasing sales volume, two key factors are driving higher prices in the CMOS industry: market structure and technology innovation. Combined, these factors are greatly accelerating CMOS market growth.

First, the CMOS sensor market is an oligopoly. Large capital requirements and deep technical expertise create high barriers to entry, giving incumbents significant pricing power. Sony commands nearly 50% market share, followed by Samsung (~20%) and OmniVision (~10%) (Yole). Both Sony and Samsung design and fabricate their sensors in-house, underscoring the bespoke nature of CMOS manufacturing. Premium CMOS sensors such as Sony’s already sell for 2.5x the price of entry-level alternatives (Third Point).

Second, the steady development of more technologically advanced chips lifts sensor prices. For instance, the unit cost of each Sony CMOS sensor in Apple’s iPhones more than doubled in only two years, from ~$7 in the iPhone 12 (PhoneArena) to ~$15 in the iPhone 14 (AppleInsider), driven mainly by higher camera resolution. Automotive CMOS sensors cost significantly more, exceeding $100 per unit (Sony). Highlighting the sector’s rapid growth, market leader Sony has grown its annual CMOS revenue roughly sixfold over the last decade, from about $2 billion to $12 billion (Sony).
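As a rough check on these growth figures, the compound annual growth rate (CAGR) implied by the approximate numbers can be worked out in a few lines. This is a minimal Python sketch under stated assumptions: the `cagr` helper is hypothetical and written only for illustration, "the last decade" is assumed to be exactly 10 years, and the inputs are the rounded figures quoted in the text, so the outputs are approximations rather than reported results.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Hypothetical helper: the constant yearly growth rate that turns
    start_value into end_value over the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded figures from the text; the 10-year span is an assumption.
SONY_REV_START, SONY_REV_END, SONY_YEARS = 2e9, 12e9, 10  # ~$2B -> ~$12B
IPHONE_COST_START, IPHONE_COST_END, IPHONE_YEARS = 7, 15, 2  # iPhone 12 -> 14

sony_revenue_cagr = cagr(SONY_REV_START, SONY_REV_END, SONY_YEARS)
iphone_sensor_cagr = cagr(IPHONE_COST_START, IPHONE_COST_END, IPHONE_YEARS)

# Total multiple over the period, then the implied per-year rate.
print(f"Sony CMOS revenue: {SONY_REV_END / SONY_REV_START:.1f}x total, "
      f"{sony_revenue_cagr:.1%} per year")
print(f"iPhone sensor unit cost: {IPHONE_COST_END / IPHONE_COST_START:.2f}x total, "
      f"{iphone_sensor_cagr:.1%} per year")
```

On these inputs the script prints a 6.0x total increase (about 19.6% per year) for Sony's CMOS revenue and a 2.14x increase (about 46.4% per year) for the iPhone sensor unit cost. Because the inputs are rounded, small changes to them would shift these rates noticeably.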

Looking ahead, Physical AI applications such as autonomous cars and robots demand even more advanced capabilities. Beyond higher resolution, CMOS sensors must deliver faster readout speeds to keep images sharp while moving, superior low-light sensitivity, and wider dynamic range to handle scenes that mix very bright and very dark areas, such as driving out of a tunnel into direct sunlight (Sony).

These improvements are enabled by breakthroughs such as multi-layer stacking, which combines the CMOS sensor, memory, and logic silicon into a single chip. Placing memory and processing directly beneath the pixels greatly reduces image-processing latency, a particularly important requirement for real-time operation of autonomous vehicles and humanoid robots.

The Underappreciated AI Chip Opportunity for Investors

Semiconductors are the backbone of AI, and while GPUs and memory have been the stars of the Generative AI era, CMOS sensors will be critical infrastructure for the Physical AI era. As autonomous vehicles and robots become mainstream, the CMOS sensor market is poised for exponential growth. For investors searching for the next big winner in the AI revolution, CMOS sensors are still an overlooked semiconductor growth story hiding in plain sight.

Author

Bob Ma is a Principal at Copec WIND Ventures, where he heads investments in AI, commerce, and fintech. Previously, he was a venture investor at Soma Capital and Causeway Media Partners, and was the head of strategy for Verizon’s go90 mobile television app. He earned an MBA from Stanford University, an MPA from Harvard University, and a BSc from The Wharton School.