You can’t really blame AI for today’s data deluge. The explosion is being driven by applications and an expanding range of use cases, particularly user-generated content, and many of them require audit trails that document AI decisions and events.

Users now effortlessly create videos, and those videos, along with drafts and earlier versions, often remain stored in cloud accounts indefinitely. Autonomous vehicle companies, meanwhile, can face fines if an accident cannot be reconstructed because of missing data, which creates a strong incentive to retain everything. As a result, more sensor data is being captured and stored, at higher frequency, and kept for longer periods of time. This is what is fueling the data explosion, and it amplifies the complex, multifaceted challenges data storage already faces:

Not Enough Storage: The HDD Shortage

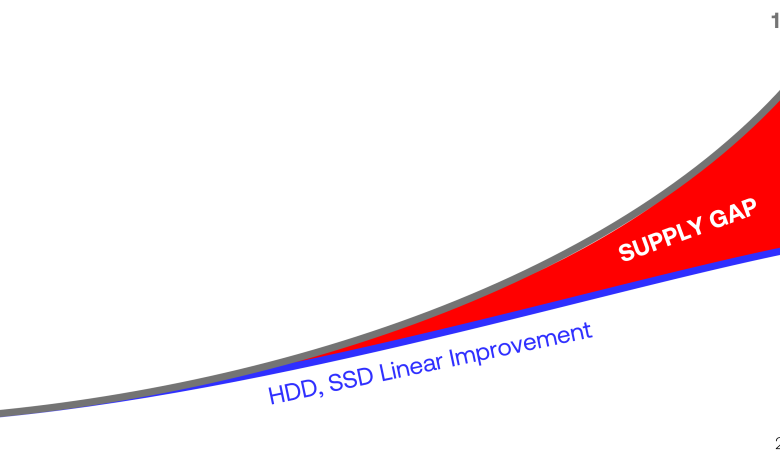

A classic supply gap is widening. The exponential growth curve of data is diverging from the flattening curve of storage-media capacity gains. Hard-drive lead times are reportedly stretching beyond two years, and prices are rising.

The industry is not prepared. Although analysts have warned about this for years, recent investments in storage technology have delivered disappointing returns. Manufacturers, focused on short-term gains, have pursued incremental improvements rather than major innovations, and now have little choice but to produce more of the same.

Most Data is Cold

Look at the data on your laptop or phone: much of it is rarely or never accessed. Only a tiny fraction, perhaps 1 percent or less, is used daily or weekly. Yet you don’t want the remaining 99 percent to disappear. The same pattern holds true for enterprises and hyperscale data centers. Most data is “cold” and stored for undefined periods of time, which often means decades and sometimes more than a century. Here is where the mismatch occurs: data with multi-decade lifetimes and near-zero access frequency is stored on media whose lifetime is measured in years. Think of YouTube-scale video archives, astronomical imagery, climate datasets, government records, national archives, and healthcare records that must outlive the patient. In fact, entire nations are going digital. Tuvalu, for example, may soon become the first country without physical territory due to rising sea levels, yet its digital state must persist.

Today’s storage technologies were designed for compute performance, not for true long-term digital preservation, which is why their service lifetimes are limited. Even magnetic tape, often treated as long-term cold storage, requires ongoing maintenance, migration, and periodic media replacement. Classifying data into tiers doesn’t really solve the issue either. While definitions vary, “cold” generally means rarely or never accessed. By that measure, most of the world’s data is cold.

Long-term Data Storage on Today’s Media is Unsustainable

Cold data is supposed to live on the most cost-efficient tier, which today is magnetic tape. But the reality is different. Only a small fraction is stored on tape, while much of the remaining cold data sits on hard disk drives (HDDs), which have a service life of only a few years. Roughly a million hard drives reach end of life every single day and are often shredded. Frequent media replacement produces massive amounts of electronic waste.

Long-term storage is also becoming financially unsustainable. Data is often described as a valuable digital asset, but the cost of keeping it for decades is turning into a major budget line item. IT budgets rarely scale at the same rate as data volumes, and that gap keeps widening.

Cold Data Isn’t Really Cold Anymore

Modern AI/ML systems are fueled by large datasets that are commonly labeled cold. When these datasets are needed for analysis, forecasting, pattern recognition, training, liability protection, diagnostics, and similar tasks, they must be warmed up promptly, which means they must be accessible and readily available.

When data must be retrieved quickly and repeatedly, tape can’t meet the need. HDDs can at first, but they become too expensive and energy-intensive over the long run when used as the default repository for decades of retention.

The AI Era Needs a New Storage Tier

The AI era requires a fundamentally new tier in the storage stack, one that combines very low cost with reasonable access time.

The ideal medium stores data permanently, without bit rot and without requiring energy to retain it. That removes four dominant cost drivers of long-term storage: media replacement, data migration, energy consumption, and continuous data maintenance.
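The cost argument above can be made concrete with a toy model. The sketch below is a minimal illustration, assuming a fixed-size archive and made-up cost parameters (the `MediaProfile` fields and every number are hypothetical, not vendor data). It charges a medium with a finite service life for new media plus a migration each time that life expires before the retention horizon, plus yearly energy and maintenance, and compares it with a medium that needs none of these.

```python
import math
from dataclasses import dataclass


@dataclass
class MediaProfile:
    """Hypothetical cost parameters for keeping one fixed-size archive (illustrative only)."""
    name: str
    media_cost: float                 # cost of media for the whole archive, per purchase
    service_life_years: float         # years until media must be replaced; math.inf = permanent
    migration_cost: float             # cost of copying the archive onto new media
    energy_cost_per_year: float       # power needed to retain (not read) the data
    maintenance_cost_per_year: float  # scrubbing, integrity checks, monitoring


def total_cost(p: MediaProfile, years: int) -> float:
    """Cumulative cost of retaining the archive for `years` years."""
    if math.isinf(p.service_life_years):
        replacements = 0
    else:
        # Initial purchase at year 0, then one replacement each time the
        # service life runs out before the retention horizon ends.
        replacements = max(0, math.ceil(years / p.service_life_years) - 1)
    one_off = p.media_cost + replacements * (p.media_cost + p.migration_cost)
    recurring = years * (p.energy_cost_per_year + p.maintenance_cost_per_year)
    return one_off + recurring


hdd = MediaProfile("hdd", media_cost=100.0, service_life_years=5,
                   migration_cost=20.0, energy_cost_per_year=10.0,
                   maintenance_cost_per_year=5.0)
permanent = MediaProfile("permanent", media_cost=150.0,
                         service_life_years=math.inf, migration_cost=0.0,
                         energy_cost_per_year=0.0, maintenance_cost_per_year=0.0)

print(total_cost(hdd, 50), total_cost(permanent, 50))
```

With these illustrative numbers, 50 years of retention costs 1930 on the five-year medium versus 150 on the permanent one. The point is the shape of the comparison (costs that recur with every replacement cycle versus a one-time purchase), not the specific figures.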

But permanence alone isn’t enough. Access speed and bandwidth matter. For many large-scale AI workloads, a latency of a few seconds to first byte is entirely acceptable, as long as it is dramatically faster than tape.

Organizations are evaluating a range of emerging storage technologies to address long-term data growth, durability, access, and sustainability. Ceramic-based media is one option within this broader landscape: it can combine permanence (and therefore low cost) with fast access and high bandwidth.

Across the storage landscape, new materials and form factors are being developed to improve longevity and density beyond conventional media. In ceramic-based designs, the medium can consist of thin, flexible glass sheets, similar to the glass used in foldable smartphone displays. These sheets are coated with a thin, dark ceramic layer; data is written as physical bits (microscopic holes in the ceramic) and read optically.

Many storage systems emphasize parallelism to overcome throughput limitations. Rather than rotating like traditional optical discs (CDs, DVDs), ceramic-based media are typically square and stationary. Data is written and read as data matrices, so millions of bits can be written or captured at once, with a path toward GB/s throughput per write/read unit.
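A back-of-the-envelope calculation shows why handling a whole bit matrix at once matters. The sketch below assumes, hypothetically, a 2000 × 2000-bit matrix captured 2000 times per second, one bit per matrix cell, and an optional code rate for error-correction overhead; none of these figures come from a real device, and `unit_throughput_gb_per_s` is an illustrative name.

```python
def unit_throughput_gb_per_s(matrix_width: int, matrix_height: int,
                             frames_per_second: float,
                             code_rate: float = 1.0) -> float:
    """User-data throughput of one write/read unit that handles a full bit
    matrix per frame (all parameters hypothetical).

    code_rate is the fraction of raw bits that carry user data after
    error-correction overhead (1.0 = no overhead).
    """
    bits_per_frame = matrix_width * matrix_height        # one bit per matrix cell
    raw_bits_per_second = bits_per_frame * frames_per_second
    user_bytes_per_second = raw_bits_per_second * code_rate / 8
    return user_bytes_per_second / 1e9                   # decimal GB/s


# 4 million bits per frame at 2000 frames/s -> 8 Gbit/s -> 1 GB/s
print(unit_throughput_gb_per_s(2000, 2000, 2000))
```

By contrast, a reader that detects one bit at a time would need about 8 billion detections per second to reach the same 1 GB/s, which is why matrix-parallel reading is the natural route to GB/s rates.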

Automation and modularity are common themes across scalable archival storage platforms. Building on established library automation concepts from LTO tape, ceramic-on-glass media can be stacked by the hundreds in cartridges with the same outer form factor as LTO tape. But unlike tape, where a kilometer-scale ribbon must be wound out and rewound, ceramic-on-glass enables random access: the stack is separated at the sheet that contains the required data.

In many large-scale storage systems, initial access time is dominated by mechanical movement rather than data transfer rates. For ceramic-based architectures, time-to-first-byte is dominated by robotics moving a cartridge from its library slot to a reader and extracting the correct sheet. Performance can be scaled by deploying more readers per rack, matching throughput to each use case’s workload.
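The two claims in this paragraph (mechanics dominate time-to-first-byte; aggregate throughput scales with reader count) can be expressed as a simple model. This is a sketch under assumed, hypothetical timings; `LibraryModel` and its fields are illustrative names, and the model ignores queueing when several readers share one robot.

```python
import math
from dataclasses import dataclass


@dataclass
class LibraryModel:
    """Toy timing model of a robotic library (all values hypothetical)."""
    robot_move_s: float      # move a cartridge from its slot to a reader
    sheet_extract_s: float   # separate the stack and extract the target sheet
    first_read_s: float      # capture the first data matrix from the sheet
    reader_gb_per_s: float   # sustained throughput of a single reader

    def time_to_first_byte_s(self) -> float:
        return self.robot_move_s + self.sheet_extract_s + self.first_read_s

    def mechanical_share(self) -> float:
        """Fraction of time-to-first-byte spent on mechanical movement."""
        mechanical = self.robot_move_s + self.sheet_extract_s
        return mechanical / self.time_to_first_byte_s()

    def readers_needed(self, target_gb_per_s: float) -> int:
        """Readers per rack needed to sustain a target aggregate throughput."""
        return math.ceil(target_gb_per_s / self.reader_gb_per_s)


lib = LibraryModel(robot_move_s=8.0, sheet_extract_s=2.0,
                   first_read_s=0.01, reader_gb_per_s=1.0)
print(round(lib.time_to_first_byte_s(), 2))  # 10.01
print(round(lib.mechanical_share(), 3))      # 0.999
print(lib.readers_needed(25.0))              # 25
```

Under these assumptions the optical read is negligible: nearly all of the roughly 10 s is robotics. Adding readers therefore raises aggregate bandwidth but does not shorten time-to-first-byte for a single request; that would require faster mechanics.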

Future storage roadmaps rely on manufacturing scale and process reuse to control cost. By leveraging already-amortized semiconductor manufacturing tools, ceramic-based storage technologies aim to reach the density and cost per terabyte required in the coming decade, levels that current storage technologies struggle to scale to.

Finally, higher-level software and data management techniques play a critical role in improving efficiency regardless of the underlying media. Optimizations such as metadata strategies, dynamic scheduling, and tiering between previews and high-resolution objects operate above the media and storage technology layer and can further improve AI performance and storage efficiency.
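As one illustration of working above the media layer, the sketch below combines two of the techniques named here: serving a low-resolution preview from a fast tier when it is sufficient, and a simple form of recall scheduling that batches high-resolution requests so each cartridge is mounted once, with its sheets visited in order. The catalog (`Location`, the `index` dictionary), `serve`, `plan_recalls`, and the policy itself are hypothetical assumptions, not a description of any particular product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """Hypothetical metadata entry: where an object's full-resolution copy lives."""
    cartridge: str
    sheet: int


def serve(obj_id: str, need_full_res: bool, previews: dict, recall_queue: list):
    """Return a preview from the fast tier if it suffices; otherwise queue a recall."""
    if not need_full_res and obj_id in previews:
        return previews[obj_id]
    recall_queue.append(obj_id)
    return None


def plan_recalls(recall_queue: list, index: dict) -> list:
    """Group queued recalls by cartridge so each cartridge is mounted once.

    Returns (cartridge, sorted sheet numbers) pairs; duplicate requests for the
    same sheet collapse into a single read.
    """
    by_cartridge = defaultdict(set)
    for obj_id in recall_queue:
        loc = index[obj_id]
        by_cartridge[loc.cartridge].add(loc.sheet)
    return [(cart, sorted(sheets)) for cart, sheets in sorted(by_cartridge.items())]


index = {"a": Location("C1", 7), "b": Location("C2", 3),
         "c": Location("C1", 2), "d": Location("C1", 7)}
previews = {"a": b"thumb-a"}
queue: list = []
print(serve("a", False, previews, queue))   # b'thumb-a' (no recall needed)
for obj in ["a", "b", "c", "d"]:
    serve(obj, True, previews, queue)
print(plan_recalls(queue, index))           # [('C1', [2, 7]), ('C2', [3])]
```

Four full-resolution requests turn into two cartridge mounts and three sheet reads, which is the kind of saving that matters when, as noted above, robotics dominate access time.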

Unlocking AI’s Full Potential

This new class of storage enables AI to scale sustainably by combining permanence, cost and energy efficiency, and fast access to meet real-world demands. It removes the fundamental bottlenecks of today’s storage stack and better aligns data retention with the demands of the AI era.

Permanent, low-cost, quickly accessible data storage will be the catalyst that pushes AI past “better answers” into “new discoveries”, unlocking breakthroughs the brightest minds can only dream of today.