AI-powered cyber threats are categorically different from other cybersecurity concerns. Historically, cybersecurity threats fell into two main categories: highly targeted attacks, typically launched by advanced persistent threats (APTs) or nation-states, and broad, automated attacks – often nicknamed “spray and pray” – that exploited common vulnerabilities indiscriminately. By blending automation with adaptability, generative AI introduces a new class of threat.

AI-powered threats, unlike traditional automated attacks, can learn from defenses, exploit novel vulnerabilities, and mimic legitimate behavior, all at a fraction of the cost and effort previously required. For instance, AI can craft personalized phishing emails or dynamically adapt malware to bypass signature-based detection, enabling even less skilled attackers to launch sophisticated, scalable campaigns. This combination of accessibility and evolution sets AI-driven threats apart from conventional cybersecurity concerns.

As we continue to see, many organizations aren’t evolving their strategy quickly enough and therefore aren’t prepared for this type of attack. To keep up, they must shift from traditional, signature-based defenses to behavior-based approaches. Signature-based tools rely on recognizing known attack patterns, but AI-driven threats evolve too rapidly for these methods to remain effective. Behavioral defenses, powered by machine learning, instead detect anomalies in user or system activity, such as unusual login patterns. They require new technologies, expertise, and processes that many organizations lack, however, and AI is advancing faster than most security teams can update infrastructure or train staff, leaving gaps that attackers can exploit.
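To make the contrast concrete, here is a minimal sketch in Python, not a production detector. It assumes a signature check is a lookup of a payload hash in a set of known-bad hashes, and it stands in for a behavioral check with a simple z-score over a user's past login hours. The names `KNOWN_BAD_HASHES`, `signature_match`, and `is_anomalous_login`, the sample payloads, and the threshold of 3.0 are all hypothetical choices for illustration; real behavioral tools use far richer models.

```python
import hashlib
from statistics import mean, stdev

# Hypothetical signature database: SHA-256 digests of known-bad payloads.
KNOWN_BAD_HASHES = {hashlib.sha256(b"malicious-payload-v1").hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Signature-based check: flags only payloads whose hash is already known."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


def is_anomalous_login(history_hours, new_hour, threshold=3.0):
    """Behavioral check: flags a login hour far from the user's usual pattern.

    Simplification (assumption): hours are treated as plain numbers, so the
    wrap-around at midnight is ignored.
    """
    mu = mean(history_hours)
    sigma = stdev(history_hours)
    if sigma == 0:
        # No variation in the history: anything different is unusual.
        return new_hour != mu
    return abs(new_hour - mu) / sigma > threshold


# A trivially modified payload no longer matches the stored signature.
original_caught = signature_match(b"malicious-payload-v1")
variant_caught = signature_match(b"malicious-payload-v2")

# A user who normally logs in around 09:00 suddenly logs in at 03:00.
usual_hours = [9, 9, 10, 8, 9, 10, 9, 8]
night_login_flagged = is_anomalous_login(usual_hours, 3)
normal_login_flagged = is_anomalous_login(usual_hours, 10)
```

In this sketch the one-byte variant slips past the signature check, while the 03:00 login is flagged even though nothing about it matches a known attack pattern. That is the trade the paragraph above describes: behavioral checks generalize to unseen attacks but need baselines, tuning, and people who can interpret the alerts.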

The reduced cost and complexity of AI-powered attacks lower the barrier to entry for cybercriminals, driving a surge in both the number of attackers and the potency of their methods. For example, previously overlooked vulnerabilities, such as minor misconfigurations, can now be exploited at scale. Failure to prepare therefore leaves organizations vulnerable to more frequent and more effective attacks, with severe consequences such as data breaches, service disruptions, and financial losses. In practice, that could mean stolen customer data, regulatory penalties, operational downtime, and eroded trust, amplifying both immediate and long-term damage.

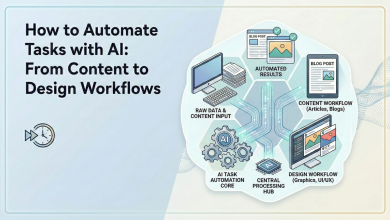

To counter AI-powered threats, organizations must leverage AI in their own defenses while strengthening foundational security practices.

Some strategies to consider:

- Adopt AI-driven tools: Deploy technologies like behavioral analytics and automated threat hunting to detect and respond to anomalies, such as irregular network traffic or account behavior, in real time.

- Enhance system resilience: Implement continuous updates and patch management to minimize exploitable vulnerabilities.

- Train personnel: Educate staff to recognize AI-generated threats, such as deepfake-based social engineering or sophisticated phishing attempts.

- Strengthen access controls: Enforce multi-factor authentication (MFA) and least-privilege policies to limit attackers’ reach.

- Prepare for incidents: Develop and test response plans tailored to AI-driven attacks, ensuring rapid containment and recovery.
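As one small illustration of the access-control item above, the following Python sketch audits a list of accounts for missing MFA and for permissions that were granted but never used, a rough proxy for least-privilege violations. The `Account` record, its fields, and the `audit_accounts` helper are hypothetical; in practice this data would come from an identity provider or access logs, which this sketch does not model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Account:
    """Hypothetical account record used only for this illustration."""
    name: str
    mfa_enabled: bool
    granted: Set[str] = field(default_factory=set)  # permissions assigned
    used: Set[str] = field(default_factory=set)     # permissions actually exercised


def audit_accounts(accounts: List[Account]) -> Dict[str, List[str]]:
    """Return a mapping of account name to a list of findings.

    Accounts with no findings are omitted from the result.
    """
    findings: Dict[str, List[str]] = {}
    for acct in accounts:
        issues = []
        if not acct.mfa_enabled:
            issues.append("MFA not enabled")
        unused = acct.granted - acct.used
        if unused:
            issues.append("unused permissions: " + ", ".join(sorted(unused)))
        if issues:
            findings[acct.name] = issues
    return findings


accounts = [
    Account("alice", True, {"read"}, {"read"}),
    Account("bob", False, {"read", "write", "admin"}, {"read"}),
]
report = audit_accounts(accounts)
```

Here `report` contains an entry only for `bob`, who lacks MFA and holds `admin` and `write` permissions that were never exercised. Unused permissions are not always wrong, so a report like this is a starting point for review rather than an automatic revocation list.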

AI opens up a whole new world for cybercriminals, but it can also be the key to defending against them. Recognizing the need to prepare and enacting the right strategies will make all the difference.