Lauded as a ‘must-have’ by vendors, the ‘AI Gateway’ promises to tame the AI maelstrom, enabling organisations to centralise and control access to AI applications or Large Language Models (LLMs). But as with any new technology, there is a certain element of bandwagon-jumping, and the functionality and capabilities of these gateways vary widely from vendor to vendor. As a result, ‘AI gateway’ has become something of a catch-all term, making it difficult to discern what the technology brings to the table and whether it will remain relevant as we enter the era of agentic AI.

It is, however, possible to break down the different types of offering. Early gateways essentially acted as routers, streamlining access to multiple LLMs. They can handle traffic to externally hosted models, such as those hosted by OpenAI, Anthropic, Google and Groq, as well as models developed or adopted in-house, and can provide access to hundreds of different models.

These AI gateways sit between the enterprise application and the AI service and look for the most efficient way of handling each prompt by passing it to the most appropriate LLM. Techniques include caching responses so that repeat requests are answered faster, and rerouting to another LLM if a provider’s performance degrades. Beyond assessing the performance and responses of the LLMs, gateway providers often bundle in additional features.
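To make the routing idea concrete, here is a minimal Python sketch of the two techniques just described: answering repeat prompts from a cache and failing over to the next model when one is unavailable. The provider functions, the `ProviderError` exception and the fixed priority order are assumptions for illustration; real gateways typically weigh richer signals such as latency, cost and error rates, and may cache on semantic similarity rather than exact prompt matches.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical provider type: takes a prompt and returns a completion,
# raising ProviderError if the model is unavailable or too slow.
Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a provider when it cannot serve a request."""


class SimpleGateway:
    """Toy AI gateway: serves repeat prompts from an exact-match cache and
    fails over to the next provider, in priority order, on error."""

    def __init__(self, providers: List[Tuple[str, Provider]]) -> None:
        self.providers = providers          # ordered by preference
        self.cache: Dict[str, str] = {}     # prompt -> completion

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (source, completion); source is 'cache' or a provider name."""
        if prompt in self.cache:
            return "cache", self.cache[prompt]
        errors: List[str] = []
        for name, call in self.providers:
            try:
                answer = call(prompt)
            except ProviderError as exc:
                errors.append(f"{name}: {exc}")
                continue                    # reroute to the next provider
            self.cache[prompt] = answer
            return name, answer
        raise ProviderError("all providers failed: " + "; ".join(errors))


# Usage with two stand-in providers: the primary is "down", the backup answers.
def primary(prompt: str) -> str:
    raise ProviderError("timeout")


def backup(prompt: str) -> str:
    return prompt.upper()


gateway = SimpleGateway([("primary", primary), ("backup", backup)])
print(gateway.complete("hello"))  # ('backup', 'HELLO')
print(gateway.complete("hello"))  # ('cache', 'HELLO')
```

The second call never reaches a model at all, which is where the speed (and cost) benefit of caching comes from.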

Gateways as gatekeepers

Most will offer authorisation, observability, and prompt control, as well as the ability to control costs. Given that AI processing is notoriously expensive, it has become increasingly apparent that businesses need to rein in AI’s appetite. AI gateways help here by blocking access to unauthorised AI services or by applying rate limits, which they can do because they track token consumption.
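The token-tracking point can be illustrated with a token bucket that counts LLM tokens rather than requests. This is a minimal sketch under several assumptions: the per-application model allowlist, the capacity and refill figures, and the idea that the gateway estimates a request’s token cost up front (for example, prompt tokens plus the requested output limit) are illustrative, not any particular vendor’s scheme.

```python
import time
from typing import Callable, Dict, Set, Tuple


class TokenBudget:
    """Per-client token bucket measured in LLM tokens, not requests.

    Each client can spend up to `capacity` tokens; the bucket refills at
    `refill_per_sec` tokens per second. A request whose estimated token
    cost exceeds what is left is rejected (a gateway might return HTTP 429)."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = float(capacity)
        self.refill_per_sec = refill_per_sec
        self.clock = clock  # injectable so the sketch can be tested
        self.buckets: Dict[str, Tuple[float, float]] = {}  # client -> (tokens, last_seen)

    def allow(self, client: str, tokens: int) -> bool:
        now = self.clock()
        left, last = self.buckets.get(client, (self.capacity, now))
        # Top the bucket up in proportion to elapsed time, capped at capacity.
        left = min(self.capacity, left + (now - last) * self.refill_per_sec)
        if tokens > left:
            self.buckets[client] = (left, now)
            return False
        self.buckets[client] = (left - tokens, now)
        return True


def admit(client: str, model: str, est_tokens: int,
          allowed_models: Dict[str, Set[str]], budget: TokenBudget) -> str:
    """Gatekeeping order: authorisation first, then the token budget."""
    if model not in allowed_models.get(client, set()):
        return "denied: model not authorised"
    if not budget.allow(client, est_tokens):
        return "denied: token budget exceeded"
    return "allowed"
```

Checking authorisation before the budget means a request for an unapproved model never consumes tokens. A production gateway would also typically reconcile the up-front estimate against the actual usage reported in the model’s response.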

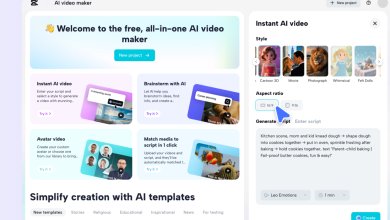

Some gateways not only facilitate multi-model access but also provide an environment in which to build AI applications. Developers can use the gateway’s foundational elements to supply the integration capabilities and guardrails their application needs. AI gateways can also standardise requests so that they can be interpreted by different models.
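Standardising requests usually means accepting one request format from applications and translating it for each provider. The sketch below assumes a hypothetical gateway-neutral `ChatRequest` and maps it to pared-down payloads in the style of OpenAI’s Chat Completions API (system prompt sent as the first message) and Anthropic’s Messages API (system prompt as a top-level field, with `max_tokens` required). The shapes are deliberately simplified; each provider’s API reference has the full schema.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class ChatRequest:
    """Hypothetical gateway-neutral request format used by applications."""
    model: str
    system: str
    messages: List[Dict[str, str]]  # [{"role": "user" | "assistant", "content": ...}]
    max_tokens: int = 512


def to_openai_style(req: ChatRequest) -> Dict[str, Any]:
    # Chat Completions style: the system prompt travels as the first message.
    return {"model": req.model,
            "messages": [{"role": "system", "content": req.system}, *req.messages]}


def to_anthropic_style(req: ChatRequest) -> Dict[str, Any]:
    # Messages API style: system prompt is a top-level field; max_tokens is required.
    return {"model": req.model, "max_tokens": req.max_tokens,
            "system": req.system, "messages": list(req.messages)}


ADAPTERS: Dict[str, Callable[[ChatRequest], Dict[str, Any]]] = {
    "openai": to_openai_style,
    "anthropic": to_anthropic_style,
}


def translate(provider: str, req: ChatRequest) -> Dict[str, Any]:
    """Convert one standard request into the payload shape a provider expects."""
    return ADAPTERS[provider](req)


req = ChatRequest(model="example-model", system="Answer briefly.",
                  messages=[{"role": "user", "content": "What is an AI gateway?"}])
print(translate("anthropic", req)["system"])  # Answer briefly.
```

Because applications only ever build a `ChatRequest`, adding a new model provider means writing one more adapter in the gateway rather than changing every application.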

Other vendors have taken a more security-conscious approach. Mindful of the vulnerabilities to which LLM applications are subject (the OWASP Top 10 for LLM Applications is a good primer), their gateways also offer security and policy enforcement to mitigate these risks, along with controls such as secure storage for AI provider keys and Data Loss Prevention (DLP).
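As a rough illustration of DLP at the gateway, the sketch below redacts sensitive-looking strings from a prompt before it is forwarded to a model. The three regular expressions (email addresses, `sk-`-prefixed secret keys and 13 to 16 digit card-like numbers) are illustrative assumptions only; commercial DLP engines use far richer detectors, validation such as Luhn checks, and surrounding context.

```python
import re
from typing import List, Tuple

# Illustrative detectors only; real DLP engines are far more thorough.
DETECTORS: List[Tuple[str, re.Pattern]] = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("API_KEY", re.compile(r"\bsk-[A-Za-z0-9_-]{16,}")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(prompt: str) -> Tuple[str, List[str]]:
    """Replace sensitive-looking substrings with placeholders before the
    prompt leaves the gateway; return the redacted text and what was found."""
    findings: List[str] = []
    for label, pattern in DETECTORS:
        prompt, count = pattern.subn(f"[{label}]", prompt)
        findings.extend([label] * count)
    return prompt, findings


clean, found = redact("Contact alice@example.com, key sk-abcdefghijklmnop1234, "
                      "card 4111 1111 1111 1111.")
print(clean)  # Contact [EMAIL], key [API_KEY], card [CARD].
print(found)  # ['EMAIL', 'API_KEY', 'CARD']
```

The list of findings is what a gateway would feed into its logging or policy engine, for instance to block the request outright rather than forward a redacted version.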

The primary aim of these AI gateways is to track and control access to Generative AI (GenAI). While they do enable the business to manage multiple models and provide visibility, they range from the complex to the fairly simplistic, with some using a single API endpoint to handle the flow of traffic. They’re also geared towards handling GenAI applications rather than agentic AI, which means their usefulness could well become limited.

Moving to agentic AI

Agentic AI rewrites the rules: whereas GenAI typically relies on human prompts, agentic AI carries out machine-to-machine requests independently. Keen to cash in on the productivity gains, organisations are already building prototypes, but many are coming unstuck when they try to scale and protect these projects. They’re typically hacking together single-user prototypes, connecting AI agents to critical systems without sufficient security, oversight, or context.

A major cause of these issues is that organisations are struggling to create Model Context Protocol (MCP) servers secure enough to protect these fledgling projects. MCP has emerged as the de facto standard for connecting AI agents to enterprise applications and APIs. Devised by Anthropic, it provides the common language agentic AI needs to interact with different data sources, tools and repositories via their APIs. It’s not a substitute for APIs but rather a universal way for agents to interact with them.

What’s interesting is that AI gateways are now facilitating access to multiple MCP servers. In an MCP architecture, an agentic AI client such as Claude Desktop can access different resources via multiple MCP servers. Perhaps one MCP server talks to the APIs of applications and data sources while another is used to access proprietary data services (see diagram below). Yet, as with generative AI, centralising that access can provide significant benefits.

Running agent requests via an AI gateway can improve both security and speed of response through API management. The more capable offerings allow requesting clients to discover APIs and provide crucial enterprise security services such as authentication, authorisation, monitoring, and logging.
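The aggregation pattern can be sketched in a few lines. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are methods defined by the protocol; everything else here is an assumption for illustration. The in-process backends stand in for real MCP server connections, and the `server__tool` naming convention is just one way a gateway might keep tool names from different servers distinct while letting a client discover them all through a single endpoint.

```python
from typing import Any, Callable, Dict, List

JsonRpc = Dict[str, Any]
# A backend is anything that takes a JSON-RPC request and returns a response.
# In a real deployment it would wrap an HTTP or stdio connection to an MCP server.
Backend = Callable[[JsonRpc], JsonRpc]
SEP = "__"  # hypothetical namespacing separator; server names must not contain it


def error(req_id: Any, code: int, message: str) -> JsonRpc:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


class McpGateway:
    """Presents several MCP servers to an agent as a single server."""

    def __init__(self, backends: Dict[str, Backend]) -> None:
        self.backends = backends

    def handle(self, request: JsonRpc) -> JsonRpc:
        method, req_id = request.get("method"), request.get("id")
        if method == "tools/list":
            # Discovery: merge every backend's tools under namespaced names.
            tools: List[Dict[str, Any]] = []
            for server, backend in self.backends.items():
                resp = backend({"jsonrpc": "2.0", "id": req_id, "method": "tools/list"})
                for tool in resp["result"]["tools"]:
                    tools.append({**tool, "name": f"{server}{SEP}{tool['name']}"})
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":
            # Routing: strip the namespace and forward to the owning server.
            server, _, tool = request.get("params", {}).get("name", "").partition(SEP)
            if server not in self.backends or not tool:
                return error(req_id, -32602, "Unknown tool")
            params = {**request["params"], "name": tool}
            return self.backends[server]({**request, "params": params})
        return error(req_id, -32601, "Method not found")


def fake_server(tool_names: List[str]) -> Backend:
    """Hypothetical in-process stand-in for an MCP server."""
    def backend(req: JsonRpc) -> JsonRpc:
        if req["method"] == "tools/list":
            tools = [{"name": n, "description": n, "inputSchema": {"type": "object"}}
                     for n in tool_names]
            return {"jsonrpc": "2.0", "id": req["id"], "result": {"tools": tools}}
        text = f"called {req['params']['name']}"
        return {"jsonrpc": "2.0", "id": req["id"],
                "result": {"content": [{"type": "text", "text": text}]}}
    return backend


gateway = McpGateway({"crm": fake_server(["find_customer"]),
                      "docs": fake_server(["search"])})
```

Because every agent request now passes through `handle`, it is also the natural single point at which to attach the authentication, authorisation, monitoring and logging services described above.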

MCP as a target

However, because most MCP servers are public-facing, they can pose a significant risk. There have been numerous reports of data leakage associated with MCP servers, a prime example being the vulnerability affecting the GitHub MCP integration, discovered by security researchers back in May. It allowed attackers to hijack AI agents: when a developer asked their AI assistant to check for open issues, the agent would read a malicious issue, be prompt-injected, and follow its instructions to access private repositories and leak their data.

The GitHub prompt injection attack perfectly illustrates the security problem posed by MCP servers and the need for better controls to prevent agents going rogue. It also serves as a warning: AI agents left unchecked, without controls or human oversight, are liable to pose an even greater threat. But if that traffic is routed via an AI gateway, it becomes possible to monitor agent interactions in real time.

Because the agent is given an identity and a subset of the user’s access, it becomes possible to check what actions it has taken and which tools it has used. By monitoring this activity, the gateway can determine whether an agent is at risk of going rogue and stop any abuse in real time, before the agent can run amok.
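A minimal sketch of that idea follows, with every name and threshold hypothetical. The agent’s permissions are computed as the intersection of what the user holds and what the agent requests, every tool call is written to an audit trail, and repeated attempts to use out-of-scope tools (the kind of behaviour a prompt-injected agent might exhibit in the GitHub case) cause the gateway to suspend the agent. Real products would likely use richer behavioural signals than a simple denial count.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Set


@dataclass
class AgentIdentity:
    """Hypothetical per-agent record held by the gateway."""
    agent_id: str
    on_behalf_of: str
    allowed_tools: Set[str]
    max_denials: int = 3                       # illustrative threshold
    denials: int = 0
    suspended: bool = False
    audit: List[str] = field(default_factory=list)


def delegate(agent_id: str, user: str, user_tools: Iterable[str],
             requested: Iterable[str]) -> AgentIdentity:
    """Grant the agent only the tools it asks for that the user already holds."""
    return AgentIdentity(agent_id, user, set(user_tools) & set(requested))


def authorise_call(agent: AgentIdentity, tool: str) -> bool:
    """Check one tool call, record it, and suspend the agent on repeated abuse."""
    if agent.suspended:
        agent.audit.append(f"BLOCKED (suspended) {tool}")
        return False
    if tool in agent.allowed_tools:
        agent.audit.append(f"ALLOWED {tool}")
        return True
    agent.denials += 1
    agent.audit.append(f"DENIED {tool}")
    if agent.denials >= agent.max_denials:
        # Repeated out-of-scope attempts are treated as a sign the agent
        # may have been hijacked, so it is cut off immediately.
        agent.suspended = True
        agent.audit.append("SUSPENDED")
    return False


agent = delegate("triage-bot", "alice",
                 user_tools={"issues.read", "repo.read_private"},
                 requested={"issues.read"})
print(authorise_call(agent, "issues.read"))        # True
print(authorise_call(agent, "repo.read_private"))  # False: never delegated
```

Even though Alice herself can read private repositories, the agent was never granted that tool, so an injected instruction to read them is refused and logged.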

Gateways as servers

Finally, while an AI gateway can simply be used to communicate with MCP servers, crucially, it can also create them. Businesses struggling to deploy an enterprise-grade agentic AI solution can therefore create the MCP server within the gateway itself. The best examples accomplish this without coding, using standardised API specifications as the primary input. This can drastically reduce the cost, complexity and time associated with deployment: one company reported that an agentic AI project expected to take six months was completed in just 48 hours.
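To show how a gateway might turn a standardised API specification into an MCP server’s tool list, the sketch below derives MCP tool definitions (each a `name`, `description` and JSON Schema `inputSchema`, as the MCP specification describes tools) from the operations in an OpenAPI 3 document. It is deliberately simplified: it does no `$ref` resolution and ignores path-level parameters, security schemes and non-JSON bodies. The fallback naming rule for operations without an `operationId` is an assumption.

```python
import re
from typing import Any, Dict, List

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def openapi_to_mcp_tools(spec: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Derive MCP tool definitions from the operations in an OpenAPI 3 document.

    Simplified sketch: ignores $ref resolution, path-level parameters,
    security schemes and non-JSON request bodies."""
    tools: List[Dict[str, Any]] = []
    for path, path_item in spec.get("paths", {}).items():
        for method, op in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            properties: Dict[str, Any] = {}
            required: List[str] = []
            for param in op.get("parameters", []):
                properties[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required"):
                    required.append(param["name"])
            body = op.get("requestBody", {})
            json_body = body.get("content", {}).get("application/json")
            if json_body is not None:
                properties["body"] = json_body.get("schema", {"type": "object"})
                if body.get("required"):
                    required.append("body")
            # Hypothetical fallback name when the spec has no operationId.
            fallback = re.sub(r"[^A-Za-z0-9_]", "_", f"{method}_{path.strip('/')}")
            tools.append({
                "name": op.get("operationId") or fallback,
                "description": op.get("summary") or op.get("description")
                               or f"{method.upper()} {path}",
                "inputSchema": {"type": "object", "properties": properties,
                                "required": required},
            })
    return tools


spec = {
    "openapi": "3.0.3",
    "paths": {
        "/customers/{id}": {"get": {
            "operationId": "getCustomer", "summary": "Fetch one customer",
            "parameters": [{"name": "id", "in": "path", "required": True,
                            "schema": {"type": "string"}}]}},
        "/orders": {"post": {
            "requestBody": {"required": True, "content": {
                "application/json": {"schema": {"type": "object"}}}}}},
    },
}
print([t["name"] for t in openapi_to_mcp_tools(spec)])  # ['getCustomer', 'post_orders']
```

The gateway would then serve these definitions in response to `tools/list` and translate each `tools/call` back into the corresponding HTTP request, which is why a well-maintained API specification does most of the work.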

As with any protocol, the MCP specification has changed over time and will likely continue to do so. With that in mind, look for a solution that can manage the complexity of supporting new versions as they appear.
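One concrete place where versioning surfaces is MCP’s `initialize` handshake. The client proposes a `protocolVersion`; the server echoes it if supported or otherwise responds with another version it supports, which should be its latest; and if the client cannot work with that version, it should disconnect. The sketch below implements that rule for a hypothetical gateway. The supported-version list and the `serverInfo` values are assumptions, while the date strings are published MCP specification revisions.

```python
from typing import Any, Dict, Sequence

# MCP revisions this hypothetical gateway supports (date-stamped spec versions).
SUPPORTED_VERSIONS = ("2024-11-05", "2025-03-26", "2025-06-18")


def negotiate(requested: str, supported: Sequence[str] = SUPPORTED_VERSIONS) -> str:
    """Server-side rule: echo the client's version if we support it,
    otherwise offer the latest version we do support."""
    if requested in supported:
        return requested
    return max(supported)  # YYYY-MM-DD strings sort chronologically


def initialize_result(request: Dict[str, Any]) -> Dict[str, Any]:
    """Build the JSON-RPC response to an MCP `initialize` request."""
    version = negotiate(request["params"]["protocolVersion"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": version,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "example-gateway", "version": "0.1.0"},
        },
    }


def client_should_continue(server_version: str, client_supported: Sequence[str]) -> bool:
    """Client-side rule: disconnect if the server's choice is unsupported."""
    return server_version in client_supported
```

A gateway sitting between many agents and many MCP servers may need to run this negotiation separately on each side and translate between revisions, which is where much of the version-handling complexity tends to live.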

What’s clear is that AI gateways have played, and will continue to play, a vital role in organising, facilitating, and governing agent access. These platforms enable users and developers to use and build AI safely, and are now ensuring that agent interactions are conducted securely, reducing the risk of data leakage or agent abuse. Increasingly, they are also enabling businesses to build and manage their own agentic AI deployments and even their own MCP servers. In doing so, they could prove to be the key to ensuring the technology realises its potential rather than leaving behind a mountain of technical debt.