When large sea creatures and vessels meet, the outcome can be catastrophic. Maritime traffic is a major threat to large marine mammals and is of particular concern for critically endangered species such as the North Atlantic right whale, whose population is estimated at fewer than 350 animals.

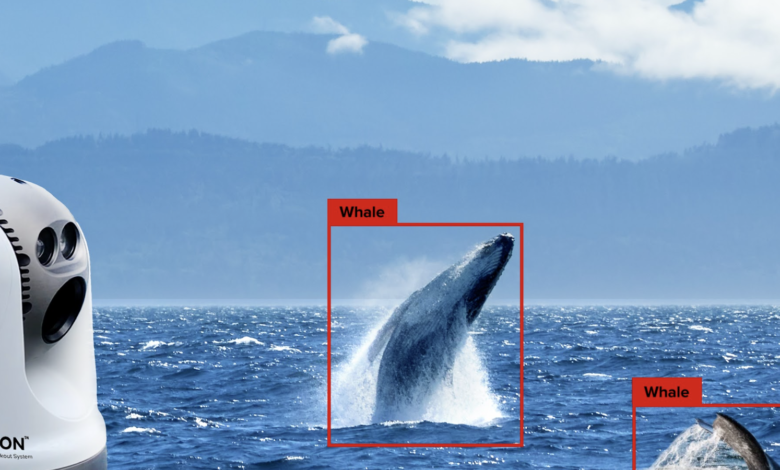

AI-driven computer perception technologies can, and should, play an important role in protecting vulnerable whale populations. Detection systems, including autonomous shipboard technology, along with data mapping, are showing promise in reducing the risk of vessel strikes and sonar exposure by combining advances in deep learning and computer vision.

Aiding Human Lookouts

It is impossible to know exactly how many whales are mortally wounded in encounters with ships, but one study by the nonprofit conservation organization Friend of the Sea estimates that more than 20,000 whales are killed by ship strikes each year. Several species are especially vulnerable because their seasonal habitats and migration routes lie near busy ports and overlap with shipping lanes.

Even when crews exercise the utmost caution, human lookouts are limited by their field and range of vision, as well as by fatigue. The problem is compounded because, unless whales are breaching or producing large blows, they can be very difficult to spot.

Over the past decade, rapid advances in deep learning have greatly improved machines' ability to perceive and accurately interpret their surroundings, including identifying specific objects and animals for situational awareness, surface perception, and environment modeling.

Integrated AI and computer-vision systems, whose cameras sweep the horizon to autonomously find and track objects such as whales and fishing gear, are increasingly capable, achieving marine mammal detection accuracy rates of 80 percent at ranges of up to three kilometers.

Addressing Data Gaps

The biggest challenge with these types of systems is teaching deep-learning neural networks to identify whales. AI performance depends fundamentally on the quality, quantity, and representativeness of training data. Recent advances in deep learning have been driven partly by the public availability of large datasets containing annotated images of many different objects, including a variety of animal species. Training neural networks on the whale data in these datasets poses an interesting challenge.

For land animals, pictures and videos are abundant, including footage of them doing relatively boring, everyday things. In contrast, pictures and videos of marine mammals almost always show them doing something exciting right in front of the camera, and this is especially true of the publicly available annotated datasets. In the real world, however, these animals are usually doing nothing more exciting than swimming and breathing. As a result, the available training data is not representative of what a detection system actually sees at sea.

I realized this during a cruise in Alaska with my family. I was on deck when I noticed a tiny smudge far out on the water and was told that the little sliver of black in the distance was a whale. That moment crystallized the major hurdle facing automated whale detection: the targets are small, hard to find, and look nothing like the dramatic images we are used to seeing of whales leaping out of the water.
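To put that "tiny smudge" in numbers, the sketch below estimates how many pixels a surfacing whale spans across a camera image at a given range, using the angle the target subtends as a fraction of the camera's field of view. The function name `pixels_on_target`, the 3-meter visible target, the 10-degree field of view, and the 4,000-pixel sensor width are all illustrative assumptions rather than the specifications of any real system, and the model ignores Earth curvature, haze, and sea state.

```python
import math


def pixels_on_target(target_m: float, range_m: float, fov_deg: float, sensor_px: int) -> float:
    """Approximate pixels spanned by a target across the image width.

    Hypothetical helper for illustration. The subtended angle 2*atan(L / 2R)
    is divided by the horizontal field of view and scaled by the sensor width,
    assuming pixels are spread evenly across that field of view.
    """
    if range_m <= 0 or fov_deg <= 0 or sensor_px <= 0:
        raise ValueError("range, field of view and sensor width must be positive")
    subtended_rad = 2.0 * math.atan(target_m / (2.0 * range_m))
    return sensor_px * subtended_rad / math.radians(fov_deg)


if __name__ == "__main__":
    # Assumed camera: 10-degree horizontal FOV, 4000 px wide; assumed 3 m of visible back.
    for range_m in (500.0, 1000.0, 3000.0):
        px = pixels_on_target(3.0, range_m, 10.0, 4000)
        print(f"{range_m:>6.0f} m -> {px:5.1f} px")
```

Under these assumptions, the visible part of a whale shrinks from about 140 pixels across at 500 meters to roughly 23 pixels at 3 kilometers, which is far smaller than the close-up whales that dominate typical annotated datasets.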

To build systems that accurately detect marine mammals at ranges long enough to give large vessels time to take evasive action, we need to annotate a lot of boring whale images.

As bad as the problem is for visible-light imagery, it is even worse for thermal infrared (IR), where there is no annotated imagery at all. In IR, warm whales stand out distinctly against cold water, so the potential benefits of these sensors for whale detection are significant. For both visible and IR systems, annotated data is critical to accuracy. The more the scientific community can share, the better.
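The idea that a warm whale stands out against cold water can be illustrated with a simple statistical threshold on a thermal frame: estimate the water background, then flag pixels far warmer than it. This is a toy stand-in for a trained detector, not how any particular system works; the function name `warm_anomalies`, the median/MAD background model, and the 5-sigma default are assumptions chosen for illustration, and the frame is represented as plain nested lists of temperatures.

```python
import statistics
from typing import List, Tuple


def warm_anomalies(frame: List[List[float]], k: float = 5.0) -> List[Tuple[int, int]]:
    """Return (row, col) positions of pixels much warmer than the water background.

    Hypothetical helper for illustration. The background is the median pixel
    value and the spread is the median absolute deviation (MAD), so a few warm
    pixels do not inflate the threshold the way a mean and standard deviation would.
    """
    values = [v for row in frame for v in row]
    background = statistics.median(values)
    mad = statistics.median(abs(v - background) for v in values)
    # 1.4826 scales the MAD to a standard deviation for Gaussian noise;
    # a tiny floor avoids a zero threshold width on perfectly uniform frames.
    sigma = 1.4826 * mad if mad > 0 else 1e-6
    threshold = background + k * sigma
    return [(r, c) for r, row in enumerate(frame)
            for c, v in enumerate(row) if v > threshold]


if __name__ == "__main__":
    # Synthetic 5x5 "ocean" near 10 °C with small variations and one warm pixel.
    frame = [[10.0 + 0.1 * ((r + c) % 3 - 1) for c in range(5)] for r in range(5)]
    frame[2][3] = 14.0
    print(warm_anomalies(frame))
```

In practice, waves, birds, and other warm clutter could also cross a fixed threshold like this one, which is one reason annotated IR imagery for training learned detectors is so valuable.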

Supporting Wildlife Conservation & Clean Energy Infrastructure

AI-powered computer-vision systems have extensive and exciting applications in animal protection and conservation. The offshore wind industry is embracing the technology to help meet regulatory requirements for protecting vulnerable marine species across all phases of wind-farm development and operation.

As advances continue and hardware costs come down, we can apply the technology to address any number of challenges surrounding clean energy infrastructure, nature conservation, and wildlife protection.

From tracking animal migration patterns and population counts to conducting remote wind turbine inspections, autonomous computer-vision systems have the potential to significantly aid in a wide range of environmental efforts.