Artificial intelligence is evolving quickly. We’re shifting from passive tools to active agents that can make decisions on their own. That shift is powerful, but it also comes with risk. More and more, we’re placing trust in systems we don’t fully understand.

That’s a growing concern.

As agentic AI gains momentum, businesses are rolling out systems that can act, adapt, and strategize, often with little to no human input. We’re moving from AI as decision support to AI as decision maker. But have we stopped to ask: What does it really mean to trust a machine?

Defining Agentic AI

Agentic AI refers to systems that go beyond passive response generation. These systems can set goals, plan, make decisions, and act independently in dynamic environments. They are not simply tools but actors with autonomy. That distinction changes the nature of risk and responsibility.

The Illusion of Explainability

In human relationships, trust is built on our ability to interpret behavior. We form mental models of what other people believe and intend, an ability psychologists call “theory of mind.” We might not fully understand someone, but we can make sense of their actions and intentions.

AI doesn’t offer the same clarity. Code repositories and model weights don’t tell us why a system made a particular decision, any more than a brain scan reveals a person’s motives. We’re often left with black-box models producing outputs we can’t easily trace.

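Techniques like chain-of-thought prompting help by surfacing intermediate reasoning steps. But they offer only partial insight: glimpses, not full explanations. The steps a model writes out are not guaranteed to reflect the computation that actually produced its answer.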

And with agentic AI, the stakes get higher. These systems aren’t just answering prompts. They’re setting goals, building plans, and navigating open environments. If an AI agent does something unexpected, who takes responsibility?

A Real-World Example: Autonomy in Customer Support

A compelling example of agentic AI in action comes from Salesforce’s Agentforce 2.0 platform. This system is designed to handle customer service tasks, like processing product returns and issuing refunds, entirely autonomously. It doesn’t just respond to queries; it interprets customer intent, makes decisions, and takes action without human oversight.

While this has driven efficiency, it has also raised concerns. What happens when the agent makes a decision that technically fulfills a task but damages customer experience or oversteps policy boundaries? Who audits those decisions? And how do we ensure such systems remain aligned with company values as they scale?

This example highlights the need for structured oversight and clear accountability in deploying agentic AI. High performance on average isn’t enough—every decision must align with human goals.

Capability ≠ Trustworthiness

There’s a tendency to conflate competence with reliability. Just because an AI system can do something doesn’t mean it should—at least not without controls, monitoring, and thoughtful design.

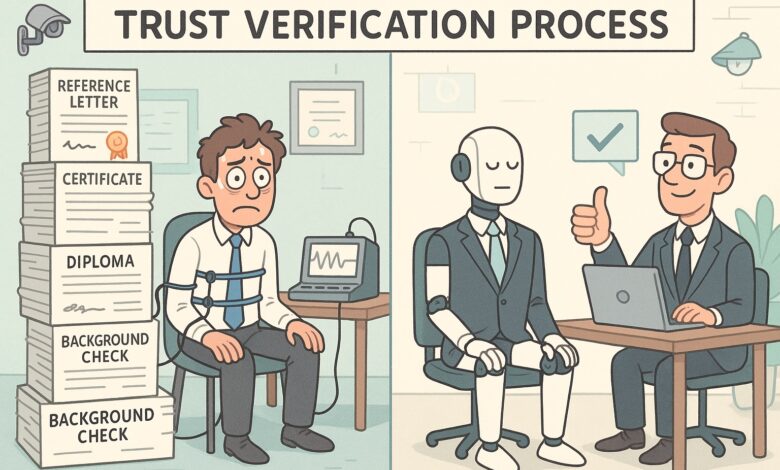

Trust in people is earned over time. It’s based on experience, transparency, and consistent behavior. The same should apply to machines. We need:

- Ongoing visibility into how decisions are made

- Monitoring for unintended behaviors and goal drift

- Clear boundaries around autonomy

- Human-in-the-loop checks for high-impact or low-confidence decisions
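To make "clear boundaries" and "human-in-the-loop checks" concrete, here is a minimal Python sketch of a gate that a refund-handling agent might pass every proposed action through. The policy, the thresholds (`MAX_AUTO_REFUND`, `RETURN_WINDOW_DAYS`, `MAX_REFUND`, `MIN_AUTO_CONFIDENCE`), and the `route_refund` function are all hypothetical illustrations, not Salesforce's or any other vendor's API. It also assumes the agent reports a confidence score between 0 and 1.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


@dataclass(frozen=True)
class RefundRequest:
    order_id: str
    amount: float              # refund amount in the store's currency
    days_since_purchase: int


# Hypothetical policy limits; real values would come from company policy.
MAX_AUTO_REFUND = 200.0        # above this, a human must sign off
RETURN_WINDOW_DAYS = 30        # outside this window, a human must sign off
MAX_REFUND = 5000.0            # above this, the agent may never act
MIN_AUTO_CONFIDENCE = 0.8      # below this, the agent defers to a human


def route_refund(req: RefundRequest, agent_confidence: float) -> Route:
    """Decide whether the agent may act alone, must escalate, or must refuse."""
    # Hard boundary: requests outside policy are never executed.
    if req.amount <= 0 or req.amount > MAX_REFUND:
        return Route.REJECT
    # Human-in-the-loop: high-value, out-of-window, or low-confidence cases escalate.
    if (req.amount > MAX_AUTO_REFUND
            or req.days_since_purchase > RETURN_WINDOW_DAYS
            or agent_confidence < MIN_AUTO_CONFIDENCE):
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPROVE


print(route_refund(RefundRequest("A1", 49.99, 10), 0.95).value)  # auto_approve
print(route_refund(RefundRequest("A2", 450.0, 10), 0.95).value)  # human_review
```

The design choice worth noting is that the boundary lives outside the agent: the model proposes an action, but a small, auditable function decides whether it may act alone.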

Building a Better Standard for Machine Trust

We need a practical, outcomes-based framework for evaluating trust in agentic AI. The question is not whether we understand the code, but whether we can anticipate, audit, and test the system’s actions.

That means asking:

- Can we predict what the system will do in high-stakes or unusual conditions?

- Can we trace its decision logic after the fact?

- Can we test edge cases before deployment?

A strong trust framework might include:

- Behavioral consistency: Does the agent respond the same way in similar contexts?

- Context awareness: Can it maintain alignment across changing environments?

- Failsafe protocols: Are there triggers to pause or halt behavior when outcomes are unclear?

- Traceability: Are decision pathways logged and reviewable?
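Several of these criteria can be wired directly into an agent's runtime. The following is a minimal Python sketch, assuming a hypothetical `policy` function that maps a context to an (action, confidence) pair; `AuditedAgent`, the `PAUSE_FOR_REVIEW` action, and the 0.7 threshold are invented for illustration. It shows failsafe pausing, an append-only decision log for traceability, and a simple repeated-run check for behavioral consistency. Context awareness is harder to reduce to a few lines and is not covered.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Callable, List, Tuple

PAUSE = "PAUSE_FOR_REVIEW"  # hypothetical sentinel action


@dataclass
class DecisionRecord:
    timestamp: float
    context: dict
    action: str
    confidence: float
    halted: bool


@dataclass
class AuditedAgent:
    # Hypothetical interface: policy(context) -> (proposed_action, confidence in [0, 1])
    policy: Callable[[dict], Tuple[str, float]]
    min_confidence: float = 0.7  # illustrative failsafe threshold
    log: List[DecisionRecord] = field(default_factory=list)

    def act(self, context: dict) -> str:
        action, confidence = self.policy(context)
        # Failsafe protocol: pause instead of acting when the outcome is unclear.
        halted = confidence < self.min_confidence
        if halted:
            action = PAUSE
        # Traceability: record every decision with a copy of its inputs.
        self.log.append(
            DecisionRecord(time.time(), dict(context), action, confidence, halted)
        )
        return action

    def consistency_rate(self, context: dict, trials: int = 10) -> float:
        """Behavioral consistency: share of repeated runs that agree with the
        most common action for the same context. Test runs are logged too."""
        actions = [self.act(context) for _ in range(trials)]
        most_common = max(set(actions), key=actions.count)
        return actions.count(most_common) / trials

    def export_log(self) -> str:
        """Serialize the decision log as JSON for offline review."""
        return json.dumps([asdict(r) for r in self.log], indent=2)


# A toy, deterministic policy used only to exercise the wrapper.
def toy_policy(ctx: dict) -> Tuple[str, float]:
    if ctx.get("amount", 0) > 100:
        return "ESCALATE", 0.9
    return "REFUND", (0.95 if ctx.get("in_window") else 0.4)


agent = AuditedAgent(policy=toy_policy)
print(agent.act({"amount": 20, "in_window": True}))   # REFUND
print(agent.act({"amount": 20, "in_window": False}))  # PAUSE_FOR_REVIEW
print(agent.consistency_rate({"amount": 500}))        # 1.0
```

A deterministic toy policy will always score 1.0 on the consistency check. An agent that samples its outputs, such as a language model run at nonzero temperature, may not, and surfacing that variation before deployment is the point of the check.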

With these in place, trust becomes something earned and evaluated, not assumed.

Lessons from the Past

History offers warnings. In the early 2000s, algorithmic trading systems were given wide autonomy. On May 6, 2010, that trust was shaken by the Flash Crash, a sudden market plunge that temporarily wiped out nearly $1 trillion in value within minutes. The cause? A large automated sell order, amplified by high-frequency trading algorithms reacting to one another at machine speed. Complexity had outpaced control.

We’re approaching a similar point with agentic AI. From marketing to logistics, businesses are giving agents room to act. But how many of these systems are testable, auditable, and robust in the real world?

Misplaced Trust Has Consequences

When trust is assumed rather than earned, the risks are more than technical. They become reputational, legal, and ethical. Think of an agent making biased hiring decisions, or a supply chain AI that chooses cost over sustainability. These aren’t hypotheticals: in 2018, Reuters reported that Amazon had scrapped an experimental recruiting tool after finding that it downgraded résumés containing the word “women’s.”

Without a strong foundation, trust becomes fragile. And once broken, it’s hard to rebuild.

Where We Go From Here

Agentic AI can be transformative. But that transformation won’t be sustainable unless trust is treated as a core design requirement. Institutions like NIST have already outlined frameworks, such as the AI Risk Management Framework (AI RMF 1.0), to guide responsible AI deployment and risk management. This isn’t a call to slow down innovation. It’s a call to be smarter, more deliberate, and more accountable as we move forward.

Companies should ask tougher questions, set higher standards, and invest in oversight tools. Not because they’re afraid of AI, but because they respect its power. The future will reward those who build systems we can rely on: clear, consistent, and transparent.

So here’s the real question: If you don’t understand how an agent thinks, why would you trust it?