Our interactions with machines have changed significantly, with applications ranging from smart chatbots to sentiment analysis. Today, Artificial Intelligence (AI) and Natural Language Processing (NLP) have become indispensable in many tech applications. However, the true game-changers are Generative Pre-trained Transformers, or GPT models. These models have boosted the capabilities of existing applications and unlocked new possibilities in AI.

What are GPT Models?

GPT models are a class of AI models, some of them open source, that use transformers to understand and generate human-like text based on the input they are given. Transformers, simply put, are a deep learning architecture that allows models to process and produce text meaningfully.

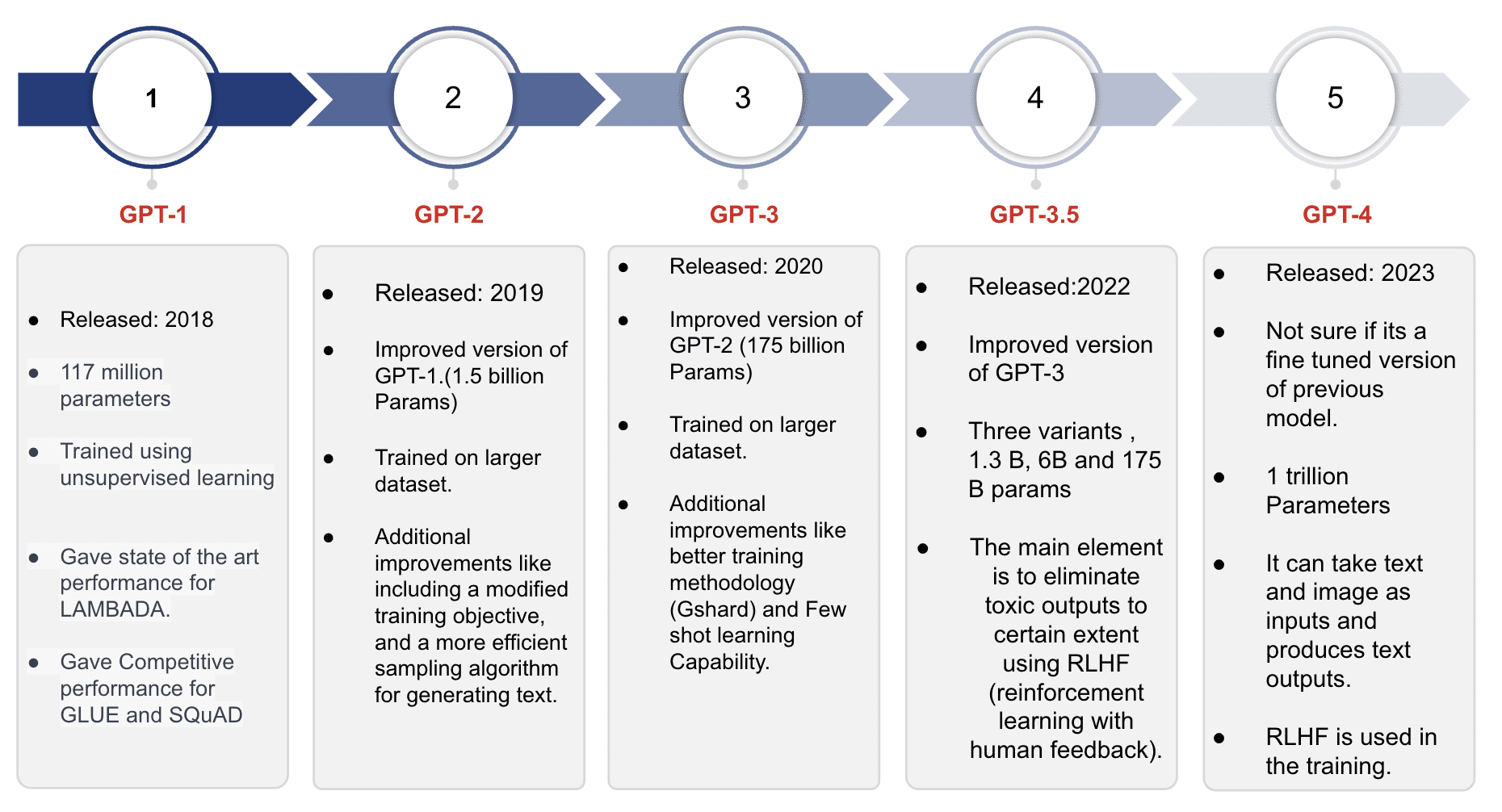

OpenAI started developing the GPT models with the introduction of GPT-1 in 2018. The model showed remarkable results on several language tasks, establishing the effectiveness of the approach.

The following year, OpenAI released GPT-2, which improved on the previous model by training on a wider set of data. This version could generate highly coherent text, a capability that prompted discussions about the ethical implications of powerful language models.

GPT-3 was released in 2020 with 175 billion parameters. At its launch, it was one of the largest and most powerful language models ever built. It can perform a wide range of tasks with minimal fine-tuning, from answering questions to writing creative essays, making it a versatile tool in the Generative AI toolkit.

In 2023, OpenAI introduced GPT-4, the fourth version of GPT, which improved on its predecessors. With enhanced understanding, it could produce even more accurate and nuanced text.

Finally, the latest GPT model at the time of writing is GPT-4o, launched in 2024. Its highlight is efficiency: it significantly reduces computational requirements. Rather than pursuing resource-intensive, high-performance goals, this release aims to reach broader sectors while maintaining strong performance levels.

How Do GPT Models Work?

The transformer architecture is the foundation of GPT models. Unlike earlier sequential models such as recurrent neural networks, which read text one token at a time, transformers process data in parallel using self-attention mechanisms, which allows them to handle long-range dependencies more effectively.

Additionally, self-attention mechanisms enable the model to focus on different parts of the input sequence by assigning varying importance to each word. This helps the model understand context and generate coherent text.
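
As a rough illustration of how attention assigns varying importance to each word, here is a minimal sketch of scaled dot-product self-attention in plain Python. The three tokens and their 2-dimensional embeddings are made up for the example, and the embeddings are used directly as queries, keys, and values. A real GPT model would first apply learned projection matrices, use many attention heads, and mask out future tokens, none of which is shown here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of vectors.

    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    scale = math.sqrt(len(keys[0]))
    dim = len(values[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token against every token, then normalize the scores.
        row = softmax([dot(q, k) / scale for k in keys])
        weights.append(row)
        # The output is a weighted average of the value vectors.
        outputs.append(
            [sum(w * v[d] for w, v in zip(row, values)) for d in range(dim)]
        )
    return outputs, weights


# Hypothetical embeddings, chosen only for illustration.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Simplification: the embeddings double as queries, keys, and values.
outputs, weights = self_attention(embeddings, embeddings, embeddings)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row sums to 1, so it can be read as the share of attention one token gives to every token in the sequence. Every row is computed independently of the others, which is what makes parallel processing possible.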

Large datasets are crucial for training GPT models. They provide diverse language patterns and contexts that help the model develop a broad understanding of language, which enhances its ability to generate accurate and contextually appropriate text.

By using the transformer architecture, attention mechanisms, and extensive datasets, GPT models excel in understanding and generating human-like text for various NLP applications.

Key Features and Capabilities

GPT models are designed to read and write natural language effectively. They can interpret what is being said, work out the meaning of sentences, and provide contextually accurate, semantically coherent answers.

One defining characteristic of GPT models is their ability to retain context over long-form text. As a result, they can produce text that stays conversational and meaningful across long interactions or complex subjects.

GPT models are general-purpose and can be applied to a wide range of NLP tasks, including:

- Content Creation: Generating articles, blog posts, and marketing copy

- Customer Support: Powering chatbots and virtual assistants to handle queries

- Translation Services: Converting text between languages accurately

- Summarization: Creating concise summaries of long documents

- Email Drafting: Assisting with composing emails quickly

- Programming Assistance: Generating and debugging code snippets

- Personalized Tutoring: Providing educational support tailored to individual needs

- Social Media Management: Crafting and scheduling posts

- Market Analysis: Analyzing text data for insights and trends

- Sentiment Analysis: Determining the sentiment of text for brand monitoring

- Research Assistance: Summarizing academic papers and reports

- Creative Writing: Helping with story and script writing

Challenges and Limitations

- Ethical Concerns: GPT models can unintentionally generate biased or misleading information, reflecting the biases present in the training data

- High Computational Requirements: Training and running GPT models require significant computational resources, making them expensive and less accessible for smaller organizations

- Understanding Nuanced Emotions: While GPT models are good at understanding and generating text, they struggle with grasping subtle human emotions and contexts, sometimes resulting in inappropriate or incorrect responses
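
To put the computational requirements in perspective, the back-of-the-envelope sketch below estimates how much memory is needed just to hold the weights of a model with 175 billion parameters, the figure cited for GPT-3 above. The storage precisions are assumptions chosen for illustration, and the estimate ignores everything else a running model needs, such as activations and, during training, optimizer state.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


GPT3_PARAMETERS = 175_000_000_000  # parameter count cited for GPT-3

# Assumed storage precisions; actual deployments may use other formats.
precisions = [("32-bit floats", 4), ("16-bit floats", 2), ("8-bit integers", 1)]

for label, size_in_bytes in precisions:
    memory = weight_memory_gb(GPT3_PARAMETERS, size_in_bytes)
    print(f"{label}: {memory:.0f} GB")
```

Even at 16-bit precision the weights alone come to 350 GB, more than a single typical accelerator can hold, which is one reason running models of this size is costly for smaller organizations.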

Future of GPT Models

- Ongoing Research and Potential Improvements: Research continues to improve the accuracy, efficacy, and ethical use of GPT models. Researchers are constantly working to reduce bias, improve contextual knowledge, and maximize computational efficiency

- Integration with Other AI Technologies: In the future, GPT models might be linked to other AI technologies, such as computer vision or robotics. Such integration would enable more comprehensive and interactive AI systems that can understand and respond to both text and visual inputs

- Predictions for Next-Generation Models: The next generations of GPT models are projected to be even more powerful than previous ones, with better performance on complicated tasks, improved handling of subtle human emotions, and greater flexibility across many applications. They could also become more accessible thanks to optimizations that require fewer resources than earlier versions.

Conclusion

GPT models have revolutionized natural language processing, offering powerful tools for content creation, customer support, and research. Despite their vast applications, challenges like ethical concerns and high computational demands remain, requiring ongoing research and careful consideration.

The future of GPT models looks promising with continuous improvements aimed at increasing efficiency, reducing biases, and integrating with other AI technologies. As these models evolve, they will handle complex tasks and nuanced contexts even better. Comparing leading models like ChatGPT, Gemini, and Claude highlights the competitive advancements, each bringing unique strengths. By staying informed about their capabilities and limitations, we can harness these models to make our work easier and enable business growth.