The use of AI in 2025 follows a predictable pattern: companies rapidly integrate it into business processes, don’t disclose its use, and eventually face a credibility crisis. To avoid that third stage, let’s look at how the media industry quietly integrated AI three years ago.

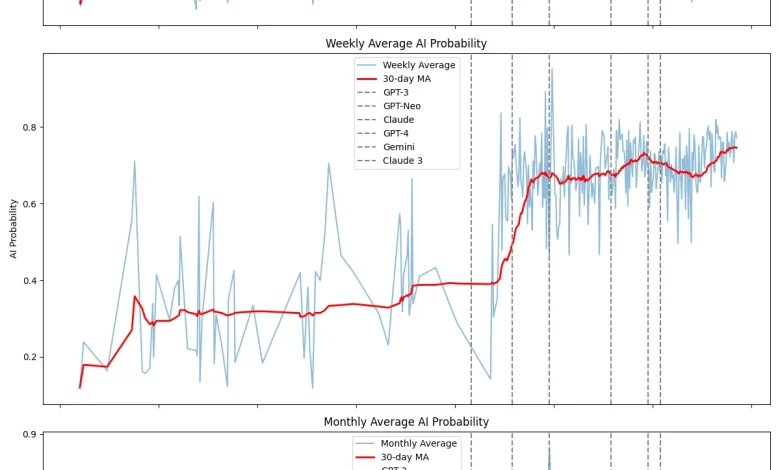

Between 2020 and 2021, one of the world’s most respected news outlets started generating the majority of its articles with AI. The shift was significant and rapid: from roughly 20-40% of articles before 2020 to 70-80% after 2021, a level that has held through 2025. This change followed the launch of GPT-3 and the challenges of the pandemic. The data comes from an analysis of thousands of BBC articles published between 2012 and 2025, run through AI detection tools. The analysis reveals trends that many businesses are facing today.

What’s particularly concerning is the transparency gap. Readers didn’t know until organizations started publishing AI ethics guidelines, years after AI had been integrated.

This gap mirrors what MIT’s State of AI in Business 2025 found across enterprise use of AI. Organizations rush to implement AI without establishing disclosure policies and verification methods. According to the report, 95% of organizations see no measurable ROI from AI, because adoption outpaces governance.

Where’s the enterprise parallel?

In February 2024, the BBC shared its AI strategy. It rests on three principles: public interest, a focus on creative talent, and transparency with readers. The BBC also set clear boundaries:

- AI doesn’t write news stories.

- AI doesn’t fact-check content.

- Every AI-generated text is reviewed by editors.

This happened two years after the actual rise of AI content on the BBC website (the Artificial Intelligence section in particular). The approach is much the same in parts of financial, healthcare, and professional services. They deploy the technology, optimize it in-house, and only then address governance, sometimes only when stakeholders start asking questions.

The media industry’s experience is a preview of what happens when enterprises prioritize implementation over transparency architecture. The analysis of BBC content reveals three phases that enterprise leaders should be aware of:

1. Stealth adoption

For the BBC, this phase ran from 2012 to 2020. During this period, AI detection scores for BBC content were low (probability scores around 0.2–0.4), and articles were mainly human-written. AI tools were too primitive for production, though there were probably some quiet experiments with using them for simple tasks.

2. Rapid scaling

In late 2020 and early 2021, AI probability scores for BBC content rose to 0.6 and above, following the release of more advanced LLMs. The analysis also showed wide score variance: some content was AI-generated, while other content remained human-authored. Adoption looks opportunistic at this stage; I believe AI was used wherever the tools seemed useful.

3. Normalized use of AI

From 2021, scores stabilized at 0.7-0.8, which the analysis reads as around 70-80% of articles being written with the use of AI. AI use became standard practice. Multiple AI models were used, indicating a shift from vendor-focused solutions to task-oriented adoption.
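As a rough illustration, the three phases can be told apart from a period's detection scores by looking at their average and their spread. The sketch below is a minimal one under stated assumptions: the score cut-offs come from the ranges quoted above, while the spread cut-off (`STABLE_SPREAD_MAX`) and the function name `classify_phase` are hypothetical and not part of the original analysis.

```python
from statistics import mean, pstdev

# Score cut-offs follow the ranges quoted in the article.
LOW_SCORE_MAX = 0.4       # stealth adoption: scores around 0.2-0.4
HIGH_SCORE_MIN = 0.6      # scaling and normalized use: scores of 0.6+
# Assumption: how tight the scores must be to count as "stabilized".
STABLE_SPREAD_MAX = 0.15


def classify_phase(scores: list[float]) -> str:
    """Map one period's AI-probability scores to an adoption phase."""
    if not scores:
        raise ValueError("scores must not be empty")
    average = mean(scores)
    spread = pstdev(scores)  # population standard deviation
    if average <= LOW_SCORE_MAX:
        return "stealth adoption"
    # High and consistent scores suggest AI use has become routine.
    if average >= HIGH_SCORE_MIN and spread <= STABLE_SPREAD_MAX:
        return "normalized use"
    # Rising or widely varying scores suggest opportunistic adoption.
    return "rapid scaling"


if __name__ == "__main__":
    print(classify_phase([0.2, 0.3, 0.4]))
    print(classify_phase([0.2, 0.9, 0.95, 0.5]))
    print(classify_phase([0.7, 0.75, 0.8]))
```

The same idea could be applied to internal content, for example scoring outbound emails per quarter, as long as the detection scores are treated as estimates rather than proof.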

Today, many organizations sit between scaling and normalizing AI use. Sales teams use AI for email drafting, customer service deploys chatbots, legal reviews contracts with AI, finance automates reporting, and HR screens candidates algorithmically. Each department adopts tools independently, without enterprise-wide governance.

Real experts still cover more complex and important tasks

In the BBC content, there was a clear correlation between content length and the likelihood of AI content. Briefs and summaries were mainly written by AI, while long-read investigative articles were usually written by journalists. The same holds in business: AI handles the routine, and human expertise focuses on high-value tasks.

Customers who want a quick answer are more likely to interact with AI, while clients with premium plans or some kind of priority status can reach humans. Likewise, entry-level job applications are often screened by algorithms, while C-level candidates receive real HR attention. But the first group is often unaware of this, and when they realize it, they lose trust in the company or service.

The economic driver

AI adoption in the media is driven less by tech “enthusiasm” than by economic pressure. As ad revenue dropped and subscription models worked only for selected publications, AI became an attractive way to scale production and save budget.

This is not limited to the media. Other businesses face the same financial challenges and are turning to AI: staffing costs rise with every new employee, while AI chatbots help scale customer support at a lower cost. So companies adopt AI because the economics demand it, but later struggle to win back the loyalty of clients, customers, and partners. And often that is not because AI was used, but because its use wasn’t clearly disclosed.

How to disclose the use of AI

Based on media industry patterns and enterprise needs, here’s a disclosure framework:

Level 1. Human-directed, AI-assisted

- Humans make the main decisions.

- AI helps with research, information synthesis, or drafting.

- Human review and approval are required before publication.

- Appropriate for customer service, contract review, financial analysis.

Level 2. AI-generated, human-verified

- AI creates the initial content from defined inputs.

- Humans check accuracy and approve before use.

- Suited for standard reporting and routine document creation.

Level 3. Automated with human oversight

- AI works autonomously; humans monitor and review only exceptions.

- Works best for processing transactions, scheduling, or basic inquiries.

Level 4. Fully automated

- AI works with no human involvement.

- Use only for non-critical activities and disclose clearly.

- Examples: routine data processing, system maintenance.

Whatever the level, make the disclosure visible rather than burying it in fine print.
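One way to make the framework operational is to encode the four levels and attach a visible label to each piece of work. The sketch below is a minimal illustration, not a standard: the names `DisclosureLevel`, `DISCLOSURE_LABELS`, and `disclosure_label`, as well as the label wording, are hypothetical.

```python
from enum import IntEnum


class DisclosureLevel(IntEnum):
    """The four levels of the disclosure framework."""
    HUMAN_DIRECTED_AI_ASSISTED = 1
    AI_GENERATED_HUMAN_VERIFIED = 2
    AUTOMATED_WITH_HUMAN_OVERSIGHT = 3
    FULLY_AUTOMATED = 4


# Hypothetical label wording; adapt it to your own style guide.
DISCLOSURE_LABELS = {
    DisclosureLevel.HUMAN_DIRECTED_AI_ASSISTED:
        "Prepared by our team with AI assistance and reviewed before publication.",
    DisclosureLevel.AI_GENERATED_HUMAN_VERIFIED:
        "Generated by AI and checked for accuracy by our team.",
    DisclosureLevel.AUTOMATED_WITH_HUMAN_OVERSIGHT:
        "Handled by an automated AI system; our team reviews exceptions.",
    DisclosureLevel.FULLY_AUTOMATED:
        "Handled entirely by an automated AI system.",
}


def disclosure_label(level: DisclosureLevel, critical: bool = False) -> str:
    """Return the visible label for a piece of work at the given level."""
    # The framework reserves Level 4 for non-critical activities.
    if level == DisclosureLevel.FULLY_AUTOMATED and critical:
        raise ValueError("Level 4 is for non-critical activities only")
    return DISCLOSURE_LABELS[level]


if __name__ == "__main__":
    print(disclosure_label(DisclosureLevel.AI_GENERATED_HUMAN_VERIFIED))
```

Keeping the labels in one place makes it harder for individual teams to quietly soften or drop them.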

Recommendations for founders and CAIOs

The gap between AI use and governance is a risk. Here’s what I recommend doing:

- Find out how every department uses AI. Identify informal or unapproved AI activity; there might be unauthorized usage in areas like sales, customer service, HR, and operations. Pay particular attention to areas where AI may be used to communicate with partners, users, or customers.

- Set disclosure standards now so that you help shape future regulations; that is better than writing last-minute policies in response to them.

- Analyze which business areas benefit from AI assistance and where human expertise matters most.

- Explain AI’s role to customers, employees, and investors. Using AI is not evil; just clarify how AI and humans work together in your company.
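The first recommendation, the audit, can start as a simple inventory that ranks each AI use case for review. The sketch below is one possible shape for it, under stated assumptions: the `AIUseCase` fields, the weights in `review_priority`, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    department: str
    tool: str               # hypothetical tool description
    approved: bool          # formally approved by the company?
    external_facing: bool   # reaches partners, users, or customers?
    disclosed: bool         # is the AI involvement disclosed?


def review_priority(use: AIUseCase) -> int:
    """Higher means review sooner. The weights are illustrative assumptions."""
    score = 0
    if not use.approved:
        score += 2
    if use.external_facing:
        score += 2
        # Undisclosed external communication is the riskiest combination.
        if not use.disclosed:
            score += 1
    return score


def audit_queue(uses: list[AIUseCase]) -> list[AIUseCase]:
    """Return the use cases that need attention, most urgent first."""
    flagged = [use for use in uses if review_priority(use) > 0]
    return sorted(flagged, key=review_priority, reverse=True)


if __name__ == "__main__":
    inventory = [
        AIUseCase("finance", "report automation", True, False, True),
        AIUseCase("support", "chatbot", True, True, True),
        AIUseCase("sales", "email drafting", False, True, False),
    ]
    for use in audit_queue(inventory):
        print(use.department, use.tool, review_priority(use))
```

Even a spreadsheet with these five columns would serve the same purpose; the point is to see unapproved and undisclosed external use first.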

Will people learn about your use of AI through open disclosure, or will they discover it themselves through detection tools? In a couple of years, detectors will be routine for customers, applicants, investors, and regulators (probably a bit earlier for the latter). Responsible, disclosed use of AI works better. You can draw on existing frameworks or on examples of how other companies in your industry disclose AI use.

The choice now is whether your organization will set the standard for AI transparency or struggle to catch up once detection becomes universal.

Author

Olga Scryaba is the Head of Product at isFake.ai, a platform dedicated to making AI content transparent. Over the years, she has built products from scratch, set and tested goals with talented teams, and excelled at turning big, sometimes messy ideas into something real—while making tech simple and useful for people. At isFake.ai, the team helps everyone spot what’s real and what’s fake online, with ease and a little fun.