Abstract

As large language models (LLMs) evolve from conversational interfaces into agentic systems capable of proposing or executing actions, the enterprise differentiator shifts from raw model capability to system reliability. This article presents the Pyramid of Trust, a reliability architecture designed to address critical failure modes in enterprise AI deployment. As AI increasingly supports enterprise systems that underpin critical economic and service infrastructure, reliability becomes a matter of economic security. This novel framework provides the evaluation ‘moats’ required to move AI from experimental pilots to safe, scalable production. The Pyramid of Trust integrates three complementary layers: (1) deterministic structural safeguards, (2) probabilistic semantic validation, and (3) outcome verification tied to real operational results. This framework reflects my independent synthesis, developed through repeated enterprise practice. Publicly available analyses of digital supply chain transformation and service telemetry operations serve as illustrative contexts to explain why structural, semantic, and outcome-based evaluation moats are essential prerequisites for the safe and valuable deployment of AI agents.

Keywords: agentic AI, evaluation, reliability engineering, semantic validation, human-in-the-loop, AI governance, AI risk management

1. Introduction: The Reliability Problem in Enterprise Agency

Over the past decade, enterprise AI has progressed from predictive forecasting to multimodal inference, and now to agency: systems that can recommend or initiate actions within operational workflows. In these settings, the central question is no longer “Can the model generate an answer?” but “Can the organization trust the system’s recommendation enough for a human, or another system, to act on it reliably?”

To address this reliability gap, I propose the Pyramid of Trust, a principles-based evaluation architecture for agentic systems in high-stakes enterprise settings. The framework is presented as an author-developed synthesis and focuses on general evaluation mechanisms rather than organization-specific implementations.

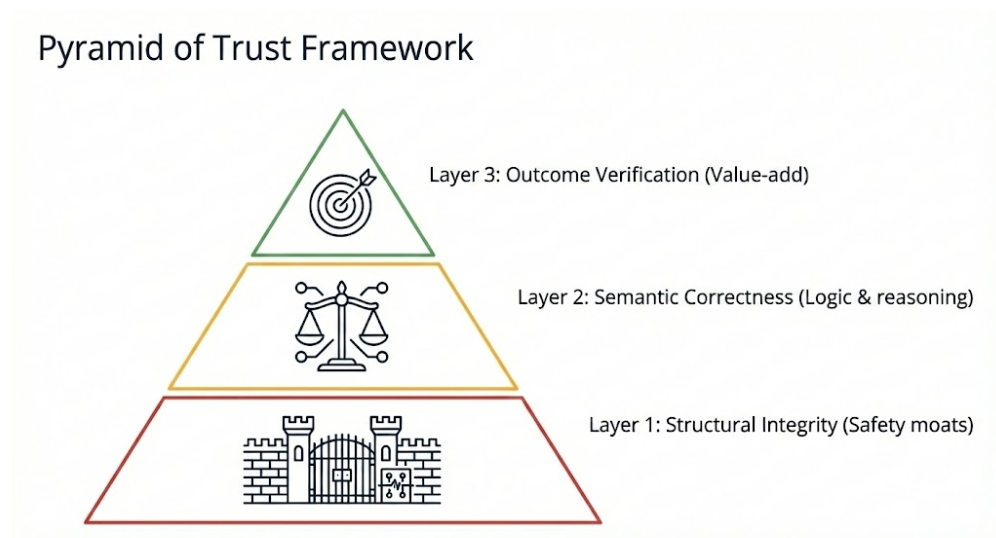

The Pyramid of Trust consists of three layers:

- Structural Integrity (Safety Layer): deterministic constraints and preconditions that gate action.

- Semantic Correctness (Logic Layer): probabilistic validation that the agent’s reasoning is consistent with available evidence.

- Outcome Verification (Value Layer): feedback loops that confirm real-world execution success and penalize silent failure modes.

The sections that follow illustrate each layer using publicly documented industry contexts and governance guidance.¹ ² ³ These sources are used only to clarify problem structure and evaluation patterns.

The Pyramid of Trust is intentionally domain-agnostic. It is derived from sustained application of AI across both customer experience systems (diagnostics, dispatch, and resolution workflows) and large-scale supply chain operations (planning and forecasting, execution, and exception management).

2. Layer 1: Structural Integrity in Digital Supply Chains

2.1 Why structural safeguards matter

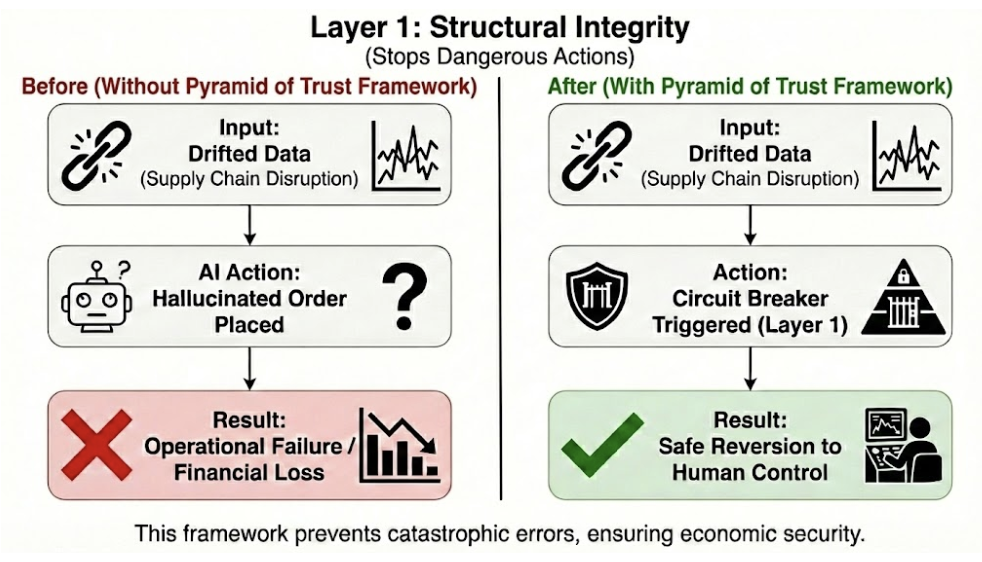

Publicly documented case analyses of large-scale supply chain digital transformation illustrate operational volatility patterns that motivate this layer: shifting lead times, incomplete signals, sensor and integration failures, and abrupt demand changes.¹ In such environments, the dominant risk for an agent is not simply misunderstanding a prompt. The risk is taking action on degraded or drifted data.

2.2 A structural pattern: drift-gated circuit breakers

The Pyramid of Trust recommends a pre-inference circuit breaker that detects drift and blocks agent action when the operating regime becomes unstable. One common approach is to monitor input distributions with drift metrics such as the Population Stability Index (PSI) or related distribution-shift indicators, and to define policy thresholds that route control to deterministic safeguards or human review.
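To make the PSI-based gate concrete, here is a minimal sketch in Python, assuming a single numeric feature (for example, supplier lead time) compared against a baseline window. PSI is computed as the sum over bins of (actual share minus expected share) times ln(actual share / expected share), with bins taken from baseline quantiles. The thresholds 0.10 and 0.25 are common rules of thumb, not values from this framework, and the function names are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Sequence

# Hypothetical policy thresholds: widely used PSI rules of thumb, to be calibrated per feature.
PSI_REVIEW = 0.10
PSI_BLOCK = 0.25


def quantile_edges(baseline: Sequence[float], n_bins: int = 10) -> List[float]:
    """Interior bin edges at approximate baseline quantiles (nearest rank)."""
    ordered = sorted(baseline)
    return [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]


def bin_shares(values: Sequence[float], edges: List[float], eps: float = 1e-6) -> List[float]:
    """Share of values per bin, floored at eps so empty bins never produce log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float],
                               n_bins: int = 10) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(baseline, n_bins)
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(psi: float) -> str:
    """Map a PSI value to a gate decision for the circuit breaker."""
    if psi >= PSI_BLOCK:
        return "block"
    if psi >= PSI_REVIEW:
        return "review"
    return "ok"


baseline = [float(x) for x in range(100)]  # e.g. historical lead times in days
shifted = [x + 50 for x in baseline]       # a step-change in the current window
print(drift_status(population_stability_index(baseline, baseline)))  # ok
print(drift_status(population_stability_index(baseline, shifted)))   # block
```

The epsilon floor is a practical adjustment: without it, a bin that is empty in either window makes the logarithm undefined.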

Examples of drift conditions that should trigger an action gate include:

- Lead-time regime shifts: a step-change in supplier delivery estimates consistent with disruption scenarios.

- Concept drift in demand signals: a demand spike driven by external events not represented in the feature set used to train and validate downstream models.

- Feature corruption: null or zero values, or broken telemetry streams, in critical fields such as inventory-on-hand, capacity, or in-transit state.
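The three drift conditions listed above can each be turned into a simple, auditable check. The sketch below is illustrative only: the ratio, z-score, and null-fraction tolerances (1.5, 3.0, 5%) are hypothetical, and the z-score test is a crude proxy, since a demand spike from events outside the feature set can be detected statistically but not explained by it.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Dict, List, Optional, Sequence


@dataclass
class DriftSignal:
    name: str
    triggered: bool
    detail: str


def lead_time_shift(baseline_days: Sequence[float], recent_days: Sequence[float],
                    max_ratio: float = 1.5) -> DriftSignal:
    """Step-change check: recent mean supplier lead time versus the baseline mean."""
    ratio = mean(recent_days) / mean(baseline_days)
    return DriftSignal("lead_time_shift", ratio >= max_ratio, f"recent/baseline ratio {ratio:.2f}")


def demand_spike(baseline_units: Sequence[float], recent_units: Sequence[float],
                 z_limit: float = 3.0) -> DriftSignal:
    """Proxy for concept drift: z-score of recent mean demand against baseline history."""
    sigma = pstdev(baseline_units) or 1e-9  # avoid division by zero on perfectly flat history
    z = (mean(recent_units) - mean(baseline_units)) / sigma
    return DriftSignal("demand_spike", z >= z_limit, f"z = {z:.1f}")


def feature_corruption(records: List[Dict[str, Optional[float]]], critical_fields: Sequence[str],
                       max_bad_fraction: float = 0.05) -> List[DriftSignal]:
    """Flag critical fields whose share of null or zero values exceeds a tolerance."""
    signals = []
    for name in critical_fields:
        bad = sum(1 for r in records if r.get(name) in (None, 0))
        fraction = bad / len(records) if records else 1.0  # no data at all counts as corrupt
        signals.append(DriftSignal(f"corrupt:{name}", fraction > max_bad_fraction,
                                   f"{fraction:.0%} null or zero"))
    return signals


signals = [
    lead_time_shift([10, 10, 12, 8], [20, 22, 18]),
    demand_spike([100, 102, 98, 100], [150]),
    *feature_corruption([{"on_hand": 5}, {"on_hand": None}, {"on_hand": 7}], ["on_hand"]),
]
print([s.name for s in signals if s.triggered])
# ['lead_time_shift', 'demand_spike', 'corrupt:on_hand']
```

Treating zero as corrupt is deliberately conservative; fields where zero is a legitimate value (a genuinely empty bin, for instance) would need a field-specific rule.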

Policy principle: when drift indicators exceed predefined tolerances, the system should bypass agent action and revert to deterministic controls (such as safety stock rules or constraint solvers), explicit human decision-making, or both. This converts silent agent failure into an observable, governable event, which is essential for high-stakes operations.
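The policy principle can be expressed as a small routing function. This is a sketch under stated assumptions: `gate_action`, `safety_stock_order`, and the indicator names are hypothetical, the agent is passed as a callable so it is only invoked when the gate is open (making the check genuinely pre-inference), and a missing indicator is treated as a breach so the gate fails closed. The log line is what makes a trip observable.

```python
import logging
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional

logger = logging.getLogger("pyramid.structural_gate")


class Route(Enum):
    AGENT = "agent"
    DETERMINISTIC = "deterministic_fallback"
    HUMAN = "human_review"


@dataclass
class GateDecision:
    route: Route
    order_qty: Optional[float]
    breaches: List[str] = field(default_factory=list)


def safety_stock_order(on_hand: float, safety_stock: float, order_up_to: float) -> float:
    """Hypothetical deterministic rule: replenish to a fixed level once below safety stock."""
    return float(max(order_up_to - on_hand, 0.0)) if on_hand < safety_stock else 0.0


def gate_action(drift_scores: Dict[str, float], tolerances: Dict[str, float],
                agent: Callable[[], float],
                fallback: Optional[Callable[[], float]] = None) -> GateDecision:
    """Circuit breaker: the agent is consulted only if every indicator is within tolerance."""
    breaches = []
    for name, limit in tolerances.items():
        score = drift_scores.get(name)
        if score is None:  # fail closed: a missing indicator counts as a breach
            breaches.append(f"{name}: indicator missing")
        elif score > limit:
            breaches.append(f"{name}={score:.3f} exceeds {limit}")
    if not breaches:
        return GateDecision(Route.AGENT, agent())
    # Emit an auditable event instead of failing silently.
    logger.warning("circuit breaker tripped: %s", "; ".join(breaches))
    if fallback is not None:
        return GateDecision(Route.DETERMINISTIC, fallback(), breaches)
    return GateDecision(Route.HUMAN, None, breaches)


tolerances = {"psi_lead_time": 0.25, "psi_demand": 0.25}
decision = gate_action({"psi_lead_time": 0.31, "psi_demand": 0.04}, tolerances,
                       agent=lambda: 120.0,
                       fallback=lambda: safety_stock_order(on_hand=40, safety_stock=50, order_up_to=200))
print(decision.route, decision.order_qty)  # Route.DETERMINISTIC 160.0
```

Whether a breach goes to a deterministic rule or to a human is a policy choice; here a fallback is used when one is configured, and human review otherwise.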

3. Layer 2: Semantic Correctness in Telemetry-Driven Diagnostics

3.1 Why semantic validation matters

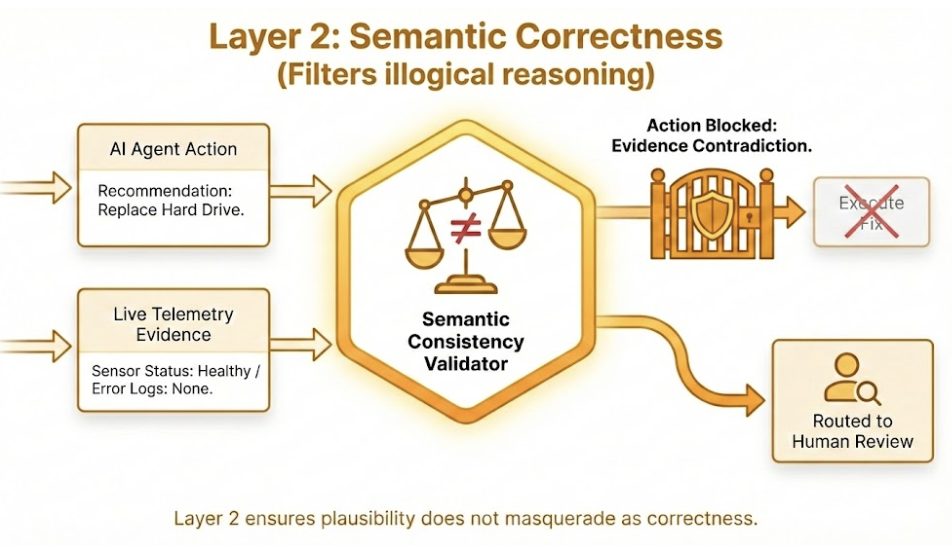

Even when data is stable, an agent can fail through inconsistent reasoning. It can produce a confident recommendation that contradicts the evidence. This pattern is especially dangerous in technical diagnostics and support workflows, where plausibility can masquerade as correctness.

3.2 A semantic pattern: evidence-consistency validators

The Pyramid of Trust recommends a consistency validator that cross-checks the agent’s proposed diagnosis and action against a structured set of admissible evidence combinations. This can be implemented using rules, constraints, or learned validators derived from labeled outcomes.
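For the "learned validators derived from labeled outcomes" option, one simple approach is to keep only those (diagnosis, evidence signature) combinations that past outcomes have confirmed often enough. The sketch below assumes telemetry has already been discretized into hashable signatures such as `("smart=fail",)`; the support and precision cut-offs (20 cases, 80%) and all names are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, Iterable, Set, Tuple

Case = Tuple[str, Hashable, bool]  # (diagnosis, evidence signature, confirmed by outcome)


def learn_admissible_pairs(cases: Iterable[Case], min_support: int = 20,
                           min_precision: float = 0.8) -> Set[Tuple[str, Hashable]]:
    """Keep (diagnosis, signature) pairs confirmed often enough, on enough cases."""
    tally = defaultdict(lambda: [0, 0])  # pair -> [confirmed, total]
    for diagnosis, signature, confirmed in cases:
        counts = tally[(diagnosis, signature)]
        counts[0] += int(confirmed)
        counts[1] += 1
    return {pair for pair, (hits, total) in tally.items()
            if total >= min_support and hits / total >= min_precision}


def is_admissible(diagnosis: str, signature: Hashable,
                  admissible: Set[Tuple[str, Hashable]]) -> bool:
    """Unseen or rarely confirmed combinations are not admissible and should go to review."""
    return (diagnosis, signature) in admissible


history = ([("hardware_failure", ("smart=fail",), True)] * 24
           + [("hardware_failure", ("smart=fail",), False)]
           + [("hardware_failure", ("smart=ok",), False)] * 20
           + [("hardware_failure", ("smart=ok",), True)] * 2)
admissible = learn_admissible_pairs(history)
print(is_admissible("hardware_failure", ("smart=fail",), admissible))  # True
print(is_admissible("hardware_failure", ("smart=ok",), admissible))    # False
```

The minimum-support threshold matters as much as precision: without it, a combination seen once and confirmed once would be treated as fully trustworthy.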

Publicly documented industry recognition and benchmarking programs for service analytics and reliability emphasize the operational importance of telemetry-grounded decisioning and consistent diagnostic logic.²

Mechanism (generalized):

- Ingest multi-source telemetry such as logs, sensor readings, and configuration state.

- Compare agent recommendations against an evidence model such as a truth table, constraint set, or diagnostic graph.

- Block or route to review when the recommendation contradicts telemetry.
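The generalized mechanism can be sketched as a rule-based validator over a merged evidence record. In this illustration the evidence model is a list of constraints per diagnosis: a hard contradiction blocks the recommendation and a soft one routes it to review. The telemetry keys (`smart_status`, `memtest`, `hours_since_config_change`), the 24-hour rule, and the choice to send diagnoses with no evidence model to review are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple

Telemetry = Dict[str, object]


class Verdict(Enum):
    ALLOW = "allow"
    REVIEW = "route_to_review"
    BLOCK = "block"


@dataclass
class Constraint:
    diagnosis: str
    description: str
    contradicts: Callable[[Telemetry], bool]  # True when the evidence argues against the diagnosis
    hard: bool = True                          # hard -> BLOCK, soft -> REVIEW


def merge_telemetry(*sources: Telemetry) -> Telemetry:
    """Flatten logs, sensor readings, and configuration state into one evidence record."""
    merged: Telemetry = {}
    for source in sources:
        merged.update(source)
    return merged


def validate(diagnosis: str, telemetry: Telemetry,
             constraints: List[Constraint]) -> Tuple[Verdict, List[str]]:
    relevant = [c for c in constraints if c.diagnosis == diagnosis]
    if not relevant:  # no evidence model for this diagnosis: never auto-approve
        return Verdict.REVIEW, ["no evidence model for diagnosis"]
    verdict, findings = Verdict.ALLOW, []
    for c in relevant:
        if c.contradicts(telemetry):
            findings.append(c.description)
            if c.hard:
                verdict = Verdict.BLOCK
            elif verdict is Verdict.ALLOW:
                verdict = Verdict.REVIEW
    return verdict, findings


CONSTRAINTS = [
    Constraint("hardware_failure", "hardware health signals are all normal",
               lambda t: t.get("smart_status") == "ok" and t.get("memtest") == "pass"),
    Constraint("hardware_failure", "recent configuration change suggests a software or config fault",
               lambda t: t.get("hours_since_config_change", float("inf")) < 24, hard=False),
]

telemetry = merge_telemetry({"memtest": "pass"},               # diagnostic logs
                            {"smart_status": "ok"},            # sensor readings
                            {"hours_since_config_change": 3})  # configuration state
verdict, findings = validate("hardware_failure", telemetry, CONSTRAINTS)
print(verdict)  # Verdict.BLOCK
```

This mirrors the hardware-versus-configuration scenario discussed in this section: healthy hardware signals block a replacement recommendation, while a recent configuration change alone only escalates it for review.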

Policy principle: if an agent proposes a hardware failure but available evidence shows healthy hardware signals, the semantic layer should block the recommendation, or require a higher-assurance pathway, before it reaches a technician or triggers downstream actions. This layer is the difference between an agent that is persuasive and an agent that is correct.

In customer support environments, this prevents scenarios where an agent recommends device replacement while telemetry indicates a configuration or software fault, reducing unnecessary dispatches and repeat contacts.

4. Layer 3: Outcome Verification in Automated Dispatch Workflows

4.1 Why outcomes define value

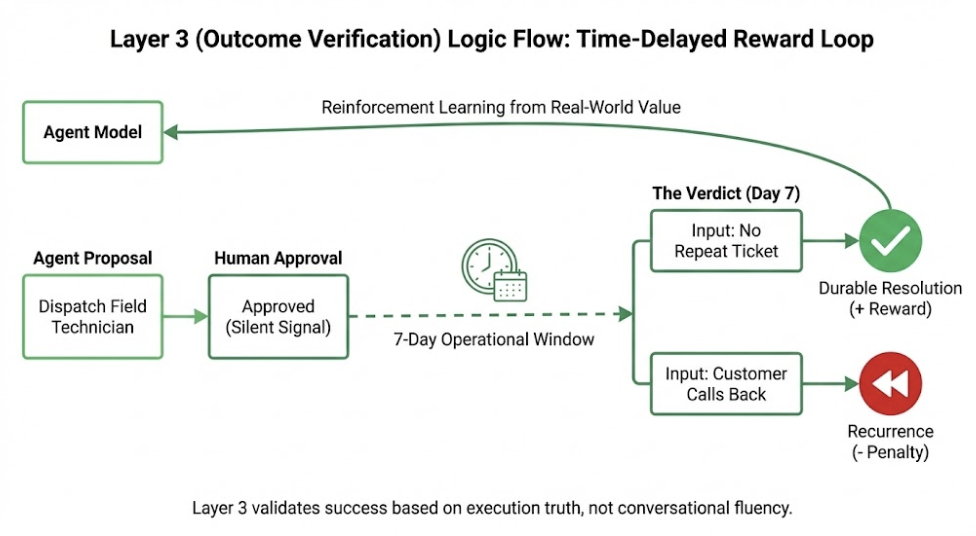

In enterprise service operations, success is ultimately measured by operational outcomes (resolution efficiency, avoidance of repeat incidents, and cost and quality performance), not by conversational fluency alone. Industry benchmarking frameworks consistently emphasize measures such as first-time resolution and recurrence rates.²
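Definitions of these measures vary between organizations and benchmarking programs. The sketch below assumes two common working definitions: first-time resolution is the share of incidents resolved on the first attempt, and recurrence is the share of incidents followed by a new incident on the same asset within a fixed window (30 days here, a hypothetical choice). The `Incident` fields are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Incident:
    asset_id: str
    opened: datetime
    resolved: datetime
    attempts: int  # dispatches or fix attempts needed to resolve


def first_time_resolution_rate(incidents: List[Incident]) -> float:
    """Share of incidents resolved on the first attempt."""
    if not incidents:
        raise ValueError("no incidents")
    return sum(1 for i in incidents if i.attempts == 1) / len(incidents)


def recurrence_rate(incidents: List[Incident], window: timedelta = timedelta(days=30)) -> float:
    """Share of incidents followed by a new incident on the same asset within `window`."""
    if not incidents:
        raise ValueError("no incidents")
    by_asset: Dict[str, List[Incident]] = {}
    for inc in sorted(incidents, key=lambda i: i.opened):
        by_asset.setdefault(inc.asset_id, []).append(inc)
    recurred = sum(1 for seq in by_asset.values()
                   for prev, nxt in zip(seq, seq[1:])
                   if nxt.opened - prev.resolved <= window)
    return recurred / len(incidents)


d = datetime(2024, 1, 1)
log = [Incident("A", d, d + timedelta(days=1), attempts=1),
       Incident("A", d + timedelta(days=9), d + timedelta(days=10), attempts=2),
       Incident("B", d + timedelta(days=4), d + timedelta(days=5), attempts=1)]
print(round(first_time_resolution_rate(log), 3), round(recurrence_rate(log), 3))  # 0.667 0.333
```

Incidents resolved less than one window before the end of the data have not yet had a full chance to recur, so recurrence computed on recent data tends to be understated.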

4.2 An outcome pattern: time-delayed reward with silent human validation

The Pyramid of Trust recommends aligning agent learning and evaluation to real outcomes via time-delayed verification. A practical pattern is a silent validator loop where humans remain the final authority, while the system measures downstream success and uses it as the reward signal.

Mechanism (generalized):

- The agent proposes an action such as a dispatch decision or recommended fix.

- A human expert approves or overrides without needing to provide extensive annotation.

- The system assigns reward only if (a) the action is accepted and (b) the incident does not recur within a defined window.
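The reward rule above can be written as a function that returns no verdict until the verification window has closed, so that failures surfacing after execution are not rewarded prematurely. This is a sketch under stated assumptions: the 30-day window, the `ProposalRecord` fields, and scoring an override as 0.0 are hypothetical choices; the text specifies only that reward requires both acceptance and non-recurrence.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ProposalRecord:
    proposal_id: str
    action: str
    accepted: bool                          # expert approved (True) or overrode (False); no annotation needed
    executed_at: datetime
    recurred_at: Optional[datetime] = None  # first recurrence of the same incident, if observed


def delayed_reward(rec: ProposalRecord, now: datetime,
                   window: timedelta = timedelta(days=30)) -> Optional[float]:
    """1.0 = accepted and durable, 0.0 = overridden or recurred, None = window still open."""
    if not rec.accepted:
        return 0.0
    if rec.recurred_at is not None and rec.recurred_at - rec.executed_at <= window:
        return 0.0
    if now - rec.executed_at < window:
        return None  # a silent failure could still surface, so withhold the reward
    return 1.0


t0 = datetime(2024, 3, 1)
records = [
    ProposalRecord("p1", "dispatch technician", True, t0),
    ProposalRecord("p2", "remote reset", True, t0, recurred_at=t0 + timedelta(days=6)),
    ProposalRecord("p3", "replace device", False, t0),
]
now = t0 + timedelta(days=45)
print({r.proposal_id: delayed_reward(r, now) for r in records})
# {'p1': 1.0, 'p2': 0.0, 'p3': 0.0}
```

Records whose reward is still `None` would typically be held out of training and evaluation until they resolve, rather than counted as successes.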

Policy principle: the agent should be optimized for durable resolution and non-recurrence, thereby training the system against failure modes that only appear after execution. This establishes an outcome moat. Performance is measured by execution truth, not text similarity.² ³

5. Conclusion: The Pyramid of Trust as an Evaluation Moat

Agentic AI systems become enterprise-grade not when they can talk, but when they can be trusted to act. The Pyramid of Trust provides a prescriptive reliability architecture:

- Layer 1 (Structural Integrity): drift-gated circuit breakers prevent action on degraded regimes common in operational domains.¹

- Layer 2 (Semantic Correctness): evidence-consistency validators block plausible-but-wrong recommendations before they reach execution.²

- Layer 3 (Outcome Verification): time-delayed rewards and outcome-based evaluation align agents to durable operational value.² ³
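The ordering of the three layers can be summarized as a single control flow: the structural check runs before the agent is consulted, the semantic check runs before anything executes, and only actions that pass both are queued for delayed outcome verification. The sketch below is a hypothetical composition with each layer reduced to a callable hook; it shows sequencing only, not any particular implementation.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Tuple


@dataclass
class PipelineResult:
    decided_by: str        # which layer made the final call
    action: Optional[str]  # None means the case was handed to human review
    awaiting_outcome: bool


def run_pipeline(inputs: Any,
                 structural_ok: Callable[[Any], bool],
                 propose: Callable[[Any], str],
                 semantic_ok: Callable[[str, Any], bool],
                 fallback: Callable[[Any], str],
                 outcome_queue: List[Tuple[Any, str]]) -> PipelineResult:
    # Layer 1 (Structural Integrity): checked before inference, so the agent never acts on degraded inputs.
    if not structural_ok(inputs):
        return PipelineResult("structural", fallback(inputs), False)
    action = propose(inputs)
    # Layer 2 (Semantic Correctness): stop evidence-contradicting recommendations before execution.
    if not semantic_ok(action, inputs):
        return PipelineResult("semantic", None, False)
    # Layer 3 (Outcome Verification): queue the action for time-delayed verification after execution.
    outcome_queue.append((inputs, action))
    return PipelineResult("outcome", action, True)


queue: List[Tuple[Any, str]] = []
hooks = dict(structural_ok=lambda x: x["psi"] < 0.25,
             propose=lambda x: "dispatch technician",
             semantic_ok=lambda action, x: not x["hardware_healthy"],
             fallback=lambda x: "deterministic fallback rule",
             outcome_queue=queue)
print(run_pipeline({"psi": 0.40, "hardware_healthy": False}, **hooks).decided_by)  # structural
print(run_pipeline({"psi": 0.05, "hardware_healthy": True}, **hooks).decided_by)   # semantic
print(run_pipeline({"psi": 0.05, "hardware_healthy": False}, **hooks).decided_by)  # outcome
```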

Together, these layers form an evaluation moat, a reliability advantage that is difficult to replicate by swapping models alone. Implemented well, the architecture supports the core objectives of modern AI governance guidance, including risk identification, measurement, and mitigation throughout the AI lifecycle.³

This framework reflects a multi-year evolution informed by repeated deployment, evaluation, and refinement of agentic AI systems in production enterprise environments.

Endnotes and References

1. Sáenz, M. J., Borrella, I., and Revilla, E. (2021). DELL: Roadmap of a Digital Supply Chain Transformation. MIT Center for Transportation & Logistics case study, published by Ivey Publishing and distributed by Harvard Business Publishing.

2. Technology and Services Industry Association (TSIA). (2024). TSIA STAR Awards: Smart Troubleshooting & Resolution with Intelligent Dispatch Engine (STRIDE). Public program descriptions and industry benchmarking materials for service analytics and reliability.

3. National Institute of Standards and Technology (NIST). (2023). AI Risk Management Framework (AI RMF 1.0).

Author Note (Independence and Non-Confidentiality)

The Pyramid of Trust is the author’s independent framework and synthesis. This article relies on publicly available sources for illustrative context and does not disclose non-public or confidential information.