Generative AI has reached its inflection point. After two years of pilots and proofs of concept, many organizations have discovered that creativity alone doesn’t transform a business. The fundamental shift comes when intelligence connects to action – when systems can reason, decide, and execute within the guardrails of enterprise governance. This is the promise of the emerging agentic AI era.

Agentic AI combines the language fluency of generative models with the autonomy of automation. It closes the gap between “what should be done” and “what actually happens.” But realizing that promise requires moving beyond hype toward disciplined, responsible adoption.

From Generation to Action

The first wave of generative AI unlocked creativity. Marketing teams drafted copy in seconds, developers scaffolded code, and analysts summarized reports at scale. Yet most pilots stalled at the same point: impressive demos that never reached production.

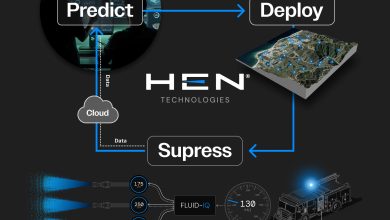

Agentic AI aims to bridge that divide by combining generative reasoning with automation. Rather than producing insights that wait for humans to act, these systems complete closed-loop tasks: retrieving data, triggering approved actions, and learning from feedback.
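
The closed loop described above (retrieve data, trigger an approved action, learn from feedback) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference design: the `Agent` class, the `APPROVED_ACTIONS` allowlist, the action names, and the threshold-nudging feedback rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical allowlist: the only actions governance has approved.
APPROVED_ACTIONS = {"open_ticket", "schedule_inspection"}


@dataclass
class Agent:
    threshold: float = 80.0  # hypothetical alert threshold for a sensor reading
    log: List[str] = field(default_factory=list)

    def decide(self, reading: float) -> Optional[str]:
        # Propose an action only when the reading exceeds the threshold.
        return "schedule_inspection" if reading > self.threshold else None

    def act(self, action: str) -> bool:
        # Guardrail: refuse anything that is not on the allowlist.
        if action not in APPROVED_ACTIONS:
            self.log.append(f"blocked:{action}")
            return False
        self.log.append(f"executed:{action}")
        return True

    def learn(self, was_useful: bool) -> None:
        # Crude feedback rule: become more sensitive after a useful alert,
        # less sensitive after a false alarm.
        self.threshold += -1.0 if was_useful else 1.0


def run_cycle(
    agent: Agent,
    fetch_reading: Callable[[], float],
    get_feedback: Callable[[str], bool],
) -> Optional[str]:
    reading = fetch_reading()            # 1. retrieve data
    action = agent.decide(reading)
    if action is None:
        return None
    if agent.act(action):                # 2. trigger an approved action
        agent.learn(get_feedback(action))  # 3. learn from feedback
    return action
```

In a real deployment the two callables would wrap a data source and a human reviewer's verdict; here they are placeholders that keep the loop testable in isolation.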

Consider the energy sector. Production teams that once relied on dashboards to flag potential issues now interact with AI services that monitor equipment, flag anomalies, and support decision-making through natural-language assistants layered atop predictive systems. Platforms like Baker Hughes’ Leucipa exemplify this approach, coupling AI-driven monitoring with conversational access to operational data.

Retailers are following suit. At Hugo Boss, generative AI tools now support merchandising, product imagery, and store operations, part of a broader push toward intelligent retail ecosystems. At Albert Heijn, an internal assistant built on Azure OpenAI helps employees answer operational questions, shortening training cycles and improving store consistency.

These examples illustrate the same trajectory: AI moving off the lab bench and into the enterprise bloodstream.

Frameworks Matter More Than Models

According to McKinsey’s State of AI 2025, nearly 80% of companies now report using AI in at least one business function, but many still struggle to tie those efforts to measurable impact. The organizations seeing value are “rewiring” workflows and assigning senior leaders to oversee governance, not just experimenting at the edges.

The lesson is clear: sustainable success depends less on picking the “best” model and more on engineering discipline. Two public frameworks have become anchors for that discipline. The NIST AI Risk Management Framework guides organizations in identifying and mitigating bias, safety, and transparency risks across the AI lifecycle. The complementary ISO/IEC 42001 standard introduces an auditable management system for AI, defining roles, incident response protocols, and continuous improvement loops. Together, they offer a common language for responsible scaling.

Enterprises that build around such standards focus on three essentials: strong data foundations, human-in-the-loop checkpoints, and metrics that map directly to business outcomes. Without these, even the most advanced model remains a science project.

Redefining Human and Machine Productivity

Public debate often frames automation as a zero-sum contest between people and machines. The reality is subtler. Agentic AI is redefining how humans work, not whether they work.

Developers now orchestrate AI copilots that generate and test code. Analysts guide systems that surface anomalies instead of manually combing through spreadsheets. Customer-service agents handle empathy while machines resolve routine transactions. In healthcare, machine-learning agents summarize patient histories so clinicians can focus on care quality. Across logistics and engineering, agentic workflows compress software release cycles and reduce error rates.

In every case, productivity gains come from collaboration, not substitution. The challenge lies in cultivating trust – training employees to question outputs, monitor decisions, and treat intelligent systems as teammates with both limits and strengths. The most effective teams build “explain-as-you-go” feedback loops and clear escalation paths to keep humans meaningfully in the loop.

What Early Adopters Are Teaching Us

The organizations achieving measurable results share several traits. First, they start small, with clearly defined processes like maintenance scheduling or invoice reconciliation, where outcomes and tolerances are well understood. They instrument those workflows with metrics executives already recognize: downtime, return rates, and mean time to repair.
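
As one illustration of instrumenting a workflow with a familiar metric, mean time to repair can be computed as total repair time divided by the number of repairs. The sketch below assumes each incident is recorded as a pair of timestamps (failure detected, service restored); the function name and the sample data are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Incident = Tuple[datetime, datetime]  # (failure detected, service restored)


def mean_time_to_repair(incidents: List[Incident]) -> timedelta:
    """Average elapsed time between detection and restoration."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum(
        (restored - detected for detected, restored in incidents),
        timedelta(),  # start value so the sum stays a timedelta
    )
    return total / len(incidents)


# Made-up sample data: one 2-hour repair and one 4-hour repair.
sample = [
    (datetime(2025, 1, 6, 8, 0), datetime(2025, 1, 6, 10, 0)),
    (datetime(2025, 1, 9, 13, 0), datetime(2025, 1, 9, 17, 0)),
]
mttr = mean_time_to_repair(sample)  # timedelta(hours=3)
```

Tracking the same figure before and after an agent takes over part of the workflow gives executives a like-for-like comparison.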

Second, they invest in data readiness. Most “failed” agentic projects are not model failures but data-governance failures, stemming from unstructured content, missing metadata, or disconnected knowledge repositories. As Business Insider recently noted, AI success increasingly depends not on model sophistication but on data integrity; poor, unstructured data and weak retrieval pipelines remain the biggest bottlenecks.

Finally, they enforce continuous evaluation. Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027 due to unclear value or safety risks. Enterprises that monitor agent behavior, run red-team simulations, and integrate feedback loops are better positioned to avoid that outcome. In short, they treat AI as living software, not a static product.

Governance as Competitive Advantage

As autonomy rises, so does accountability. A responsible governance stack includes policy taxonomies aligned to the NIST AI Risk Management Framework and ISO/IEC 42001, pre-production testing for prompt injection and tool-use abuse, runtime anomaly detection, and structured post-incident learning.
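
Runtime anomaly detection can start very simply. The sketch below flags an agent whose activity in the current window (for example, tool calls per minute) deviates from its recent baseline by more than a chosen number of standard deviations. The function name, the z-score approach, and the default limit of 3.0 are illustrative assumptions; production systems typically combine several signals.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(
    baseline: Sequence[float],
    observed: float,
    z_limit: float = 3.0,  # hypothetical default; tune per workload
) -> bool:
    """Return True when `observed` sits more than `z_limit` sample
    standard deviations away from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two observations")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is unusual.
        return observed != mu
    return abs(observed - mu) / sigma > z_limit


# Made-up baseline: tool calls per minute over recent windows.
recent_calls = [10, 12, 11, 9, 10, 11, 12, 10]
```

A flagged window would then feed the escalation and post-incident steps in the governance stack instead of silently blocking the agent.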

Governance, however, is more than compliance. It is the foundation of trust for both customers and employees, who must rely on machine partners. When people understand the boundaries of automation and see how their feedback improves the system, adoption accelerates naturally.

This ethos is increasingly reflected in the emergence of enterprise-wide transformation frameworks, approaches that embed governance and measurement into every phase of AI adoption. These playbooks align technical execution with business strategy, ensuring that AI is not just deployed, but operationalized responsibly and at scale. It’s a signal that the enterprise AI era is maturing from isolated innovation labs to integrated systems of record.

The Path Toward AI-Native Enterprises

The term AI-native describes organizations that embed intelligence into every layer of operation, from strategy and workflow design to product delivery. In these enterprises, data flows seamlessly, automation scales safely, and decisions are continuously informed by both human judgment and machine reasoning.

Becoming AI-native doesn’t happen overnight. It demands re-architecting technology stacks, modernizing data ecosystems, and fostering a culture of experimentation. It also requires restraint: knowing when not to automate, when to keep humans firmly in the loop, and when to pause for ethical review.

Some organizations are formalizing this journey through structured transformation frameworks that unify advisory, engineering, and delivery practices. These integrated models, which pair human critical thinking with agentic automation, help enterprises move from experimentation to execution. The result is a measurable, repeatable path toward embedding intelligence across functions.

If the last decade was about digitization, the next will be about autonomization. The enterprises that master this transition will discover that AI’s most significant value lies not in faster answers, but in better actions, executed responsibly, at scale, and in partnership with the people who understand the business best.

Looking Ahead

Agentic AI is not a marketing term or a magic cure. It represents the next logical step in the evolution of enterprise automation. The leaders of this era will be those who view AI not as a bolt-on capability but as a disciplined craft, anchored in engineering rigor, guided by ethics, and animated by human creativity.

Models will continue to improve. Regulations will mature. Frameworks will evolve. But the enduring differentiator will remain the same: the ability to translate intelligence into impact responsibly. When that balance is achieved, we stop chasing the future of AI and start building it, one trustworthy agent at a time.