In an increasingly connected world, AI is no longer limited to big data centers. It is making its way to the edges of our networks, enabling smart sensors in factories and self-driving cars that navigate city streets. The transition to ‘edge computing’ offers immediate insights and real-time decision-making; however, it presents a considerable challenge: how can we fit robust AI models onto small, resource-constrained devices? The solution, unexpectedly, may lie in the mysterious domain of quantum mechanics.

Envision a future in which AI models, previously too vast and intricate for anything other than the most potent cloud servers, operate effortlessly on your smartphone, a drone, or even a smart appliance. This is not science fiction; it is the potential of quantum-enhanced AI model compression, an innovative method that could transform the deployment of intelligence within hybrid cloud architectures.

The Squeeze: The Necessity of AI Miniaturization for Edge Computing

The advantages of edge computing are evident. Processing data locally reduces the latency and bandwidth costs of sending information to a remote cloud. This is crucial for applications where every millisecond matters, such as self-driving vehicles responding to unexpected obstacles or medical devices monitoring a patient’s vital signs. However, there is a catch. The AI models that drive these applications are typically colossal, trained on extensive datasets and requiring substantial computational resources.

Figure 1: An artistic depiction of quantum computing, suggesting the sophisticated technology capable of addressing the AI compression challenge.

Consider the problems that come up when you try to fit a supercomputer into a small space. Edge devices are small, run on tight power budgets, and have limited memory and processing power. Modern AI models cannot easily get the compute and energy they need on such hardware. This is where “model compression” becomes essential. The goal is to make AI models as small as possible while preserving their ability to reason. Established classical methods have already delivered big improvements, notably pruning (removing unnecessary connections in neural networks) and quantization (lowering the numerical precision of calculations). However, these methods usually force a compromise between size and intelligence. Excessive compression undermines the model’s accuracy, making it unsuitable for critical tasks.
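The two classical techniques mentioned above can be illustrated with a minimal sketch. It assumes a flat list of weights and uses hypothetical helper names (`magnitude_prune`, `quantize_int8`, `dequantize`); real frameworks apply the same ideas per layer and with more care.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    if k == 0:
        return list(weights)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


# Illustrative weights only; not taken from any real model.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)
```

The trade-off described above is visible here: a higher `sparsity` or a coarser integer range shrinks the model further, but `restored` drifts further from the original `weights`.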

Quantum’s Whisper: An Innovative Epoch of Compression

Enter quantum algorithms, not as replacements for classical computing, but as a significant addition to our compression arsenal. Although fully developed quantum computers are still at an early stage, the principles of quantum mechanics are already informing new approaches to AI model compression. These “quantum-inspired” algorithms run on classical hardware and borrow ideas such as superposition and entanglement to find more efficient ways of representing and processing information.

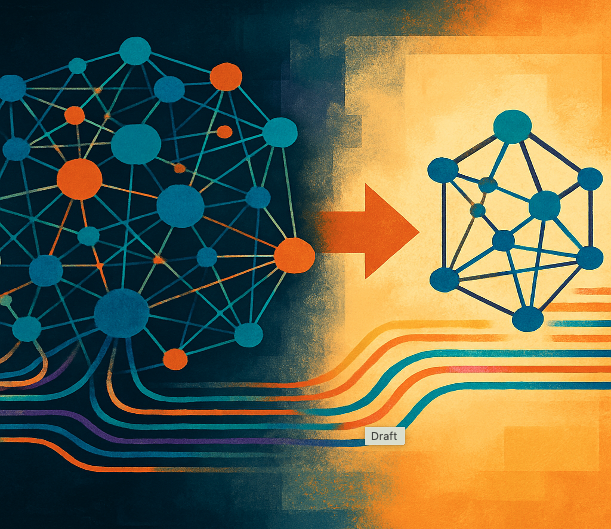

Figure 2: An artistic representation of AI model compression, illustrating a complex network being optimized into a more efficient structure.

Think of a neural network in which each connection holds not a single value but a blend of several values at once. Such a representation could be much richer and, at the same time, more compact. Or consider quantum annealing, a method that explores many candidate solutions at once to identify the most effective strategy for pruning a model or for factorizing the large matrices inside it. These techniques aim to go beyond the limits of conventional compression methods, shrinking AI models further while preserving, or potentially even improving, their accuracy.
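To make the annealing idea concrete, here is a hedged sketch that poses pruning as a binary optimization problem of the kind quantum annealers accept: choose a keep/drop mask that maximizes total weight importance while keeping exactly `budget` weights. Because no quantum hardware is assumed, the solver below is classical simulated annealing standing in for a quantum annealer. The importance scores, the penalty value, and the function names are all illustrative assumptions.

```python
import math
import random


def pruning_energy(mask, importance, budget, penalty):
    """Lower is better: reward kept importance, penalize missing the budget."""
    kept = sum(mask)
    reward = sum(s * m for s, m in zip(importance, mask))
    return -reward + penalty * (kept - budget) ** 2


def anneal_mask(importance, budget, penalty=2.0, steps=5000,
                t_start=2.0, t_end=0.01, seed=0):
    """Classical simulated annealing over binary keep/drop masks."""
    rng = random.Random(seed)
    mask = [0] * len(importance)  # start with everything pruned
    energy = pruning_energy(mask, importance, budget, penalty)
    best_mask, best_energy = mask[:], energy
    for step in range(steps):
        # Geometric cooling schedule from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / max(steps - 1, 1))
        i = rng.randrange(len(mask))
        mask[i] ^= 1  # propose flipping one keep/drop bit
        new_energy = pruning_energy(mask, importance, budget, penalty)
        delta = new_energy - energy
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            energy = new_energy  # accept the move
            if energy < best_energy:
                best_mask, best_energy = mask[:], energy
        else:
            mask[i] ^= 1  # reject the move and restore the bit
    return best_mask, best_energy


# Hypothetical per-weight importance scores (for example, magnitudes).
importance = [0.9, 0.1, 0.5, 0.05, 0.7, 0.2]
mask, energy = anneal_mask(importance, budget=3)
```

The energy function is quadratic in the binary variables, which is the form (often called a QUBO) that annealing hardware is designed to minimize. On a problem this small an exhaustive search would also work; annealing becomes interesting when the number of weights makes enumeration impossible.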

This is not only about reducing model size; it is also about making models more intelligent and resilient. Quantum-inspired compression may produce models that are lightweight, more resilient to noise, and more efficient in how they learn. In turn, this could mean shorter training times, better predictive accuracy, and ultimately more robust AI applications capable of flourishing at the edge.

The Hybrid Cloud Convergence: Where Edge Integrates with Cloud through Quantum Elegance

The future of AI is not a choice between cloud and edge computing. The hybrid cloud is about getting the two to work well together. In this design, companies train AI models and analyze complex data in centralized cloud data centers, where processing power is plentiful, and then make quick, real-time decisions at the edge using compressed, optimized models.

Figure 3: An artistic depiction of edge computing, demonstrating the processing of data in proximity to its source, thereby enhancing cloud infrastructure.

Quantum-enhanced AI model compression serves as an effective intermediary within this hybrid ecosystem. Consider a sophisticated AI model trained on cloud infrastructure with its substantial resources. Once this large model has been refined, quantum-inspired algorithms can compress it into a compact format. The compressed model can then be deployed on edge devices, allowing them to perform intelligent functions without continuous cloud connectivity.
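The hand-off from cloud to edge can be sketched as a small serialization step. The example below is an assumption-laden illustration, not a real deployment format: it packs weights as 8-bit integers behind a hypothetical 8-byte header (a float32 scale and a uint32 count) and unpacks them on the device. Plain 8-bit quantization stands in for whatever compression step, quantum-inspired or otherwise, produced the small model.

```python
import struct
from array import array


def pack_for_edge(weights):
    """Cloud side: quantize to int8 and serialize with a small header."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    codes = array("b", (round(w / scale) for w in weights))
    # Header: float32 scale followed by uint32 weight count, little-endian.
    return struct.pack("<fI", scale, len(codes)) + codes.tobytes()


def unpack_on_edge(payload):
    """Edge side: read the header and rebuild approximate float weights."""
    scale, count = struct.unpack_from("<fI", payload, 0)
    codes = array("b")
    codes.frombytes(payload[8:8 + count])
    return [c * scale for c in codes]


# Illustrative weights standing in for a cloud-trained model.
cloud_weights = [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
payload = pack_for_edge(cloud_weights)
edge_weights = unpack_on_edge(payload)

float32_bytes = len(cloud_weights) * 4  # size if shipped as float32
compressed_bytes = len(payload)         # header plus one byte per weight
```

For a model with millions of weights the fixed header is negligible, so the payload approaches one quarter of the float32 size, at the cost of a small rounding error in each weight.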

This synergy establishes a robust feedback loop. Data gathered by compressed AI models deployed at the edge can be transmitted to the cloud for additional analysis and to enhance the original, larger models. The ongoing cycle of learning and implementation helps AI systems get better over time, making them more accurate and efficient. With quantum compression, the hybrid cloud combines the power of cloud computing for deep learning with the speed of edge computing for real-time intelligence.

The Horizon: A Future Defined by Quantum-Enhanced Artificial Intelligence

The path to genuinely omnipresent and intelligent AI is marked by innovation, and quantum-enhanced AI model compression signifies a substantial advancement. This technology facilitates the deployment of advanced AI models on resource-limited edge devices, thereby unlocking new opportunities across all industries. The effects will be significant, ranging from intelligent urban environments and enhanced manufacturing processes to individualized healthcare and genuinely autonomous systems.

As quantum research advances, we can expect increasingly potent and efficient compression techniques, further blurring the line between cloud capabilities and edge computing potential. The future of AI is not merely about larger, more intricate models; it is about more intelligent and efficient systems, driven by the profound yet subtle principles of quantum mechanics. This revolution is not merely on its way; it is already beginning to transform our world.

Bio: Milankumar Rana is a distinguished Software Engineer Advisor and Senior Cloud Engineer at FedEx. Rana is also an IEEE Senior Member and has contributed many other articles on cloud computing.