The data contradict everything the business press is preaching. Headlines celebrate companies undertaking massive AI transformations, but the best returns often belong to companies rejecting the greatest number of AI initiatives.

They’re not Luddites. They’re instead practicing strategic AI rejection: the sophisticated ability to know when to say no.

Strategic AI rejection comes from understanding how AI actually works—not the surface-level “AI can do amazing things” that fills keynote speeches, but the kind of understanding that reveals why most AI initiatives fail, why vendor promises rarely survive production, and why waiting often beats rushing.

The Magic Eight Ball Syndrome

Picture a Fortune 500 boardroom where executives approve $50 million AI initiatives under the same time pressure they’d apply to routine cap-ex. They’re essentially shaking a magic eight ball.

They might get lucky with good outcomes, but they’re not making good decisions. They’re gambling their companies’ futures on technologies they don’t really understand.

In fairness, these executives are working under pressure: industry reports showing AI leaders pulling ahead by 20–30%, investors asking pointed questions about AI strategy, and genuine success stories from early adopters. The pressure to act is real. The problem is that the pressure is suffocating strategic thinking.

The solution is to understand the technology—not enough to code neural networks or fine-tune language models, but just enough to cut through vendor hype and evaluate real business impact.

Executives with this understanding can steer their companies to success. Watch what they do and you’ll see them evaluating every opportunity through three lenses: Leverage, Evaluation, and Delay. I’ll discuss these in order.

The Leverage Lens: Moats versus Mimicry

“Does this create a moat or just match the baseline?”

This is the first strategic question that comes to mind when you understand how AI models actually work. When you grasp that models trained on public data converge toward similar capabilities, you realize that using OpenAI’s API gives you the same tools as everyone else.

Consider two logistics companies. One implements an off-the-shelf chatbot for customer service—the same solution available to every competitor. The other uses twenty years of proprietary data to train routing algorithms that no one else can replicate.

Both claim to be AI-powered. Only one has leverage.

Real AI leverage comes from marrying unique data to a unique application. If you’re implementing the same large language model as your competitors for the same use cases, you’re buying baseline capability. That capability might be necessary (like having a website in 2025), but it isn’t differentiating.

Models trained on public data and used for common applications become commodities with shocking speed. A company saying no to these initiatives isn’t falling behind. It’s instead preserving capital for opportunities where its unique assets can create genuine differentiation.

If your AI strategy is, “We’ll use ChatGPT for content,” you don’t have a real strategy. You’re just doing what everyone does. As a result, you’ll accumulate mediocre solutions that consume resources while only delivering marginal value, and eventually watch competitors pull ahead—not because they adopted more AI, but because they adopted the right AI for their unique advantages.

The Evaluation Lens: Demo versus Production

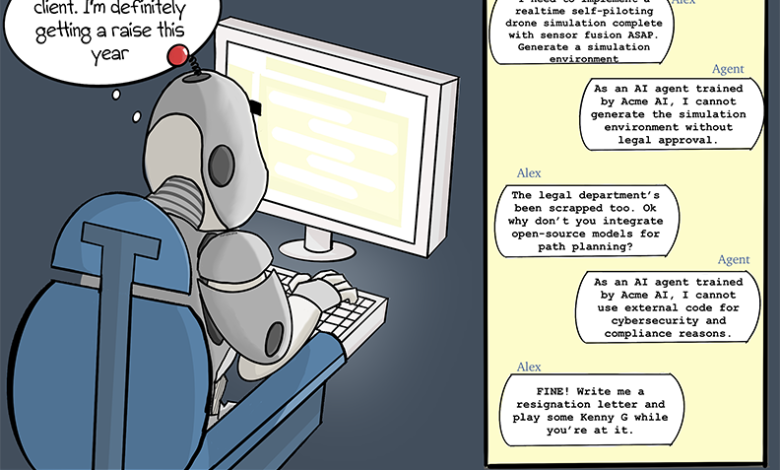

Every AI vendor has a compelling demo. But demos lie—not through malice, but through wishful thinking that tries to bridge the gap between controlled demonstrations and chaotic reality.

Executives who understand AI take an almost forensic approach to vendor evaluation. They don’t want success metrics (everyone has those). They want failure rates, error cascades, and recovery procedures.

They understand that AI models interpolate brilliantly within their training data but fail catastrophically outside it. That knowledge breeds skepticism. They ask about edge cases immediately: “What happens when your system encounters something outside its training distribution?” In other words, “What happens when it breaks?”

When you know that models perform brilliantly on clean data but degrade rapidly with real-world noise; when you grasp that confidence scores can be dangerously miscalibrated; when you comprehend the true computational costs of inference at scale—when you understand all these things, you ask different questions. The answers reveal vendor pricing to be either appropriate or predatory.
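One way to make “dangerously miscalibrated” concrete is to compare what a system claims with what it delivers. The sketch below computes a simple expected calibration error: predictions are grouped into confidence bins, and the gap between average stated confidence and observed accuracy is averaged across bins. The function name, the bin count, and the sample numbers are illustrative assumptions, not figures from any real vendor.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and observed accuracy."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so a confidence of exactly 1.0 lands in the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece


# Hypothetical pilot data: the system reports 95% confidence on every answer
# but is right only 6 times out of 10.
claimed = [0.95] * 10
was_right = [True] * 6 + [False] * 4
gap = expected_calibration_error(claimed, was_right)
print(f"calibration gap: {gap:.2f}")
```

A gap near zero means the confidence scores can be taken roughly at face value. A large gap, like the one in this made-up sample, is a reason to ask the vendor how the scores were validated on data that resembles yours.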

The build-versus-buy fantasy deserves its own obituary. Hearing executives confidently announce they’ll “train their own models” is like hearing someone confidently announce they’ll build their own semiconductor fab. Those who understand AI’s technical reality—the data requirements, computational costs, and expertise needed—know that for 99% of companies, training custom models is setting money on fire while chanting, “Innovation!” They say no to these initiatives not from conservatism, but from comprehension.

The Delay Lens: Timing versus Scrambling

Recognizing when strategic delay beats early adoption isn’t procrastination but pattern recognition. In many domains, AI’s capabilities are accelerating faster than Moore’s Law, doubling in months rather than years, while costs collapse just as quickly. Model performance tends to leap in discrete jumps, and costs don’t decline gradually but fall off cliffs, often by 80–90% within a year.

In early 2023, rolling out real-time call transcription required a mid-six-figure custom automatic speech recognition (ASR) build and six months of tuning. By mid-2024, the same capability needed only a two-week Whisper-API integration that cost pennies per minute. The firms that waited just twelve months conserved capital and still launched a sharper product.

The executives who understand these dynamics time their moves like seasoned surfers reading waves. They know that today’s $5 million custom solution becomes tomorrow’s $5 API call. Their waiting isn’t stagnation, but strategy.

It’s true that some markets reward first movers who shape customer expectations. And sometimes data advantages compound: start late, and you’ll never catch up. Sophisticated executives know these things. They know that in winner-takes-all markets or markets in which network effects dominate, the cost of waiting can be existential.

They know the right move isn’t always to wait. They know the difference between strategic delay and surrendered advantage. They know that when implementation costs are dropping faster than competitive advantages are accruing, then patience pays. And they know that when the opposite is true, moving fast matters more than moving smart.

But sophisticated executives also know that waiting often wins if current solutions require extensive customization for basic functionality, if vendors can’t articulate ROI beyond vague “efficiency gains,” or if their own technical teams are more excited than their business teams. Fundamentally, sophisticated executives know that what’s possible isn’t always practical, and this teaches them to avoid expensive scrambling and embrace strategic delay.
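The trade-off between falling costs and accruing advantage can be put into rough numbers. The sketch below is a deliberately simplified model, with hypothetical parameter names and made-up figures: it assumes implementation cost declines at a steady annual rate and that the advantage forgone by waiting accrues linearly. Neither assumption holds in winner-takes-all or network-effect markets, where the cost of waiting can be far larger.

```python
def waiting_pays(cost_today, annual_cost_decline, annual_advantage_value,
                 years_waited=1.0):
    """True when expected savings from waiting exceed the advantage forgone.

    cost_today: implementation cost if you build now
    annual_cost_decline: fraction by which that cost falls per year (0.8 = 80%)
    annual_advantage_value: value you expect to gain per year by acting now
    """
    cost_later = cost_today * (1 - annual_cost_decline) ** years_waited
    savings_from_waiting = cost_today - cost_later
    advantage_forgone = annual_advantage_value * years_waited
    return savings_from_waiting > advantage_forgone


# Hypothetical case 1: costs fall 80% a year and the early-mover edge is modest.
print(waiting_pays(5_000_000, 0.80, 1_000_000))   # waiting looks attractive

# Hypothetical case 2: same cost curve, but the early-mover edge is large.
print(waiting_pays(5_000_000, 0.80, 10_000_000))  # moving now looks attractive
```

The value of the exercise lies less in the output than in forcing both estimates onto the table. If nobody can put a number on the advantage of moving first, that is itself a signal.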

Leverage, Evaluation, and strategic Delay—these three lenses form what I call the L.E.D. framework. When an AI opportunity lights up all three, it’s likely worth pursuing. Otherwise, it’s likely worth rejecting.
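As a minimal sketch, the framework reduces to a three-part checklist. The class and field names below are illustrative assumptions. Each flag is a judgment call made by people, and the code only records the rule that all three lenses must light up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LedAssessment:
    leverage: bool    # unique data married to a unique application
    evaluation: bool  # vendor claims held up under failure-mode scrutiny
    delay: bool       # timing favors acting now rather than waiting

    def unlit_lenses(self):
        """Names of the lenses that did not light up."""
        flags = {
            "leverage": self.leverage,
            "evaluation": self.evaluation,
            "delay": self.delay,
        }
        return [name for name, lit in flags.items() if not lit]

    def recommendation(self):
        """All three lenses lit: likely pursue. Otherwise: likely reject."""
        if not self.unlit_lenses():
            return "likely worth pursuing"
        return "likely worth rejecting"


# Hypothetical initiative: strong data moat, solid vendor, but costs are
# expected to collapse within the year.
chatbot = LedAssessment(leverage=True, evaluation=True, delay=False)
print(chatbot.recommendation(), chatbot.unlit_lenses())
```

Listing the unlit lenses matters more than the verdict, because it tells the team what would have to change before the initiative deserves another look.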

AI Invisibility & Steps to Sophistication

The more deeply a company understands AI, the more selectively it uses the technology, the more initiatives it rejects, and the better its results. The best result is AI invisibility.

When AI works properly, it disappears into the background, like electricity or running water. The models settle into the technology stack alongside databases and message queues. Just as boards no longer debate “database strategies,” they endorse business plans that simply assume AI is working quietly in the background.

Companies that say no to what’s flashy-but-flawed preserve resources and organizational focus for implementations that truly matter. They understand that the best AI strategy might be invisible to outsiders but transformative to operations.

Reaching AI invisibility requires the sophistication to reject the wrong initiatives along the way. Developing that sophistication requires deliberate practice:

Learn the vendor lexicon. “AI-powered” often means “We added a chatbot.” “Proprietary model” usually means “We fine-tuned GPT and tripled the price.” “Enterprise-ready” sometimes means “It works if you don’t push it too hard.”

Design experiments that can fail. Insist on contained tests with pre-defined kill criteria—not pilots designed to succeed, but real experiments with clear abandonment conditions. Define what failure looks like before you start, then have the courage to pull the plug when you see it.

Grasp the failure modes. Understand training data bias, distribution shift, and how miscalibrated confidence scores can undermine your ability to evaluate a system. If you know how AI systems break, you’ll ask better questions about how those systems work.

Develop market timing intuition. Study AI’s development patterns. Learn to recognize when capabilities are stabilizing versus evolving rapidly, and when being six months late to a stable solution beats being six months early to an expensive beta test.
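Of these practices, pre-defined kill criteria are the easiest to write down before a pilot starts. The sketch below shows one possible shape: each criterion names a metric, a threshold, and a direction, and the pilot stops if any threshold is breached. The metric names and thresholds are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KillCriterion:
    metric: str
    threshold: float
    higher_is_better: bool

    def is_breached(self, observed):
        """A criterion is breached when the observed value is on the wrong side."""
        if self.higher_is_better:
            return observed < self.threshold
        return observed > self.threshold


def breached_criteria(criteria, observations):
    """Return the metrics that crossed their kill thresholds.

    Raises KeyError if a metric was never measured, so a missing
    measurement cannot pass silently.
    """
    return [c.metric for c in criteria if c.is_breached(observations[c.metric])]


# Hypothetical criteria, agreed before the pilot begins.
criteria = [
    KillCriterion("task_accuracy", 0.90, higher_is_better=True),
    KillCriterion("cost_per_ticket_usd", 2.00, higher_is_better=False),
]

# Hypothetical results after the agreed test window.
results = {"task_accuracy": 0.84, "cost_per_ticket_usd": 1.50}
failed = breached_criteria(criteria, results)
print("kill the pilot" if failed else "continue", failed)
```

Writing the thresholds down in advance is the point. Once results are in, the only question left is whether anyone has the courage to act on them.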

The New Competitive Edge

The business press peddles a simple story: adopt AI everywhere or face extinction. The reality is more complex and more profitable.

The current AI landscape resembles a gold rush, complete with fortune seekers, snake oil salesmen, and a few people getting genuinely rich. The consistent winners in historic gold rushes weren’t usually the miners. They were the people who knew enough about geology to distinguish pyrite from gold. The same is true today.

The companies winning with AI aren’t the ones saying yes to everything. In today’s AI gold rush, rejecting the wrong initiatives is worth more than approving every one, and the winners are the companies with the sophistication to distinguish initiatives that matter from ones that don’t.

That sophistication isn’t born of vague hope in the promise of AI. It’s born of understanding: knowing how AI works—its capabilities, its limitations, and its evolutionary trajectory. That understanding is what transforms executives from gamblers into successful, fortune-finding strategists.