The convergence of artificial intelligence and cloud-based data platforms is fundamentally reshaping how organizations approach data architecture. As we progress through 2025, the traditional boundaries between data warehousing, analytics, and AI are dissolving, creating unprecedented opportunities for enterprises to harness their data assets. This transformation isn’t merely technological—it represents a paradigm shift toward intelligent, self-optimizing data ecosystems that can adapt, learn, and evolve alongside business needs.

AI-Enhanced Data Warehouse Architectures Move Beyond Traditional Limitations

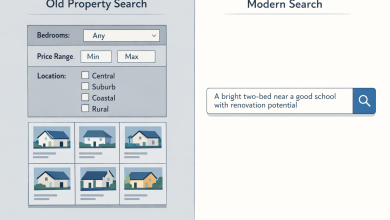

Traditional data warehouses, while powerful, face significant limitations in today’s data-driven landscape. AI-powered data warehousing is no longer a futuristic concept. It is a necessity for businesses aiming to stay competitive, agile, and data-driven. The integration of AI fundamentally transforms how data warehouses operate, moving from reactive to predictive systems that anticipate needs and optimize performance autonomously.

Modern AI-enhanced architectures leverage machine learning algorithms to optimize query performance, automate data quality management, and enable predictive resource allocation. AI and ML can analyze data usage and determine the best ways to optimize data storage. For example, AI can identify duplicated or redundant data and automatically delete it, freeing up space. This intelligent automation extends beyond simple optimization: it fundamentally reimagines how data flows through organizational systems.
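To make the duplicate-detection idea concrete, here is a minimal sketch in Python. It uses exact content hashing rather than a learned model, so it is a deterministic stand-in for the detection step, not how any particular warehouse does it. The function names and sample rows are hypothetical.

```python
import hashlib
import json


def row_fingerprint(row: dict) -> str:
    """Hash a row's content so rows with identical values share a fingerprint."""
    # Sorting keys makes the hash independent of column order.
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_duplicates(rows: list) -> tuple:
    """Return (unique rows in first-seen order, indexes of redundant rows)."""
    seen = set()
    unique, duplicate_indexes = [], []
    for index, row in enumerate(rows):
        fingerprint = row_fingerprint(row)
        if fingerprint in seen:
            duplicate_indexes.append(index)
        else:
            seen.add(fingerprint)
            unique.append(row)
    return unique, duplicate_indexes


# Hypothetical sample data: the third row repeats the first with keys reordered.
rows = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": "b@example.com"},
    {"email": "a@example.com", "customer_id": 1},
]
unique_rows, duplicate_indexes = find_duplicates(rows)
print(len(unique_rows), duplicate_indexes)
```

In practice, flagged rows would usually be reviewed or archived before deletion, since an exact match on content does not always mean the record is redundant for the business.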

The semantic layer has emerged as a critical component, acting as a bridge between raw data and business context. A semantic layer takes a metadata-first approach and is becoming an essential component of modern data architectures, enabling organizations to simplify data access, improve consistency, and enhance data governance. Because business definitions live in metadata rather than in individual queries, AI systems can understand not just what data exists, but what it means in business terms.
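A metadata-first semantic layer can be sketched as a small registry that maps business terms to their physical definitions. The example below is illustrative only; the metric name, table, and expression are hypothetical and do not correspond to any specific product's semantic model format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Business-facing definition of a metric, stored as metadata."""
    name: str
    description: str
    table: str
    expression: str


# Hypothetical registry: one governed definition per business term.
SEMANTIC_LAYER = {
    "monthly_revenue": Metric(
        name="monthly_revenue",
        description="Sum of paid order amounts per calendar month",
        table="analytics.orders",
        expression="SUM(amount_usd)",
    ),
}


def compile_metric(metric_name: str, group_by: str) -> str:
    """Translate a business term into SQL using the shared definition."""
    metric = SEMANTIC_LAYER[metric_name]
    return (
        f"SELECT {group_by}, {metric.expression} AS {metric.name} "
        f"FROM {metric.table} GROUP BY {group_by}"
    )


query = compile_metric("monthly_revenue", "order_month")
print(query)
```

Every consumer, whether a dashboard or an LLM-based assistant, asks for `monthly_revenue` by name and gets the same SQL, which is where the consistency and governance benefits come from.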

Practical Implementation in Snowflake Environments

Snowflake has positioned itself at the forefront of AI-integrated data platforms, offering a comprehensive suite of AI capabilities that demonstrate practical implementation pathways. Snowflake enables AI on governed data, simplifying unstructured data processing, gen AI app development, and model training with minimal operational overhead. The platform’s approach to AI integration showcases how organizations can implement advanced capabilities without completely overhauling existing infrastructure.

The Snowflake Cortex suite exemplifies practical AI implementation. Its capabilities range from LLMs that understand unstructured data, answer freeform questions, and provide intelligent assistance, to automated ML functions that democratize advanced analytics. Organizations can now process text documents using SQL functions powered by LLMs from leading providers, eliminating the complexity of external API integrations while maintaining data security and governance.
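As a rough illustration of what "SQL functions powered by LLMs" looks like, the sketch below builds a query that calls two Cortex functions, `SNOWFLAKE.CORTEX.SENTIMENT` and `SNOWFLAKE.CORTEX.SUMMARIZE`. The table `support_tickets` and column `ticket_text` are hypothetical, and function names and availability should be checked against current Snowflake documentation for your account and region. The code only builds the SQL string; running it would require a Snowflake connection.

```python
def build_cortex_query(table: str, text_column: str, limit: int = 10) -> str:
    """Build SQL that scores and summarizes a text column inside Snowflake.

    The table and column names are assumed to be trusted constants here;
    they are interpolated directly and must not come from user input.
    """
    return (
        "SELECT\n"
        f"    {text_column},\n"
        f"    SNOWFLAKE.CORTEX.SENTIMENT({text_column}) AS sentiment_score,\n"
        f"    SNOWFLAKE.CORTEX.SUMMARIZE({text_column}) AS summary\n"
        f"FROM {table}\n"
        f"LIMIT {int(limit)}"
    )


# Hypothetical table and column, used only for illustration.
cortex_sql = build_cortex_query("support_tickets", "ticket_text", limit=5)
print(cortex_sql)
```

Because the functions run inside the warehouse, the text never leaves the governed environment, which is the point the paragraph above makes about avoiding external API integrations.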

Performance Improvements and Cost Optimization Show Real-World Results

The implementation of AI in data platforms delivers measurable business value through both performance improvements and cost optimization. TS Imagine implemented generative AI at scale with Snowflake Cortex AI, cutting costs by 30% and saving 4,000 hours previously spent on manual tasks. These results demonstrate that AI integration isn’t just about technological advancement; it’s about delivering tangible business outcomes.

Performance improvements manifest in multiple dimensions. On the model side, quantization typically reduces model size by 75-80% with minimal accuracy loss (usually under 2%), which makes it well suited to edge devices, while automated optimization techniques can deliver significant speed improvements. On the query side, organizations report performance improvements of 20-50% through AI-driven optimization, alongside substantial reductions in manual effort and operational overhead.
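The lower end of the quoted size reduction follows from simple arithmetic: storing each weight in 8 bits instead of 32 removes three quarters of the bytes. The sketch below shows that calculation alongside a toy symmetric int8 quantizer. The weights are made-up values, and real toolchains use more elaborate schemes (per-channel scales, calibration), so treat this as an illustration of the idea only.

```python
def size_reduction(bits_before: int, bits_after: int) -> float:
    """Fraction of storage saved when each value shrinks to fewer bits."""
    return 1 - bits_after / bits_before


def quantize_int8(values):
    """Symmetric quantization: map floats onto integer codes in [-127, 127]."""
    # Fall back to a scale of 1.0 if every value is zero.
    scale = max(abs(v) for v in values) / 127 or 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to illustrate the round trip.
weights = [0.82, -0.41, 0.05, -1.27, 0.63]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"size reduction: {size_reduction(32, 8):.0%}")
print(f"max round-trip error: {max_error:.6f}")
```

The round-trip error is bounded by half the scale, which is why accuracy loss tends to stay small when weights are spread reasonably evenly across the representable range.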

Cost optimization extends beyond simple resource reduction. The platform’s pay-as-you-go pricing model adjusts to the dynamic demands of AI applications, particularly for resource-heavy use cases such as generative AI (GenAI) and predictive modeling. This flexible pricing model enables organizations to scale AI workloads efficiently while controlling costs—a critical consideration for enterprise adoption.

Roadmap for AI-Augmented Data Platform Transition

Organizations embarking on AI-augmented data platform journeys require a structured approach that balances innovation with operational stability. Well-defined, structured data management practices are the best way to mitigate the limitations that derive from these challenges and to extract the most value from your data. The transition roadmap should prioritize business value delivery while building foundational capabilities for future expansion.

The first phase involves establishing AI-ready infrastructure with proper governance frameworks. Step one is ensuring your infrastructure is based on security and governance for enterprise-grade AI. The next crucial step is observability. Organizations must implement comprehensive monitoring and observability systems before deploying AI capabilities at scale.

The second phase focuses on pilot implementations and proof-of-concept development. Organizations should select high-impact, low-risk use cases that demonstrate clear value while building internal capabilities. The third phase involves scaling successful pilots across the enterprise, leveraging lessons learned to establish best practices and governance frameworks.

Implementation Challenges Require Strategic Solutions

Despite the promise of AI-augmented data platforms, organizations face significant implementation challenges that require careful consideration and strategic planning. By 2028, global data creation is projected to grow to more than 394 zettabytes – and clearly enterprises will have more than their fair share of that. This explosive data growth creates both opportunities and challenges for AI implementation.

Data quality and governance represent persistent challenges in AI implementations. Poor data quality can lead to misguided decisions. Research suggests that bad data costs U.S. businesses $600 billion annually. Organizations must establish robust data quality frameworks before implementing AI capabilities, as AI systems amplify both good and bad data patterns.
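One simple form a data quality framework can take is a gate that measures missing values and blocks downstream AI work when a threshold is exceeded. The sketch below is a minimal example under that assumption; the field names, sample rows, and the 10% threshold are hypothetical and would be set per dataset in practice.

```python
def quality_report(rows, required_fields):
    """Return the share of rows with a missing value for each required field."""
    total = len(rows)
    report = {}
    for field in required_fields:
        missing = sum(1 for row in rows if row.get(field) in (None, ""))
        report[field] = missing / total if total else 0.0
    return report


def passes_gate(report, max_missing_rate):
    """True only if every field stays at or under the allowed missing rate."""
    return all(rate <= max_missing_rate for rate in report.values())


# Hypothetical sample: one blank email and one missing customer_id.
rows = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": None, "email": "d@example.com"},
]
report = quality_report(rows, ["customer_id", "email"])
ready_for_training = passes_gate(report, max_missing_rate=0.10)
print(report, ready_for_training)
```

A fuller framework would add checks for validity, freshness, and consistency across sources, but the pattern stays the same: measure, compare against an agreed threshold, and stop the pipeline when the data falls short.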

Integration complexity poses another significant challenge. There is an increasing need for interoperability between modern data stack infrastructure, such as “traditional” databases, and new AI-infrastructure tools, such as vector databases. Organizations must navigate the complexity of integrating traditional systems with modern AI tools while maintaining operational stability.
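The interoperability problem can be illustrated with a small example that ranks items by vector similarity and then joins the results back to relational records. Here SQLite stands in for the "traditional" database and a plain dictionary stands in for a vector database; the product rows and three-dimensional embeddings are toy values, not output from a real embedding model.

```python
import math
import sqlite3


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Relational side: structured product records (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, "trail shoes", 89.0), (2, "road shoes", 120.0), (3, "rain jacket", 150.0)],
)

# Vector side: toy embeddings keyed by the same product id.
embeddings = {1: [0.9, 0.1, 0.0], 2: [0.8, 0.3, 0.0], 3: [0.0, 0.2, 0.9]}


def search(query_vector, top_k=2):
    """Rank ids by similarity, then fetch matching rows from the database."""
    ranked = sorted(
        embeddings,
        key=lambda pid: cosine_similarity(query_vector, embeddings[pid]),
        reverse=True,
    )[:top_k]
    placeholders = ",".join("?" for _ in ranked)
    rows = conn.execute(
        f"SELECT id, name, price FROM products WHERE id IN ({placeholders})",
        ranked,
    ).fetchall()
    by_id = {row[0]: row for row in rows}
    # Preserve similarity order, which the SQL IN clause does not guarantee.
    return [by_id[pid] for pid in ranked]


results = search([1.0, 0.0, 0.0])
print(results)
```

The shared key (`id` here) is what ties the two systems together. Keeping that key consistent, and keeping embeddings in sync when the relational rows change, is where much of the integration effort tends to go.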

Embracing the AI-Driven Future

The integration of AI with cloud-based data platforms represents more than a technological upgrade. It is a fundamental transformation of how organizations interact with their data. The organizations that successfully implement AI-augmented data architectures will gain significant competitive advantages through improved decision-making, operational efficiency, and innovation capabilities.

Success in this transformation requires a balanced approach that combines technological excellence with organizational change management. By focusing on business value delivery, establishing proper governance frameworks, and building internal capabilities, organizations can navigate the complexities of AI integration while realizing substantial benefits. The future belongs to those who can effectively blend human expertise with AI capabilities, creating data architectures that are not just powerful, but intelligent.