Today’s large language models (LLMs) all share a structural bottleneck: they generate tokens sequentially. One. At. A. Time.

Models such as ChatGPT, Claude, Gemini, and Llama use a statistical method called autoregression, which predicts the next token based on all the preceding ones. This is what’s happening while you watch the response to your query trickle out on your screen. Every token (and there is at least one per word) requires an additional call to the model. Things don’t improve at scale: an answer with a hundred tokens requires a hundred model calls, and one with a thousand requires a thousand.
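The one-call-per-token pattern can be sketched in a few lines of Python. This is a toy illustration only: `toy_next_token` is a hypothetical stand-in for a model's forward pass, not any vendor's API, and the canned reply is made up for the example.

```python
from typing import Callable, List, Tuple

END = "<eos>"  # hypothetical end-of-sequence marker


def toy_next_token(prefix: List[str]) -> str:
    """Hypothetical stand-in for one model call: returns the next token of a canned reply."""
    reply = ["Diffusion", "models", "generate", "in", "parallel", END]
    return reply[min(len(prefix), len(reply) - 1)]


def generate_autoregressive(
    next_token: Callable[[List[str]], str], max_tokens: int = 100
) -> Tuple[List[str], int]:
    """Build a reply one token at a time, counting how many model calls that takes."""
    tokens: List[str] = []
    calls = 0
    while len(tokens) < max_tokens:
        token = next_token(tokens)  # each new token needs its own call
        calls += 1
        if token == END:
            break
        tokens.append(token)
    return tokens, calls


tokens, calls = generate_autoregressive(toy_next_token)
print(len(tokens), "tokens took", calls, "model calls")
```

In this sketch the number of calls grows in lockstep with the length of the answer, which is the bottleneck described above.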

Inception, a Palo Alto-based startup backed by leading venture firms including Menlo Ventures and Mayfield, along with notable angel investors such as Andrew Ng, Andrej Karpathy, and Eric Schmidt, has invented a better way, and it may transform the AI model landscape. The company was co-founded by leading AI researchers who helped invent the diffusion technology that is the standard for AI image and video generation platforms, and it is now pioneering diffusion-based LLMs (dLLMs) with spectacular results.

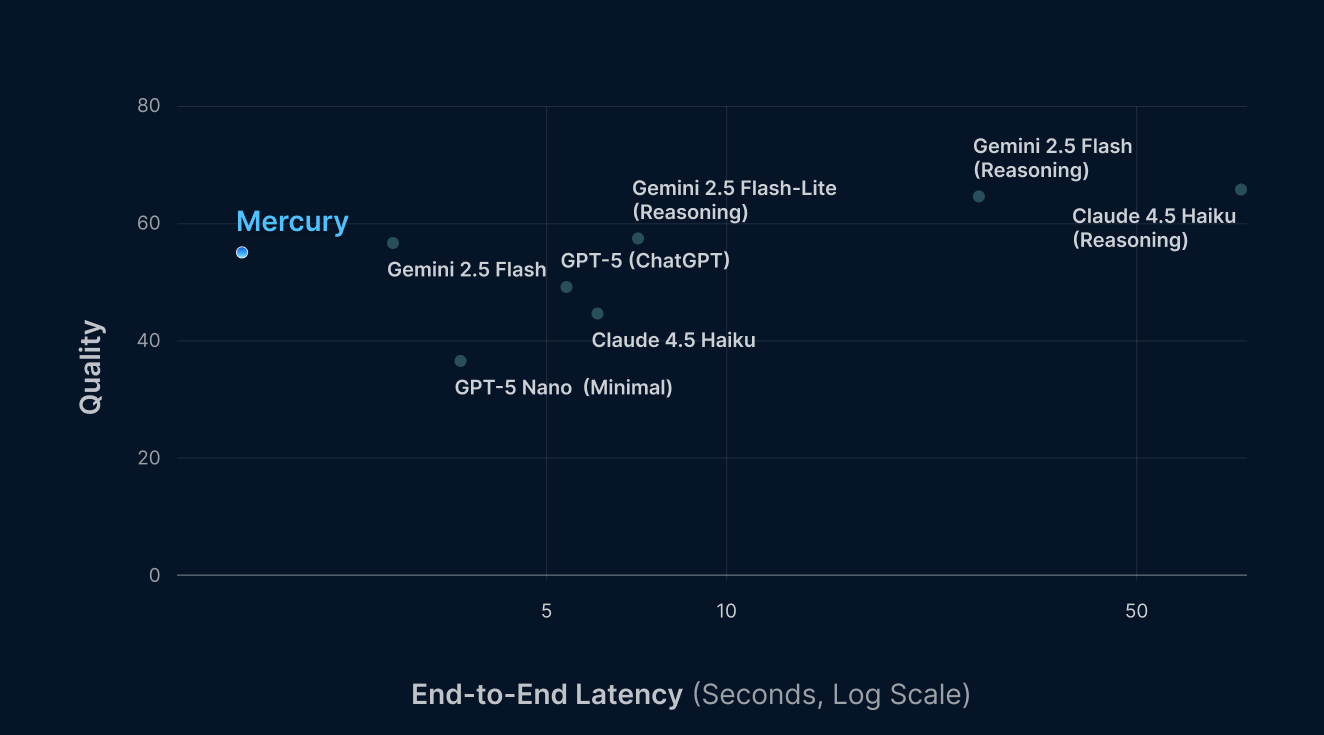

dLLMs employ a fundamentally different approach to generating answers. Rather than predicting tokens one at a time, diffusion models start with pure noise, then refine it via a neural network-based process that iteratively removes the noise until the best answer emerges. Picture a screen of white noise from which a crisp, clear photo-like image emerges. Not a pixel at a time, but all at once. Parallel generation turns out to be a far faster and more efficient way to deliver answers. In many cases, it is up to 10X faster while maintaining best-in-class quality.
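To make the contrast with token-by-token generation concrete, here is a deliberately simplified Python sketch of parallel iterative refinement. It is not Inception's algorithm: it assumes a masked-token stand-in for "noise", a hypothetical `toy_denoiser` that cheats by already knowing the answer, and a made-up rule that commits the most confident guesses at each step. The only point it illustrates is that the number of model calls is set by the number of refinement steps, not by the number of tokens.

```python
import random
from typing import List, Tuple

MASK = "<mask>"  # stand-in for a fully "noisy" position (an assumption for this toy)
TARGET = ["diffusion", "refines", "every", "position", "in", "parallel", "each", "step"]


def toy_denoiser(seq: List[str], rng: random.Random) -> List[Tuple[str, float]]:
    """Hypothetical stand-in for one model call: a (guess, confidence) pair
    for every position at once. A real model would have to learn the guesses."""
    return [(TARGET[i], rng.random()) for i in range(len(seq))]


def generate_by_refinement(length: int, steps: int, seed: int = 0) -> Tuple[List[str], int]:
    """Start fully masked and fill in the sequence over a fixed number of parallel steps."""
    rng = random.Random(seed)
    seq = [MASK] * length
    calls = 0
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        guesses = toy_denoiser(seq, rng)  # one call covers all positions
        calls += 1
        # Commit an even share of the remaining positions, most confident first.
        share = -(-len(masked) // (steps - step))  # ceiling division
        for i in sorted(masked, key=lambda j: guesses[j][1], reverse=True)[:share]:
            seq[i] = guesses[i][0]
    return seq, calls


seq, calls = generate_by_refinement(length=len(TARGET), steps=4)
print(len(seq), "tokens took", calls, "model calls")
```

Here eight tokens are produced in four calls. A token-by-token loop would need at least eight, and the gap widens as answers get longer while the step count stays fixed.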

Diffusion generation, in conjunction with the transformer neural network architecture, is a breakthrough AI technology that powers GenAI applications, such as Sora and Midjourney. But up until now, it has not been possible to use diffusion for language generation. Language is composed of discrete data, such as the 26 letters in the Latin alphabet, whereas the color gradients that comprise photos and videos are continuous. Diffusion models were initially applied to continuous data, which is why they are currently the standard solution for image and video generation.

Applying diffusion to language, however, is much more challenging. Inception’s founders have a track record of working on AI breakthroughs, having played critical roles in the development of technologies such as DPO, FlashAttention, and Decision Transformer while they were at Stanford, UCLA, and Cornell. A few years ago, they began working on applying diffusion to text. They had helped invent diffusion; now they wondered if they could apply it to discrete data. The company’s CEO, Stefano Ermon, co-authored a breakthrough paper on the concept in 2024, and from there, it was a natural next step to start a company based on their insights.

Inception launched Mercury, the world’s first commercially available dLLM, earlier this year. In many use cases, it generates responses up to 10X faster than autoregressive LLMs while maintaining best-in-class quality. Mercury is already being deployed by several Fortune 200 companies. It is finding a home in applications such as software coding and live voice agents, where the latency of LLM-based AI assistants disrupts the user experience. Mercury’s speed delivers near-instant responses, helping users stay in the flow.

None other than Elon Musk is bullish on dLLMs, noting that “there’s a good chance diffusion is the biggest winner overall.” Meanwhile, Mercury’s early momentum is inspiring other AI companies, such as Google, to start their own dLLM efforts. They see that businesses and developers are eager to leverage AI to create instant, in-the-flow experiences for their users and customers, but are stymied by the low speed and high expense of LLMs. Many enterprise AI initiatives get stuck in pilot testing because proceeding to deployment and scaling is just too expensive.

Companies in this situation should investigate dLLMs, such as Inception’s Mercury, as an alternative. Mercury is plug-and-play, API-compatible with most major LLMs, and offers up to a 10X speed improvement at best-in-class quality. dLLMs could be a viable option for creating AI solutions that amaze users and generate revenue. They may just render today’s LLMs obsolete.