Despite massive investments in enterprise AI, many organizations still struggle to realize tangible business value. A common root cause? Poor data integration. While AI models continue to evolve rapidly, the underlying data infrastructure often lags behind: fragmented, siloed, and inconsistent. In this article, we examine why data integration is the hidden weakness of so many AI strategies and what it takes to overcome this challenge at scale.

Why data integration is the hidden barrier in enterprise AI

While AI initiatives often dominate innovation roadmaps, data integration quietly determines their success or failure. Enterprises may invest millions in advanced machine learning models, only to discover that inconsistent, siloed, or inaccessible data undermines their performance. Without a strong integration backbone, AI becomes a house built on sand—promising in theory, unstable in reality.

Seamless access to high-quality, well-structured data is the fuel for any AI engine. Yet most enterprises operate across fragmented systems, from legacy ERPs and on-premise databases to cloud-native apps and third-party platforms. Bridging these disparate environments in real time is not just a technical task—it’s a strategic necessity. And it’s often underestimated until the AI model fails to deliver actionable insight.

True enterprise AI doesn’t start with the model—it starts with the data. More specifically, it starts with making that data accessible, clean, secure, and ready for analysis. Integration is no longer an IT backend concern; it’s a frontline enabler of business value.

The data integration problem: what’s holding enterprise AI back?

Many organizations launch AI initiatives with high expectations, only to see limited returns. Often, the shortfall lies not in the algorithms or the models but in the data foundations they rely on. Below, we explore the key integration challenges that silently keep enterprise AI from reaching its full potential.

Fragmented data sources and legacy systems

Most enterprise environments are a patchwork of technologies accumulated over decades. Legacy systems, third-party platforms, and departmental databases rarely speak the same language. This fragmentation makes it difficult to centralize data or deliver it in real time to AI systems. Extracting meaningful insights becomes a constant battle between outdated infrastructure and modern analytical needs.

What’s more, new cloud-native solutions often operate in silos too—especially when implemented without an overarching integration strategy. The result? AI models trained on incomplete or delayed data, diminishing both accuracy and trust.

Poor data governance, accessibility and quality

Even when data exists, that doesn’t mean it’s usable. Enterprises frequently struggle with inconsistent formats, missing values, outdated records, and unclear ownership. Without strong data governance, it’s impossible to ensure that AI is learning from reliable, unbiased sources.
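The quality problems listed above can be made measurable before any model sees the data. The following is a minimal Python sketch, not a production tool: the field names (`customer_id`, `email`, `updated`), the 365-day staleness threshold and the ISO date convention are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class QualityReport:
    total: int
    missing: dict   # required field -> number of records where it is empty
    stale: int      # records older than the allowed age
    bad_dates: int  # records whose timestamp cannot be parsed as an ISO date


def profile(records, required, updated_field, as_of, max_age_days):
    """Count missing values, stale records and unparseable dates."""
    missing = {name: 0 for name in required}
    stale = bad_dates = 0
    for rec in records:
        for name in required:
            if rec.get(name) in (None, ""):
                missing[name] += 1
        try:
            updated = date.fromisoformat(rec.get(updated_field))
        except (TypeError, ValueError):  # None or a non-ISO string
            bad_dates += 1
            continue
        if (as_of - updated).days > max_age_days:
            stale += 1
    return QualityReport(len(records), missing, stale, bad_dates)


# Example: three customer records with typical defects.
records = [
    {"customer_id": "C-001", "email": "ana@example.com", "updated": "2024-05-01"},
    {"customer_id": "C-002", "email": "", "updated": "2021-01-15"},
    {"customer_id": None, "email": "li@example.com", "updated": "03/01/2024"},
]
report = profile(records, ["customer_id", "email"], "updated",
                 as_of=date(2024, 6, 1), max_age_days=365)
print(report)
# QualityReport(total=3, missing={'customer_id': 1, 'email': 1}, stale=1, bad_dates=1)
```

A report like this gives data owners a concrete number to track, which is a prerequisite for the clear ownership that governance depends on.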

Moreover, teams often face accessibility issues, with data locked in proprietary tools or restricted by security protocols. Without streamlined access, AI projects lose agility, incur higher costs, and deliver weaker results across the board.

The gap between pilot AI projects and enterprise-scale deployment

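It’s one thing to build a promising AI prototype; it’s another to scale it across the organization. Many pilot projects succeed in controlled environments but collapse when introduced to the complexity of real-world enterprise systems. A pilot can run on a hand-curated data extract; production requires continuous, governed data feeds from every relevant system, and that is where integration gaps surface.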

Real-world cost, risk and agility implications

The integration challenge isn’t just technical—it’s economic and strategic. Poorly integrated data pipelines increase infrastructure costs, extend project timelines, and introduce compliance risks. Organizations become less agile, spending more time on manual data wrangling than on value-driven innovation.

Why standard AI-first strategies often fail without integration foundations

Enterprises eager to implement AI often prioritize model development, vendor selection, or use case identification—without first ensuring their data infrastructure is mature and integrated. But without a rock-solid foundation, even the most advanced models are destined to fail. Here’s why traditional AI-first strategies fall short when integration is treated as an afterthought.

Misalignment of business goals and data architecture

Many AI projects start with ambitious objectives: improve customer experience, optimize operations, predict demand. However, these goals frequently clash with how data is actually stored and accessed across the organization.

For example, if a retail company wants to implement AI for personalized recommendations, but its customer data is split across CRM, ERP, and marketing platforms without a common schema, the initiative stalls. AI can only be as effective as the data architecture supporting it—and misalignment leads to costly rework, delays, or outright failure.
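To make the retail example concrete, here is a minimal Python sketch of mapping the three sources onto one common customer schema. The source field names and the `FIELD_MAPS` table are hypothetical, and the sketch assumes the systems already share a customer key once it is normalized; in practice that usually requires a separate entity-resolution step.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

# Hypothetical source field names mapped onto one canonical schema.
FIELD_MAPS = {
    "crm": {"CustID": "customer_id", "EmailAddr": "email", "FullName": "name"},
    "erp": {"client_no": "customer_id", "total_revenue": "lifetime_value"},
    "marketing": {"contact_id": "customer_id", "segment": "segment"},
}


@dataclass
class Customer:
    customer_id: str
    email: Optional[str] = None
    name: Optional[str] = None
    lifetime_value: float = 0.0
    segments: Set[str] = field(default_factory=set)


def to_canonical(source: str, record: dict) -> dict:
    """Rename a source record's fields to the canonical names."""
    mapping = FIELD_MAPS[source]
    return {canon: record[src] for src, canon in mapping.items() if src in record}


def merge(sources: Dict[str, list]) -> Dict[str, Customer]:
    """Build one Customer per normalized key from all source systems."""
    customers: Dict[str, Customer] = {}
    for source, records in sources.items():
        for rec in records:
            row = to_canonical(source, rec)
            key = str(row["customer_id"]).strip().upper()  # assumes a shared key
            cust = customers.setdefault(key, Customer(customer_id=key))
            if row.get("email") and cust.email is None:
                cust.email = row["email"].strip().lower()
            if row.get("name") and cust.name is None:
                cust.name = row["name"].strip()
            if "lifetime_value" in row:
                cust.lifetime_value += float(row["lifetime_value"])
            if row.get("segment"):
                cust.segments.add(row["segment"])
    return customers


profiles = merge({
    "crm": [{"CustID": "c-001", "EmailAddr": "Ana@Example.com", "FullName": "Ana Silva"}],
    "erp": [{"client_no": "C-001", "total_revenue": "1250.50"}],
    "marketing": [{"contact_id": "C-001 ", "segment": "outdoor"}],
})
print(profiles["C-001"])
# Customer(customer_id='C-001', email='ana@example.com', name='Ana Silva', lifetime_value=1250.5, segments={'outdoor'})
```

The design choice worth noting is that each source only needs its own small mapping; adding a fourth system means adding one entry to `FIELD_MAPS` rather than rewriting the merge logic.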

Technical debt: legacy systems, silos and one-off integrations

Enterprises often carry years—sometimes decades—of technical debt: disconnected systems, outdated APIs, hardcoded ETL scripts, and one-off integrations built for short-term fixes. These legacy burdens make it incredibly difficult to build the flexible, scalable pipelines that AI needs.

Worse, patching things up without a long-term integration strategy only adds to the problem. Without a clean and unified data layer, AI becomes yet another siloed system—limited in scope and expensive to maintain.

Scalability, agility and cost-control: why integration matters

AI projects are often launched with a proof-of-concept mindset. But once the model works, can it scale? Can it adapt to new data sources, changing regulations, or growing user demands?

Only a modern integration framework allows enterprises to scale AI initiatives cost-effectively and adaptively. Whether it’s real-time ingestion, schema evolution, or cross-platform compatibility, integration is what determines whether an AI model stays relevant—or becomes shelfware.
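Schema evolution in particular is easy to underestimate. One common approach is a "tolerant reader" that upgrades incoming records to the current schema instead of failing on them. The sketch below assumes a hypothetical current schema with per-field defaults and a rename table; real systems typically keep this information in a schema registry rather than in code.

```python
# Hypothetical current schema: field name -> default used when a record lacks it.
CURRENT_SCHEMA = {"customer_id": None, "email": None, "country": "unknown"}
# Hypothetical renames introduced by upstream changes: old name -> new name.
RENAMES = {"mail": "email"}


def upgrade(record: dict, schema: dict = CURRENT_SCHEMA, renames: dict = RENAMES):
    """Return (record in the current schema, sorted names of fields the schema does not know)."""
    renamed = {renames.get(k, k): v for k, v in record.items()}
    upgraded = {name: renamed.get(name, default) for name, default in schema.items()}
    unknown = sorted(set(renamed) - set(schema))
    return upgraded, unknown


# A record with an old field name, no country, and a field the schema does not define yet.
legacy = {"customer_id": "C-001", "mail": "ana@example.com", "loyalty_tier": "gold"}
upgraded, unknown = upgrade(legacy)
print(upgraded)  # {'customer_id': 'C-001', 'email': 'ana@example.com', 'country': 'unknown'}
print(unknown)   # ['loyalty_tier']
```

Unknown fields are returned rather than silently dropped, so a change in an upstream system shows up as a reviewable signal instead of a quiet loss of data.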

What true AI-driven data integration solutions look like

If poor integration is the root cause of enterprise AI failures, then the solution must go far beyond patchwork fixes. Truly effective data integration isn’t just about connecting systems—it’s about creating a dynamic, resilient and intelligent foundation that empowers AI to deliver real value. Below are the key elements that define modern, AI-driven integration architectures.

End-to-end data pipeline: ingestion, cleansing, transformation

An effective data integration solution must support the full lifecycle of data: from ingestion through cleansing to transformation. This means pulling data from diverse sources (cloud, on-prem, SaaS), validating its quality, standardizing formats, and enriching it where necessary.

AI models rely on structured, consistent inputs—and only a robust, end-to-end pipeline ensures that this process is automated, repeatable, and scalable. Without it, data engineers spend more time fixing issues than enabling insights.
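As a rough illustration of such a pipeline, the Python sketch below chains generator stages for ingestion, cleansing, standardization and enrichment. The order fields, the two accepted date formats and the `REGIONS` lookup are illustrative assumptions, and rejected records are simply skipped here, whereas a production pipeline would usually route them to a quarantine area for review.

```python
from datetime import datetime
from typing import Callable, Iterable, Iterator, List

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")               # assumed source formats
REGIONS = {"DE": "EMEA", "US": "AMER", "JP": "APAC"}  # illustrative lookup


def ingest(*sources: Iterable[Record]) -> Iterator[Record]:
    """Pull records from several sources into one stream."""
    for source in sources:
        yield from source


def cleanse(records: Iterable[Record]) -> Iterator[Record]:
    """Drop records without a key and trim whitespace (input dicts are not modified)."""
    for rec in records:
        if not rec.get("order_id"):
            continue  # a real pipeline would quarantine this record
        yield {k: v.strip() if isinstance(v, str) else v for k, v in rec.items()}


def standardize(records: Iterable[Record]) -> Iterator[Record]:
    """Normalize dates to ISO 8601 and country codes to upper case."""
    for rec in records:
        raw = rec.get("order_date")
        for fmt in DATE_FORMATS:
            try:
                rec["order_date"] = datetime.strptime(raw, fmt).date().isoformat()
                break
            except (TypeError, ValueError):
                continue
        else:
            rec["order_date"] = None  # no known format matched
        rec["country"] = str(rec.get("country") or "").upper()
        yield rec


def enrich(records: Iterable[Record]) -> Iterator[Record]:
    """Add a sales region derived from the country code."""
    for rec in records:
        rec["region"] = REGIONS.get(rec["country"], "UNKNOWN")
        yield rec


def run(stages: List[Stage], records: Iterable[Record]) -> List[Record]:
    """Chain the stages lazily, then materialize the result."""
    for stage in stages:
        records = stage(records)
    return list(records)


erp_orders = [{"order_id": "A1", "order_date": "2024-03-05", "country": "de "}]
shop_orders = [
    {"order_id": "", "order_date": "2024-03-06", "country": "US"},
    {"order_id": "B7", "order_date": "06/03/2024", "country": "jp"},
]
rows = run([cleanse, standardize, enrich], ingest(erp_orders, shop_orders))
for row in rows:
    print(row)
# {'order_id': 'A1', 'order_date': '2024-03-05', 'country': 'DE', 'region': 'EMEA'}
# {'order_id': 'B7', 'order_date': '2024-03-06', 'country': 'JP', 'region': 'APAC'}
```

Because every stage has the same shape (records in, records out), stages can be added, reordered or tested in isolation, which is what makes the process repeatable rather than a one-off script.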

Governance, compliance and security built-in

As data becomes a strategic asset, the risks attached to it grow as well. Regulations such as GDPR and HIPAA, along with industry-specific compliance rules, require strict control over data flows, access permissions, and usage tracking.

Modern integration solutions embed governance, compliance, and security from the ground up—not as an afterthought. This ensures enterprise-grade trust, transparency, and auditability, especially for sensitive AI applications in finance, healthcare or public services.
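A minimal sketch of what "built-in" can mean in code: every read goes through a policy that decides, per role, whether a sensitive field is shown in clear, pseudonymized, partially masked or withheld, and every access is written to an audit log. The roles, the `POLICY` table, the in-memory `AUDIT_LOG` and the hard-coded key are hypothetical placeholders. Note also that keyed hashing is pseudonymization, not anonymization; GDPR still treats pseudonymized data as personal data.

```python
import hashlib
import hmac
from datetime import datetime, timezone

# Hypothetical policy: sensitive field -> {role: action}. Roles not listed are denied.
POLICY = {
    "email": {"analyst": "pseudonymize", "support": "clear"},
    "ssn": {"support": "mask"},
}
PSEUDONYM_KEY = b"replace-with-a-managed-secret"  # placeholder; load from a secret store
AUDIT_LOG = []  # stand-in for an append-only audit store


def apply_policy(record: dict, role: str, purpose: str) -> dict:
    """Return the view of `record` that `role` may see, and log the access."""
    view = {}
    for name, value in record.items():
        rules = POLICY.get(name)
        if rules is None:
            view[name] = value  # not classified as sensitive
            continue
        action = rules.get(role)
        text = str(value)
        if action == "clear":
            view[name] = value
        elif action == "pseudonymize":
            digest = hmac.new(PSEUDONYM_KEY, text.encode(), hashlib.sha256)
            view[name] = digest.hexdigest()[:16]
        elif action == "mask":
            view[name] = "*" * (len(text) - 4) + text[-4:] if len(text) > 4 else "*" * len(text)
        # any other case: the field is withheld (deny by default)
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "purpose": purpose,
        "fields": sorted(view),
    })
    return view


customer = {"customer_id": "C-001", "email": "ana@example.com", "ssn": "123-45-6789"}
analyst_view = apply_policy(customer, "analyst", "churn-model-training")
support_view = apply_policy(customer, "support", "ticket-4711")
print(analyst_view)  # email pseudonymized, ssn withheld
print(support_view)  # email in clear, ssn masked to the last four characters
```

Denying by default means a newly classified sensitive field stays hidden until someone explicitly grants access, and the audit entries record who saw which fields and for what stated purpose.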

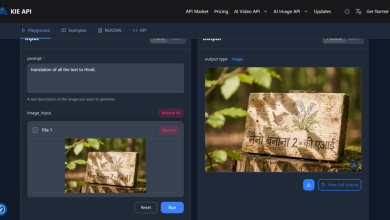

Agile, cloud-native architectures and integration as a service

Gone are the days of monolithic ETL tools and rigid middleware. Enterprises now require agile, cloud-native integration solutions that can evolve with their data landscape. Offerings such as Multishoring’s AI-Driven Data Integration solutions deliver this flexibility through microservices, containerization, and APIs, allowing seamless deployment across hybrid environments.
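The API-first idea can be illustrated with a common connector contract: each source, whether an on-premises export or a SaaS endpoint, implements the same small interface, and the rest of the pipeline depends only on that interface. This is a hypothetical Python sketch using `typing.Protocol`; it is not Multishoring’s API or any vendor’s, and the CSV text and in-memory rows stand in for real systems.

```python
import csv
import io
from typing import Dict, Iterator, List, Protocol


class SourceConnector(Protocol):
    """The contract every source implements, wherever it runs."""
    name: str

    def read(self) -> Iterator[dict]: ...


class CsvExportConnector:
    """Stands in for a file exported by an on-premises system."""

    def __init__(self, name: str, csv_text: str) -> None:
        self.name = name
        self._csv_text = csv_text

    def read(self) -> Iterator[dict]:
        yield from csv.DictReader(io.StringIO(self._csv_text))


class InMemoryApiConnector:
    """Stands in for a SaaS API client; a real one would page through HTTP responses."""

    def __init__(self, name: str, rows: List[dict]) -> None:
        self.name = name
        self._rows = rows

    def read(self) -> Iterator[dict]:
        yield from self._rows


REGISTRY: Dict[str, SourceConnector] = {}


def register(connector: SourceConnector) -> None:
    REGISTRY[connector.name] = connector


def read_all() -> Iterator[dict]:
    """Read every registered source and tag each row with its origin."""
    for name, connector in REGISTRY.items():
        for row in connector.read():
            yield {"_source": name, **row}


register(CsvExportConnector("onprem_erp", "order_id,amount\nA1,10\n"))
register(InMemoryApiConnector("saas_shop", [{"order_id": "B7", "amount": "25"}]))
for row in read_all():
    print(row)
# {'_source': 'onprem_erp', 'order_id': 'A1', 'amount': '10'}
# {'_source': 'saas_shop', 'order_id': 'B7', 'amount': '25'}
```

Moving a source from on-premises to the cloud then means swapping one connector rather than touching the downstream pipeline; packaging each connector as its own service or container is one way to deploy them independently across a hybrid environment.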

Integration-as-a-Service, commonly delivered as an integration platform as a service (iPaaS), further reduces complexity by providing scalable infrastructure on demand, enabling faster time-to-insight and lower operational overhead.