The appetite for Generative AI (GenAI) has created a massive surge in demand for powerful computing systems. In turn, the demand for energy and the costs associated with that power consumption will only continue to rise.

AI model sizes have grown exponentially since the field’s inception. In 1950, the largest AI model had about 40 parameters. By 2010, at the end of the pre-deep learning era, model sizes had grown to about 100,000 parameters[1], an increase of more than three orders of magnitude. Since then, model sizes have grown by another five orders of magnitude. Put simply, larger models require more powerful systems or additional computation time – both of which require even more energy.

Already, ChatGPT uses more than 500,000 kilowatt-hours of electricity every day; by comparison, the average U.S. household uses 29 kilowatt-hours a day. The Uptime Institute projects AI will make up about 10% of global data center power by 2025 – a fivefold increase over today. Additionally, the IEA projects a potential doubling of data center power needs from 2022 to 2026, much of it related to the growth of AI.

Simultaneously, many enterprises are focused on sustainability and have committed to becoming carbon neutral by 2050 to align with the UN’s net zero goal. To meet that target and stave off the worst effects of climate change, organizations must reduce energy use, increase reliance on renewable and carbon-free energy sources, find ways of sequestering carbon or capturing it before it escapes into the atmosphere, and figure out how to reuse resources and eliminate waste. In short, a holistic approach is necessary to achieve sustainable AI.

Four strategies for more energy-efficient AI

Researchers are investigating how to use resources efficiently, whether AI workloads run in the cloud, on supercomputers or in on-premises data centers. Some types of resources run certain workloads more efficiently than others, which raises a question: if an organization needs to run several types of workloads, how should they be divided? Some may be best suited to the cloud, others to a supercomputer or an on-premises data center.

Analog accelerators – For decades, digital circuits have been the preferred option. They’re fast and powerful, and they process enormous amounts of data in record time. With widespread AI use, however, digital circuits show a limitation: they need massive amounts of power to operate. As the saying goes, what’s old is new again. Analog circuits, which are composed of components like resistors, capacitors and inductors and operate in the analog domain, are a promising alternative for reducing energy consumption. Instead of binary zeros and ones, analog circuits work with a range of continuous signals. When they are built with components like memristors that can store data, less data has to move between memory and the accelerator, which reduces energy consumption. In addition, special-purpose accelerators that target specific workloads are generally more efficient than general-purpose ones. The analog approach achieves the same goal as the digital one, but it can yield significant energy savings at the chip level.

Digital twins – A digital twin is a virtual representation of a physical system, such as a cloud, supercomputer or on-premises data center. This virtual representation stays up to date and can be used for real-time optimization of the physical system. Digital twins are generally classified according to how they interact with the physical system. The most elemental form is a simulation of the physical system; one example is a simulation of a data center’s cooling infrastructure used to design the layout of the facility. These types of “twins” have been used in engineering for decades. If the simulation also exchanges data with the physical system to remain current, for example by sensing the power consumption of the physical components in a data center and updating the model accordingly, it can evolve with the physical system and be used to, among other things, maintain the system’s operational efficiency.
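To make the "exchange data to remain current" idea concrete, here is a minimal sketch of a twin that updates itself from power-sensor readings. Everything in it is an illustrative assumption: the `RackTwin` class, the per-rack power budget, and the use of exponential smoothing as the model update are hypothetical choices, not a description of any production digital twin.

```python
from dataclasses import dataclass


@dataclass
class RackTwin:
    """Hypothetical twin of one rack that tracks a smoothed power estimate."""
    name: str
    power_limit_kw: float      # assumed operating budget for the rack
    smoothing: float = 0.5     # weight given to each new sensor reading
    estimated_kw: float = 0.0  # the twin's current belief about power draw
    readings_seen: int = 0

    def ingest(self, measured_kw: float) -> None:
        """Update the model from one sensor reading (exponential smoothing)."""
        if self.readings_seen == 0:
            # First reading: adopt it directly as the initial estimate.
            self.estimated_kw = measured_kw
        else:
            self.estimated_kw = (self.smoothing * measured_kw
                                 + (1 - self.smoothing) * self.estimated_kw)
        self.readings_seen += 1

    def headroom_kw(self) -> float:
        """Remaining power budget according to the twin's current estimate."""
        return self.power_limit_kw - self.estimated_kw

    def over_budget(self) -> bool:
        """True when the estimated draw exceeds the assumed budget."""
        return self.estimated_kw > self.power_limit_kw
```

An operator could feed each new reading to `ingest` and consult `headroom_kw` before placing more work on the rack. A real twin would replace the smoothing step with a physics-based or learned model of the facility.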

Geo-distributed workloads – Energy and water availability vary and are hyperlocal. HPE Labs, in collaboration with Colorado State University, has developed a set of optimization algorithms that examine the variation in carbon intensity, water availability and energy cost across locations. The algorithms then use that data to determine the best location around the globe to run a workload, such as generative AI, optimizing resource usage hyperlocally and delivering significant savings in power and water consumption while reducing costs.

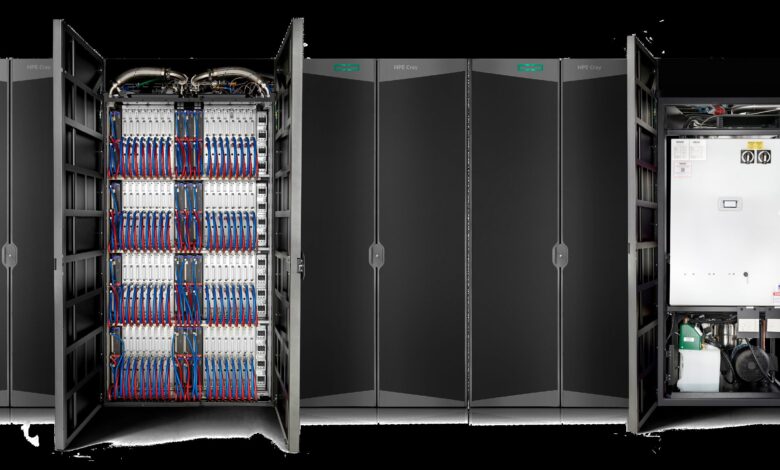

Leveraging waste heat – 100% of the electrical energy that goes into a data center is ultimately converted to heat, which must be removed from the equipment and transferred elsewhere. Data centers have traditionally used air cooling, but it is less efficient and more expensive than direct liquid cooling (DLC), which has grown in favor in the age of AI. DLC is a cooling method in which liquid is pumped directly into a server to absorb the heat emitted by the system’s components, including processors, GPUs and memory, and is then sent to a heat exchange system outside the data center. It cools more efficiently because water has roughly four times the specific heat capacity of air. Liquid is also easier to contain and transport with minimal heat loss, which improves waste heat utilization. Capturing waste heat is important because it can be reused for other purposes, such as heating buildings or warming greenhouses to create ideal conditions for growing tomatoes.
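The heat-capacity comparison can be checked with the standard relation Q = ṁ · c_p · ΔT, which links the heat removed per second to the coolant's mass flow, specific heat and temperature rise. The sketch below uses approximate textbook values for water and air. The 100 kW load and the 10 K temperature rise are assumed figures chosen only for illustration.

```python
# Approximate specific heat capacities near room temperature, in J/(kg*K).
CP_WATER = 4186.0
CP_AIR = 1005.0


def mass_flow_kg_per_s(heat_load_w: float, cp: float, delta_t_k: float) -> float:
    """Coolant mass flow needed to carry away heat_load_w, from Q = m_dot * cp * dT."""
    if cp <= 0 or delta_t_k <= 0:
        raise ValueError("cp and delta_t_k must be positive")
    return heat_load_w / (cp * delta_t_k)


# Assumed example: a 100 kW heat load with a 10 K coolant temperature rise.
HEAT_LOAD_W = 100_000.0
DELTA_T_K = 10.0

water_flow = mass_flow_kg_per_s(HEAT_LOAD_W, CP_WATER, DELTA_T_K)
air_flow = mass_flow_kg_per_s(HEAT_LOAD_W, CP_AIR, DELTA_T_K)
flow_ratio = air_flow / water_flow  # equals CP_WATER / CP_AIR
```

For the same load and temperature rise, air needs about four times the mass flow of water. Because air is also far less dense than water, the gap in the volume that must be moved is much larger still.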

As AI grows in popularity, it’s opening vast opportunities to increase creativity, unearth new applications and improve productivity across industries. But AI’s popularity is also requiring more energy to acquire and train the models that will change the way we all work and live. Minimizing the impacts of this energy use will be crucial to our future survival.