The adoption of artificial intelligence is accelerating, with nearly four in ten UK organisations already using it in some form. From banking and healthcare to retail and manufacturing, AI is being embedded to streamline processes and improve efficiency at scale. The UK public sector is also making strides; Lancashire County Council has recently signed a £4.3m multi-year deal with a software group to deploy autonomous AI agents across its public services. With AI being implemented across industries, the central question has shifted. It’s no longer whether companies should implement AI, but how fast and in which areas.

As AI’s uptake increases, so do our expectations of its outputs. Too often, people assume AI is infallible and do not take the time to challenge its responses. When the system inevitably slips up, that assumption erodes trust, slows adoption and ultimately stalls innovation. Success instead starts with targeted projects, consistent testing and avoiding over-reliance on a single tool. Businesses must keep learning and trialling different tools to find the best fit for their needs, and should not expect to find it on the first try.

Rethinking our approach

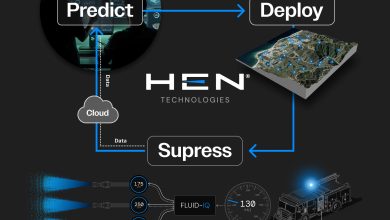

One of the most effective ways to improve the reliability of AI is through retrieval-augmented generation (RAG). RAG enhances large language models by connecting them to external, up-to-date sources of information before producing a response. Instead of relying solely on what was captured during training, which may be incomplete or outdated, RAG grounds outputs in verifiable data. The result is AI that is more accurate, context-aware and easier for stakeholders to trust.

As AI becomes more integrated into day-to-day operations, accuracy and reliability are critical, and RAG can play a big role in delivering both. However, changing how we perceive and deploy the technology is just as important. When a colleague makes a mistake, it’s typically seen as part of the learning curve. Yet when AI makes a mistake, some conclude that the system isn’t ready for business use. In reality, these mistakes are a natural outcome of models that work on probabilities. Expecting perfection from AI is like hiring a new employee and assuming they’ll never make a mistake.

Organisations need to move away from a binary view of AI being either fully correct or completely wrong. Instead, attention should shift to safeguards, human oversight and the practical ways technology and people can complement one another. Unlike people, AI systems can be adjusted in minutes or days, whether by revising a prompt, updating the information they draw on or swapping the underlying model. That agility means businesses should adopt the same flexible mindset when deploying them.

Long-term, top-down transformation strategies risk delaying progress in search of an infallible version of AI that will likely never exist. A better route is to run short-term, incremental projects that prove value quickly, then scale from there.

Responsible AI adoption

Translating responsible AI into practice requires balancing ambition with a grounded, human-centric approach. Every organisation’s route is different, but certain principles apply across the board. Organisations must start small, focusing on projects that can be delivered in weeks or months. Projects that demonstrate early success build trust and maintain momentum.

Because AI systems are inherently imperfect, every error is an opportunity to refine performance. Analysing mistakes, tweaking prompts or experimenting with alternative models are all valuable ways to improve accuracy. This iterative process ensures that improvements are continuous without overcomplicating projects.
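One way to make this iterative process concrete is a small, repeatable test set that every prompt or model change is scored against. The sketch below is illustrative only: the test cases, the `config_a` and `config_b` stand-ins and the substring-based scoring are all assumptions made for the example, not a description of any particular product or evaluation standard.

```python
from typing import Callable

# Hypothetical test set: (question, text the answer is expected to contain).
TEST_CASES: list[tuple[str, str]] = [
    ("What is the refund window?", "30 days"),
    ("Which team handles complaints?", "customer care"),
]

AnswerFn = Callable[[str], str]


def accuracy(answer_fn: AnswerFn, cases: list[tuple[str, str]] = TEST_CASES) -> float:
    """Share of test cases whose answer contains the expected text."""
    hits = sum(expected.lower() in answer_fn(q).lower() for q, expected in cases)
    return hits / len(cases)


def failures(answer_fn: AnswerFn, cases: list[tuple[str, str]] = TEST_CASES) -> list[str]:
    """Questions the configuration got wrong, kept for human review."""
    return [q for q, expected in cases if expected.lower() not in answer_fn(q).lower()]


# Stand-ins for two configurations (for example, different prompts or models).
# A real deployment would call the AI system here instead of returning fixed text.
def config_a(question: str) -> str:
    return "Refunds are accepted within 30 days."


def config_b(question: str) -> str:
    canned = {
        "What is the refund window?": "You can request a refund within 30 days.",
        "Which team handles complaints?": "Complaints go to the Customer Care team.",
    }
    return canned[question]


def pick_best(candidates: dict[str, AnswerFn]) -> str:
    """Name of the configuration with the highest accuracy on the test set."""
    return max(candidates, key=lambda name: accuracy(candidates[name]))
```

Calling `failures(config_a)` lists the questions that need attention, and `pick_best({"config_a": config_a, "config_b": config_b})` compares the alternatives on the same cases. Keeping the test set fixed between iterations is what makes the comparison meaningful.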

Once initial pilots generate measurable benefits, adoption can expand more widely. Strong governance and oversight remain essential to ensure outputs are accurate, responsible, and aligned with organisational standards. This allows businesses to scale confidently while managing risk.

Building reliability with RAG

RAG is an established way to increase accuracy. By pulling in relevant, up-to-date information from trusted sources before producing an output, it grounds responses in verified knowledge rather than outdated or incomplete training data.

This connection between AI and high-quality information reduces hallucinations, produces more context-aware results, and strengthens stakeholder trust. Combined with a culture of careful, iterative deployment, RAG creates a feedback loop that continuously reinforces accuracy and reliability across the enterprise.
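The retrieve-then-generate pattern can be sketched in a few lines. The example below is a simplified illustration under stated assumptions: the `DOCUMENTS` store and its contents are hypothetical, and retrieval uses plain word overlap, whereas production systems typically use embedding-based or hybrid search. It stops at building the grounded prompt; sending that prompt to a language model is left out because it depends on the provider.

```python
import re
from collections import Counter

# Hypothetical knowledge base. In practice this would be a trusted,
# regularly updated document store.
DOCUMENTS: dict[str, str] = {
    "leave-policy": "Employees receive 25 days of annual leave plus bank holidays.",
    "expenses": "Expense claims must be submitted within 30 days with receipts.",
    "remote-work": "Staff may work remotely up to three days per week.",
}


def tokenize(text: str) -> Counter:
    """Lower-case word counts; a crude stand-in for real text indexing."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (doc_id, text) pairs ranked by word overlap with the question."""
    q = tokenize(question)
    scored = []
    for doc_id, text in DOCUMENTS.items():
        overlap = sum((q & tokenize(text)).values())
        if overlap > 0:
            scored.append((overlap, doc_id, text))
    # Highest overlap first; ties broken alphabetically by document id.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(question: str, k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved sources."""
    sources = retrieve(question, k)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in sources)
    return (
        "Answer using only the sources below and cite the source id. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

For a question such as "How many days of annual leave do employees receive?", the `leave-policy` entry ranks first and is placed in the prompt with its id, so a reviewer can trace an answer back to its source. Asking the model to say when the sources are silent is one simple safeguard against unsupported answers.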

Final reflections

AI is now a strategic imperative, but its long-term success depends on how well organisations manage its limitations. The winners will be those that accept mistakes as part of the process, design systems resilient enough to learn from them and strengthen trust at every stage.

This is exactly the direction signalled by the newly signed UK–US Tech Prosperity Deal, which commits billions in cross-border investment to accelerate progress in AI and other advanced technologies. By placing trust, standards and responsible innovation at the centre of national policy, the agreement sends a clear message: sustainable growth in AI will come from building robust frameworks that balance innovation with accountability.

For organisations, the takeaway is straightforward: ground your AI in verifiable data with methods like RAG, combine technical safeguards with human oversight and stay flexible.