There is ongoing discussion about the pros and cons of bringing in new copyright legislation to regulate AI’s use of content. Legislation may be a key way of addressing the wider debate over AI’s perceived threat to the creative industries, but it faces the challenge of striking the right balance between protecting the rights and livelihoods of content creators on the one hand, and not stifling the growth of AI technology on the other.

The many issues and concerns surrounding AI and intellectual property rights are currently being evaluated by the U.S. Copyright Office, which has launched a study to investigate whether current legislation needs to change and, if so, how. So far, this has generated over 10,000 responses representing a variety of interests and stances:

“First, most importantly–and urgently–the Copyright Office should adopt regulations to enable publishers to group register online web content in an efficient, economical, and simple manner… As AI developers exclusively use online content to train their models and applications, publishers’ inability to adequately register their copyrights has wide-reaching consequences to their ability to enforce their rights, monetize their content, and continue investing in the production of high-quality original content.”

News Media Alliance

“Any AI program should be ‘opt-in,’ requiring creators to expressly give their permission before their works are used by the program. Without these protections, artists of all kinds, ranging from those employed by large media companies to independent creators, will suffer.”

Anonymous

“OpenAI urges the Copyright Office to proceed cautiously in calling for new legislative solutions that might prove in hindsight to be premature or misguided as the technology rapidly evolves… OpenAI believes that the existing provisions of U.S. copyright law provide a sound foundation on which the courts can build as cases arise.”

OpenAI

“From a copyright standpoint, there seems to be little gain from added regulation and the enforcement issues raised from failure to properly mark… US business already has a host of regulatory hurdles to jump, we should be reluctant to add more without substantial cause”

SCA Robotics

In general, the responses confirm the contrasting interests of creative professionals, who are dissatisfied with current copyright protection, and the technology sector, which is reluctant to see further legislation governing AI’s use of content. This reluctance is highlighted by the responses from OpenAI and SCA Robotics featured above.

However, despite this general trend, it is not just tech companies that doubt whether there is much tangible benefit to be gained from introducing new legislation. Robert Thomson, CEO of the media company News Corp, has recently stated that his company will prioritize private negotiation with tech companies over pushing for litigation, as reported in the Guardian. This is due to rising concern about how media companies will be legally compensated for content that has already been used to train AI models.

News Corp is one of several media companies that have opted to strike a deal with AI developers rather than sue for damages in court. OpenAI, for example, has struck deals with the Associated Press, Axel Springer, and NYU among others, which allow it to use the content published by these organizations on set terms. Ken Sterling, a tech and IP attorney at Sterling Media Law, expects the New York Times to follow suit, but argues that in the meantime, its lawsuit against OpenAI is “great branding and PR for both brands”.

Copyright lawsuits have indeed been very effective in raising public awareness of the issues surrounding AI’s use of content, which has in turn prompted AI developers to take measures to respect the rights of content creators and publishers. For instance, in deals it has struck with news companies, OpenAI promises to provide proper attribution of real-time information by linking back to the source article, thus providing another platform through which a news outlet can reach its audience.

Many AI developers now allow publishers to choose whether their content is used as training data or not. For example, OpenAI provides an opt-out from its web crawler, GPTBot, so that websites can add a rule to their robots.txt file to prevent the scraping of their content. Alongside this, many tech companies are now offering to pay publishers and media companies a high price for their content, in increasing recognition of its value and of the cost involved in its creation.
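As a rough illustration of how such an opt-out works in practice, the sketch below uses Python's standard `urllib.robotparser` module to check what a robots.txt file permits for a crawler identifying itself as `GPTBot`. The robots.txt content, the `example.com` URL, the `SomeOtherBot` name, and the `is_allowed` helper are all assumptions made up for this example, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site: block GPTBot everywhere,
# allow every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


url = "https://example.com/articles/story.html"  # placeholder URL
print(is_allowed(ROBOTS_TXT, "GPTBot", url))        # False: opted out
print(is_allowed(ROBOTS_TXT, "SomeOtherBot", url))  # True: falls under "*"
```

Note that robots.txt rules are advisory: they only take effect if the crawler chooses to honor them, and each crawler has to be named (or covered by the `*` rule) for the opt-out to apply.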

However, while these measures have been enough to incentivize several big media companies to sign deals with AI developers, they do not adequately protect all content creators, particularly freelancers, who typically have less control over where their content ends up and how it is licensed.

This is evident in a report from the Federal Trade Commission’s ‘Creative Economy and Generative AI’ roundtable, which found that many artists and writers are not satisfied with existing measures such as OpenAI’s opt-out option:

“Participants stated that, even when some mechanisms have been implemented to offer creators consent and control over whether their work is used in AI training, these measures have been insufficient and ineffective. Participants described “opt-out” frameworks as an example of such measures and said that an opt-out default puts the burden on creators to police an ever-changing marketplace.”

The Federal Trade Commission

The benefits of revising copyright legislation

While private negotiations and partnerships may present an easier and more fruitful way of mending individual relationships between AI developers and big media companies, legislation remains an important way to bring about lasting change on a broader and fairer scale, by setting a minimum standard of copyright compliance for AI’s use of data.

Furthermore, given that current copyright law does not adequately account for the changes that AI is bringing to the creative industries, new legislation will be a foundational mechanism for providing more comprehensive and specific guidance on AI’s use of content, which will protect not only content creators but also the AI developers themselves.

There are three key benefits of introducing new copyright legislation: (1) it could put a cap on the accelerating price of data, (2) it will allow businesses to integrate AI technology more confidently, and (3) it will prevent escalating tensions between AI developers and content creators.

1. Legislation could help standardize the value of data

Generative AI’s dependence on data is integral to its most fundamental functions. It therefore owes a lot to the writers and content creators whose work has been used to train it. This fact is well recognized by AI developers, and is the reason they are willing to pay their sources a high price for access to data.

According to The Information, OpenAI is offering media companies between $1 million and $5 million in return for use of their articles. Similarly, The Verge reports that Apple is offering a minimum of $50 million to media companies for access to their content over a multi-year period.

These deals are proving beneficial in the short term by providing media companies with a financial incentive to collaborate with AI developers, and demonstrating the AI industry’s commitment to valuing the time, research, and creativity that goes into content creation. To some extent, this is helping to mend the relationship between AI developers and the creative industries.

However, because these deals are currently negotiated in private, there is a risk that commercial interests and competition will drive the price of content up to a level that AI developers cannot sustain in the long term. Alternatively, access to quality content might become monopolized by the small number of big tech firms able to afford it.

Marc Benioff, owner of Time magazine and CEO of Salesforce, touched on this point in an interview at the recent World Economic Forum (WEF) meeting in Davos. He argued that AI companies need to standardize payments in order to treat content creators fairly, especially given that “nobody really exactly knows” what a fair price is for data.

The escalating price of data could also narrow the range of sources that AI developers use to train their models, making the training data less diverse and more likely to be biased towards a dominant group or ideology. In turn, this would render AI models less reliable, and could have a detrimental impact on many sectors by propagating the prejudices and commercial interests of dominant groups, thus deepening existing polarization between different groups in our society.

In light of this, legislation is key to preventing the escalation of data’s financial value, and the commercially-driven inequality that would result from this. This will ensure that AI developers can count on access to data at a reasonable price, and content creators can count on fair compensation for the use of their work.

2. Legislation can provide businesses (especially SMEs) with more confidence to adopt AI technology

Another key reason to introduce new copyright legislation is to increase AI’s transparency for businesses, which will enable them to confidently meet the regulatory standards in their industry while harnessing the benefits of the latest technology for their business.

“Companies face uncertainty regarding the permissible applications of artificial intelligence. Without clear boundaries, they hesitate to utilize AI confidently. Consequently, there is a pressing need for comprehensive guidelines to facilitate the optimal utilization of AI.”

Nazmul Hasan, Founder and CIO of AI Buster

“Legislative changes are necessary to provide clarity and guidance for SMEs on the permissible use of copyrighted content for AI model training while ensuring that copyright holders’ rights are respected and upheld. This may involve introducing exceptions or limitations to copyright laws specifically tailored to AI model training, implementing clear licensing mechanisms, or promoting collaborative initiatives between content creators and tech firms.”

Erik Wikander, CEO of Zupyak, a content marketing platform for SMEs

This is especially important for smaller businesses, which are “particularly vulnerable to compliance pitfalls, which can lead to crippling fines and legal repercussions”, as Vivek Dodd, CEO of Skillcast, points out.

In an interview with the AI Journal, Graham Dufault from the App Association (a representative council for small businesses) highlighted the benefits of clearer legislation for small businesses, suggesting that it would not only increase SMEs’ confidence as they adopt AI technology, but would also save time and increase efficiency by providing centralized guidance and resources. He forecast that generative AI, just like patented technologies such as 5G and Bluetooth, will see the emergence of a legal standard due to its widespread adoption across industries and pressure from shareholders for increased transparency and accountability in the use of the new technology.

Furthermore, legislation would help businesses to sell their products and services to customers, given that transparency and accountability are becoming increasingly desirable and expected business standards. As Benioff puts it, access to transparent, accountable AI should constitute a “human right”. Already, this has become a key selling point for some companies, such as Getty Images’ Generative AI by iStock.

“Our main goal with Generative AI by iStock is to provide customers with an easy and affordable option to use AI in their creative process, without fear that something that is legally protected has snuck into the dataset and could end up in their work”

Grant Farhall, iStock’s Chief Product Officer

3. Legislation will ease escalating tensions between AI developers and content creators

Despite the measures that technology companies have taken to address the concerns of content rights holders, creators are clearly not satisfied. And so the backlash against AI continues to escalate, with content creators turning to increasingly drastic measures to protect their work.

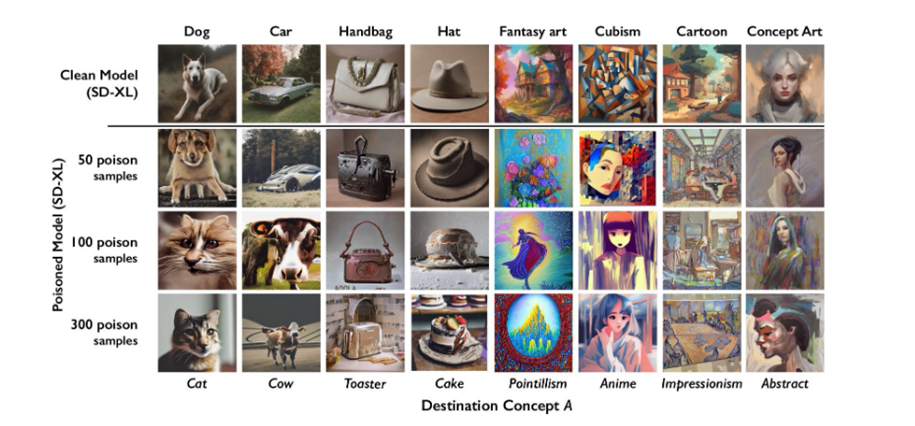

For example, a free digital tool called Nightshade has been developed by researchers at the University of Chicago to prevent copyright infringement of artwork published online. The tool ‘poisons’ the AI model by shading artworks at a pixel level to deceive it into processing the image as something different. This can lead to the AI model generating random and unrelated images in response to a prompt, as can be seen below:

Ben Zhao, lead developer of Nightshade, told VentureBeat of his surprise at the popularity of the tool, which highlights how unprotected artists feel by current measures, to the extent that they are taking matters into their own hands:

“Nightshade hit 250K downloads in 5 days since release. I expected it to be extremely high enthusiasm. But I still underestimated it…The response is simply beyond anything we imagined.”

Ben Zhao, lead developer of Nightshade

This is just one example of how content creators are finding ways to fight back against AI developers, as it is becoming increasingly clear that current copyright law does not provide them with sufficient protection.

Revised legislation is therefore needed to address the new challenges that generative AI poses for the creative industries. This will be key to preventing digital sabotage in an escalating battle between AI developers and content creators. It will also play a critical role in fostering the trust between AI and the creative industries that is fundamental to a mutually beneficial and collaborative relationship in the future.