The Trust Crisis at the Heart of Enterprise AI

AI isn’t failing because it can’t predict. It’s failing because it can’t explain.

LLMs have passed the fluency test — they can generate text, code, even strategy decks. But when you ask these models why they produced an output, the answer is a shrug. And in regulated environments — finance, healthcare, legal tech — a shrug isn’t acceptable.

We’ve entered what I call the “post-fluency era.” In this next phase, trust becomes the primary differentiator. That’s where Ontology-Enhanced AI comes in.

Here’s Why Enterprise AI Is Drifting — And How Symbolic Logic Can Realign It

LLMs drift because nothing anchors their outputs to an explicit structure. They are trained on frozen snapshots of the world, so they hallucinate when an answer depends on current logic or regulation.

My solution: layer a real-time symbolic engine over generative outputs. It doesn’t try to replace the model — it audits it.

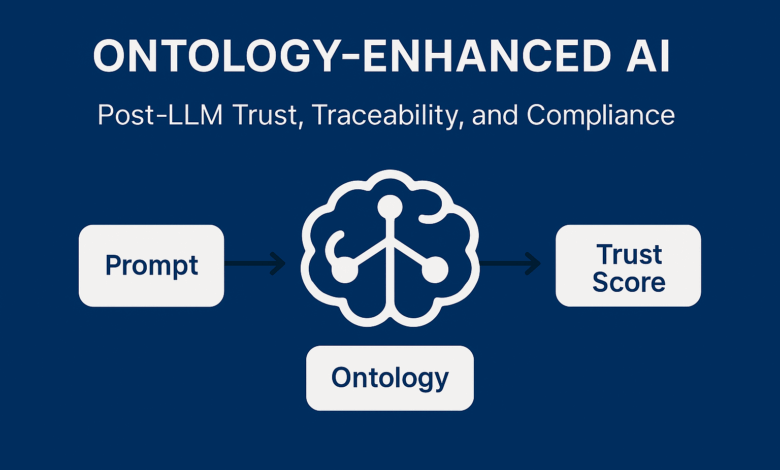

This is Ontology-Enhanced AI: a system that scores trust probabilistically, enforces compliance logic symbolically, and adapts its reasoning structure over time.

From Predictions to Proof: Core Architectural Shifts

- Bayesian Trust Scoring

Each LLM output is assigned a live trust score:

$$\text{Trust} = \frac{\alpha + \text{correct}}{\alpha + \beta + \text{total}}$$

It’s not about output confidence — it’s about explainable belief in correctness, with auditable bounds.
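To make the formula concrete, here is a minimal sketch that reads it as the posterior mean of a Beta-Bernoulli model. That reading is an assumption: the article does not define the symbols, so this sketch treats α and β as prior pseudo-counts of correct and incorrect outputs, and `correct` and `total` as running tallies of audited outputs. The `TrustScore` class and its `std` property (one simple way to report a bound around the score) are hypothetical names for illustration, not part of OntoGuard AI.

```python
from dataclasses import dataclass


@dataclass
class TrustScore:
    """Hypothetical Beta-Bernoulli trust tracker (illustrative only)."""
    alpha: float = 1.0  # assumed prior pseudo-count of correct outputs
    beta: float = 1.0   # assumed prior pseudo-count of incorrect outputs
    correct: int = 0    # audited outputs judged correct so far
    total: int = 0      # all audited outputs so far

    def update(self, was_correct: bool) -> None:
        """Record the audit result for one more output."""
        self.total += 1
        if was_correct:
            self.correct += 1

    @property
    def mean(self) -> float:
        """Trust = (alpha + correct) / (alpha + beta + total)."""
        return (self.alpha + self.correct) / (self.alpha + self.beta + self.total)

    @property
    def std(self) -> float:
        """Standard deviation of the Beta posterior, a rough uncertainty bound."""
        a = self.alpha + self.correct
        b = self.beta + (self.total - self.correct)
        n = a + b
        return (a * b / (n * n * (n + 1))) ** 0.5


score = TrustScore()
for outcome in [True, True, True, False]:
    score.update(outcome)

print(f"trust={score.mean:.3f} +/- {score.std:.3f}")
```

With a uniform prior (α = β = 1), three correct outputs out of four give a score of 4/6, and the spread narrows as more outputs are audited.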

- Symbolic Trace Generation

Every answer has a trail:

[Prompt] → [Ontology Match] → [Compliance Rule] → [Trust Score]

That’s traceability you can export to an auditor, regulator, or internal AI ethics board.
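One way to picture such a trail is as a small record that serializes to JSON for export. The sketch below is an assumption about shape only: the `Trace` class, its field names, and the sample values (including the rule ID) are hypothetical and mirror the four stages named above rather than any actual OntoGuard AI schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Trace:
    """Hypothetical audit record with one field per stage of the trail."""
    prompt: str
    ontology_match: str   # ontology concept the prompt was mapped to
    compliance_rule: str  # ID of the rule that was applied
    trust_score: float    # score attached to the final output

    def to_json(self) -> str:
        """Serialize with sorted keys so exports are stable and diffable."""
        return json.dumps(asdict(self), sort_keys=True)


trace = Trace(
    prompt="Can we share this patient record with a vendor?",
    ontology_match="PatientRecord",
    compliance_rule="hypothetical-rule-001",
    trust_score=0.67,
)
exported = trace.to_json()
print(exported)
```

Keeping the record flat and serializable means the same object can be logged, stored, or handed to a reviewer without extra tooling.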

- Compliance Logic, Real-Time

Instead of retraining your model for every policy shift, map decisions to ontologies and logical rulesets. When the rules update, the scoring and decision paths update too — no fine-tuning required.
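The idea of updating rules instead of the model can be sketched as rules stored as data and keyed by ontology concept. Everything here is illustrative: the `RuleSet` class, the `LoanDecision` concept, the rule IDs, and the thresholds are hypothetical, and a real system would likely use a proper ontology and rule language rather than Python lambdas.

```python
from typing import Callable, Dict, List, Tuple

Facts = Dict[str, float]
# A rule is (rule ID, predicate that returns True when the facts comply).
Rule = Tuple[str, Callable[[Facts], bool]]


class RuleSet:
    """Hypothetical registry mapping ontology concepts to compliance rules."""

    def __init__(self) -> None:
        self._rules: Dict[str, List[Rule]] = {}

    def set_rules(self, concept: str, rules: List[Rule]) -> None:
        """Replace the rules for a concept; no model retraining involved."""
        self._rules[concept] = list(rules)

    def violations(self, concept: str, facts: Facts) -> List[str]:
        """Return the IDs of all rules the given facts fail to satisfy."""
        return [rid for rid, ok in self._rules.get(concept, []) if not ok(facts)]


rules = RuleSet()
facts = {"amount": 40_000}

# Original policy: amounts up to 50,000 are allowed.
rules.set_rules("LoanDecision", [("max-amount-v1", lambda f: f["amount"] <= 50_000)])
before = rules.violations("LoanDecision", facts)

# Policy shift: the cap drops to 25,000. Only the rule changes.
rules.set_rules("LoanDecision", [("max-amount-v2", lambda f: f["amount"] <= 25_000)])
after = rules.violations("LoanDecision", facts)

print(before, after)
```

The same facts pass under the first policy and fail under the second, which is the behavior the paragraph describes: the decision path changes when the ruleset does, while the underlying model stays untouched.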

This Is Already Happening

- Regulators are asking for AI transparency frameworks.

- Enterprise buyers are pushing for explainability audits.

- NIST, the EU AI Act, and UK regulators are all naming trust, traceability, and compliance as core requirements.

I built OntoGuard AI to meet this moment. The platform is patent-pending, demo-ready, and designed to interoperate with GPT, Claude, and open-source LLMs.

The Bottom Line

If enterprise AI is going to evolve, it must learn to justify — not just generate.

Ontology-Enhanced AI is a bridge to that future: a framework that combines the expressive power of LLMs with the integrity of symbolic logic and regulatory alignment.

Prediction alone isn’t enough anymore.

Structure is the new scale.

Learn more: ontoguard.ai

Contact: [email protected]

Patent Filed: April 24, 2025