Step into a modern store, a bank branch, or even a clinic, and little seems out of the ordinary. Machines beep, screens light up, and transactions complete in seconds. But beneath that calm is an unglamorous truth: most organizations still don’t really know what’s happening inside their own digital systems. One survey found that 84% of companies struggle with observability, the basic ability to understand whether their systems are working as they should. The reasons are familiar: monitoring tools are expensive, their architectures are clumsy, and when they are scaled across thousands of locations, the complexity often overwhelms the promise.

The cost of that opacity is not abstract. Every minute of downtime is lost revenue. Every unnoticed glitch is a frustrated customer. And every delay in diagnosis erodes trust. In this sense, observability is not just a matter for engineers; it’s central to how modern businesses function.

Against this backdrop, Prakash Velusamy, an enterprise solution architect and site reliability engineer, has made a set of contributions that quietly but significantly change the way reliability is approached in distributed environments.

Observability Where It Was Missing

Many enterprises run on Kubernetes-based infrastructure, and Prometheus has become the de facto standard for monitoring it. It works reasonably well in centralized data centers. But try running it across thousands of edge locations, say, retail outlets or branch offices, and the limits become obvious. Storage demands, network bandwidth, and resource consumption all spiral beyond what is feasible.

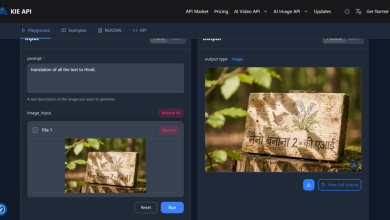

Prakash’s alternative was to rethink the model itself. He built a lightweight observability framework using OpenTelemetry, designed to work within the constraints of the edge. It combined Kubernetes at the base with structured logging, distributed tracing, and front-end instrumentation. The effect was a full-stack view from device to interface, without exhausting bandwidth or hardware.
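One common way to keep telemetry within an edge location's bandwidth budget is to decide locally which trace spans are worth sending upstream. The sketch below is illustrative only and is not taken from Prakash's framework: the `EdgeSpan` shape, the function names, and the keep ratio are assumptions, and the logic is a simplified, ratio-based sampler keyed on the trace ID that always retains error spans.

```typescript
// Hypothetical span shape for illustration; these are not OpenTelemetry SDK types.
interface EdgeSpan {
  traceId: string;    // 32 hex characters, as in W3C Trace Context
  name: string;
  durationMs: number;
  isError: boolean;
}

// Map the low 32 bits of the trace ID to a number in [0, 1).
function traceIdToUnitInterval(traceId: string): number {
  const low32 = parseInt(traceId.slice(-8), 16);
  return low32 / 0x100000000;
}

// Keep every error span, plus a fixed fraction of the rest.
// For non-error spans the decision depends only on the trace ID,
// so spans from the same trace receive the same decision.
function shouldExport(span: EdgeSpan, keepRatio: number): boolean {
  if (span.isError) return true;
  return traceIdToUnitInterval(span.traceId) < keepRatio;
}

// Reduce a local buffer to the spans worth sending upstream.
function selectForExport(buffer: EdgeSpan[], keepRatio: number): EdgeSpan[] {
  return buffer.filter((span) => shouldExport(span, keepRatio));
}
```

A store running with a keep ratio of 0.1 would forward roughly one in ten healthy traces while still reporting every failure, which is the kind of trade-off a resource-aware design has to make.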

“The challenge was not just technical,” he explains. “It was about balancing infrastructure needs with telemetry design, so that the solution could be resource-aware yet still scale.”

The approach has since been adopted in multiple large-scale retail settings, showing that an edge-native observability framework can be both practical and replicable.

From Load Times to Real Experience

For years, organizations measured performance in numbers that seemed clear but were oddly disconnected from what users actually felt: server response time, page load time, and so on. These told you how the system performed from its own perspective, not from the customer’s.

Prakash’s contribution here was a shift to Core Web Vitals, a set of metrics recommended by Google that better reflect real-world user experience. The framework uses a custom JavaScript SDK, built on current open-source libraries, to measure page performance. Among the metrics it captures is Largest Contentful Paint (LCP), which indicates how long it takes for the largest visible element on a page to appear. That single number reflects how quickly a user perceives the page’s main content as loaded.
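To make the metric concrete: Google's Core Web Vitals guidance rates an LCP of 2.5 seconds or less as good and anything above 4 seconds as poor, assessed at the 75th percentile of page loads. The sketch below applies those thresholds to a set of samples. The function names are hypothetical and this is not the SDK described above; in a browser, the samples would typically come from a `PerformanceObserver` watching `largest-contentful-paint` entries.

```typescript
type LcpRating = "good" | "needs-improvement" | "poor";

// Classify one LCP value (in milliseconds) using the published thresholds.
function rateLcp(lcpMs: number): LcpRating {
  if (lcpMs <= 2500) return "good";
  if (lcpMs <= 4000) return "needs-improvement";
  return "poor";
}

// 75th percentile by the nearest-rank method.
function percentile75(samplesMs: number[]): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  const sorted = samplesMs.slice().sort((a, b) => a - b);
  const rank = Math.ceil(0.75 * sorted.length);
  return sorted[rank - 1];
}

// Rate a page by the LCP that three out of four visits meet or beat.
function ratePage(samplesMs: number[]): LcpRating {
  return rateLcp(percentile75(samplesMs));
}
```

Rating the 75th percentile rather than the average keeps a handful of fast loads from hiding the slower experience that a sizable share of visitors actually get.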

This is not just a change of tools; it’s a change of lens. By re-centering performance measurement around user perception, organizations could finally see digital experience the way their customers do. Financial institutions and retailers alike have since referenced this approach, acknowledging it as a necessary evolution from legacy methods.

A Copilot for Crises

Monitoring, however advanced, cannot prevent incidents entirely. When systems fail, the speed of diagnosis becomes critical. By some estimates, unplanned downtime costs organizations an average of $1 million per hour, a figure that underscores the price of delay. The standard approach (engineers combing through logs, traces, and deployment histories) often slows the response when time is most precious.

Prakash developed the SRE Incident Copilot, an AI assistant built on multi-agent workflows. Instead of scattering engineers across data, the Copilot analyzes metrics, traces, and recent deployments in one go. The team can simply specify a service, and the Copilot surfaces the most relevant insights. It doesn’t remove human judgment, but it removes the noise.
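The general shape of such a workflow can be sketched as a coordinator that hands the same service snapshot to several specialized agents and ranks what they report. Everything below is hypothetical: the source does not describe the Copilot's internals, and the simple threshold rules here stand in for what would be model-driven analysis in a real system.

```typescript
// All names and thresholds are illustrative assumptions.
interface Finding {
  agent: string;     // which agent produced the finding
  summary: string;
  severity: number;  // 0 (informational) to 1 (critical)
}

interface ServiceSnapshot {
  service: string;
  errorRate: number;              // fraction of failed requests, 0 to 1
  p95LatencyMs: number;
  minutesSinceLastDeploy: number;
}

type SignalAgent = (snapshot: ServiceSnapshot) => Finding[];

const metricsAgent: SignalAgent = (s) =>
  s.errorRate > 0.05
    ? [{ agent: "metrics", summary: `error rate at ${(s.errorRate * 100).toFixed(1)}%`, severity: 0.9 }]
    : [];

const tracesAgent: SignalAgent = (s) =>
  s.p95LatencyMs > 1000
    ? [{ agent: "traces", summary: `p95 latency at ${s.p95LatencyMs} ms`, severity: 0.6 }]
    : [];

const deployAgent: SignalAgent = (s) =>
  s.minutesSinceLastDeploy < 30
    ? [{ agent: "deploys", summary: `deployed ${s.minutesSinceLastDeploy} min ago`, severity: 0.7 }]
    : [];

// Run every agent on the snapshot and return findings, most severe first.
function investigate(snapshot: ServiceSnapshot, agents: SignalAgent[]): Finding[] {
  const all: Finding[] = [];
  for (const agent of agents) {
    for (const finding of agent(snapshot)) all.push(finding);
  }
  return all.sort((a, b) => b.severity - a.severity);
}
```

Calling `investigate` with a snapshot for a single service returns one ranked list, which mirrors the idea of an engineer naming a service and getting the most relevant signals first rather than searching each data source separately.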

The effect is measurable: shorter mean time to detection, faster diagnosis, and quicker recovery. It also changes the psychology of incident management. Teams spend less time gathering data and more time solving the problem.

Influence Beyond One Organization

What stands out is not only the design of these solutions but their uptake elsewhere. The edge observability model first proven in retail has been mirrored in other industries, including banking. The Core Web Vitals approach has been picked up by financial services firms seeking to sharpen digital performance. And the Incident Copilot reflects a broader shift toward embedding AI into reliability practices.

Industry peers have described the edge observability work as “innovative, cost-effective, and cloud-native.” The user experience monitoring framework, in turn, has been called “a new open standard” for assessing applications. These are not just internal validations but signs of wider relevance.

Connecting the Threads

Taken together, Prakash’s three projects (observability at the edge, performance measurement via Web Vitals, and AI-supported incident response) tell a story larger than the sum of their parts. They represent a rethinking of reliability itself, one that brings infrastructure, user experience, and incident response into a single continuum.

As he puts it, “Reliability today isn’t about servers staying up. It’s about connecting the dots, seeing from the device in the customer’s hand to the AI tool helping diagnose a failure. Only then can enterprises deliver trust at scale.”

That shift in framing may prove to be his most significant contribution. It moves observability out of the server room and into the larger conversation about how modern enterprises maintain resilience. And in a world where technology is the quiet backbone of daily life, that perspective may matter as much as the tools themselves.