Prompt engineering got us moving, but context engineering is how teams ship outcomes. Over the last year I’ve watched the Model Context Protocol (MCP) turn messy, one‑off integrations into a standard way to connect assistants to data and tools. MCP gives you a predictable port (think “USB‑C for AI”), and context engineering gives you a playbook for what to send through it: ground truth, guardrails, and just enough state to finish the job. This post covers how I approach the two together: what MCP is, how context actually flows, and a pattern that keeps agents reliable at scale.

What MCP is (and why it matters)

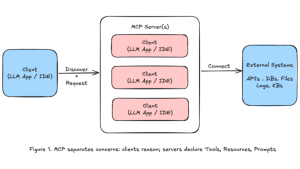

MCP is an open standard for connecting AI applications to external systems without bespoke glue code for each app or dataset. In MCP terms, servers expose three things: tools (callable functions), resources (data you can read), and prompts (reusable, parameterized instructions). Clients, running inside host applications such as your IDE, desktop assistant, or web app, discover what’s available and request exactly the context they need. The result is a clean separation of concerns: models focus on reasoning, MCP standardizes discovery and transport, and the host enforces permissions and user consent. [1][2][3]
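To make the three primitives concrete, here is a minimal sketch of the JSON-RPC 2.0 requests a client sends to discover and use them once the initialization handshake (omitted here) is done. The method names (`tools/list`, `tools/call`, `resources/read`, `prompts/get`, and so on) follow my reading of the 2025-06-18 specification [2]; the tool, URI, prompt, and argument names are hypothetical placeholders, and a real client would use an SDK and a transport such as stdio or HTTP rather than hand-building dicts.

```python
from __future__ import annotations

import itertools
import json
from typing import Any

# JSON-RPC 2.0 requests carry a unique id so responses can be matched to them.
_ids = itertools.count(1)


def rpc_request(method: str, params: dict[str, Any] | None = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope for an MCP method."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Discovery: ask the server what it offers.
list_tools = rpc_request("tools/list")
list_resources = rpc_request("resources/list")
list_prompts = rpc_request("prompts/list")

# Use: call a tool, read a resource, fetch a parameterized prompt.
# The tool, URI, prompt, and argument names below are hypothetical.
call_tool = rpc_request("tools/call", {"name": "get_customer", "arguments": {"customer_id": "c-42"}})
read_resource = rpc_request("resources/read", {"uri": "kb://refund-policy"})
get_prompt = rpc_request("prompts/get", {"name": "refund-eligibility-check", "arguments": {"order_id": "123"}})

for msg in (list_tools, list_resources, list_prompts, call_tool, read_resource, get_prompt):
    print(json.dumps(msg))
```

Discovery and use are the same envelope with different methods, which is why a client can work against any conforming server without per-server glue.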

Context engineering in practice

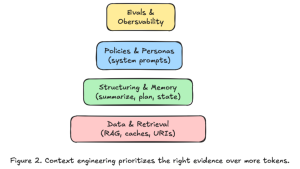

Context engineering treats the context window like a scarce budget. Instead of dumping everything into a prompt, the goal is to route just‑in‑time evidence: who the user is, what they’re doing, and the canonical facts they’re allowed to see. In most real systems, that means retrieval‑augmented generation (RAG) for facts, structured prompts for policy and voice, and slim task memory so agents don’t lose the plot. The combination reduces hallucination risk and keeps responses traceable to source material. [6]
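Treating the window as a budget can be sketched as a greedy packer: always-on instructions go in first, then retrieved snippets in descending relevance until the budget runs out. This is a minimal sketch under stated assumptions: the `Snippet` type, the relevance scores, and the whitespace-based `estimate_tokens` are hypothetical stand-ins for a real retriever and tokenizer, and the sample snippets are made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Snippet:
    source: str   # URI or document ID, kept so every fact stays attributable
    text: str
    score: float  # relevance from a (hypothetical) retriever


def estimate_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def pack_context(policy: str, snippets: list[Snippet], budget: int) -> tuple[str, list[str]]:
    """Return (context, sources): policy always first, then the most relevant snippets that fit."""
    used = estimate_tokens(policy)
    if used > budget:
        raise ValueError("policy alone exceeds the token budget")
    parts: list[str] = [policy]
    sources: list[str] = []
    for snip in sorted(snippets, key=lambda s: s.score, reverse=True):
        line = f"[{snip.source}] {snip.text}"  # tag each snippet with its source
        cost = estimate_tokens(line)
        if used + cost <= budget:  # skip anything that would overflow, keep trying the rest
            parts.append(line)
            sources.append(snip.source)
            used += cost
    return "\n\n".join(parts), sources


# Made-up snippets for illustration.
snippets = [
    Snippet("kb://refund-policy", "Refunds are allowed within 30 days of delivery.", 0.9),
    Snippet("db://orders/123", "Order 123 was delivered 12 days ago.", 0.8),
    Snippet("kb://shipping-faq", "Standard shipping takes three to five business days.", 0.2),
]
context, sources = pack_context("Answer using only the cited sources.", snippets, budget=30)
print(sources)
```

With a 30-word budget the two highest-scoring snippets fit and the low-relevance shipping FAQ is dropped. Greedy packing is the simplest policy; the source tags are what keep the final answer traceable.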

A simple architecture that scales

Here is the pattern I’ve used and seen work. A client (say, in an IDE or desktop assistant) connects to one or more MCP servers. Each server advertises tools (e.g., “create_ticket”, “get_customer”), resources (e.g., “kb://refund-policy”, “db://orders/123”), and prompts (e.g., “refund-eligibility-check”). At run time, the client assembles a task‑specific context: a lightweight system message, a few retrieved documents, and a plan that names which tools are allowed. Because capabilities are declared up front and tool inputs are typed by their schemas, observability and safety controls are far easier to implement. [1][2][3][4]
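Here is a minimal sketch of that run-time assembly step, assuming the client has already discovered the server’s catalog. The `Catalog` and `TaskContext` types and the `assemble` helper are hypothetical, not part of any MCP SDK; the point is that the plan names its allowed tools explicitly and refuses anything the server never advertised.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Catalog:
    """What a server advertised during discovery (names from the example above)."""
    tools: frozenset[str]
    resources: frozenset[str]
    prompts: frozenset[str]


@dataclass
class TaskContext:
    system: str
    resources: list[str]
    allowed_tools: list[str]
    prompt: str | None = None


def assemble(catalog: Catalog, system: str, want_resources: list[str],
             allow_tools: list[str], prompt: str | None = None) -> TaskContext:
    # Refuse to plan around anything the server did not declare.
    unknown = (set(allow_tools) - catalog.tools) | (set(want_resources) - catalog.resources)
    if prompt is not None and prompt not in catalog.prompts:
        unknown.add(prompt)
    if unknown:
        raise ValueError(f"not advertised by server: {sorted(unknown)}")
    return TaskContext(system, list(want_resources), list(allow_tools), prompt)


catalog = Catalog(
    tools=frozenset({"create_ticket", "get_customer"}),
    resources=frozenset({"kb://refund-policy", "db://orders/123"}),
    prompts=frozenset({"refund-eligibility-check"}),
)
# Read-only task: "create_ticket" is advertised but deliberately left off the allowlist.
ctx = assemble(catalog, "You are a support assistant. Cite your sources.",
               ["kb://refund-policy", "db://orders/123"], ["get_customer"],
               prompt="refund-eligibility-check")
print(ctx)
```

Failing loudly on undeclared names at planning time is cheaper than discovering them mid-run, and the resulting `TaskContext` doubles as an audit record of what the agent was allowed to touch.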

MCP + context engineering, step‑by‑step

1) **Define the contract.** On the server side, publish tools with clear schemas, stable names, and input/output validation. Expose resources by URI and group prompts as reusable templates so clients can parameterize them. Add metadata that a policy engine can reason about: PII categories, permissions, scopes, and rate limits. [2]

2) **Plan the context.** Decide what must be in the window every time (policy, tone, persona) and what should be retrieved on demand (facts, IDs, recent activity). This is where RAG and caching earn their keep; fetched context should be small, recent, and attributable. [6]

3) **Run with bounded autonomy.** Give the agent a step budget and an allowlist of tools. Log each tool call, the retrieved resources, and the reasoning breadcrumb so humans can audit later. Keep write actions behind an approval gate until the success rate is boringly high.

4) **Secure the loop.** Treat inputs and tools as untrusted by default. Sanitize retrieved content, sandbox tool execution, and monitor for prompt injection, insecure output handling, and excessive agency. [7]

5) **Measure and iterate.** Track task success, handoff reasons, cycle time, and cost per task (model + tools + human minutes). Add every near‑miss to the evaluation set so regressions get caught in CI.
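Steps 1 and 3 can be sketched together as a small tool runner. This is an illustrative sketch, not an MCP SDK API: `ToolSpec`, the `writes` flag, the required-argument check (a tiny subset of JSON Schema validation), the `approve` callback, and the stub handlers with their made-up data are all hypothetical. It enforces an allowlist, a hard step budget, and an approval gate on write tools, and records every attempt in a trace.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


def deny_all(name: str, args: dict[str, Any]) -> bool:
    """Default approver: every write waits for a human."""
    return False


@dataclass
class ToolSpec:
    name: str
    required: list[str]                      # required argument names (a tiny subset of JSON Schema)
    handler: Callable[[dict[str, Any]], Any]
    writes: bool = False                     # write tools go through the approval gate


class BudgetExceeded(RuntimeError):
    pass


@dataclass
class Runner:
    tools: dict[str, ToolSpec]
    allowlist: set[str]
    step_budget: int
    approve: Callable[[str, dict[str, Any]], bool] = deny_all
    trace: list[dict[str, Any]] = field(default_factory=list)
    steps: int = 0

    def call(self, name: str, args: dict[str, Any]) -> Any:
        # Every attempt, allowed or not, spends one step from the budget.
        if self.steps >= self.step_budget:
            raise BudgetExceeded(f"step budget of {self.step_budget} exhausted")
        self.steps += 1
        entry: dict[str, Any] = {"step": self.steps, "tool": name, "args": args}
        self.trace.append(entry)  # log before acting so denials are auditable too
        if name not in self.allowlist or name not in self.tools:
            entry["outcome"] = "denied: not allowlisted"
            return None
        spec = self.tools[name]
        missing = [k for k in spec.required if k not in args]
        if missing:
            entry["outcome"] = f"rejected: missing {missing}"
            return None
        if spec.writes and not self.approve(name, args):
            entry["outcome"] = "held for human approval"
            return None
        result = spec.handler(args)
        entry["outcome"] = "ok"
        return result


# Stub handlers with made-up data stand in for real MCP tool calls.
tools = {
    "get_customer": ToolSpec("get_customer", ["customer_id"], lambda a: {"id": a["customer_id"], "tier": "gold"}),
    "create_ticket": ToolSpec("create_ticket", ["summary"], lambda a: "T-1", writes=True),
}
runner = Runner(tools, allowlist={"get_customer", "create_ticket"}, step_budget=3)
customer = runner.call("get_customer", {"customer_id": "c-42"})       # runs
ticket = runner.call("create_ticket", {"summary": "Refund request"})  # held: no approver
runner.call("delete_account", {"customer_id": "c-42"})                # denied: not allowlisted
for e in runner.trace:
    print(e["step"], e["tool"], e["outcome"])
```

Lifting the write gate later means swapping `deny_all` for a real approver, without touching the loop, and the trace is already in the shape step 5 needs.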

Two concrete examples

**Support triage.** The assistant classifies inbound cases, checks entitlement via a tool, retrieves one or two policy resources, and drafts a resolution note using a prompt template. High‑risk intents (refunds, legal claims) automatically escalate to human review. Because everything is declared in MCP (the tools, the policy documents, the prompts), auditing is a matter of reading the trace. Teams get faster handle times without giving up control. [2]
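The escalation rule in this example is simple enough to sketch directly. Assume a hypothetical classifier has already labeled the case with an intent and a confidence, and the entitlement tool has returned a boolean; the intent names, the `HIGH_RISK` set, and the 0.7 threshold are illustrative choices, not values from any real deployment.

```python
from __future__ import annotations

from dataclasses import dataclass

# Intents that always go to a human, regardless of classifier confidence.
HIGH_RISK = {"refund", "legal_claim"}
MIN_CONFIDENCE = 0.7  # below this, the assistant does not act on its own label


@dataclass
class Case:
    case_id: str
    intent: str
    confidence: float
    entitled: bool  # result of the (hypothetical) entitlement tool call


def route(case: Case) -> tuple[str, str]:
    """Return (route, reason); the reason feeds the handoff metrics."""
    if case.intent in HIGH_RISK:
        return "human_review", f"high-risk intent: {case.intent}"
    if case.confidence < MIN_CONFIDENCE:
        return "human_review", "low classifier confidence"
    if not case.entitled:
        return "human_review", "no entitlement"
    return "draft_resolution", "eligible for assisted draft"


print(route(Case("c-1", "refund", 0.99, True)))
print(route(Case("c-2", "password_reset", 0.9, True)))
```

Checking risk before confidence is deliberate: a confident refund classification still goes to a person.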

**Developer productivity.** Inside an IDE, the client connects to a code‑aware MCP server. Resources expose file contents and dependency metadata; tools open a branch, run tests, or raise a PR; prompts standardize commit messages and review checklists. The agent plans a few steps, retrieves only the relevant files, and calls allowlisted tools under a strict step budget. It feels like help from a disciplined teammate instead of a black box. [3][4][5]
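A prompt that standardizes commit messages might look like this on the server side: a named template with declared arguments, rendered into a list of messages when a client fetches it. The shape loosely mirrors the prompt definitions and `messages` result described in the specification [2], but the template wording, the argument names, and the `render_prompt` helper are hypothetical.

```python
from __future__ import annotations

from string import Template
from typing import Any

COMMIT_PROMPT: dict[str, Any] = {
    "name": "commit-message",
    "description": "Draft a commit message in the team's house style",
    "arguments": [
        {"name": "change_type", "description": "feat, fix, chore, ...", "required": True},
        {"name": "diff_summary", "description": "Short summary of the staged diff", "required": True},
        {"name": "ticket", "description": "Optional ticket reference", "required": False},
    ],
    # Server-side only: the template itself is not part of what clients discover.
    "template": Template(
        "Write a commit message of type '$change_type'. Subject under 72 characters, "
        "imperative mood, then a blank line and a body explaining why.\n"
        "Changes: $diff_summary\nTicket: $ticket"
    ),
}


def render_prompt(prompt: dict[str, Any], args: dict[str, str]) -> dict[str, Any]:
    """Validate arguments and return a prompts/get-style result with one user message."""
    missing = [a["name"] for a in prompt["arguments"] if a["required"] and a["name"] not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    # Optional arguments the client omitted render as "none".
    values = {a["name"]: args.get(a["name"], "none") for a in prompt["arguments"]}
    text = prompt["template"].substitute(values)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


result = render_prompt(COMMIT_PROMPT, {"change_type": "fix", "diff_summary": "handle empty cart"})
print(result["messages"][0]["content"]["text"])
```

Keeping the wording on the server means every client that fetches the prompt gets the same checklist, which is the standardization the example describes.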

Rollout plan (30/60/90)

**Days 0–30: Prove the plumbing.** Stand up a single MCP server with two tools, two resource families, and one prompt pack. Ship a tiny task with a hard step budget and read‑only tools; build a golden‑task suite and capture traces.

**Days 31–60: Make it trustworthy.** Add an adjacent task, expand tools to four, and wire in a policy engine that enforces scopes and rate limits. Introduce adversarial tests (tainted content, strange arguments) and a rollback runbook. [7]

**Days 61–90: Prove value.** Turn on assisted execution for a subset of users and publish a value‑plus‑risk scorecard: success rate, handoffs, cycle time, cost per task, incidents/near‑misses. Only then consider lifting write gates.
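The value-plus-risk scorecard is mostly arithmetic over captured traces. Here is a minimal sketch, assuming each finished task is logged as a hypothetical `TaskRecord` with an outcome, an optional handoff reason, timing, and cost components; the `human_rate_per_minute` used to price human minutes is an assumption you would replace with your own, and the sample records are made up.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from statistics import median
from typing import Any


@dataclass
class TaskRecord:
    succeeded: bool
    handoff_reason: str | None  # None when the agent finished without a human
    cycle_minutes: float
    model_cost: float           # in whatever currency you budget in
    tool_cost: float
    human_minutes: float
    incident: bool = False      # incidents and near-misses both count


def scorecard(records: list[TaskRecord], human_rate_per_minute: float) -> dict[str, Any]:
    if not records:
        raise ValueError("no tasks recorded")
    n = len(records)
    # Cost per task = model + tools + human minutes priced at an assumed rate.
    total_cost = sum(r.model_cost + r.tool_cost + r.human_minutes * human_rate_per_minute for r in records)
    reasons = Counter(r.handoff_reason for r in records if r.handoff_reason is not None)
    return {
        "tasks": n,
        "success_rate": sum(r.succeeded for r in records) / n,
        "handoff_rate": sum(reasons.values()) / n,
        "top_handoff_reasons": reasons.most_common(3),
        "median_cycle_minutes": median([r.cycle_minutes for r in records]),
        "cost_per_task": total_cost / n,
        "incidents_and_near_misses": sum(r.incident for r in records),
    }


# Made-up records for illustration only.
records = [
    TaskRecord(True, None, 4.0, 0.02, 0.01, 0.0),
    TaskRecord(True, "refund", 10.0, 0.03, 0.01, 5.0),
    TaskRecord(False, "low confidence", 20.0, 0.05, 0.02, 8.0, incident=True),
    TaskRecord(True, None, 6.0, 0.02, 0.00, 0.0),
]
card = scorecard(records, human_rate_per_minute=1.0)
print(card)
```

Pricing human minutes into cost per task keeps the scorecard honest: an agent that "succeeds" by handing most work to people will show it.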

Where MCP is heading

MCP isn’t theory; it’s already powering real clients, including desktop assistants that connect to local servers for file access and other integrations. Vendors are adopting it because “integrate once, reuse everywhere” is cheaper than N bespoke connectors per app. Expect richer capabilities around session state, enterprise identity, and standardized observability so teams can trace actions across servers. That evolution makes context engineering more valuable, not less. [4][5]

References

[1] Model Context Protocol (overview): https://modelcontextprotocol.io/

[2] MCP Specification (2025-06-18), Server Tools and Server Prompts: https://modelcontextprotocol.io/specification/2025-06-18/server/tools and https://modelcontextprotocol.io/specification/2025-06-18/server/prompts

[3] Claude Docs, Model Context Protocol: https://docs.claude.com/en/docs/mcp

[4] Connect to local MCP servers (Claude Desktop): https://modelcontextprotocol.io/docs/develop/connect-local-servers

[5] Anthropic, Introducing the Model Context Protocol: https://www.anthropic.com/news/model-context-protocol

[6] Lewis et al., “Retrieval‑Augmented Generation for Knowledge‑Intensive NLP Tasks,” NeurIPS 2020: https://proceedings.neurips.cc/paper/2020/file/6b493230205f780e1bc26945df7481e5-Paper.pdf

[7] OWASP Top 10 for LLM Applications: https://owasp.org/www-project-top-10-for-large-language-model-applications/