Interest in AI governance has increased dramatically over the past 12 months. Why is this? With the release of ChatGPT, business leaders quickly recognised the transformational potential of AI. The focus was on generative AI, but it also brought greater appreciation of the potential of ‘traditional’ AI, i.e. non-generative forms of AI. Leaders urged their teams to introduce AI into internal processes, products and services as quickly as possible to get ahead of the competition. The problem was that this confidence was based on having used a consumer product, ChatGPT, which, while undeniably powerful, could afford to exhibit a degree of risky behaviour and inaccuracy that a business deployment could not. These inaccuracies soon became known as hallucinations, or confabulations, and the headlines are littered with egregious examples.

As data science teams raced to introduce generative AI solutions, they faced a conundrum: how could they ensure that these AI systems did not go wrong? This is particularly challenging for generative AI, since there are not yet (and, given the way generative AI works, may never be) any 100% reliable methods for ensuring that it produces accurate answers, is free of bias and can be explained, to name just some of the risks.

This presents business leaders with an uncomfortable reality: risks they cannot quantify, which could lead to reputational damage, regulatory action and loss of customer trust. A business leader responsible for launching a generative AI-powered customer service agent told me that they had never faced as much scrutiny from senior management as they did before being given approval to go live. Once the leadership understood that, despite extensive testing, there was no way of being 100% certain that the agent would never hallucinate, they decided to restrict its scope and implement a phased launch.

To make matters worse, while we still cannot be completely confident in our controls for generative AI, AI ‘agents’ introduce even more risk. Undoubtedly, AI agents can deliver more business value because, unlike simple generative AI systems, they can take actions based on what they infer from instructions, research and analysis. But this very agency raises the stakes if something goes wrong. And because agents typically work as a collective, each with specific tasks, an error by one agent can quickly propagate through the whole system.

AI governance is the solution

The answer is to design and implement robust AI governance guardrails. These provide assurance that the right questions are asked, the right technical evaluations are conducted, and a transparent, well-judged risk/benefit analysis is undertaken before deciding whether to use AI in each situation. The result is AI systems that are legally compliant and that customers, colleagues, the board and investors can trust; and trust is the essential ingredient for adoption. But before describing how to design such a system, we should understand exactly which AI risks we need to identify and mitigate.

The risks of AI

By now there is no shortage of AI risk frameworks. We have surveyed key frameworks such as:

- The AI Risk Management Framework (AI RMF 1.0) published by the US National Institute of Standards and Technology (NIST).

- The approach of the Organisation for Economic Co-operation and Development (OECD), which prioritizes protecting fundamental human rights.

- The EU AI Act.

- The UK Government’s approach, which is more flexible and principles-based.

- The FEAT principles (Fairness, Ethics, Accountability and Transparency) from the Monetary Authority of Singapore (MAS), an early proponent of AI governance.

We have synthesised these and other approaches to create a consolidated AI risk landscape consisting of nine key risk areas:

- Accuracy and Reliability: ensuring the AI system performs as intended and delivers accurate results under both expected and unexpected conditions.

- Fairness and Bias: determining fairness objectives and appropriate measurements, which should be centred on outcomes, context-dependent and considered from the perspective of all stakeholders who will be affected by the system in question. Minimizing unintended bias and discrimination.

- Interpretability, Explainability and Transparency: ensuring that the developers of the AI system understand technically how it arrived at a given output and that impacted individuals can be given a straightforward explanation which they can understand. Proactively notifying impacted individuals when they are subject to an AI-driven system or decision.

- Accountability: designating executive(s) to be accountable for the performance and outputs of the AI system and compliance with legislation and regulations, as well as any other stated objectives. Guarding against over-reliance and automation bias on the part of AI system users. Providing impacted individuals with a clear path for contestability and redress.

- Privacy: using data with appropriate permissions; safeguarding sensitive content and identifiers. Determining appropriate levels of surveillance and control.

- Security: protecting against attacks which could result in data leakage or adversarial manipulation of the AI system. Preventing the use of AI systems for conducting cyberattacks. Safeguarding against danger to human life, health, property or the environment, including through AI increasing the risk posed by chemical, biological, radiological and nuclear weapons.

- Intellectual Property and Confidentiality: preventing plagiarism and the use of copyrighted, trademarked or licensed content without authorization. Protecting against the leakage of confidential information.

- Workforce: minimizing the negative impact of AI on employment. Mitigating the psychological harm that some AI-related tasks can cause colleagues.

- Environment and Sustainability: minimizing the negative environmental impact from training AI models on large amounts of data, and from each use of the model.

Implementing AI governance

Effective AI governance requires sponsorship from the top. Why? Because it has to be a cross-enterprise endeavour, bringing together colleagues from data science, legal, risk/compliance, information security, lines of business and HR at a minimum. Most importantly, it relies on a culture of AI risk awareness and an understanding of when risk evaluation is needed. A powerful senior sponsor can help to secure the support needed to build such a program.

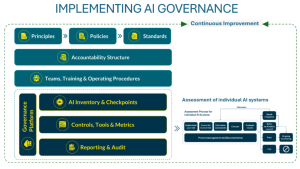

We recommend adopting the following framework to design your AI governance program, recognising that it should be tailored to the size of your organisation and available resources (see diagram above). Importantly, we also recommend reviewing existing internal risk management and governance processes to see whether AI-specific considerations can be added into existing processes rather than creating new ones. This is preferable where possible: it simplifies the controls for colleagues and will accelerate implementation, since accountable executives and approval forums will already exist.

Principles, policies and standards

A set of AI principles serves as the guiding light for the governance program and should tie closely to the core values of the organisation; for example, a life sciences company will anchor its principles firmly in science for health and patient care. Policies then capture the principles in a more legalistic form that can be used to enforce behaviour within the organisation. Perhaps more important, though, are what we call standards or operating procedures. These are much more practical and translate the policy into detail that helps colleagues comply with it. For example, the policy may state that customers should be treated fairly, but the standard will explain how fairness should be defined and measured in an AI context.

Accountability structure, teams and training

As mentioned above, implementing AI governance requires cross-enterprise participation. This makes coordination, the allocation of responsibilities and clarification of accountabilities particularly important. Most organisations will have existing information security, data governance and privacy procedures: how will AI governance dovetail with these without creating duplication or leaving gaps?

For organisations operating in the EU, Article 4 of the EU AI Act requires providers and deployers of AI systems to take measures to ensure, to the best of their ability, a sufficient level of AI literacy among their staff. Even without this legal requirement, the success of an AI governance program will depend on building expertise across the business. This ranges from general awareness training for everyone in the organisation, covering AI risks and how to use AI tools such as Copilot responsibly, to specialist technical training for colleagues who will be conducting AI risk assessments and AI system evaluations.

AI Inventory, checkpoints and assessment process

In some ways this is the heart of the AI governance process. Creating and maintaining an AI inventory is often a huge challenge, particularly since AI is increasingly embedded in third-party applications and modern development tools make it easy for ever larger numbers of colleagues to create AI applications to help with their daily tasks. While this innovation is welcome, it is nonetheless important to consider the potential risks of every use of AI.

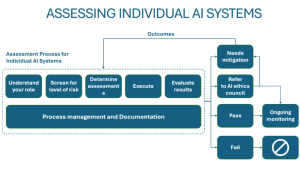

By checkpoints we mean specific points in existing processes where the question can be asked: “will this proposed new tool (developed internally or purchased from a third party) use AI?” If the answer is “yes”, then a simple set of questions based on the proposed use of the tool will establish whether this is a higher or lower risk use of AI. If it is potentially high risk, then a detailed risk assessment should be undertaken (see diagram above). Where risks are identified, these will need to be mitigated, or a decision taken at the appropriate level of seniority that the benefits outweigh the risks and that the organisation is willing to accept the residual risk.
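To make the checkpoint idea concrete, here is a minimal Python sketch of the simple screening step. The four questions, the routing labels and the rule that any “yes” triggers a detailed risk assessment are all hypothetical assumptions for illustration; an organisation would substitute its own questionnaire and escalation criteria.

```python
from dataclasses import dataclass, field

# Hypothetical screening questions, for illustration only; a real checkpoint
# would use the organisation's own questionnaire, aligned with its policy.
SCREENING_QUESTIONS = {
    "affects_individuals": "Does it make or materially inform decisions about individuals?",
    "uses_personal_data": "Does it process personal or sensitive data?",
    "customer_facing": "Will customers interact with it or see its outputs directly?",
    "acts_autonomously": "Can it take actions without human review (e.g. an AI agent)?",
}


@dataclass
class ProposedTool:
    name: str
    uses_ai: bool
    # Maps a screening-question key to the requester's yes/no answer.
    answers: dict = field(default_factory=dict)


def triage(tool: ProposedTool) -> tuple[str, list[str]]:
    """Route a proposed tool at a checkpoint and list any higher-risk flags."""
    if not tool.uses_ai:
        return "no AI review required", []
    # Unanswered questions count as "yes" so that gaps err on the side of caution.
    flags = [key for key in SCREENING_QUESTIONS if tool.answers.get(key, True)]
    if flags:
        return "detailed risk assessment", flags
    return "lower risk: record in AI inventory", []


chatbot = ProposedTool(
    name="customer service assistant",
    uses_ai=True,
    answers={"affects_individuals": False, "uses_personal_data": True,
             "customer_facing": True, "acts_autonomously": False},
)
print(triage(chatbot))
# ('detailed risk assessment', ['uses_personal_data', 'customer_facing'])
```

Treating an unanswered question as “yes” is a deliberate choice in this sketch: missing information at a checkpoint pushes a tool towards review rather than past it, and even lower-risk tools are still routed into the AI inventory.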

Governance controls

In order to create guardrails which will be effective at controlling the use of AI in an organisation, we distinguish between control requirements and control routines.

Control requirements describe what criteria must be satisfied at any point in time when building or deploying AI in the organization – for example, a fairness measure that needs to be met or a human oversight requirement under certain conditions.

Control routines are implemented as tools or processes delivering quantitative or qualitative results measured against the control requirement – for example, a technical tool which computes one or more fairness metrics or a check which asks for evidence of human oversight such as a sign-off.
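The distinction between a requirement and a routine can be sketched in a few lines of Python. Here the control requirement is a maximum allowed gap in positive-outcome rates between groups, and the control routine computes demographic parity difference, which is only one of many possible fairness metrics. The metric, the 0.1 threshold and the sample data are assumptions for illustration; which metric and threshold are appropriate is exactly what the organisation’s standards need to define for each context. Open-source libraries such as Fairlearn provide tested implementations of this and other fairness metrics.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlRequirement:
    """The criterion that must be satisfied (hypothetical example)."""
    name: str
    max_value: float


def demographic_parity_difference(predictions, groups):
    """Control routine: largest gap in positive-outcome rate between any two groups."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    if not totals:
        raise ValueError("no predictions supplied")
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def evaluate_control(requirement, value):
    """Compare a routine's result with its requirement and return an auditable record."""
    return {
        "control": requirement.name,
        "value": round(value, 3),
        "threshold": requirement.max_value,
        "passed": value <= requirement.max_value,
    }


# Illustrative data only: 1 = loan approved, 0 = declined.
requirement = ControlRequirement(name="loan approval parity", max_value=0.1)
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap = demographic_parity_difference(predictions, groups)
print(evaluate_control(requirement, gap))
# {'control': 'loan approval parity', 'value': 0.5, 'threshold': 0.1, 'passed': False}
```

A failed check like this does not automatically mean the system is rejected: the result would feed into a decision either to mitigate the risk or to accept the residual risk at the appropriate level of seniority.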

As can be seen, the organisation will need a set of quantitative tools to conduct AI system evaluations for risks such as bias, lack of explainability and hallucinations. It will also need to determine the appropriate metrics and success thresholds: for example, there will often be a tension between accuracy and bias, but what level of bias is the organisation willing to accept?

AI governance platforms

In order to enforce these control requirements, organisations are increasingly turning to specialist AI governance platform software. Such a platform will establish a mandatory workflow with appropriate checks, stage gates and sign-offs, and will document the results. While many larger companies may have an existing Governance, Risk and Compliance (GRC) platform, these platforms have in most cases not proven to be well-suited for managing the peculiarities of AI. Therefore, a number of specialist AI governance platform providers have emerged over the past few years. In addition, some organisations are using workflow platforms such as ServiceNow to orchestrate their AI governance.
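The core of what such a platform enforces, a fixed sequence of stage gates that can only be passed with a recorded sign-off, can be sketched in Python as follows. The stage names, the requirement for a named approver plus evidence, and the audit-log fields are hypothetical assumptions; they do not reflect the data model of any particular GRC, AI governance or workflow product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical stage gates; real platforms let organisations configure their own.
STAGES = ["intake", "risk assessment", "evaluation", "approval", "deployed"]


@dataclass
class GovernanceCase:
    system_name: str
    stage_index: int = 0
    audit_log: list = field(default_factory=list)

    @property
    def stage(self) -> str:
        return STAGES[self.stage_index]

    def advance(self, approver: str, evidence: str) -> None:
        """Move to the next stage only with a named sign-off and supporting evidence."""
        if self.stage_index == len(STAGES) - 1:
            raise ValueError("case is already at the final stage")
        if not approver or not evidence:
            raise ValueError(f"sign-off and evidence are required to leave '{self.stage}'")
        # Record who approved the transition, on what evidence, and when.
        self.audit_log.append({
            "from": self.stage,
            "to": STAGES[self.stage_index + 1],
            "approver": approver,
            "evidence": evidence,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        })
        self.stage_index += 1


case = GovernanceCase("customer service assistant")
case.advance(approver="Head of Customer Service", evidence="completed screening questionnaire")
print(case.stage)  # risk assessment

try:
    case.advance(approver="", evidence="")
except ValueError as err:
    print(err)  # sign-off and evidence are required to leave 'risk assessment'
```

Because `advance` only ever moves one stage forward, no gate can be skipped, and every transition leaves a timestamped record of who approved it and on what evidence.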

Closing thoughts

It is a common misconception to think of AI governance as a compliance-driven inhibitor to innovation. In fact, implementing demonstrable, robust AI governance is how senior leaders, customers and investors can be assured that AI risks are being suitably identified and mitigated. This will create the trust in AI which is needed for leaders to confidently scale its use and for customers and colleagues to feel comfortable adopting it. AI offers enormous potential to transform businesses and society, but without good governance that potential will not be realised.