Music has always been a deeply emotional experience, but turning sound into compelling visuals has traditionally required time, skill, and expensive production tools. Whether it meant hiring motion designers or learning complex video software, creating a music video or visualizer has long been out of reach for many independent artists and creators.

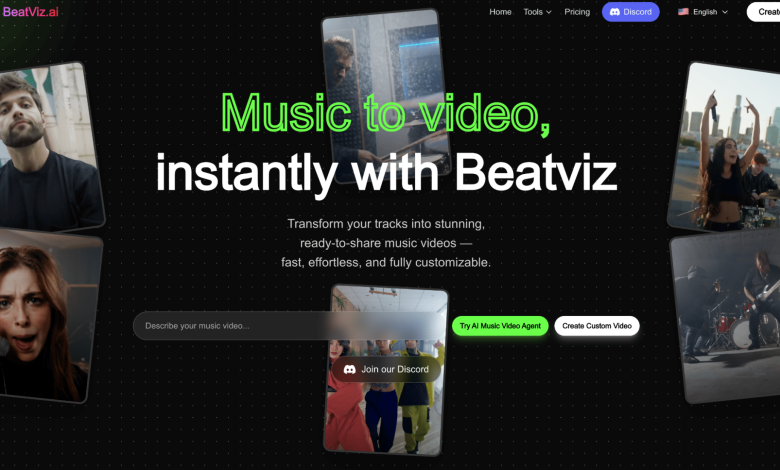

In recent years, however, AI music video generators have begun to change this landscape. Tools like Beatviz illustrate how artificial intelligence can transform raw audio into expressive visual art, lowering the barrier to entry while opening up new creative possibilities.

The Challenge of Visualizing Music

Visual storytelling has become increasingly important in the digital age. Platforms such as YouTube, TikTok, and Instagram favor video-first content, even for music releases. Yet many musicians face a common dilemma: while they can produce music independently, visual content often requires a separate skill set and budget.

Traditional music visualization involves manually syncing visuals to rhythm, tempo, and mood. This process is not only time-consuming but also technically demanding. As a result, many creators either skip visuals altogether or rely on generic templates that fail to reflect the character of their music.

AI music video generators aim to address this gap by automating the connection between sound and sight.

How an AI Music Video Generator Understands Audio

At the core of any AI music video generator is audio analysis. Instead of treating a song as a single opaque file, these systems break the audio into several layers of information. These commonly include rhythm, tempo, amplitude, frequency distribution, and an estimate of emotional tone.
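To make "frequency distribution" concrete, here is a minimal sketch of how one short frame of audio could be split into low-frequency and high-frequency energy. It uses a naive discrete Fourier transform from the Python standard library for readability; real tools would almost certainly use an optimized FFT. The function name `band_energies` and the 250 Hz split point are assumptions chosen for illustration, not details of any particular product.

```python
import cmath
import math


def band_energies(frame, sample_rate, split_hz=250.0):
    """Return (low, high) spectral energy of one frame using a naive DFT.

    split_hz is an illustrative boundary between "bass" and everything above it.
    """
    n = len(frame)
    low = 0.0
    high = 0.0
    # Skip bin 0 (DC offset) and stop below the Nyquist bin.
    for k in range(1, n // 2):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame)
        )
        energy = abs(coeff) ** 2
        freq = k * sample_rate / n  # center frequency of bin k in Hz
        if freq < split_hz:
            low += energy
        else:
            high += energy
    return low, high


def sine_frame(freq_hz, sample_rate, length):
    """Generate a pure test tone, standing in for real audio."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(length)]


bass_low, bass_high = band_energies(sine_frame(125.0, 8000, 256), 8000)
treble_low, treble_high = band_energies(sine_frame(2000.0, 8000, 256), 8000)
print(bass_low > bass_high, treble_high > treble_low)
```

A 125 Hz tone lands almost entirely in the low band and a 2000 Hz tone in the high band, which is the kind of signal a visualizer could use to treat bass and treble differently.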

By identifying patterns such as beats, drops, and intensity changes, the system gains a structural understanding of the music. This allows visuals to respond dynamically rather than remain static or repetitive. When the music builds, the visuals expand. When the rhythm tightens, motion becomes sharper and more energetic.

Beatviz follows this general approach, using audio-driven signals as the foundation for visual generation.
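As a rough illustration of the beat and intensity analysis described in this section, the sketch below measures loudness per frame, flags sudden jumps in energy as onsets, and estimates tempo from the spacing between them. It is a simplified, standard-library-only example run on a synthetic click track, and it is not a description of how Beatviz or any other product works internally. All function names, the frame size, and the thresholds are assumptions.

```python
import math


def frame_rms(samples, frame_size):
    """Return the RMS amplitude of each non-overlapping frame."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        frames.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return frames


def detect_onsets(rms, threshold=1.5, floor=0.01):
    """Return indices of frames whose energy jumps well above the previous frame."""
    onsets = []
    for i in range(1, len(rms)):
        if rms[i] > floor and rms[i] > threshold * rms[i - 1]:
            onsets.append(i)
    return onsets


def estimate_bpm(onsets, frame_size, sample_rate):
    """Estimate tempo from the median gap between onsets, or None if too few."""
    if len(onsets) < 2:
        return None
    gaps = sorted(b - a for a, b in zip(onsets, onsets[1:]))
    median_gap = gaps[len(gaps) // 2]
    seconds_per_beat = median_gap * frame_size / sample_rate
    return 60.0 / seconds_per_beat


def synth_click_track(bpm, seconds, sample_rate):
    """Generate decaying 220 Hz bursts on every beat, standing in for real audio."""
    period = int(sample_rate * 60 / bpm)
    samples = []
    for n in range(int(seconds * sample_rate)):
        t = (n % period) / sample_rate  # time since the most recent beat
        samples.append(math.exp(-40.0 * t) * math.sin(2 * math.pi * 220.0 * t))
    return samples


track = synth_click_track(bpm=120, seconds=4, sample_rate=8000)
rms = frame_rms(track, frame_size=400)
onsets = detect_onsets(rms)
print(estimate_bpm(onsets, frame_size=400, sample_rate=8000))  # prints 120.0
```

Real music is far messier than a click track, so production systems typically rely on more robust onset and tempo estimation, but the structure is the same: measure energy over time, find the moments where it changes sharply, and derive rhythm from their spacing.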

Mapping Sound to Visual Motion

Once audio features are extracted, the next step is translation. This is where AI moves beyond analysis and into creative interpretation.

An AI music video generator maps musical characteristics to visual parameters such as motion speed, color shifts, shape deformation, and transitions. For example, a strong bass line might trigger larger, heavier movements, while higher frequencies may influence lighter, faster visual elements.

Rather than applying a single fixed rule, modern systems adapt continuously as the track progresses. This results in visuals that feel synchronized and alive, reacting moment by moment to the music.
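The mapping idea can be sketched in a few lines. In the example below, bass energy drives the size of heavy shapes, treble energy drives the speed of lighter elements, and an exponential moving average keeps the output from jumping abruptly between frames. The class `AudioToVisualMapper`, the `VisualParams` fields, and every numeric range are hypothetical choices made for illustration; an actual generator would likely use learned or far more elaborate mappings.

```python
from dataclasses import dataclass


@dataclass
class VisualParams:
    scale: float         # size of heavy shapes, driven by bass
    motion_speed: float  # speed of light elements, driven by treble
    hue: float           # color position in degrees, from 240 (calm) down to 0 (intense)


def clamp(value, low=0.0, high=1.0):
    """Restrict value to the range [low, high]."""
    return max(low, min(high, value))


class AudioToVisualMapper:
    """Map per-frame audio energies (expected in 0..1) to visual parameters."""

    def __init__(self, smoothing=0.5):
        self.smoothing = smoothing  # 0 = react instantly, close to 1 = react slowly
        self.bass = 0.0
        self.treble = 0.0

    def update(self, bass_energy, treble_energy):
        a = self.smoothing
        # Exponential moving average so visuals follow the music without jitter.
        self.bass = a * self.bass + (1 - a) * clamp(bass_energy)
        self.treble = a * self.treble + (1 - a) * clamp(treble_energy)
        overall = clamp((self.bass + self.treble) / 2)
        return VisualParams(
            scale=1.0 + 0.5 * self.bass,
            motion_speed=0.2 + 1.8 * self.treble,
            hue=240.0 - 240.0 * overall,
        )


mapper = AudioToVisualMapper(smoothing=0.5)
for bass, treble in [(1.0, 0.0), (1.0, 0.0), (0.2, 0.9)]:
    print(mapper.update(bass, treble))
```

Because the mapper keeps state between calls, a sustained bass note makes shapes grow over several frames rather than snapping to full size, which is one simple way to get visuals that adapt as the track progresses.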

The goal is not to tell a literal story, but to create a visual rhythm that mirrors the emotional flow of the sound.

Style, Customization, and Creative Control

One common concern about AI-generated visuals is the loss of artistic control. Early automation tools often produced repetitive or generic results. Newer AI music video generators attempt to balance automation with customization.

Beatviz, for example, allows creators to influence the visual outcome through style choices, color palettes, and overall aesthetic direction. The AI handles synchronization and motion logic, while the creator guides the visual identity.
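One way to picture this division of labor is a style preset that the creator defines and the system consults at render time. The sketch below is purely hypothetical: `StylePreset` and `pick_color` are invented names, not part of Beatviz or any other tool. It assumes the creator supplies a palette of at least two RGB colors ordered from calm to intense, and that the audio analysis supplies an intensity value between 0 and 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StylePreset:
    name: str
    palette: tuple  # at least two RGB tuples, ordered from calm to intense


def pick_color(preset, intensity):
    """Linearly interpolate along the palette by intensity in [0, 1]."""
    intensity = max(0.0, min(1.0, intensity))
    position = intensity * (len(preset.palette) - 1)
    # Cap the index so intensity 1.0 blends fully into the last color.
    index = min(int(position), len(preset.palette) - 2)
    blend = position - index
    start, end = preset.palette[index], preset.palette[index + 1]
    return tuple(round(s + (e - s) * blend) for s, e in zip(start, end))


# The creator chooses the look; the audio decides where on the palette we are.
neon = StylePreset(name="neon", palette=((0, 0, 0), (100, 100, 100), (200, 0, 0)))
print(pick_color(neon, 0.25))  # prints (50, 50, 50)
```

Swapping the preset changes the look of the entire video without touching the synchronization logic, which mirrors the split described above: the creator sets the identity, and the system handles timing.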

This hybrid approach positions AI as a creative collaborator rather than a replacement. Artists remain responsible for the mood and intent, while AI accelerates execution.

Why AI Music Video Generators Matter for Creators

The rise of AI music video generators reflects a broader shift in creative technology. Tools are increasingly designed to empower individuals rather than studios.

For independent musicians, visual artists, podcasters, and content creators, this means faster turnaround times and reduced production costs. A song can be released with a visual companion in hours rather than weeks, making it easier to stay relevant in fast-moving digital spaces.

Equally important, these tools encourage experimentation. When the cost of failure is low, creators are more likely to explore new sounds and visual styles.

Beyond Promotion: Visuals as Part of the Music Experience

Music visualization is no longer just a marketing asset. In live streams, digital albums, and immersive online experiences, visuals have become part of how audiences consume and remember music.

AI music video generators like Beatviz demonstrate how technology can enhance this experience by making visuals responsive and emotionally aligned with sound. Instead of static images or looped animations, audiences encounter visuals that feel connected to the music itself.

This shift hints at a future where music is not only heard but also visually experienced in real time.

Looking Ahead

As AI models continue to evolve, music visualization is likely to become more adaptive and personalized. Future systems may respond to listener interaction, environment, or even emotional feedback.

For now, tools like Beatviz offer a practical glimpse into this future, showing how AI music video generators can bridge the gap between sound and visual art. By translating audio into motion and form, they redefine how creators present music in a visually driven world.

In doing so, AI is not replacing creativity; it is expanding the ways creativity can be expressed.