*Opinions expressed are solely my own and do not reflect the views or opinions of my employer.

The Infrastructure of Things – What is it?

Many of us are well acquainted with the term “Internet of Things”. Often used in enterprises for asset monitoring and tracking, Internet of Things use cases typically involve sensors and actuators connected by networks to computing systems.

These systems monitor and manage the health and actions of people, machines, tools, and other critical assets across the value chain, and they use the data collected to advance business objectives.

Examples include optimising the productivity and utilisation of critical bottlenecks, improving quality and safety, optimising fleet schedules via telematics, tracking personnel via RFID, and many more.

However, what is discussed far less is that many enterprises today are planning the next generation of their enterprise architecture around an “Infrastructure of Things” model.

But what exactly does it mean for them?

Data challenges are top of mind

Data management challenges are becoming increasingly complex.

It is thus no surprise that many enterprises today struggle with data inconsistencies, which stem from a lack of standardised mechanisms for data exchange, and with cost inefficiencies in managing their information architecture, which stem from manual, repetitive, and time-consuming business-as-usual operational processes.

Enterprises with large, disparate, and siloed teams or complex IT architectures, especially digital laggards, typically face significant challenges in their digital transformation efforts because of these data complexities.

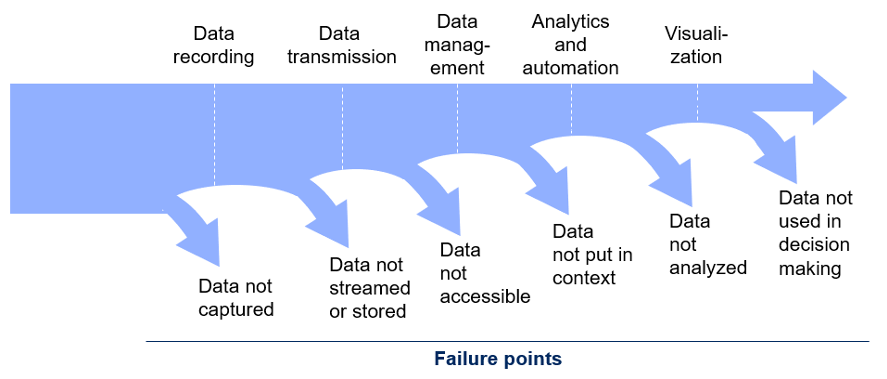

Even when quality data exists in the organisation, it is difficult to leverage because of a series of leakages between data recording and decision-making. As a result, less than 1% of the data gathered from thousands to millions of siloed data points reaches the people making decisions.

Data challenges are costing enterprises

And what are the effects?

Today, even though companies globally spend up to 30% of their time gathering data to make thousands of operational decisions each year, they still incur $1.2 trillion annually in costs from operational downtime and $150 billion from poor operational decisions.

A typical business-as-usual operational process, which adds no value to the business's top line, can take weeks to complete, and an operational failure can take days to identify and resolve. This results in significant operational costs and poor productivity and cost-efficiency, costing up to millions annually.

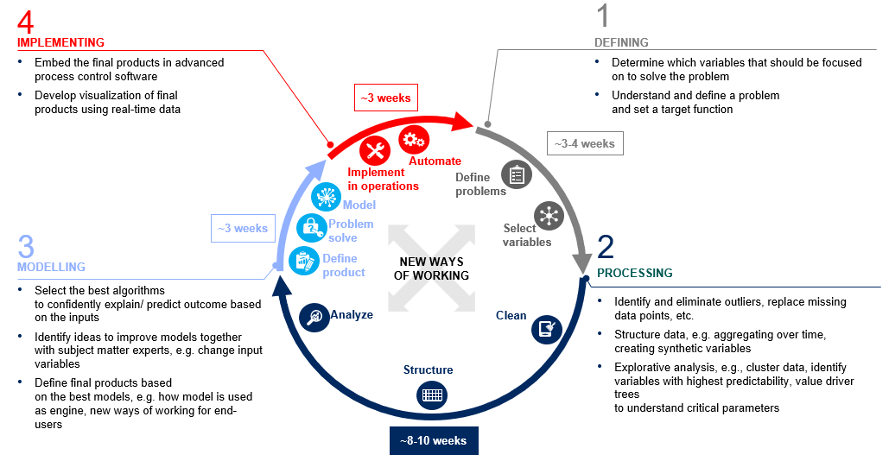

Data leakages also undermine analytics. Up to 50% of the time invested in deploying analytics is typically spent on data processing, owing to poor data quality, access, and reusability, rather than on actual modelling and implementation.

Data processing, which includes finding the right data, cleaning it, and linking it, typically takes at least half the time of an analytics project. As a result, analytics projects scale poorly, because the data processing effort becomes prohibitive when analyses must be repeated.

Data challenges can be addressed by “Infrastructure of Things”

Not all is lost, given the rise of intelligent data sourcing, aggregation, and ingestion platforms and tools. Known as “Infrastructure of Things” or holistic data and analytics platforms, they decouple data from its sources and applications and connect data across endpoints to provide standardised access regardless of asset type.

An “Infrastructure of Things” model helps users quickly visualise, identify, and predict abnormalities, dependencies, and relationships between assets.

It derives from the Internet of Things but focuses specifically on the holistic connectivity and integration of data and information across IT architecture and networks, comprising clients, web servers, application servers, and databases.
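To make “decoupling data from its sources” more concrete, here is a minimal Python sketch, assuming a simple adapter design: each source gets a small adapter that translates its native payload into one common record, and consumers query only that common form. The `Reading` record, the two adapters, their payload fields, and `UnifiedStore` are all hypothetical illustrations, not the API of any particular platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Optional


# Hypothetical common record that every source is normalised into.
@dataclass(frozen=True)
class Reading:
    asset_id: str
    asset_type: str
    metric: str
    value: float
    timestamp: datetime


# Hypothetical adapter for a telematics feed.
# Assumed payload: {"vehicle": "...", "speed_kmh": 62.0, "ts": <unix seconds>}
def from_telematics(raw: Dict[str, Any]) -> Reading:
    return Reading(
        asset_id=raw["vehicle"],
        asset_type="vehicle",
        metric="speed_kmh",
        value=float(raw["speed_kmh"]),
        timestamp=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
    )


# Hypothetical adapter for an RFID reader.
# Assumed payload: {"tag": "...", "zone": 3, "seen_at": "<ISO 8601 string>"}
def from_rfid(raw: Dict[str, Any]) -> Reading:
    return Reading(
        asset_id=raw["tag"],
        asset_type="person",
        metric="zone",
        value=float(raw["zone"]),
        timestamp=datetime.fromisoformat(raw["seen_at"]),
    )


class UnifiedStore:
    """Consumers query one interface and never see source-specific formats."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Callable[[Dict[str, Any]], Reading]] = {}
        self._readings: List[Reading] = []

    def register(self, source: str, adapter: Callable[[Dict[str, Any]], Reading]) -> None:
        # Each source name maps to the adapter that understands its payloads.
        self._adapters[source] = adapter

    def ingest(self, source: str, raw: Dict[str, Any]) -> None:
        # Normalise on the way in, so everything stored shares one schema.
        self._readings.append(self._adapters[source](raw))

    def query(self, asset_type: Optional[str] = None) -> List[Reading]:
        # Same query regardless of which source produced the data.
        return [r for r in self._readings if asset_type is None or r.asset_type == asset_type]


store = UnifiedStore()
store.register("telematics", from_telematics)
store.register("rfid", from_rfid)
store.ingest("telematics", {"vehicle": "truck-7", "speed_kmh": 62.0, "ts": 1700000000})
store.ingest("rfid", {"tag": "badge-42", "zone": 3, "seen_at": "2024-01-01T08:00:00+00:00"})
print([r.asset_id for r in store.query()])  # ['truck-7', 'badge-42']
```

In this design, adding a new asset type means writing one more adapter while the query side stays unchanged, which is one way to read “standard access regardless of asset type”.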

The “Infrastructure of Things” model ingests information from these data points, identifies correlations and dependencies, and maps data to achieve a fully integrated view of the current state of IT.
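The dependency-mapping step can be pictured as building a directed graph from observed connections between tiers. The minimal Python sketch below assumes the connections are already available as (caller, callee) pairs, which in practice might be derived from network flow or configuration data; the asset names, the connection list, and the function names are hypothetical. It reverses the edges and walks them breadth-first to list every asset that would be affected if one component failed.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple


def build_dependency_map(connections: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Map each asset to the assets that depend on it directly.

    Each (caller, callee) pair means "caller depends on callee",
    so the edge is stored in reverse: callee -> caller.
    """
    dependents: Dict[str, Set[str]] = defaultdict(set)
    for caller, callee in connections:
        dependents[callee].add(caller)
    return dependents


def impacted_by(failed: str, dependents: Dict[str, Set[str]]) -> List[str]:
    """Return, sorted, every asset that directly or transitively depends on `failed`."""
    seen: Set[str] = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for parent in dependents.get(node, set()):
            if parent not in seen:  # visit each asset once, even with shared paths
                seen.add(parent)
                queue.append(parent)
    return sorted(seen)


# Hypothetical observed connections across client, web, application, and database tiers.
observed = [
    ("client-web", "web-01"),
    ("client-mobile", "web-02"),
    ("web-01", "app-orders"),
    ("web-02", "app-orders"),
    ("web-02", "app-billing"),
    ("app-orders", "db-main"),
    ("app-billing", "db-billing"),
]

deps = build_dependency_map(observed)
print(impacted_by("db-main", deps))
# ['app-orders', 'client-mobile', 'client-web', 'web-01', 'web-02']
```

This covers only the dependency-mapping part; identifying correlations would additionally require time-series data from the assets and is not shown here.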

With improved visibility and connectivity of data across infrastructure assets, as well as automated, unified, and continuous business and operational monitoring, enterprises stand to better understand their customers and IT infrastructure, reduce operational failures, automate costly traditional processes, shorten time to resolution, and make better decisions.