“Je pense, donc je suis (I think, therefore I am)” ~ René Descartes, published in Discourse on the Method, 1637

Does this statement resonate with you? Well, if it does, it’s likely because you have just carried out chain-of-thought reasoning, a common and fundamental form of cognitive logic that humans use to break down complex problems or ideas into smaller steps.

Known in the Western philosophical sphere as a ‘first principle’, Descartes’ assertion above is probably one of the most famous philosophical maxims of all time, hailed by some as the very foundation for all knowledge.

Nevertheless, without chain-of-thought reasoning, this statement would have very little sense or weight to it, highlighting the centrality of this cognitive process to the very essence of human knowledge (at least in the West).

To exemplify what chain-of-thought reasoning in human cognition typically looks like, we briefly explain the logical derivation of Descartes’ claim, which also illustrates why it is argued to be a first principle, i.e. a principle which is undoubtedly true in all contexts and is not dependent on any other prior assumptions in order to be true.

- In seeking a first principle, Descartes first decided to actively doubt all certainties that he would otherwise have taken for granted. These included all certainties based on sensory perceptions, such as sight, smell, touch, hearing, etc.

- From this acute level of existential doubt, he concluded that there was no certainty even that his own body existed, especially given the phenomena of dreams and hallucinations, through which the human mind can replicate sensory body experiences to a high degree of accuracy, conjuring up a whole new fabric of reality that can be practically indistinguishable from our waking world.

- From this point, taking a more meta-perspective towards the very act of doubt that he was engaging in, he concluded that the only certainty that could be derived from this dilemma was that he has a mind, because the act of doubting in itself requires a mind.

- This led him to assert that his own mind exists. By extension, he argues that the act of doubting is then proof of an individual’s own existence. “Thus, considering that he who wishes to doubt everything nevertheless cannot doubt that he exists while he is doubting, and that what reasons thus (being unable to doubt itself and yet doubting all the rest), is not what we call our body but what we call our soul or our mind; I took the being or the existence of that mind as the first Principle.” ~ Descartes, Discourse on the Method, Section xxi-xxii

Now, with the world on the brink of a new era of knowledge shaped by artificial intelligence, chain-of-thought reasoning is no longer just being used to banish away our dark existential imaginings and solidify the boundary between the real and unreal.

Today, chain-of-thought (CoT) prompting techniques and Boolean principles of logic are already being used extensively in the development and enhancement of LLMs as well as other AI-enabled operations such as SEO and search filters.

So what exactly is CoT reasoning in the context of AI models? CoT reasoning refers to the ability of an AI model to provide the explicit set of logical steps that are involved in getting from the input prompt to the output response.

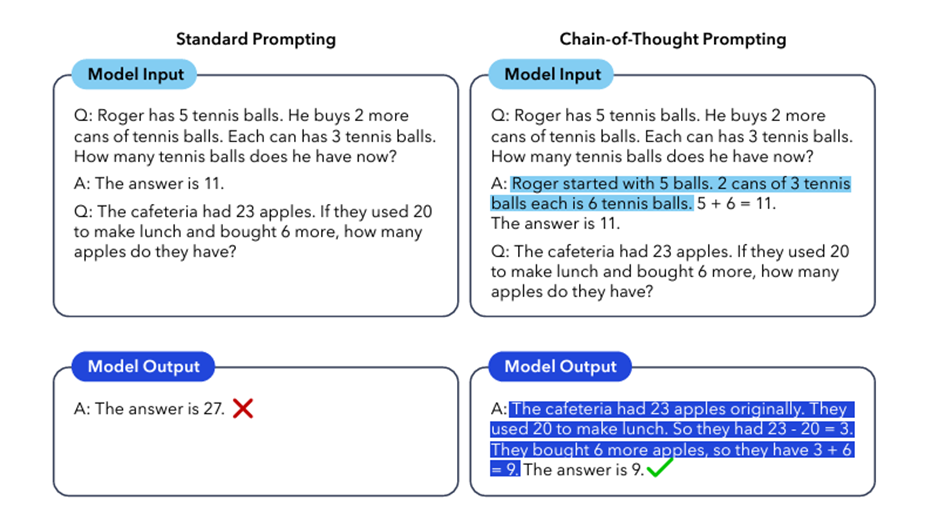

In model training, CoT prompting essentially involves a more in-depth logical procedure which introduces an extra middle step into the prompting process. While standard prompting procedures typically just consist of two steps <Question, Answer>, CoT prompting introduces a middle step <Question, CoT, Answer> in order to increase the transparency and explainability of an AI model’s reasoning process.

Above: Standard prompting vs. CoT prompting (source: Wei et al. 2023)

So far, CoT prompting has also particular promise in increasing the accuracy of model responses for maths and logic problems, with research from Google finding that CoT prompting resulted in LLMs upping their solve rate for elementary maths problems from 18% to 57%.

To delve further into the potential of chain-of-thought reasoning in the evolution of AI, we spoke with Si Chen, Head of Strategy at Appen, to explore the challenges, complexity, and opportunities in CoT reasoning for LLMs.

Originally founded by a small group of linguists in 1996, Appen is now an established international company with over 1000 employees. With its linguistically oriented background, the company has focused particularly on how to support the development of LLMs by providing high-quality datasets that are then used to train and fine-tune these models.

As you will find out from the discourse below, it is the quality of data that is the strategic enabler of successful CoT reasoning in LLMs, with the potential to be a key differentiator of whether an AI model can begin to replicate human cognition on a more holistic level.

How does CoT reasoning play into the current capabilities of LLMs, and why is it an important area of focus for Appen?

“Currently, the design of LLMs is mainly focused on being able to do a really wide variety of tasks because they are intended to function primarily as general-purpose models. CoT reasoning is really just one of the many aspects of the linguistic cognition tasks carried out by LLMs. I actually think that a lot of the people who use LLMs today aren’t necessarily even tapping into this capability that much, but we do expect chain-of-thought reasoning to become fundamentally important for the next generations of LLMs.”

“When we think about the next wave of LLMs, and where they are going to be critically useful, especially in enterprise applications, it’s going to be all about LLM agents. The focus is going to be on developing AI agents which are able to actually follow a series of steps from reason to action, because that’s the way the human mind essentially works, right? So I would say that while LLMs already have the ability to do lots of general tasks, in order to become really practical and useful, they’re going to need to get a deeper acquisition of the types of reasoning and cognitive capabilities that human have – and that’s where CoT reasoning will really come into play.”

What does the process of CoT prompting involve, and how is it different to other prompting techniques?

“The fundamental concept of CoT cognition is actually behind many variants of prompting in general. For example, the idea of going from zero-shot to few-shot prompting is to provide enough examples to an LLM such that it can start to understand the specific type of output that the user is looking for. So CoT prompting is really just a prime example of few-shot prompting where you’re asking the LLM to explain to you what is going on step-by-step.”

“The kind of work that we’re doing at Appen is focused around providing high-quality training data to help improve the performance of AI models and ensure that they’re more aligned to human users. So the kind of CoT reasoning data that we’re working on is for the purpose of supervised fine tuning. But CoT prompting is still just one of the ways that people are doing fine-tuning today.”

“When it comes to what sets CoT prompting apart from other prompting techniques, I would say that it is one of the best techniques for addressing the lack of transparency over the internal reasoning processes in LLMs. Recently there have been a lot of questions around how the model actually comes up with the output that it’s been designed to produce – and that’s primarily because these models have been trained on such vast amounts of data. CoT reasoning is actually one of the best ways that we can get insight into the step-by-step process of what the model has done in order to go and derive its answer, and really to just understand its reasoning process. This is incredibly valuable for not only ensuring that the models’ outputs are useful to us, but also in terms of understanding if there are any inaccuracies or hallucinations in the model, and if so, at which stages they are occurring.”

So what are the main incentives to use CoT prompting in the fine-tuning process?

“Typically, the process of fine-tuning involves supervised learning. Now, the fundamental concept behind supervised learning is to have really good, accurate, and specific examples that the LLM can learn from. The idea of using CoT reasoning in the supervised fine-tuning process is to create a dataset of really high-quality examples so that the model can learn what the right step-by-step reasoning is for a particular use case. So through supervised fine tuning, you are actually changing the performance and capabilities of the model in a very targeted way.”

“These days, the focus on fine-tuning is really around data increasingly becoming the key differentiator for a lot of these large language models, especially once you start them into applications. And there are two main ways that we can support the fine-tuning process. The first is using high quality, CoT reasoning data to actually go and train the model. The second way is through output evaluation. So, by continuously testing the quality and accuracy of CoT model outputs, we can get signals about both the model’s general performance, and also whether it’s performing well for specific applications – and that testing is often in the form of A/B testing [which involves comparing two variants of a variable to determine which one performs better on a given metric]. So CoT prompting becomes especially important once customers start going from testing in the lab to actual deployment. At this point, they then increasingly start to care about what the end user is going to be using their model for.”

“For example, one of the projects that we’ve been working on is the mathematical reasoning in LLMs. Now when it comes to maths, it’s widely recognised that there is a notable gap in the ability of LLMs to solve math problems, especially word-related math problems. But by fine-tuning these models to solve these types of solutions with step-by-step explanations, the models can then learn to get better. I mean when you think about it, this is really similar to the way that humans learn. You know, teachers shouldn’t just be throwing you an answer and going, this is the answer to your question. They should be showing you how you get to the next logical step of the solution at every stage of the learning process. So CoT reasoning isn’t just about increasing the transparency of a model’s outputs. It’s also about enhancing the performance of LLMs and providing the best quality data that is aligned with where the models need to go in terms of developing application-specific capabilities.”

And what are some of the most promising applications for CoT reasoning in LLMs that you see emerging across different industries?

“Because one of the key advantages of CoT reasoning is that it enables users to see the step-by-step process of the model’s response, I think it has a lot of potential in enterprise systems where you want to see transparency in how data has been processed, or in applications where the users need to know how the model has arrived at a certain conclusion or recommendation. So I think CoT reasoning is going to be critical for almost any application with these user requirements, particularly in industries such as education where the LLM users would be looking for more than just an end answer.”

“At Appen, we actually see a lot of this demand when it comes to maths, STEM, and a lot of kind of school curriculum subjects in general, and I think this is because a lot of people are using LLMs to help them learn things. They’re looking to the LLM to find insights and knowledge, and a lot of the time, you’re not asking the LLM to give you an answer. You’re actually asking the LLM to explain to you why a particular answer is right or wrong, or why has something played out the way it did. In China, for example, one of the biggest use cases for LLMs is helping children with homework, because parents don’t always remember the knowledge they had of all the subjects that they learned years and years ago. And so people are really using LLMs as a tool to be able to help coach and teach.”

“But explanatory knowledge seeking also plays out in the context of enterprises. For instance, if I’m seeking answers from a knowledge management perspective, this often means I’m not just looking for just a hard fact like for example, the revenue last year was $100 million. I’m usually going to be looking for something that is much more complex than that, like why the revenue was only $100 million, so really more like the insights that can be inferred from the facts. So again, I think CoT reasoning is incredibly valuable for applications where the end user is trying to understand a topic in depth or dig into an issue beyond the surface.”

A key challenge that many businesses face when it comes to deploying AI is creating high quality datasets for model training. What is the importance of data in facilitating CoT reasoning in AI models?

“So Appen has been in the space of providing data for AI model training for almost three decades now, and I think the key takeaway is that data quality is critically important for all types of model training. For us, what quality means is that it’s not just any data that’s being used to train the model. It needs to be really carefully curated, and we focus on really being able to understand what kind of data is actually going to be helping create models for particular objectives.”

“A key challenge often encountered in model training is that the data doesn’t always exist out there, or the data that does exist is potentially skewed or biased towards certain topics and groups. So when it comes to addressing problems like bias, I think the main difficulty actually revolves around the limitations of existing knowledge and expertise. For example, if I’m not a maths expert, I shouldn’t be helping train the models to be a maths expert because I don’t have a sufficient level of expertise. So even if businesses have sufficient CoT reasoning data, they still face the challenge of getting the right people who have the actual capabilities to then go and build the best next generation of models that can have those capabilities – and that requires a specialized skill set, with expertise in not only model prompting but also subject knowledge.”

“I would also say that a lot of human testing and feedback is needed to ensure that once you start getting a model into deployment, you’re doing really customized testing that supports your specific use case. Really, the purpose of the fine tuning is to make sure that ultimately you’ve got the right model performance for your use case, and this becomes an iterative cycle. We work very closely with our customers to achieve this, particularly because of how fast-paced the deployment of models is now, with many companies going full speed on investing in these LLMS and engineering models. But data is increasingly the key focus and differentiator. This was recently illustrated in a recent article that Meta put out around Llama 3, which was interesting because they highlighted that the biggest difference between Llama 3 and prior versions was actually data. So optimizing AI models isn’t necessarily about big changes in the algorithms. It’s really actually about the quality and scale of the data used in the training.”

What are the challenges associated with CoT prompting, and why does reasoning remain such a challenging area for AI?

“Firstly, I would say that there is a significant challenge in getting the models to be really well-aligned with humans. To some extent, this may just be an intrinsic limitation of AI given the diversity of human behaviour and human cognition, which makes it very difficult to quantify and replicate. But when it comes to model deployment, the alignment of AI models with human users becomes a crucially important but equally complex problem to solve. Humans tend to say a lot of things that are subjective, so with something that you find really funny, for example, I might not even understand why it could be funny, right? And because even as humans, we struggle with subjectivity and varying viewpoints, it’s gonna be very difficult for LLMs to inherently be good at being aligned with humans in this more subjective way that enables humans to bond through humour and opinion. But I think that through the process of getting human feedback to model outputs, human alignment can be achieved in LLMs, at least to some extent. And I think that’s where a lot of fine-tuning, including CoT prompting, is going.”

“More generally, I think reasoning remains a significant challenge for AI because in the past, AI model learning has been based around very clear cut types of reasoning. For example, a basic machine learning (ML) model can be easily trained to identify the difference between cats and dogs, for example. And this certainly has some utility in enterprises. In retail, for example, ML models can be used to identify a particular item of clothing, or a person walking through a store, But in most current use cases, there is a definitively correct answer for those AI models. But in the LLM and generative AI space, outputs are often uncertain, and even when it comes to the reasoning process, you and I might not necessarily agree as to what is the correct or best form of reasoning to apply in a particular context. If we think about the complexity level of the tasks that the AI models are being asked to perform today, they are just inherently more difficult, which results in the process of reasoning also being more difficult. Furthermore, the data used in more complex reasoning is not necessarily structured in a way that is as easy for the model to learn as it is for more basic classification types of learning, where it is easier to quantify the data.”

“I also think that the ability of LLMs to extrapolate from prior learnings has always been a challenge. For a long time, things like transfer learning have also been a key area of focus, because, in ultimately working towards the concept of AGI, we want models to be able to learn very easily, and be able to learn lots of new capabilities without having to go through the laborious process of labelling data and model prompting. But the reality is that the one-shot/few-shot prompting process of transfer learning is still very hard. And this is because AI models are not yet as intuitive as humans when it comes to the ability to learn with that deeper level of cognition.”

Do you think that scientific breakthroughs in neurology and psychology can help model developers overcome some of these challenges?

“Yeah, so I think this is a really interesting question. As a field, artificial intelligence involves a lot more than people usually think about. Typically, people are going to think about compute, right? But compute is really just one thing that exists within the wide-reaching field of artificial intelligence. I used to work at Tencent in the AI Lab where I was working with large teams of researchers and engineers, and we would consider artificial intelligence as a really broad field that includes more or less everything that exists, including psychology, and the human minds, and also the intersections of all these different areas. AI is not just about math and logic, it’s also the arts and the humanities and the social interactions that come with its applications.”

“When it comes to practical research, a lot of the time researchers will be focused on a particular method, both experimenting with new techniques and then validating the model’s response to them. So, for example, a lot of the researchers were mainly focused on just the reasoning component, which would equate to the human ability to make inferences that allow us to comprehend information, and continue to infer new information based on known information. But then there’s also a branch of research where people are focused on action. And this is really important for the development of AI agents and their abilities to work out what action to take based on the information provided by the user. So, for example, if I’m searching for travel itineraries, the agent needs to be able to infer that actually I need to go and search for a flight, and then I need to go and search for a hotel.”

“What’s really interesting is that when you think about the human mind, these logical steps all just sort of mutually coexist and are continual. So in the process human reasoning, we already know the steps we have to go through in order to complete an action, based on our existing knowledge and experience. But what humans then also do is take the results of that action and continue to reason… and then take the next action, etc. That’s how we work, right? So researchers, who are often working on specific projects in their separate fields, can collaborate with each other to better understand how the human mind works, and then start to try and implement some of human processes in CoT prompting. So there are a lot of potential innovations and breakthroughs that can happen through collaborative research. Right now, I think a really interesting area of research is around the concept of reason plus act, which will take us to the next wave of LLM capabilities.”

And what do you think the next wave of capabilities will be for LLMs, and what role do you think CoT reasoning will play in enabling them?

“So, by strengthening the methodological abilities of LLMs, CoT reasoning is going to be a key AI model capability that is transferable to multiple tasks. In particular, I think that the ability of models to carry out more explicit reasoning is going to contribute towards a more general form intelligence, where the combination of reasoning capabilities with taking real world actions will really spur the development of LLM agents. There are already companies out there that are building LLM agents for specific use cases, and also proposals for AI teams made up of multiple LLM agents which would all need to be able to interact and reason with each other. But that’s not going to be possible until there are more advanced reasoning and action capabilities in the models. So I think CoT reasoning is driving us towards a higher level of intelligence which could apply human-like reasoning to data and situations that the model hasn’t seen before.”

“Another very interesting area of development is the multimodal capabilities that are now starting to be integrated into LLMs. So today, when we use an LLM, it’s still primarily a text-based interaction even though this isn’t the most natural and intuitive form of communication for most people. But as we move towards increasingly natural ways of interacting with models, their abilities will need to change. If you take text-based LLM interactions, for example, people are usually very thoughtful about what they write, and there is typically a higher degree of clarity and cohesiveness in written language that is often lacking in spoken language. So to interpret communicative cues via spoken language and image-based data, LLMs are also going to need a different set of reasoning capabilities.”

Conclusion: to what extent does CoT reasoning allow AI models to replicate human cognition?

Replicating human cognition is no mean feat. The human mind is widely regarded as one of the most complex and capable organs in the animal kingdom. Indeed, a great deal of its cognitive activity still remains unchartered even in today’s secular world where few mysteries are left unsolved.

But from what we do know about the human mind, it is clear CoT reasoning is one of the most fundamental cognitive processes underlying the majority of human thought and behaviour, both conscious and unconscious. Therefore, the ability of LLMs to replicate CoT reasoning, albeit on a much more basic level, is a promising sign that AI will eventually be able to replicate human reasoning in a much more accurate and holistic way.

Nevertheless, as highlighted by Si Chen, there are significant challenges to be overcome before LLMs can carry out CoT reasoning on a level that even approaches the complexity of human reasoning. To summarize, these include:

- Sourcing data of sufficient quality to be used in CoT prompting

- Developing the capabilities of AI models to extrapolate data from a more diverse range of sources (including self-learning)

- Combining CoT reasoning with action-taking for AI agents in a more simultaneous and ongoing way that more closely imitates the human process of decision making.

But even if these challenges are overcome, it is likely that there will be inherent limits to the sophistication of AI’s cognition. This is because human reasoning has evolved over thousands of years to include a multitude of cognitive techniques and biases that now help us to make sense of the world in a way that is optimized for human-human connection, and ultimately the survival of our species.

What’s more, these techniques and biases cannot be easily defined and quantified, which means that they are unlikely to be replicable by AI anytime soon. For example, going back to Descartes’ quote that we examined at the beginning of this article, the chain-of-thought process he used to get from the premise of his argument to its conclusion involves more than just a logical chain-of-thought based in one dimension. It is only through Descartes’ meta-level awareness of his own cognition that he is able to jump from step 2 to step 3.

Perhaps algorithmically, this jump could be understood as a leap from linear progression to exponential progression. However, the likelihood of AI models being able to progress to such complex levels of chain-of-thought reasoning is extremely low in the near future – and this can only be a good thing. With the world still coming to terms with the potential authority that AI could have in human societies in the next few decades, and legislation still racing to catch up with even just AI’s current abilities, we certainly don’t need AI models advanced enough that they could represent serious competition to age-old human philosophies.