AI Governance Gains Urgency as Regulators Move Faster Than Before

For too long, cyber risk was perceived as an issue to be relegated to the IT department, with little connection to, or influence over, an organization’s long-term strategy. In fact, it took decades of compounding incidents and losses for regulators to understand it as a core business concern. With AI risk, however, that recognition has gained momentum far more quickly, prompting earlier discussions about accountability and oversight.

Even though Generative AI (GenAI) and other AI systems have only been commercially available for a few years, those same regulators, learning from their past delays, realize that AI risk likewise can upset markets and affect social stability, potentially to an even greater degree than its cyber counterpart.

Indeed, the same concerns that once solely surrounded data breaches and systemic vulnerabilities have expanded to the misuse, bias, and opacity of GenAI. Consequently, governments and standard-setting bodies have begun introducing legislation and formal frameworks to guide responsible AI governance and help organizations build resilience.

In the European Union, the AI Act has already set a precedent for comprehensive oversight, while in North America, similar discussions are underway through initiatives such as the US Executive Order on Safe, Secure, and Trustworthy AI. Regardless of how these efforts take shape, they all point to the same imperative: organizations must begin by understanding their AI exposure and building the visibility necessary to manage it effectively.

The European Union (EU)

The EU became the first major global regulator to pass comprehensive AI legislation when the AI Act entered into force in August 2024. The law includes a two-year preparation period before enforcement, giving organizations a limited window to align their daily practices with the new standard. The Act’s fundamental aim is to promote safe and trustworthy usage of AI, setting out obligations that vary depending on the level of risk each AI system poses.

For systems deemed high risk, the Act demands a broad slate of controls, including a continuously maintained risk management system, detailed technical documentation, and robust data governance requirements. In other words, organizations must be able to demonstrate how their models are built, trained, and monitored, as well as show evidence that they’ve identified potential misuse cases and can track performance over time.

Crucially, the AI Act also delegates AI governance responsibility to senior management and the board. Under Article 66, the EU explicitly demands that these executives oversee compliance programs and ensure that AI accountability is embedded directly into decision-making processes. In combination with the Act’s assessment and documentation requirements, this clause sets the expectation that AI governance should be both measurable and continuously maintained.

The United Kingdom (UK)

In the UK, regulators have taken a principles-based approach rather than creating a new, binding set of laws. The AI Regulation White Paper, for instance, outlines broad priorities, such as safety, transparency, fairness, accountability, and contestability, which existing regulators then apply within their own domains. Even without a single statute, the expectation is plain: organizations should document how AI is used and show who is responsible for oversight.

North America (United States and Canada)

Across the United States, AI oversight at the institutional level is maturing through a mix of agency action and proposed legislation. The Executive Order on Safe, Secure, and Trustworthy AI is one such measure, establishing guiding principles around transparency, accountability, and safety, and directing agencies, including the National Institute of Standards and Technology, to advance practical standards for implementation.

The Federal Trade Commission, in parallel, has warned US companies that deceptive or biased AI practices could breach existing consumer protection statutes. Several localities and states, such as New York City and Colorado, are advancing their own initiatives as well, underscoring that responsibility for AI governance will not come from a single mandate but rather from the specific directives organizations put in place to evaluate and manage their own exposure.

Canada’s Artificial Intelligence and Data Act (AIDA), which has yet to be enacted, is narrower in scope, although it reflects the same momentum toward structured accountability. AIDA targets what it defines as “high-impact” GenAI and AI systems, requiring organizations to document safeguards and monitor performance throughout the lifecycle of AI usage. It also introduces strict transparency obligations.

These developments across the continent demonstrate that North American regulators, too, are converging on a shared principle of measurable oversight. Formal compliance frameworks and regulations may differ, but there is an underlying, sometimes explicit, expectation that stakeholders need to regularly assess their AI risk exposure and demonstrate that it’s understood and managed.

Establishing Readiness Through AI Risk Assessments

This growing web of AI legislation, which extends well beyond North America and Europe, varies in the specifics but shares a standard of high-level risk management in the AI era. While reaching that level of readiness may initially seem like a complex process, the first step is fairly straightforward, echoing other common processes of business risk oversight. Building resilience begins by measuring one’s exposure with a reputable AI risk assessment.

AI risk assessments provide security and risk managers with a standardized, repeatable process for discerning where and how AI operates within the organization. Typically built around popularized risk management frameworks, which, in the case of AI, include the NIST AI RMF and ISO/IEC 42001, these assessments help stakeholders identify the maturity of existing safeguards and pinpoint areas where improvements provide significant benefits.
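To make the idea of a repeatable assessment concrete, the sketch below scores a handful of controls against the four core functions of the NIST AI RMF (Govern, Map, Measure, Manage) and ranks the functions by their maturity gap. The control names, owners, the 1-to-5 maturity scale, and the targets are all hypothetical and chosen only for illustration; they do not come from any framework text or from a specific assessment product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ControlRating:
    function: str  # NIST AI RMF core function: Govern, Map, Measure, or Manage
    control: str   # hypothetical control description
    owner: str     # hypothetical accountable role
    maturity: int  # illustrative scale: 1 (ad hoc) to 5 (optimized)
    target: int    # maturity level the organization wants to reach


def gaps_by_function(ratings):
    """Average shortfall between target and current maturity per function."""
    grouped = {}
    for rating in ratings:
        shortfall = max(rating.target - rating.maturity, 0)
        grouped.setdefault(rating.function, []).append(shortfall)
    return {function: mean(values) for function, values in grouped.items()}


# Hypothetical assessment results, invented for this example.
ratings = [
    ControlRating("Govern", "AI usage policy approved by the board", "CISO", 2, 4),
    ControlRating("Govern", "Named owner for each AI system", "CDO", 3, 4),
    ControlRating("Map", "Inventory of GenAI tools in use", "IT", 1, 4),
    ControlRating("Measure", "Model performance monitoring", "Data Science", 3, 4),
    ControlRating("Manage", "AI incident response playbook", "SecOps", 2, 3),
]

gaps = gaps_by_function(ratings)

# Largest gaps first, so the weakest areas surface at the top of the report.
for function, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{function}: average maturity gap {gap}")
```

Because each rating carries an owner and a target, rerunning the same script after every assessment cycle would yield a simple, comparable record of where gaps are closing and who is accountable for them.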

Structured assessments also establish accountability in a way that informal, spreadsheet-based reviews rarely do. Ownership can be publicly defined and viewed, allowing executives to track progress and, if necessary, intervene. Moreover, as these evaluations become routine, they create a pattern of oversight that scales across business units. Over time, this consistency becomes an auditable record that demonstrates diligence and shows that AI risk is being governed with calculated intent rather than reactive effort.

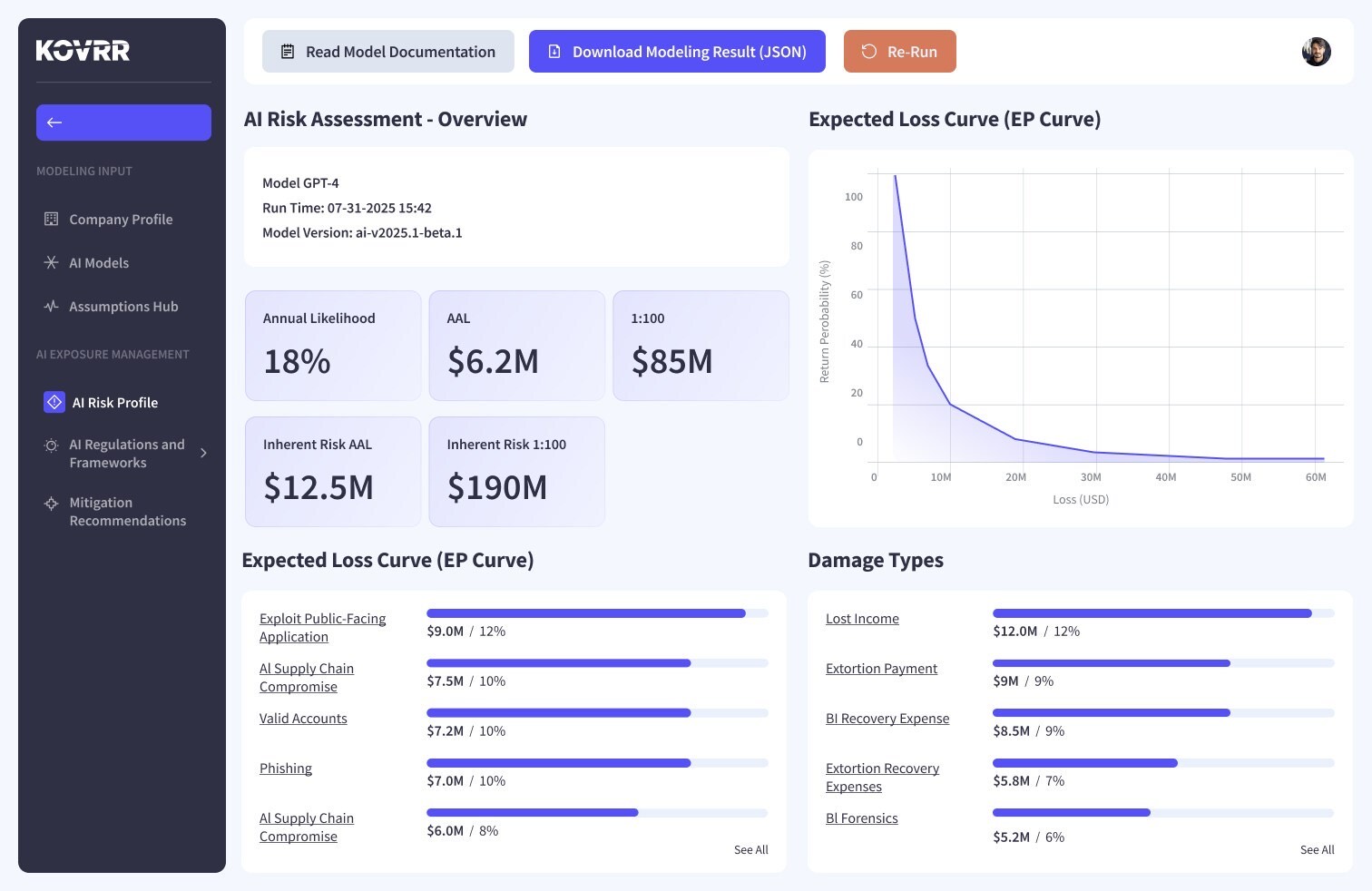

Advancing Governance Through AI Risk Quantification

Once there is control visibility, the next step is to turn those findings into actionable insights. Quantification makes this possible, using statistical models to translate maturity levels and more qualitative observations into financial metrics, such as Average Annual Loss and loss exceedance probabilities. These metrics ultimately reveal how control strength influences the scale and frequency of potential incidents, offering executives a more tangible view of AI risk exposure.
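As a rough illustration of how such metrics can be derived, the sketch below runs a simple Monte Carlo simulation. It assumes, purely for the example, that the number of AI incidents per year follows a Poisson distribution and that each incident's cost follows a lognormal distribution; the frequencies, median loss, and spread are hypothetical inputs, and the approach is a generic textbook technique rather than the model used by any particular vendor.

```python
import math
import random


def sample_poisson(rng, lam):
    """Draw one Poisson-distributed count using Knuth's multiplication method."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate_annual_losses(frequency, median_loss, sigma, years=20_000, seed=7):
    """Simulate the total AI-incident loss for each of `years` hypothetical years."""
    rng = random.Random(seed)
    mu = math.log(median_loss)  # the median of a lognormal is exp(mu)
    losses = []
    for _ in range(years):
        incidents = sample_poisson(rng, frequency)
        losses.append(sum(rng.lognormvariate(mu, sigma) for _ in range(incidents)))
    return losses


def average_annual_loss(losses):
    """Mean simulated loss per year."""
    return sum(losses) / len(losses)


def exceedance_probability(losses, threshold):
    """Share of simulated years in which total loss exceeds `threshold`."""
    return sum(loss > threshold for loss in losses) / len(losses)


# Hypothetical inputs: weaker controls are modeled as more frequent incidents.
weak = simulate_annual_losses(frequency=1.2, median_loss=250_000, sigma=1.0)
strong = simulate_annual_losses(frequency=0.4, median_loss=250_000, sigma=1.0)

for label, losses in (("weak controls", weak), ("strong controls", strong)):
    aal = average_annual_loss(losses)
    p_exceed = exceedance_probability(losses, 1_000_000)
    print(f"{label}: AAL ~ {aal:,.0f}, P(annual loss > 1M) ~ {p_exceed:.3f}")
```

Comparing the two scenarios shows the kind of statement quantification enables: under these assumed inputs, the stronger control posture produces both a lower Average Annual Loss and a lower probability of a year exceeding the chosen loss threshold.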

When adopted in tandem, risk assessments and AI risk quantification set the baseline for a robust system of governance that aligns compliance obligations with strategic decision-making. The combination also provides a defensible method for highlighting progress, allocating resources where they matter most, showing regulators that oversight is not static but continuously refined, and, most importantly, ensuring that AI risk management adapts as the global landscape evolves.

Organizational Preparedness in the Age of AI Regulation

While GenAI tools have already proven themselves remarkably useful, there remains only a surface understanding of the full extent of their business benefits. The depth of their potential to cause disruption, likewise, is only beginning to reveal itself. As the risks become more apparent, regulations will inevitably grow more stringent, seeking to safeguard markets and maintain public confidence.

Organizations that establish disciplined methods to demonstrate compliance while strengthening operational resilience will be better positioned to endure the tightening scrutiny and adapt as oversight deepens.


About the Author: Yakir Golan is the CEO and co-founder of Kovrr (https://www.kovrr.com/), a global leader in cyber and AI risk quantification. He began his career in the Israeli intelligence forces and later gained multidisciplinary experience in software and hardware design, development, and product management. Drawing on that background, he now works closely with CISOs, chief data officers, and other business leaders to strengthen how organizations understand and manage both cyber and AI risk at the enterprise level. Yakir holds a BSc in Electrical Engineering from the Technion, Israel Institute of Technology and an MBA from IE Business School in Madrid.