The Rise of AI in Smart Homes

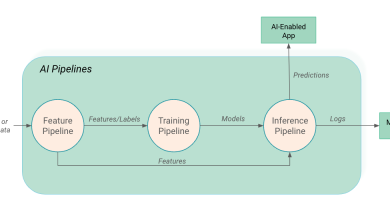

Until now, smart homes have mostly meant asking a voice assistant to play music or turn on the lights. With the rise of Large Language Models (LLMs), artificial intelligence (AI) is quietly rewriting that definition. The connected devices around us, such as speakers, TVs, wearables, and appliances, are evolving from simple endpoints into distributed, intelligent systems that learn patterns, predict user needs, and adapt entire living spaces accordingly.

This transformation is driven by advances in AI models that can interpret multimodal data (audio, video, wireless signals, sensors), reason about context, and act proactively, all while respecting privacy. Furthermore, AI is not only reshaping how smart homes function but also extending into personal computing, blurring the lines between devices, voice assistants, and services.

Understanding and Predicting Behaviors

Traditional smart devices required explicit user commands: “Turn on the kitchen light.” AI-powered systems now infer intent and act autonomously. For example, computer vision models embedded in cameras can recognize when a space is vacant and how well it is lit, so a garage can dim its lights automatically.

Additionally, LLMs are making natural conversation a primary interface. Instead of memorizing commands and routine names, users can now say, “I’m going to bed,” and the system understands that it should lower the thermostat, arm the security system, lock the door, silence notifications, and start the robovac, coordinating multiple devices and services at once. Taking it a step further, AI agents can now learn behaviors that we repeat daily and proactively suggest routines (“hunches”), so users never have to set them up explicitly.

This shift reduces cognitive load for users and brings technology closer to what researchers call “ambient intelligence.” The vision is of technology that fades into the background, with computing no longer limited to a single device, a single screen, or a single interface.
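The routine-learning idea described above can be illustrated with a small sketch. It is only one plausible approach, not how any particular assistant works: the event-log format, the function name `suggest_routines`, and the thresholds `min_days` and `window_minutes` are all assumptions made for illustration.

```python
from collections import defaultdict


def suggest_routines(events, min_days=5, window_minutes=30):
    """Suggest (action, typical_minute) pairs for actions that recur on many days.

    events: list of (day, minutes_since_midnight, action) tuples.
    This log format is hypothetical. Times that wrap around midnight
    are not handled in this sketch.
    """
    # Keep the earliest time per day for each action.
    by_action = defaultdict(dict)
    for day, minute, action in events:
        previous = by_action[action].get(day)
        if previous is None or minute < previous:
            by_action[action][day] = minute

    suggestions = []
    for action, per_day in by_action.items():
        times = sorted(per_day.values())
        if len(times) < min_days:
            continue  # not seen on enough days to count as a habit
        median = times[len(times) // 2]
        # Count the days on which the action happened close to the median time.
        close = [t for t in times if abs(t - median) <= window_minutes]
        if len(close) >= min_days:
            suggestions.append((action, median))
    # Order suggestions by time of day so they read like a routine.
    return sorted(suggestions, key=lambda item: item[1])
```

A real system would likely ask the user to confirm each suggestion before turning it into an automated routine, which keeps the person in control of what the home does on its own.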

Converting Sensing Data to Context

One of the key opportunities with AI is transforming raw sensor data into actionable insights. Smart homes today are equipped with multiple sensing modalities: microphones capturing ambient audio, Wi-Fi devices detecting signal reflections and variations, and Bluetooth Low Energy (BLE) devices identifying proximity to our phones and wearables. Higher-end devices take this further, using mmWave radar and ultrasound sensing in TVs or smart speakers to recognize presence, gestures, and even subtle micro-motions such as breathing.

Some examples of how AI can infer the context of an environment:

- Sensor Fusion: Microphone arrays can estimate where speech is coming from (angle-of-arrival), while Wi-Fi or Bluetooth signals provide room-level proximity. Combined, these signals localize a person passively, so only the nearest device responds to a voice command. Hence, instead of saying “turn off the kitchen lights,” users can just say “lights off.”

- Behavior Modeling: Machine-learning models trained on daily activity patterns can detect anomalies: if there is no motion detected in the kitchen and no one brews coffee by 9am as usual, the system might send a wellness check alert for an elderly resident.

- Cross-Device Synchronization: AI frameworks can now synchronize context across multiple devices. For example, a laptop can lock its screen automatically once your wearable senses that you have walked away from your desk.
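To make the sensor-fusion example more concrete, here is a minimal sketch of how two speakers might decide which one should answer. It rests on several assumptions that are not in the text above: device positions and bearings share one room coordinate frame, the first two devices supply the angle-of-arrival bearings, the BLE reading is the signal strength of the user's phone or wearable, and the calibration values (`tx_power_dbm`, `path_loss_exp`) and the weighting are illustrative only.

```python
import math


def rssi_to_distance(rssi_dbm, tx_power_dbm=-59.0, path_loss_exp=2.0):
    """Log-distance path-loss model: rough distance in metres from an RSSI reading.

    tx_power_dbm is the assumed RSSI at 1 m; both defaults are illustrative.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * path_loss_exp))


def locate_from_bearings(dev_a, dev_b):
    """Intersect two bearing lines; each device is (x, y, bearing_in_radians).

    Treats bearings as infinite lines, so a fix behind a device is not rejected.
    """
    (xa, ya, ta), (xb, yb, tb) = dev_a, dev_b
    # Each line satisfies n . p = n . device_position, with n normal to the bearing.
    na = (-math.sin(ta), math.cos(ta))
    nb = (-math.sin(tb), math.cos(tb))
    det = na[0] * nb[1] - na[1] * nb[0]
    if abs(det) < 1e-9:
        return None  # bearings are parallel, so there is no unique intersection
    ca = na[0] * xa + na[1] * ya
    cb = nb[0] * xb + nb[1] * yb
    x = (ca * nb[1] - na[1] * cb) / det
    y = (na[0] * cb - nb[0] * ca) / det
    return x, y


def pick_responder(devices, audio_weight=0.5):
    """Return the name of the device estimated to be closest to the speaker.

    devices: dict of name -> {"x", "y", "bearing", "rssi"} (hypothetical format).
    """
    names = list(devices)
    a, b = devices[names[0]], devices[names[1]]
    fix = locate_from_bearings(
        (a["x"], a["y"], a["bearing"]), (b["x"], b["y"], b["bearing"])
    )
    best_name, best_distance = None, float("inf")
    for name, dev in devices.items():
        radio = rssi_to_distance(dev["rssi"])
        if fix is None:
            estimate = radio  # fall back to radio proximity alone
        else:
            acoustic = math.hypot(fix[0] - dev["x"], fix[1] - dev["y"])
            estimate = audio_weight * acoustic + (1 - audio_weight) * radio
        if estimate < best_distance:
            best_name, best_distance = name, estimate
    return best_name
```

The fixed 50/50 weighting is the simplest possible fusion rule; a production system would more plausibly weight each source by its measured uncertainty.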

Such capabilities require robust data pipelines, on-device inference for privacy, and federated learning techniques that train models without exporting personal data to the cloud.
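Federated learning, mentioned above, can be sketched in a few lines. The example below is a toy version of federated averaging under stated assumptions: each home fits a tiny linear model `y ≈ w*x + b` on its own data, and only the resulting weights (never the raw readings) are sent for averaging. The function names, learning rate, and model are illustrative choices, not a description of any vendor's pipeline.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Run gradient descent on one device; the raw data never leaves this function."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y
            grad_w += 2 * error * x
            grad_b += 2 * error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighting each client by its number of samples."""
    total = sum(client_sizes)
    dims = len(client_weights[0])
    return tuple(
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dims)
    )


def federated_round(global_weights, clients):
    """One round: every client trains locally, then only the weights are combined."""
    updates = [local_update(global_weights, data) for data in clients]
    sizes = [len(data) for data in clients]
    return federated_average(updates, sizes)


if __name__ == "__main__":
    # Two hypothetical homes whose private data follow the same trend y = 2x + 1.
    home_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
    home_b = [(1.0, 3.0), (1.5, 4.0), (2.0, 5.0)]
    weights = (0.0, 0.0)
    for _ in range(50):
        weights = federated_round(weights, [home_a, home_b])
    print(weights)
```

Sharing weights instead of data reduces exposure but does not eliminate it, which is why federated learning is often discussed alongside techniques such as differential privacy.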

Personal Computing Gets Personalized

Going beyond smart home automation, AI is also changing how we interact with our personal computers and devices. With agentic AI capabilities, our phones, computers, smartwatches, smart glasses, smart speakers, and vehicles can take actions on our behalf. For instance, smart speakers offer conversational voice capabilities to order groceries and take-out, hail cabs, make restaurant reservations, purchase movie tickets, and manage reminders and to-do lists. AI wearables can gather user context in real time by hearing what we hear, seeing what we see, and capturing our interactions.

Future operating systems may integrate “context engines” that continuously sense a user’s attention, focus, location, and intent. Imagine an AI that notices you’re deep in a video call and automatically mutes notifications across all devices. It can also cross-correlate context across our smart homes, workplaces, and even modern vehicles that offer conversational AI features.

For AI in homes and personal computing to succeed, trust must be earned. Users are increasingly concerned about who can access their data and how predictions are made. Emerging fields of research such as explainable AI and differential privacy will be crucial.

The industry is moving towards on-device processing and local storage of sensitive data, so that personal context never leaves the home. Chipmakers are accelerating this shift by adding neural processing units (NPUs) to consumer hardware, enabling real-time inference without draining battery life or incurring cloud latency. Matter, a new smart-home interoperability standard, is laying the groundwork for secure device-to-device communication, further reducing dependence on vendor-specific clouds.
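Differential privacy, mentioned above, is easiest to grasp through its simplest building block, the Laplace mechanism. The sketch below assumes a scenario that is not in the text: a home wants to report how many evenings a room was occupied without revealing any single evening exactly. The function names and the `epsilon` value are illustrative, and this is a teaching sketch rather than a hardened implementation.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=random):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon is sufficient.
    """
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical log: True means the living room was occupied that evening.
    evenings = [True, True, False, True, False, True, True]
    print(private_count(evenings, lambda occupied: occupied, epsilon=1.0))
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives more accurate answers. Choosing that trade-off is a policy decision as much as an engineering one.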

Smart Environments Offer New Services

AI-driven intelligent environments and spaces open new opportunities:

- Predictive Maintenance: Appliances and vehicles can detect wear patterns early and trigger service requests before failure, streamlining supply chains and improving user experience.

- Personalized Energy Management: AI can optimize HVAC schedules and appliance usage to save energy, a growing priority for sustainability goals. Adding intelligence to devices already in the home can deliver more value without new hardware. For example, a Wi-Fi router can be repurposed as a motion and occupancy sensor.

- Healthcare and Wellbeing at Home: AI-powered vital sign monitoring and fall detection can extend independent living for seniors and may lower healthcare costs. The next generation of wearable devices will track more than just heart rate, offering AI fitness coaching and insights in real time.
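The router-as-occupancy-sensor idea from the energy management item can be approximated with a very simple heuristic: a person moving through a room disturbs the radio signal, so the signal strength fluctuates more than it does in an empty room. The sketch below flags motion when the variance of recent readings exceeds a threshold. Real Wi-Fi sensing systems typically rely on richer channel measurements and learned models; the class name, window size, and threshold here are assumptions chosen for illustration.

```python
from collections import deque
from statistics import pvariance


class MotionDetector:
    """Flag motion when recent signal-strength readings fluctuate strongly."""

    def __init__(self, window=20, threshold=4.0):
        # Sliding window of the most recent readings (dBm).
        self.samples = deque(maxlen=window)
        # Variance (dB^2) above which the room is treated as occupied; illustrative.
        self.threshold = threshold

    def update(self, rssi_dbm):
        """Add one reading and return True if motion is currently suspected."""
        self.samples.append(rssi_dbm)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet
        return pvariance(self.samples) > self.threshold
```

An HVAC controller could use such a signal to relax the temperature setpoint after a room has shown no motion for some time, which is one way existing hardware can contribute to energy savings.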

Toward Ambient AI

The next step in the AI revolution is not just smarter devices but smarter environments. Researchers are exploring systems that leverage ambient sensing to model not only physical context but also user goals and emotional state. Such a future will require collaboration across AI research, UX design, hardware development, edge computing, and public policy.

The broader vision is to create an ecosystem of devices that are not just connected but responsive to human life, with AI at the forefront, supporting humans rather than competing against them.