The internet has become the world’s largest playground and marketplace, where people of all ages learn, socialise, and transact. For policymakers and businesses alike, ensuring that children are protected while adults retain frictionless access to lawful products and content has become a defining challenge of the digital economy.

At the heart of that challenge lies age assurance: determining whether a user is above or below a certain age threshold, without necessarily identifying who they are. It sounds simple, but designing systems that are both privacy-preserving and commercially practical requires careful alignment between technology, regulation, and ethics.

The new compliance landscape

Regulators across the world are tightening the net around age-restricted access. The UK’s Online Safety Act now mandates robust child protection measures for digital platforms. The Tobacco and Vapes Bill extends age verification obligations to retail and marketing. Several U.S. states have introduced laws restricting minors’ access to online material, while Australia is preparing to ban under-16s from social media altogether.

In parallel, regulators in Europe and Canada are making clear that age assurance must align with data minimisation and privacy by design. This convergence is reshaping compliance from a checkbox exercise into an architectural question: how can businesses meet their duty of care without building surveillance systems?

Why traditional methods fail

The industry’s first-generation solutions – ID uploads, credit card checks, and self-declared birthdays – have proven wholly inadequate. They are either too weak to protect minors, as with a self-declared birthday that anyone can falsify, or too invasive for adults, as with uploading an identity document.

Static ID-based checks also introduce new liabilities: retention of sensitive personal data, exposure to breaches, and user friction that deters legitimate customers. For businesses operating in competitive retail or online environments, these are not minor costs — they are commercial risks.

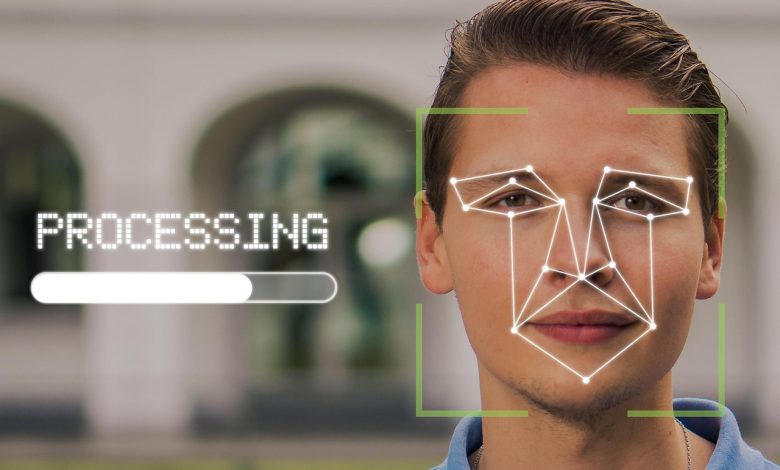

The emergence of AI-powered age estimation

AI offers a new path forward. Instead of verifying who a person is, AI age estimation determines whether someone appears to be above or below a given age threshold using computer vision models that run in real time.

When implemented responsibly, these systems deliver three major advantages:

- Privacy by Design – modern models can run directly on-device, processing imagery locally and discarding it instantly. No biometric data leaves the device, and no persistent identifiers are created.

- Speed and Frictionless Experience – real-time estimation supports seamless journeys at self-checkout kiosks, vending machines, or content gates, ensuring adult customers aren’t burdened by compliance.

- Scalability and Inclusion – properly calibrated AI systems can maintain accuracy across demographics and environments, bringing consistent protection standards to both online and offline use cases.

This makes AI-based age estimation a bridge between compliance and commerce. Businesses can deploy age-appropriate design without compromising user trust or experience.
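
The threshold decision described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the `AgeEstimate` type, the `margin` field, and the `buffer_years` parameter are all assumptions introduced here, and the estimate is taken to come from a hypothetical on-device model that hands over only a number, never the image.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    ALLOW = "allow"
    DENY = "deny"
    FALLBACK = "fallback"  # hand off to an alternative method


@dataclass(frozen=True)
class AgeEstimate:
    """Output of a hypothetical on-device model: no image, no identity."""
    years: float   # point estimate of apparent age
    margin: float  # assumed uncertainty of the estimate, in years


def decide(estimate: AgeEstimate, threshold: int, buffer_years: float = 0.0) -> Outcome:
    """Turn an age estimate into a threshold decision.

    Allow only when even the low end of the estimate clears the threshold
    plus the buffer; deny only when even the high end falls below the
    threshold; otherwise defer to another method.
    """
    low = estimate.years - estimate.margin
    high = estimate.years + estimate.margin
    if low >= threshold + buffer_years:
        return Outcome.ALLOW
    if high < threshold:
        return Outcome.DENY
    return Outcome.FALLBACK


if __name__ == "__main__":
    # Illustrative values only, not measured data.
    for years in (34.0, 21.0, 12.0):
        print(years, decide(AgeEstimate(years, margin=3.0), threshold=18, buffer_years=5.0))
```

The buffer is a design choice rather than a requirement: widening it sends more borderline adults to a secondary check, while narrowing it raises the risk of admitting someone under the threshold.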

Raising the bar: innovation to standardisation

As the technology matures, the focus is shifting from proof-of-concept to proof-of-compliance. The Australian Government’s Age Assurance Technology Trial has set a global precedent by benchmarking multiple approaches under real-world conditions. Similarly, the U.S. National Institute of Standards and Technology (NIST) has begun evaluating age estimation algorithms for fairness, accuracy, and robustness.

Most notably, the forthcoming ISO/IEC 27566 standard on Age Assurance Systems will formalise technical and procedural criteria for trustworthy deployment — from data protection safeguards to model evaluation practices.

These frameworks are crucial. They create a shared language of trust between regulators, technology providers, and commercial adopters. As a result, businesses can select solutions that are independently validated, interoperable, and policy-aligned.

Trust and accountability

Accuracy alone does not make an AI system trustworthy. The credibility of any age assurance framework depends on adherence to five foundational principles:

- Transparency: Users should know what is being analysed, how, and why.

- Fairness: Models must be tested and tuned across age, gender, and ethnicity to avoid bias.

- Auditability: Compliance logs should demonstrate accountability without retaining personal data.

- Fallback: When AI confidence is low, alternative methods should be available.

- Continuous Validation: Independent benchmarking and certification ensure sustained reliability.
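
To make the auditability principle concrete, here is one possible shape for a compliance log entry that records the check rather than the person. It is a sketch under stated assumptions: the field names and the `audit_entry` helper are hypothetical and not drawn from any standard, and the allow-list is simply one way to stop personal data creeping into the log.

```python
import json
from datetime import datetime, timezone

# Fields a caller must supply in this sketch (an assumption, not a list
# taken from any standard). None of them identifies a person.
REQUIRED_FIELDS = {"threshold", "outcome", "method", "model_version"}


def audit_entry(**fields: object) -> str:
    """Build one JSON log line describing a check, not the person checked.

    Rejects any field outside the allow-list, so an estimated age, an
    image hash, or a device identifier cannot be logged by accident.
    """
    extra = set(fields) - REQUIRED_FIELDS
    missing = REQUIRED_FIELDS - set(fields)
    if extra or missing:
        raise ValueError(f"unexpected={sorted(extra)} missing={sorted(missing)}")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        **fields,
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    # A low-confidence estimate that was routed to an alternative method.
    print(audit_entry(threshold=18, outcome="fallback",
                      method="facial_estimation", model_version="demo-1"))
```

An entry like this lets an auditor count how often each outcome and fallback occurred per model version without the log ever holding an image, an estimated age, or an identifier.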

Making age-appropriate design work for business

Age assurance is not only about protecting children; it’s about enabling businesses to design responsibly. Retailers, gaming platforms, streaming services, and advertisers all need practical, low-friction tools to tailor access and content based on age appropriateness, without driving users away or breaching privacy.

AI-driven systems, deployed under standardised, privacy-first frameworks, make this possible. They allow companies to comply with emerging legislation, safeguard young users, and maintain a seamless experience for adults, all within a unified, ethical infrastructure.

A responsible way forward

The convergence of policy, AI, and standardisation marks a turning point. Governments are raising expectations. Consumers are demanding privacy. And the technology has finally matured enough to deliver on both.

If the next generation of AI systems is guided by principles of privacy, accountability, and fairness, then it’s easy to picture a digital ecosystem where protection and participation are in balance, not in conflict.