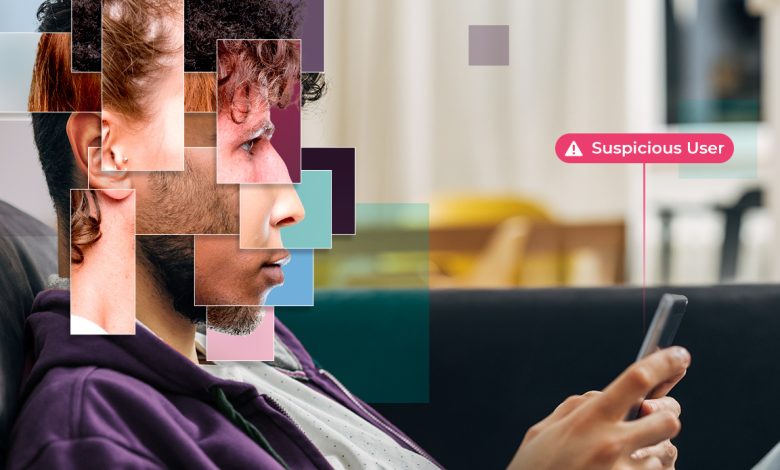

Fraud-as-a-Service (FaaS) networks have reached an operational efficiency that resembles full-fledged businesses—only their product is fraud, scaled through generative AI (GenAI). These groups coordinate account takeovers (ATO), create synthetic identities and deploy evasion tactics at a pace manual detection can’t match and traditional fraud controls can’t handle.

With GenAI, fraudsters can now automate the creation of digital identities at scale. Annual fraud losses in the US are expected to jump from a recorded $12 billion in 2023 to $40 billion by 2027, driven by GenAI-powered deepfakes and synthetic identities. These attacks cause both hard losses, like financial theft, and soft ones, like reputational damage, compliance failures and eroded trust. Attackers use large language models (LLMs) and deepfakes to target both consumer-facing and business-to-business (B2B) markets. In just the last year, 25% of executives have directly encountered deepfake abuse, up 700% since 2023.

GenAI-powered fraud is the new frontline threat. Deepfakes drive attacks that erode trust, push the boundaries of risk and force organizations to re-engineer the fundamentals of identity and security. At the same time, the scale and speed of abuse are exposing urgent new vulnerabilities, pushing both private and public sectors to evolve fraud prevention strategies toward more resilient, adaptive frameworks.

Adaptive Defenses Against AI Fraud

GenAI lets attackers convincingly impersonate real users at scale, bypassing rules-based verification systems and enabling tactics that once required time, knowledge or expertise. Deepfake-generated video, audio and fabricated personal data now power everything from phishing and onboarding scams to social engineering and ATOs. More than half of financial professionals in the US and UK have been targeted by deepfake scams, with 43% reporting real losses.

Synthetic identity fraud, where real and AI-generated data combine to form “new” personas, is one of the fastest-growing threats, projected to cost businesses at least $23 billion by 2030. These attacks often bypass conventional identity checks, leaving platforms with static or manual controls unable to identify, investigate or contain abuse. Over the last two years, deepfake incidents surged by 700%, and nearly one-quarter of executives report direct targeting of key financial and/or accounting data.

AI-powered malware is further compounding the problem. It adapts quickly, learns from failed attempts, and constantly evolves to bypass detection. This real-time, dynamic behavior makes traditional fraud controls ineffective, especially as fraud groups automate cross-border attacks with minimal effort or cost.

As fraud leaders turn to adaptive and intelligence-driven defenses, like continuous authentication, real-time behavioral analytics and predictive anomaly detection, they face a bigger challenge: protecting trust. Behind every surge in industrialized fraud are real people. Families devastated by synthetic identity scams. Personal lives upended by deepfake voice attacks. Entire communities thrown into confusion by widespread impersonation. GenAI moves fast, and it’s turning everyday users into targets or even unwilling participants. The impact isn’t just financial. It’s emotional, and recovery is harder than ever.

GenAI gives fraudsters an asymmetric advantage, and beating it will take more than just better tools. Enterprises need to invest in dynamic technical defenses and champion collaboration and data sharing across sectors. Regulators should move proactively, working with international partners to set clear standards for digital identity, protect biometric data and prioritize user control. Leaders need to educate their teams, empower customers and invest in technologies that can detect and interpret AI-driven manipulation at scale, with transparency around how those systems work. The way companies balance privacy, security, and ethical AI will shape more than just compliance. It will define how we build trust online. By embedding resilience and accountability into new fraud prevention models, businesses and governments will secure not just transactions, but trust in the AI era.

Denmark’s Digital Identity Model and Global Implications

Europe is at a turning point in digital identity regulation. Denmark’s proposed Digital Identity Protection Act (DIPA) would classify biometric traits, like face, voice and digital likeness, as intellectual property, protected for up to 50 years after death. It would give individuals the right to demand immediate removal of unauthorized deepfakes, shift the burden of proof to content publishers and allow compensation even when direct financial loss is not evident. The proposal also targets technology providers, media platforms and AI developers, requiring strict consent mechanisms and data handling protocols. DIPA has the potential to redefine the legal foundation for identity in fraud prevention across the continent, and eventually worldwide.

If adopted broadly, it could also trigger a shift away from default, widespread biometric use, pushing companies to reserve biometrics for high-risk cases and rely more on multilayered, non-biometric verification across the customer journey. As Denmark prepares to champion these standards across the EU, organizations should prepare for an evolving regulatory landscape that prioritizes users’ digital sovereignty, proactive removal rights and stronger platform accountability.
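One way to picture "reserve biometrics for high-risk cases" is as a step-up verification policy. The sketch below is illustrative only: the risk tiers, the check names and the `required_checks` function are assumptions made for this example, not requirements drawn from DIPA or from any specific product.

```python
from enum import Enum
from typing import List


class Check(Enum):
    # Hypothetical verification steps, ordered roughly from least to most intrusive.
    PASSWORD = "password"
    DEVICE_BINDING = "device_binding"
    ONE_TIME_CODE = "one_time_code"
    BIOMETRIC = "biometric"


def required_checks(risk: str, biometric_consent: bool) -> List[Check]:
    """Return the checks to run for a session at the given risk tier.

    Assumed tiers: "low", "medium", "high". Biometrics are requested only
    for high-risk sessions, and only when the user has explicitly consented.
    """
    if risk not in ("low", "medium", "high"):
        raise ValueError(f"unknown risk tier: {risk!r}")

    checks = [Check.PASSWORD]
    if risk in ("medium", "high"):
        # Layer non-biometric factors first.
        checks += [Check.DEVICE_BINDING, Check.ONE_TIME_CODE]
    if risk == "high" and biometric_consent:
        # Biometrics are the last resort, never the default.
        checks.append(Check.BIOMETRIC)
    return checks
```

Under these assumptions, a low-risk login needs only a password, a medium-risk one adds device binding and a one-time code, and a biometric prompt appears only for a high-risk session where consent is on record.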

Future-Proofing Against Industrialized Fraud

Industrialized, AI-powered fraud is scaling fast, and it’s forcing many sectors to rethink old risk management playbooks. Stopping it requires intelligence-led defenses that connect real-time behavioral analysis, transaction monitoring and predictive anomaly detection.

Multimodal analytics, which combine signals from behavior, device, network and location, allow organizations to improve fraud detection rates, cut false positives, and streamline investigations to support faster incident response. Insurance and finance leaders already rank GenAI-driven fraud detection as an urgent priority for 2025 and beyond. This signals an industry-wide transition towards robust, adaptive security layered with proactive human oversight and regulatory compliance.
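As a rough illustration of how signals from behavior, device, network and location might be combined, the sketch below blends four per-channel anomaly scores into one risk score and maps it to an action. The weights, thresholds and names (`Signals`, `risk_score`, `decide`) are hypothetical; a production system would more likely learn them from labeled data than hard-code them.

```python
from dataclasses import dataclass, asdict


@dataclass
class Signals:
    # Each value is an assumed anomaly score: 0.0 (normal) to 1.0 (highly unusual).
    behavior: float
    device: float
    network: float
    location: float


# Hypothetical weights chosen for illustration; they sum to 1.0.
WEIGHTS = {"behavior": 0.40, "device": 0.25, "network": 0.20, "location": 0.15}


def risk_score(signals: Signals) -> float:
    """Weighted average of the four channel scores, in the range 0.0 to 1.0."""
    values = asdict(signals)
    for name, value in values.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return sum(WEIGHTS[name] * value for name, value in values.items())


def decide(score: float, review_at: float = 0.4, block_at: float = 0.7) -> str:
    """Map a risk score to an action; the thresholds are illustrative assumptions."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"  # route to a human analyst
    return "allow"
```

The "review" tier is where human oversight fits: rather than forcing a binary allow-or-block outcome, borderline sessions go to an analyst, which is one way to reduce false positives without loosening controls.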

Building Resilience in the Age of GenAI

The next era of fraud prevention will challenge not only how organizations respond to cyber threats, but also how society values trust, autonomy and privacy. Balancing progress and protection will increasingly shape decisions beyond compliance—touching on civil rights, ethical use of technology and shared responsibility for the integrity of digital identities.

How companies choose to answer these challenges will define not just who wins the fight against fraud, but how digital trust is built, by design and through transparency, for everyone navigating an AI-powered world.