Will AI replace creatives? This million-dollar question could well go down in history as one of the defining questions of our time. Hopefully, though, we will be able to look back on it in a few years and laugh, as we would at an entertaining if slightly humiliating anecdote from our adolescent years.

Nevertheless, redundancy by machine is a fear that still preys on the minds of writers, actors, and artists alike. But according to Adobe, Generative AI is less a career killer than a career accelerator.

At the Adobe MAX convention in Miami, which took place earlier this month (14th – 16th October), company representatives shared not only the latest technological developments in their GenAI-accelerated toolkit, Adobe Creative Cloud, but also their stance on how AI is impacting the world of creatives.

As the leading global provider for all things digital and creative, it comes as no surprise that Adobe’s vision of AI-enhanced creativity is a rosy one. In fact, the company is betting on AI to be the next game-changer for the creative industries, enabling humans to up their game by spending less time worrying about the end product and more time investing in the creative process.

This is why the company has spent the last few years, and a substantial R&D budget, creating what can best be described as the ultimate digital playground for creatives. A digital space where AI-powered image generation can move almost as fast as the human imagination. Where moods, textures, and colours can be abstracted from a set of pictures and projected onto a new image at the click of a mouse. And where you only need to articulate the ideas in your head to see them spring to life on the screen.

The latest in Adobe Firefly

Firefly is Adobe’s full suite of Generative AI tools, initially released in beta to all Creative Cloud subscribers in June 2023. Since then, these tools have been enhanced with over 100 updates, including several new capabilities added in response to user demand.

These include:

- Photoshop’s Generative Expand: users can adjust the perspective of a photo by adding an extra rim of background in any direction that matches the original photo.

- Photoshop’s Generative Fill: users can edit not just the tone of their images but also their main content, using text prompts to quickly generate new elements to add to the image and/or replace unwanted items.

- The Remove tool, available in both Photoshop and Lightroom: users can easily remove unwanted elements from their images. Thanks to intelligent object detection capabilities, this even works when these elements are entangled with other objects and/or are spread across the image.

- Lightroom’s Quick Actions for editing on-the-go: users can automatically give their photos a quick touch-up to enhance the image’s colour, tonality, symmetry, etc.

Additionally, Adobe has unveiled several completely new products, including the new Firefly video model, Project Neo, and Project Concept.

Firefly’s new video model

Currently being rolled out to beta users in Adobe’s video editor, Premiere Pro, the new video model will allow users to generate video clips of up to 5 seconds using only text prompts.

The end results of the prompts showcased are practically indistinguishable from real footage, thanks to the high level of visual detail and conformance with real-world physics for realistic motion depiction. The model is able to capture, for example, the swish of a dancer’s hair, which, although a small and seemingly insignificant detail, is incredibly complex and difficult to replicate.

The generative capabilities of the model will also be integrated across Creative Cloud via tools such as Generative Extend, which allows creators to generate additional footage based on what they already have to extend and modify existing videos.

“The Firefly video model is the first publicly available video model designed to be commercially safe. It is really following everything we’ve done, because just like all the other Firefly models, it’s only trained on content that we have the right to use. And everything you’re looking at behind me was made with Firefly. A few things to notice: amazing detail, real-world physics, accurate text rendering, realistic motion, and high-quality camera angles and movement. Now, in addition to text to video support, the Firefly video model has world-class image to video support. Give it a static image, tell it what you want it to do, and it will turn it into a video format. And finally, the model has exceptional prompt coherence, so you can write incredibly intricate prompts, and the model will generate the clips, the B-roll, visual effects and more. And as I mentioned, our primary focus with this model development is integration into the tools and to augment your creative workforce.” ~ David Wadhwani, President, DMe, Adobe

Project Neo

With Project Neo, Adobe brings its Creative Cloud subscribers an accessible new platform that lets them seamlessly translate their illustrations and visual designs into 3D form.

Created primarily with ease-of-use in mind, it has several functions which allow users to play around with the 3D modality, and easily integrate it into their established workflows. These include:

- Familiar tools from other Adobe applications built to move, scale, and rotate 3D objects

- Rapid generation of 3D images from basic sketches

- Integration with Adobe Illustrator for easy export of designs between the platforms

- Rapid switching between different illustrative styles, such as pixellated, pencil sketch, and realistic

Project Neo is currently being rolled out to beta users, and is inspired by the challenges and experiences of Adobe’s community of artists and illustrators.

“What we’ve heard from you is that you want the flexibility of creating in 3D, but that learning to use 3D tools is just too daunting. And while you can create 3D-looking artwork already with Adobe Illustrator, just simple changes like rotating an object can mean that you have to start over from scratch. So we thought, what if there was a tool for creating 3D content designed from the ground up for designers, a tool designed to be approachable and fun? What if there was a tool focused less on 3D modelling, and more on 3D illustration? Well, we have now done that with Project Neo, a web-based 3D illustration tool built specifically for graphic designers.” ~ Ashley Still, SVP & GM, Creative Product Group, Adobe

Project Concept

In contrast to Project Neo, Project Concept focuses on an earlier aspect of the creative process, aiming to digitalize even the imaginative conception of an idea.

How does it do this, you ask?

Essentially, Project Concept combines a virtual mood board with a Generative AI assistant, thus providing an unlimited digital canvas for the mind’s sprawling ideas, while helping users overcome any blank-page fears that might be holding them back.

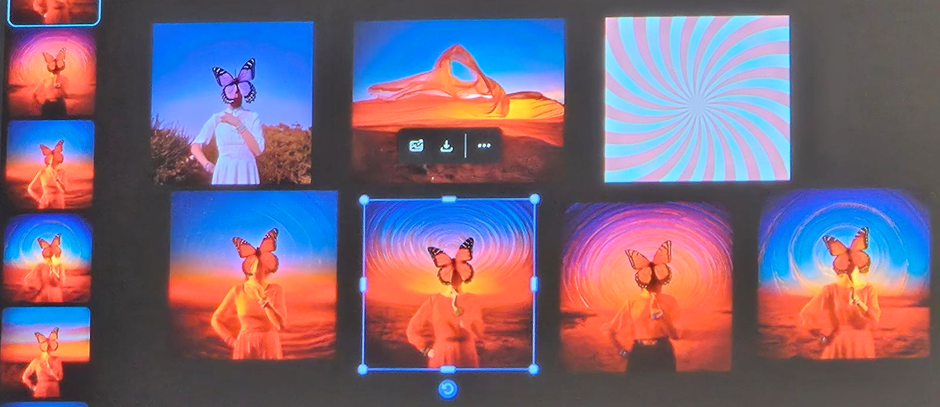

What’s more, with Project Concept, users can generate an entirely new image based on two completely separate and unrelated images, essentially replicating the process of visual perception and abstraction that we would otherwise perform ourselves.

In fact, Project Concept replicates this process so accurately that it even allows you to pick and choose various visual elements that you like from one image and combine them with other visual aspects from another image.

For example, in the picture below, Generative AI is used to combine the subject of the top left image with the colour scheme of the top middle image and the motion/shape of the top right image, creating a set of images below that depict different iterations of this combination.

How well do Adobe’s GenAI tools serve the needs of creatives?

At their MAX conference, Adobe made it clear that their focus remains centred around serving the needs of their creative community in the best and most innovative way possible.

But when it comes to Generative AI, it remains unclear if coming up with all these new ways to automate/semi-automate fundamental design processes is actually serving the needs of the creative community, or whether it is really just putting the weapon of their destruction into their own hands.

Nevertheless, the genie can’t be put back in the bottle, which means that what really matters now is how effectively GenAI technology can be used to meet the demands of the creative community.

On this front, Adobe cannot be faulted, demonstrating a commendable commitment to listening to the concerns of the creative community at a time of particularly disruptive technological change.

“Everything we do begins with you, with being part of the community, by sharing and learning, you’ve taught us that you value product performance and innovation that improves your daily quality of life. You want faster editing and bridge your control and expressiveness so you can spend less time on what you have to do and more time on what you want to do. You’ve told us that you want to expand your skills across creative mediums, and while you have a lot of questions about generative AI, you’re clear that you want the choice of whether or not to use it, and when you do use it, you want it to complement, not replace, your skills and experience. And finally, told us that community is vital. You want to connect, share, learn and be inspired by others.” ~ Ashley Still, SVP & GM, Creative Product Group, Adobe

And this is not a case of all bark and no bite. To their credit, Adobe has taken measures to address one of the most pressing concerns for creatives in the age of AI: the problem of attribution.

Their new open-source software, Content Credentials, enables creators to digitally sign their content and indicate their preferences for how it is subsequently used by AI companies. With it, Adobe has become one of just a few companies to take the initiative and come up with some form of protection for the livelihoods of creative professionals whose work is being used to train AI models without consent.

While this protection still doesn’t manage to offer creatives complete immunity from this threat, it is certainly better than nothing, further consolidating Adobe’s commitment to addressing the needs of the creative community, whether this is providing them with protection from AI, or providing them with access to AI.

“For hundreds of years, attribution was easy. You signed your name in the corner of your canvas, or you engraved it on the pedestal of your statue. But in the age of digital art, attribution is far more difficult. Most digital art isn’t signed, and it’s way too easy for an image or illustration to just spread all over the world with no connection to the creator who made it. Now, for the sake of your career, people need to know what you are capable of, and that’s one reason we have developed Content Credentials, an open-source, cross-industry standard that allows anyone to securely attach information to a piece of digital content.” ~ Scott Belsky, Chief Strategy Officer and EVP, Design & Emerging Products, Adobe

Adobe’s vision for the future of creativity

For Adobe, the dawn of Generative AI represents a paradigm shift in the creative process, fundamentally shifting the role of the artist from being one that requires the skilful honing of a manual craft to something more akin to a storyteller operating within the visual domain.

In the view of Alexandru Costin, Adobe’s VP of Generative AI, this is an upgrade rather than a downgrade for creative professionals, though perhaps this depends on why you enjoy your craft in the first place.

“Creative professionals are going to be the ones upskilling themselves to mini creative directors telling stories to different segments and audiences in a more high level and semantic way, and we will play a role in being able to render giving them the control over how the story is told.” ~ Alexandru Costin, VP of Generative AI at Adobe

But don’t all these GenAI tools make creativity a little too easy, you ask? Well, short answer – yes.

However, visionaries of the tech world like Adobe would probably argue that the creative process, just like any other process, should become easier. That’s why they’re creating the ultimate digital playground for creatives, allowing humans to get back to doing what humans arguably do best: telling stories.

After all, if AI doesn’t equate to less work and more play for humans, then really, what’s the hype all about?