AI adoption is rising rapidly across industries. Organizations are experimenting with new tools, piloting workflows, and building systems that perform well in early tests. However, many of these efforts fail to transition into reliable, long-term products.

Projects that seem successful in controlled settings often stall when exposed to real conditions. Once actual users, unusual scenarios, and higher stakes come into play, early confidence fades. Engagement drops, and projects quietly lose priority.

These setbacks usually have little to do with model accuracy or technical limitations. Models may continue to perform well according to standard benchmarks, and infrastructure may be functioning as intended. Yet the system still falls short of delivering consistent value.

The gap between technical success and day-to-day reliability is rarely closed by better algorithms. It is a systems problem, rooted in how AI initiatives are framed, measured, and governed once they leave controlled environments. Understanding what causes AI efforts to stall means looking at the decisions that define how systems are run, not just how they're built.

Common Failure Points in Enterprise AI Initiatives

Enterprise AI failures often arise from common underlying issues, even if they appear different on the surface. The problems below show where projects struggle long before technical limits are reached.

1. Problem-Solution Mismatch

Many projects begin with unclear goals or an assumption that AI is the only path forward. Teams introduce AI when a simpler approach would have been enough, or they automate decisions without identifying who is responsible for the outcomes. This ambiguity makes it hard to identify the sources of failure.

2. Misaligned Success Metrics

Early reviews often focus on accuracy, precision, and other technical metrics. These do matter, but they overlook the broader effects of false positives, rare events, user pushback, and disruptions to how work gets done. The actual impact often emerges only after real users interact with the system.

3. Data Fragility

Training data captures a specific moment, while real conditions change continuously. Without effective monitoring, the data a model sees in production can gradually drift away from the data it was trained on. Issues may go unnoticed until significant discrepancies in output occur.

4. Organizational Gaps

Many failures have little to do with model design. They arise because teams launch AI capabilities without clear product ownership, escalation plans, or decision authority. Engineering may deliver a functional system, but if no one is accountable for its operations, responses to problems become inconsistent and slow.

None of these problems stem from a lack of technical skill. The projects fail because the surrounding system was never designed to support long-term operation under real-world conditions.

Why Pilot Success Doesn’t Mean Readiness

Many organizations treat a successful pilot as confirmation that an AI system is ready for real use. A pilot shows that something can work under controlled conditions, but it does not show how it will behave once people depend on it every day.

Pilots reward small, contained demonstrations. Production environments require steadiness, clear ownership, and predictable behavior. Once deployed at scale, AI systems meet a range of users, unusual cases, and situations that cannot be fully tested in advance.

When unexpected behavior inevitably emerges, trust becomes the limiting factor. Once trust erodes, recovery is extremely difficult. As confidence drops, teams shift back to manual steps, and the system loses support across the organization. The model may still produce accurate results, but the people responsible for outcomes no longer trust it to guide decisions.

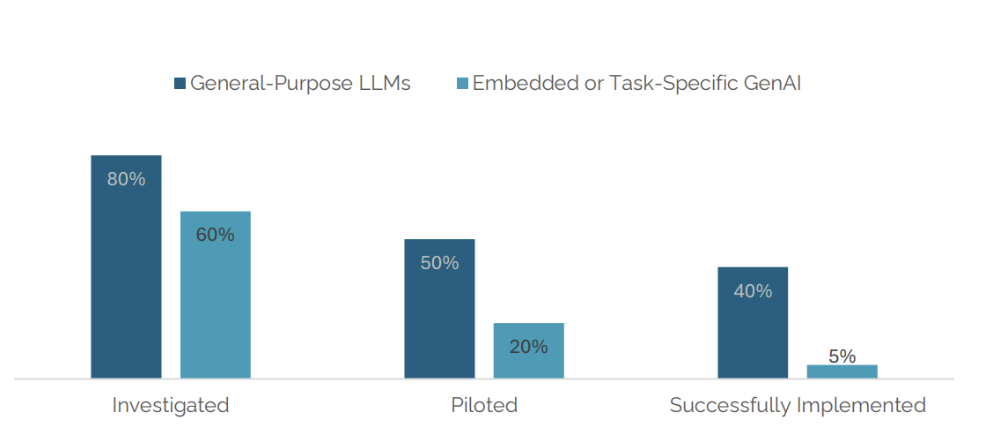

This problem is widespread. The MIT NANDA initiative found that 95% of organizations gained no meaningful return from their GenAI projects, and only 5% succeeded in integrating AI into production at scale. These numbers highlight how often pilots are mistaken for progress when the real barrier is operational readiness.

Exhibit: The steep drop from pilots to production for task-specific GenAI tools reveals the GenAI divide

Source: The GenAI Divide: State of AI in Business 2025 | MIT

The move from pilot to production introduces new responsibilities. Real users rely on system decisions, and the organization must support oversight, ownership, and clear intervention paths. Scale becomes possible only when these responsibilities are defined and maintained.

Reframing Ethical AI as a Scaling Requirement

Image: Ethical AI by VectorMine | Shutterstock

Ethical AI discussions often emphasize fairness, transparency, and accountability. Too often, these principles are reduced to checkboxes in the development process. When treated as paperwork instead of practical requirements, they fail to improve how systems function.

For large-scale systems, responsibility must guide how decisions are designed, tracked, and corrected. Many projects struggle during the shift from pilot to production because these expectations were never built into the design. The gaps are rarely intentional, but they leave teams with little guidance when outcomes diverge from early tests.

As AI increasingly influences customer interactions, operational tasks, and business decisions, teams shift from evaluating technical metrics to assessing whether the system performs reliably in real-world situations. To succeed at scale, systems need:

- Clear accountability: Someone must own automated decisions, even when outcomes are probabilistic. Without such explicit ownership, issues are treated as isolated technical problems rather than as signals that the system needs attention.

- Actionable transparency: Teams must see confidence levels, uncertainty, and triggers for human review. Information is useful only when it supports immediate action.

- Consistency and predictability: Systems must behave steadily across similar inputs. Stability matters as much as accuracy because people rely on repeatable outcomes.

- Built-in safety mechanisms: Teams must be able to pause or adjust system actions quickly without triggering new errors downstream.

Viewed this way, responsible AI isn’t a barrier. It supports growth by making sure systems can be trusted as their use expands.

How Ethical AI Systems Operate in Practice

Intent is not enough to keep AI systems dependable. The system must reflect clear design choices and daily practices that shape how it performs in the hands of real users.

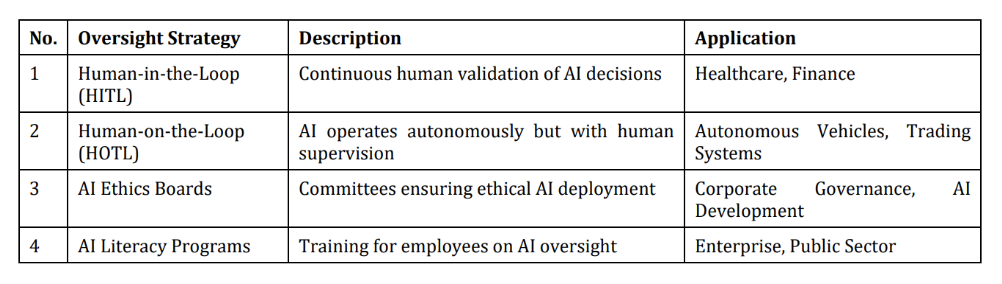

One important approach is routine human involvement. Systems should flag uncertainties, escalate unclear cases for review, and adjust automation based on confidence and context. Strategies such as human-in-the-loop and human-on-the-loop provide visibility into decisions and options for intervention. Additionally, AI boards and literacy programs help teams grasp system limitations and risks, reinforcing reliability and reducing risk through human oversight.

Table: Human Oversight Strategies in AI Systems

Controls must be embedded in the workflow from the start. Limits on system actions, clear boundaries for automation, and checks on sensitive decisions are crucial. Many failures occur not because of technical flaws but because a system is allowed to operate outside its intended conditions.
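To make these oversight ideas more concrete, here is a minimal Python sketch of an oversight gate that combines confidence-based escalation, limits on which actions the system may take, mandatory review for sensitive decisions, and a pause switch. The class name, action names, and the 0.90 threshold are hypothetical placeholders rather than values from any real system; in practice, the accountable owner would set them.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTOMATE = "automate"          # the system acts on its own
    HUMAN_REVIEW = "human_review"  # a person reviews before anything happens
    REJECT = "reject"              # the action is outside the system's mandate


@dataclass
class OversightPolicy:
    """Hypothetical routing policy; every default below is a placeholder."""

    auto_threshold: float = 0.90  # minimum confidence for unattended action
    allowed_actions: frozenset = frozenset({"send_reminder", "approve_refund"})
    sensitive_actions: frozenset = frozenset({"approve_refund"})
    paused: bool = False  # kill switch controlled by the accountable owner

    def route(self, action: str, confidence: float) -> Route:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"confidence must be in [0, 1], got {confidence}")
        # Boundaries first: never act outside the intended scope.
        if action not in self.allowed_actions:
            return Route.REJECT
        # While paused, or for sensitive decisions, a person always decides.
        if self.paused or action in self.sensitive_actions:
            return Route.HUMAN_REVIEW
        # Otherwise, automate only when the model is confident enough.
        if confidence >= self.auto_threshold:
            return Route.AUTOMATE
        return Route.HUMAN_REVIEW


policy = OversightPolicy()
print(policy.route("send_reminder", 0.95).value)   # automate
print(policy.route("send_reminder", 0.70).value)   # human_review
print(policy.route("approve_refund", 0.99).value)  # human_review
print(policy.route("delete_account", 0.99).value)  # reject
```

In this sketch, pausing sends work to human review instead of dropping it. That is one way to stop automated actions quickly without leaving gaps that cause new errors downstream.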

As systems grow, ongoing monitoring becomes as important as the initial build. Teams need visibility into drift, unusual behavior, and downstream effects. Monitoring should capture performance signals, along with indicators of trust, workload strain, and operational friction.
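Drift is one of the easier signals to automate. The sketch below uses the population stability index (PSI), a common drift statistic chosen here for illustration; the article does not prescribe a specific measure. It compares the distribution of a feature or model score in production against a baseline sample. The function name, the equal-width binning, and the bin count are assumptions. A commonly cited rule of thumb treats PSI above 0.25 as a significant shift, but any alert threshold should be calibrated for the system at hand.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a current sample of one variable.

    0 means identical binned distributions; larger values mean more drift.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        total = len(values)
        # eps avoids log(0) for bins that are empty in one sample.
        return [max(c / total, eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(baseline_scores, baseline_scores))  # 0.0
print(population_stability_index(baseline_scores, shifted_scores))   # well above 0.25
```

A statistic like this covers only the performance side of monitoring. Trust and workload signals, such as how often reviewers override the system, would need to be tracked alongside it.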

Finally, responsible AI systems benefit from clear escalation paths. When anomalies occur, teams need clarity on ownership, response options, and timing. Without this structure, issues remain active longer than necessary and can spread before anyone responds.

Reliable Patterns for Scaling AI Responsibly

AI systems that succeed in the long term share several patterns. These patterns come from clear expectations, consistent oversight, and steady adjustment as conditions change. The most reliable organizations tend to:

- Introduce autonomy gradually: Each step helps users understand the system’s behavior and build confidence before taking on more responsibility.

- Make tradeoffs clear: Speed, oversight, and safety are considered together so teams avoid surprises once the system reaches daily use.

- Measure success through tangible outcomes: Evaluations focus on user impact, operational fit, and decision quality, not only technical metrics.

- Revise the system continuously: The system is treated as an evolving product that requires regular review, refinement, and updates in response to new conditions.

- View failures as signals: Problems point to areas where design or process needs attention, helping teams improve the system without placing blame on individuals.
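The first pattern, introducing autonomy gradually, can be expressed as a small "autonomy ladder". In this sketch the system starts by only suggesting, and it moves up one level only after reviewers have agreed with enough of its recent decisions. It moves back down when agreement drops. The level names, window size, and thresholds are hypothetical. Many organizations would also require the accountable owner to sign off on each promotion rather than let it happen automatically.

```python
from collections import deque
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST_ONLY = 0       # system drafts, a person decides
    ACT_WITH_APPROVAL = 1  # system acts once a reviewer approves
    ACT_AND_NOTIFY = 2     # system acts; humans monitor and can intervene


class AutonomyLadder:
    """Hypothetical promotion/demotion rule based on reviewer agreement."""

    def __init__(self, window: int = 200, promote_at: float = 0.97,
                 demote_at: float = 0.90) -> None:
        self.level = Autonomy.SUGGEST_ONLY
        self.window = window
        self.promote_at = promote_at
        self.demote_at = demote_at
        # Most recent review outcomes: True if the reviewer agreed.
        # At ACT_AND_NOTIFY these would come from sampled audits.
        self.outcomes: deque[bool] = deque(maxlen=window)

    def record_review(self, reviewer_agreed: bool) -> Autonomy:
        self.outcomes.append(reviewer_agreed)
        if len(self.outcomes) < self.window:
            return self.level  # not enough evidence yet
        agreement = sum(self.outcomes) / len(self.outcomes)
        if agreement >= self.promote_at and self.level < Autonomy.ACT_AND_NOTIFY:
            self.level = Autonomy(self.level + 1)
            self.outcomes.clear()  # require fresh evidence at the new level
        elif agreement < self.demote_at and self.level > Autonomy.SUGGEST_ONLY:
            self.level = Autonomy(self.level - 1)
            self.outcomes.clear()
        return self.level
```

Clearing the window after every change means the system has to earn confidence again at each new level. The demotion rule treats a drop in agreement as a signal to pull back, not as an isolated incident.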

These habits keep AI systems stable as their environment shifts and expectations rise. They allow teams to build systems that continue to perform reliably once users depend on them.

The Role of Leadership in Ethical AI Deployment

Image: Leader discussing ethical AI and data governance by PeopleImages | Shutterstock

Ethical AI at scale is ultimately a leadership responsibility. Technical teams create the models, but leaders decide where automated decisions are acceptable, what level of uncertainty is tolerable, and who is accountable for outcomes.

Clear expectations from leadership influence how teams measure success. Metrics must extend beyond accuracy scores and reflect how the system performs in real situations. Leaders also signal that trust must be earned through steady, reliable behavior, not assumed once a system is deployed.

When leadership avoids questions of ownership and risk, teams often prioritize quick delivery and novelty. The result is tools that may work under ideal conditions but fail once real users depend on them. Effective leadership counters this by enabling responsible design. With clear accountability and practical transparency, teams can build systems that maintain user confidence and respond well when situations change.

Sustaining AI Success Beyond Launch

AI projects do not fail because ethics slow them down. They fail when ethical considerations arrive too late to preserve trust. Ethics is the discipline of designing systems that can be trusted repeatedly and predictably under real-world uncertainty.

Long-lasting systems share one defining trait: consistent behavior in everyday use. This consistency matters more than sophistication. The AI systems that endure are not the most advanced, but the ones designed to earn confidence continuously once people depend on them.

Leaders should treat trust as a requirement that must be built into processes from the start. Set clear ownership, keep human review where uncertainty is high, and give teams the visibility needed to understand how automated decisions play out. AI systems built on clear accountability and steady oversight stay reliable and create value long after launch.

About the Author:

Meghana Makhija is a Senior Product Manager Tech with over a decade of experience guiding advanced AI technologies from experimentation to trusted, real-world platforms. She specializes in translating innovations like GenAI, agentic AI, and machine learning into scalable, reliable products with measurable business impact. Meghana holds a master’s degree in Information Systems and is an active industry judge, speaker, mentor, and Senior Member of IEEE.