The relationship between artificial intelligence and data is both symbiotic and turbulent.

At the turn of the 21st century, technology innovators were confronted with such a massive overload of data that it spawned the concept of Big Data, and to this day, managing that data – from storage strategies to privacy protections – remains a significant challenge across sectors. Despite this excess, access to quality, labelled data remains a bottleneck for further AI development. As part of its strategy to become the global AI leader by 2030, China was projected to have an AI market worth US$11.9 billion by 2023, but more than 60% of AI insiders surveyed in 2019 reported that a lack of data was among the factors hindering their progress.

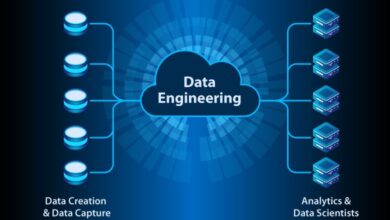

Although there is ongoing concern about AI replacing people, the advancement of artificial intelligence relies heavily on human intelligence, as preparing data for AI and machine learning (ML) requires people to label the data. Anaconda’s annual survey on The State of Data Science found that data scientists spend about 45% of their time on data preparation, such as loading and cleaning data, which significantly reduces the time they could spend innovating. Not only are considerable resources being devoted to data prep, but developers do not generate sufficient volumes of data to feed their AI models, and so they seek external sources, such as pre-packaged data, scraping, or relationships with data-rich entities. However, the majority of solutions available are either non-scalable or costly – or both – and data labelling solutions that promise to be scalable can run into quality issues as the projects get bigger.

And so, in their quest to obtain high-quality data to feed their models, AI developers are turning to crowdsourced data labelling as a solution to fill the data gap. In 2019, analyst firm Cognilytica reported that the market for third-party data labelling was $150 million in 2018, forecast to grow to more than $1 billion by 2023.

A Growing Gap

The events of 2020 may accelerate that growth forecast even more, as the pandemic has widened the data gap. In January, management consultancy BCMStrategy hosted a webinar that explored how low data environments present problems for risk managers and strategists. The discussion highlighted that the pandemic is both enlarging existing data gaps and generating new deficits. For instance, the decrease in passenger flights has affected the collection of weather forecasting data globally, with the European Centre for Medium-Range Weather Forecasts estimating that the resulting data deficit could reduce forecast accuracy by 15%. In terms of new data gaps, it is evident that the massive shift to remote school and work, alongside huge fluctuations in consumer spending decisions – groceries and home tech surged, while travel plummeted – is already creating gaps where data would normally be collected.

It remains too early to determine the full global impact of the pandemic, and data gaps are just one aspect. Regardless, technological advancement does not pause, especially as algorithms and hardware have become commodities for the AI industry, and AI developers are increasingly likely to turn to crowdsourced solutions to fill the data gaps.

Push for Progress

The momentum behind crowd-labelled data may be further magnified in sectors that lost business during the pandemic and are now in a budget squeeze, given that crowdsourcing is arguably the most cost-effective method of generating high-quality, labelled data. These cost benefits go beyond the price of projects – by opting for crowdsourcing, tech companies avoid the need to hire, onboard and train an in-house data-labelling team. Crowdsourced solutions like Toloka’s also eliminate the need for companies to develop data labelling tools in-house, or to invest in licensing those tools from a third-party vendor.

There’s no such thing as data overload in AI development, and data labelling is an unavoidable, time-consuming step in processing the data necessary to feed AI models. Obtaining the required volume of data was already a challenge leading into 2020, and the pandemic has both widened existing data gaps and created new ones. Further, the impact of the pandemic has driven many companies to take a step back and evaluate their business processes, resulting in a dramatic acceleration of their digital projects. A McKinsey survey of around 900 C-suite executives and senior managers at businesses around the world found that business leaders surprised even themselves with how quickly their digital projects advanced in response to Covid-19. On average, digital transformations surged ahead by seven years in the space of several months.

This widespread strategy assessment and digital acceleration will certainly include AI development, driving companies not only to give sophisticated AI/ML projects higher priority, but also to reassess how those projects are developed. As business leaders consider how to clear AI development bottlenecks by filling growing data gaps, they’ll look for solutions that increase the volume, efficiency and quality of data labelling while decreasing costs. The already dramatic growth predicted in crowdsourcing as a solution to label data appears poised to climb even higher.