Here’s a startling fact for you: only 39% of consumers trust companies to use AI responsibly, according to the Edelman Trust Barometer. Think about that. As AI becomes more embedded in our daily lives, from deciding our music playlists to influencing medical diagnoses, the very foundation it’s built on is shaky.

Having spent the last year helping build AI products at my design agency, I can say one thing: AI adoption is accelerating at a breakneck pace, but trust is seriously lagging. At a time when user trust is at an all-time low (owing to how information is being published and consumed across multimedia), designing trust in AI isn’t just a “nice-to-have” but a critical business imperative.

Over the course of this piece, I’ll walk you through the basics of designing trust in AI products, and how companies can build AI interfaces users actually trust – from showing the right information at the right time to making complex decisions feel transparent and explainable.

The Trust Gap in AI Systems: Why More Adoption Doesn’t Mean More Confidence

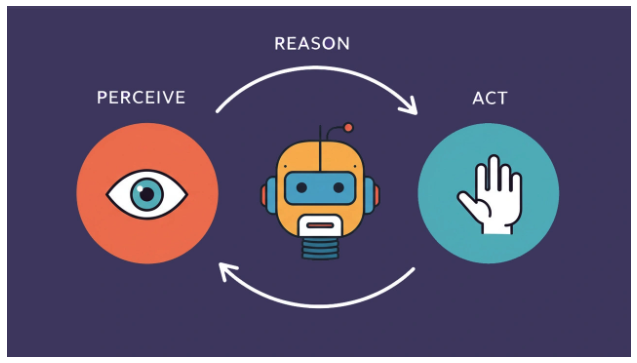

It’s a strange paradox, isn’t it? As more of us interact with AI daily, our trust in it seems to be stalling, or even shrinking. You’d think familiarity would breed confidence, but the opposite is happening. This growing divide is what I call the AI adoption-trust gap.

The core of the problem lies in the “black box” nature of many AI models. When an AI spits out a decision without any clear reasoning, we humans get nervous. We feel out of control, and that’s a breeding ground for skepticism. This isn’t just a feeling; it has real-world consequences.

We’re seeing a rise in regulations like the EU AI Act, which are designed to hold these opaque systems accountable for their decisions.

This is where UX design becomes the hero of the story. Bridging this gap isn’t about writing more complex code.

It’s about applying human-centric principles and proven UX research techniques to make AI interactions feel transparent, predictable, and ultimately, trustworthy.

Why Trust in AI Systems Starts with UX

Image source: UX Collective

Before we dive deeper into the problem of transparency in AI, it’s crucial to understand how trust in AI usually starts with the UX.

In simple words: Emotions often drive how users interact with AI systems more than most designers realize. Trust is influenced by both cognitive and emotional aspects.

You could have built the most technically sound AI system in the world, but if users feel uncomfortable with it, they won’t use it.

The Emotional Impact of Opaque AI Decisions

Now, the “black box” problem isn’t just about technical complexity. It creates real emotional barriers that kill user adoption.

Studies show employees distrust AI because they perceive that “AI cannot be trusted because it does not have emotional states”. This disconnect triggers discomfort that directly blocks acceptance of new technology.

Here’s what this looks like in practice: A job candidate with a quiet demeanor gets rejected by an AI recruiter that misinterprets their personality. Shoppers browse a store while AI tracks their facial expressions to push expensive items without their knowledge. These experiences create negative emotional responses that destroy trust.

Users don’t just distrust what they don’t understand – they actively fight against it. Even with AI adoption accelerating, only 20% of organizations trust their AI systems to make the right decisions. The technology works fine. Users just can’t see how it reaches conclusions.

Something interesting happens when you try to solve this with more transparency. Making AI systems more human-like can actually increase user discomfort.

According to social identity theory, “when an out-group threatens the uniqueness of an in-group, in-group members will react negatively to the out-group”. As AI becomes more human-like, it challenges our uniqueness and triggers fear about job replacement.

Trust As a UX Problem, Not Just A Technical One

Trust isn’t a technical metric you can optimize with better algorithms. It’s a user experience challenge.

As AI integrates into everyday products, trust becomes “the invisible user interface”. When this interface works, interactions feel seamless and powerful. When it breaks, the entire experience collapses.

The relationship between transparency and trust gets complicated fast. Research shows inconsistent results about whether more transparency actually leads to greater trust.

Why? Because trust isn’t about dumping information on users. It’s about providing the right information at the right moment.

A well-designed UX can achieve “calibrated trust” – the sweet spot between skepticism and over-reliance.

Think of it as a spectrum:

active distrust → suspicion → calibrated trust → over-trust.

You don’t want maximum trust. You want appropriate trust that matches the system’s actual capabilities.

User trust can grow over time through thoughtful interactions. One study found participants started trusting a cryptocurrency chatbot after initial interactions as part of “a journey” to discover its features.

Carefully crafted UX can guide users from skepticism to appropriate confidence.

This means UX designers need to take on new responsibilities. We need to design interfaces that communicate AI’s role, limitations, and decision rationale. We need feedback mechanisms that let users question outputs and visual methods that demystify AI decision processes.

Building trust in AI systems requires addressing both emotional barriers and providing appropriate transparency through thoughtful UX design.

This makes it as much a design challenge as a technical one.

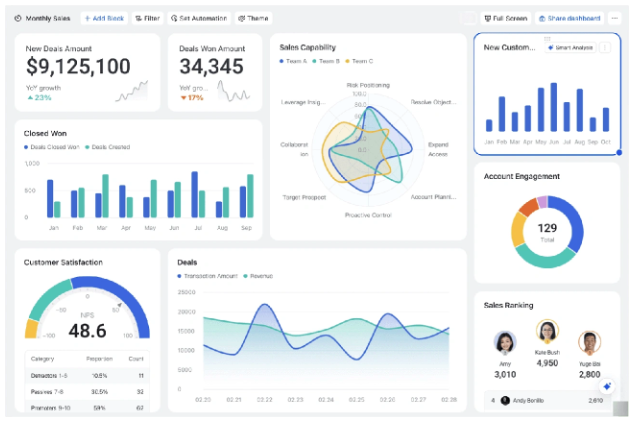

What Users Actually Need To See In AI Interfaces

Image source: Lark

Deciding what information to reveal and when makes or breaks transparent AI interfaces.

Users don’t need technical disclosure – they need meaningful insight that builds confidence in the system.

Surface-level vs. deep transparency

Most designers get transparency wrong by going too simple or too technical. Surface-level transparency shows the what – that AI is being used and its basic role. Deep transparency exposes the how and why behind decisions.

The problem? “If AI documentation is filled with jargon, it can be inaccessible to users and non-technical decision-makers”. You end up with interfaces that either oversimplify or overwhelm.

Here’s what works:

- Layered information disclosure – Start with essentials, then let users drill down for more detail

- Visual aids – Charts and highlighting make complex information accessible

- Plain language summaries – Technical concepts translated without losing accuracy

Show users the data sources, limitations, and intended purpose. This builds “competence trust” – confidence that the system works within defined boundaries.
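To make layered disclosure concrete, here’s a minimal sketch of how an explanation could be structured in layers. The `LayeredExplanation` class, its field names, and the three depth levels are my own illustrative assumptions, not a standard API.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredExplanation:
    """An explanation split into layers, from essentials to full detail (hypothetical structure)."""
    summary: str                                            # plain-language essentials, always shown
    factors: list[str] = field(default_factory=list)        # revealed on the first drill-down
    data_sources: list[str] = field(default_factory=list)   # revealed on the second drill-down
    limitations: list[str] = field(default_factory=list)    # revealed on the second drill-down

    def render(self, depth: int = 0) -> list[str]:
        """Return the lines to display at the requested depth (0, 1, or 2)."""
        lines = [self.summary]
        if depth >= 1:
            lines += [f"Factor: {item}" for item in self.factors]
        if depth >= 2:
            lines += [f"Data source: {item}" for item in self.data_sources]
            lines += [f"Limitation: {item}" for item in self.limitations]
        return lines


explanation = LayeredExplanation(
    summary="Recommended because it matches your recent listening.",
    factors=["Genre overlap with your top playlists"],
    data_sources=["Your listening history"],
    limitations=["Does not account for shared accounts"],
)
print(explanation.render())          # essentials only
print(explanation.render(depth=2))   # everything, for users who drill down
```

The default depth shows only the summary, so the interface starts simple and the extra layers appear only when a user asks for them.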

When to Show Explanations: Timing and Context

Timing changes everything. Pre-explanations seem most valuable when the AI shows bias in its performance, whereas post-explanations appear more favorable when the system is bias-free.

Pre-explanations set expectations before users engage with AI. This prevents “negative expectancy violation” – the jarring disconnect between expectations and reality. For systems with known limitations, proactive disclosure helps users calibrate their trust.

Context matters more than timing. Explanations should appear where users need them, not dumped all at once. Strategic placement:

- At decision points where users must act on AI recommendations

- Through just-in-time tooltips or expandable sections

- Aligned with the stakes of the decision being made

Showing both pre- and post-explanations tends to result in higher perceived trust calibration regardless of bias. This comprehensive approach ensures complete user understanding.
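The timing heuristics above can be written down as a small decision rule. This is only a sketch: the function name `explanation_plan` and the choice to show both explanations whenever the stakes are high are my assumptions, layered on top of the findings described above.

```python
def explanation_plan(known_bias: bool, high_stakes: bool) -> list[str]:
    """Pick when to explain an AI output: before ("pre"), after ("post"), or both."""
    if high_stakes:
        # Assumption: for high-stakes decisions, show both, since showing both
        # tends to give better perceived trust calibration regardless of bias.
        return ["pre", "post"]
    if known_bias:
        # Pre-explanations set expectations when the system has known limitations.
        return ["pre"]
    # Post-explanations are favored when the system is bias-free.
    return ["post"]


print(explanation_plan(known_bias=True, high_stakes=False))
print(explanation_plan(known_bias=False, high_stakes=True))
```

In a real product, the inputs would come from your own evaluation of the model and from how costly a wrong decision is for the user.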

Interactive Transparency: Letting Users Explore AI Logic

Static explanations only go so far. Research shows that “allowing users to ‘tinker’ or experiment with AI settings helps them understand how algorithms work without feeling overwhelmed”.

Effective interactive techniques:

- Counterfactual explanations – Show users how changing inputs affects outcomes

- Confidence indicators – Visual representations of AI certainty

- “What if” scenarios – Tools for simulating different conditions

Credit scoring applications use counterfactual explanations to show users why loans were denied while suggesting specific approval steps. This builds trust and empowers meaningful engagement with AI decisions.
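Here’s a toy sketch of how a counterfactual explanation can be computed for a credit decision. Everything in it is assumed for illustration: the linear scoring model, the feature names, the weights, and the approval cutoff. Real credit models are far more complex, and real counterfactual methods handle many features at once.

```python
# Hypothetical linear scoring model: weights and cutoff are made up for illustration.
WEIGHTS = {"income_k": 0.5, "on_time_payments": 2.0, "open_debts": -3.0}
THRESHOLD = 60.0  # hypothetical approval cutoff


def score(applicant: dict[str, float]) -> float:
    """Weighted sum of the applicant's features."""
    return sum(weight * applicant[name] for name, weight in WEIGHTS.items())


def counterfactuals(applicant: dict[str, float]) -> dict[str, float]:
    """For each feature, the change in that feature alone that would reach the cutoff."""
    gap = THRESHOLD - score(applicant)
    if gap <= 0:
        return {}  # already approved, nothing to suggest
    suggestions = {}
    for name, weight in WEIGHTS.items():
        delta = gap / weight  # change in this feature alone that closes the gap
        if applicant[name] + delta >= 0:  # skip changes that would push a value below zero
            suggestions[name] = delta
    return suggestions


applicant = {"income_k": 60, "on_time_payments": 10, "open_debts": 2}
for feature, delta in counterfactuals(applicant).items():
    print(f"Changing {feature} by {delta:+.1f} would reach the approval cutoff.")
```

The interface would translate each suggestion into plain language, such as “8 more on-time payments would qualify you”, rather than exposing the raw numbers.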

The goal isn’t exposing every algorithmic detail. It’s creating “appropriate transparency” – revealing enough for understanding without technical complexity.

This balance forms the foundation for building trust in AI systems users confidently rely on.

What Transparency in AI Really Means

When we talk about transparency in AI, it’s not about dumping a 50-page technical document on the user. True transparency is about clear, contextual communication that tells people how the AI works without overwhelming them with jargon. It’s about proactive disclosure, not a privacy policy hidden in the footer that nobody reads.

Think about it in practice. When you see Google’s “About This Result” feature, it’s giving you a peek behind the curtain, explaining why you’re seeing that specific link. It’s a simple, elegant way of building confidence.

Another great example is Spotify’s AI DJ. It doesn’t just play songs; it tells you, “Next up, some tracks we think you’ll like based on your love for 90s rock.”

This small piece of context makes the experience feel personal and understandable, not random and creepy. That’s effective transparency in action.

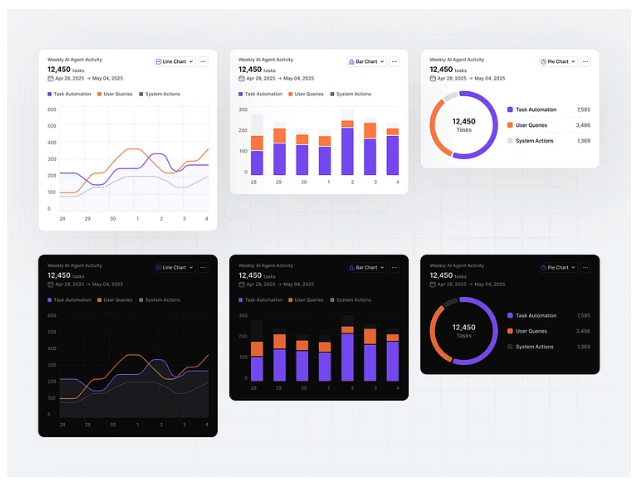

Explainability and UX: Making AI Decisions Understandable

Image source: Dribbble

If transparency is about what the AI is doing, explainability is about why it’s doing it.

This is where we truly start to peel back the layers of the black box. It’s crucial to distinguish between two related concepts:

- Interpretability: This is more for the data scientists. It’s about the degree to which a human can consistently predict a model’s result. It’s highly technical.

- Explainability: This is for the end-user. It’s the art of translating the AI’s complex internal logic into simple, human-understandable reasons.

As a UX designer, my focus is squarely on explainability.

How can we make an AI’s decision-making process feel less like magic and more like logic?

We have plenty of tools in our UX toolkit: simple visual cues, step-by-step breakdowns of a decision, or interactive tooltips.

The goal is to make the “why” accessible. For those interested in the technical side, IBM’s AI Explainability 360 toolkit is a fantastic resource that shows how these concepts can be implemented.

How to Effectively Design Explainability in Your UX

Good explainability design tells a story. It walks users through the AI’s decision process in a way that makes sense for their specific context and expertise level.

Here is a stepwise breakdown of how you can do that.

Breaking Down Decisions Into Stories

Step-by-step progress indicators work because they create a narrative users can follow. Instead of dumping the final output, you show the logical progression from input to outcome.

Think of it like this: the AI made a decision, but users need to see the path it took to get there.

Effective approaches include:

- Decision pathway visualization – Show the logical steps from input to output

- Confidence metrics – Display how certain the AI is about each decision point

- Causal connections – Reveal how each factor influences the final outcome

One company we studied redesigned their Scheduler feature to provide day-by-day status breakdowns with clear labels and color codes. Users could finally follow message delivery stages without getting lost in technical details.

The difference? Users stopped asking “why did this happen?” and started asking “what should I do next?”
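As a rough sketch of the step-by-step idea, a decision pathway can be stored as an ordered list of steps, each with a plain-language label and a confidence value, and then narrated for the user. The `DecisionStep` class and the sample steps are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DecisionStep:
    label: str         # what happened at this step, in plain language
    confidence: float  # 0.0 to 1.0, how certain the system was at this step


def narrate(steps: list[DecisionStep]) -> list[str]:
    """Turn a decision pathway into numbered, readable progress lines."""
    total = len(steps)
    return [
        f"Step {number} of {total}: {step.label} ({step.confidence:.0%} confident)"
        for number, step in enumerate(steps, start=1)
    ]


pathway = [
    DecisionStep("Reviewed your past delivery schedule", 0.92),
    DecisionStep("Picked the time slot with the highest open rate", 0.75),
]
for line in narrate(pathway):
    print(line)
```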

Visualizations That Actually Work

Raw data doesn’t build trust. Smart visualizations do.

Visual metaphor generation captures similarities between concepts and presents them intuitively through generated images. This turns abstract AI relationships into something concrete users can grasp immediately.

Here’s a real example: one company transformed frequency data into heatmap visualizations that instantly showed message distribution patterns. They created three views:

- “More than” view – highlighted excessive messaging

- “Exactly” view – showed precise delivery patterns

- “Less than” view – flagged under-delivery issues

Users could spot patterns and anomalies at a glance. No more digging through spreadsheets to understand what the AI detected.
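The three views boil down to one comparison against a threshold. Here’s a minimal sketch of the filtering logic behind such a heatmap, assuming the frequency data is a simple mapping from a label to a message count. The names and sample data are mine, not the company’s actual implementation.

```python
import operator

# Each view is a comparison between a cell's count and the chosen threshold.
VIEWS = {
    "more_than": operator.gt,
    "exactly": operator.eq,
    "less_than": operator.lt,
}


def select_cells(counts: dict[str, int], view: str, threshold: int) -> list[str]:
    """Return the labels of the cells that the chosen view should highlight."""
    compare = VIEWS[view]
    return [label for label, count in counts.items() if compare(count, threshold)]


messages_per_day = {"Mon": 5, "Tue": 3, "Wed": 1}  # hypothetical sample data
print(select_cells(messages_per_day, "more_than", 3))
print(select_cells(messages_per_day, "exactly", 3))
print(select_cells(messages_per_day, "less_than", 3))
```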

Matching Explanations to User Expertise

The I-CEE framework shows that tailoring explanations to user expertise significantly improves users’ ability to predict AI decisions. One size definitely doesn’t fit all.

We structure explanations based on user knowledge:

| User Type | Explanation Level | Content Focus |
| --- | --- | --- |
| Novice | Basic | Simple outcomes, confidence levels |
| Regular | Intermediate | Decision factors, data sources |
| Expert | Advanced | Detailed logs, process breakdowns |

This approach makes AI systems accessible without overwhelming beginners or frustrating experts. Users get exactly the level of detail they need to build appropriate trust in the system.
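In code, the table above can become a simple lookup that filters a full explanation down to what each user type needs. This is a sketch under my own assumptions: the field names and the fallback to the novice view for unknown user types are illustrative choices.

```python
# Which parts of an explanation each user type sees (mirrors the table above).
EXPLANATION_CONTENT = {
    "novice": ["outcome", "confidence"],
    "regular": ["decision_factors", "data_sources"],
    "expert": ["detailed_logs", "process_breakdown"],
}


def tailor(explanation: dict[str, str], user_type: str) -> dict[str, str]:
    """Keep only the parts of the explanation that suit the user's expertise."""
    # Assumption: unknown user types fall back to the simplest view.
    fields = EXPLANATION_CONTENT.get(user_type, EXPLANATION_CONTENT["novice"])
    return {name: explanation[name] for name in fields if name in explanation}


full_explanation = {
    "outcome": "Message scheduled for Tuesday",
    "confidence": "High confidence",
    "decision_factors": "Past open rates, recipient time zone",
    "data_sources": "Last 90 days of delivery data",
    "detailed_logs": "See run log",
    "process_breakdown": "Ranking, then filtering, then scheduling",
}
print(tailor(full_explanation, "novice"))
```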

The goal isn’t to expose every algorithmic detail. It’s to create the right amount of transparency that empowers users to make better decisions.

Core UX Principles for Designing Trustworthy AI

So, how do we get practical about designing trust in AI? From my experience, it boils down to four core principles that should guide every design decision, emerging from a human-first approach like design thinking:

- Clarity: Use plain language, not technical jargon. Your grandma should be able to understand what the AI is doing and why.

- Consistency: The way you explain AI behavior should be consistent across every touchpoint. Inconsistent terminology breeds confusion and distrust.

- Control: The user should always feel like they are in the driver’s seat. Give them the power to question, override, or dismiss AI suggestions easily. This sense of agency is fundamental to trust.

- Feedback loops: Create obvious ways for users to tell the AI when it’s right and when it’s wrong. A simple thumbs-up/down icon not only improves the model but also shows the user that their input is valued.

When you weave these principles into your design process, you’re not just building a product, you’re building a relationship with your users based on respect and understanding.

Practical UX Techniques to Build Transparency

Let’s move from principles to practice. Here are some tangible UX techniques you can use to build transparency and explainability directly into your AI-powered products.

- Onboarding That Educates: Don’t just ask for permissions during onboarding. Use that opportunity to explain how user data will power the AI features to deliver a better experience.

- Confidence Scores: Display a simple indicator (like a percentage or a “high confidence” label) to show how sure the AI is about its suggestion. This manages user expectations.

- “Why am I seeing this?”: Place a small, clickable link or icon next to any AI-driven recommendation. When clicked, it should provide a clear, concise reason. This is probably the single most powerful technique for building trust.

- Progressive Disclosure: Don’t overwhelm users with information upfront. Show a simple explanation first, with an option to “learn more” for those who want to dig deeper into the details.

- Visual Explainability: Use charts, graphs, or highlighted text to visually represent the key factors that influenced an AI decision. For complex scenarios, you could even offer simple simulations to show how changing certain inputs would affect the outcome.

This is a key area where innovative design makes a huge difference.
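To show how small some of these techniques are in practice, here’s a minimal sketch of a confidence label. The function name and the cutoffs (0.85 and 0.6) are assumptions for illustration; the right thresholds depend on how well calibrated your model’s probabilities are.

```python
def confidence_label(probability: float) -> str:
    """Map a model probability to a plain-language confidence label."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= 0.85:  # hypothetical cutoff
        return "High confidence"
    if probability >= 0.6:   # hypothetical cutoff
        return "Medium confidence"
    return "Low confidence"


print(confidence_label(0.91))
print(confidence_label(0.42))
```

A label like this is only honest if the underlying probabilities are meaningful, so it’s worth checking calibration with your data team before shipping it.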

Industry Examples of Transparent AI UX

You don’t have to look far to see great examples of these principles in action. Some of the biggest tech companies are leading the charge because they know trust is a competitive advantage.

- LinkedIn Job Recommendations: When LinkedIn suggests a job for you, it doesn’t just show you the title. It explicitly states, “You’re a top applicant because your profile lists 4 of the top 5 skills.” This is perfect explainability.

- Google Search “About this result”: As mentioned earlier, this feature is a masterclass in transparency. It tells you about the source, why Google’s systems thought it was relevant, and whether the site is secure.

- Microsoft Copilot Citations: In generative AI, “hallucinations” are a major trust-killer. By providing clear citations and links to its sources, Microsoft Copilot allows users to verify the information, turning a potential weakness into a trust-building feature.

Of course, no implementation is perfect. Sometimes the explanations can be too generic. But these examples show a clear trend: making the “why” visible is becoming the industry standard for any credible AI product.

Challenges & Limitations: The Balancing Act

Now, I want to be realistic. Designing trust in AI is not a simple checklist. It’s a delicate balancing act with some tough trade-offs that every team needs to navigate.

One of the biggest hurdles is the trade-off between simplicity and accuracy. If you oversimplify an explanation, you risk being misleading. But if you provide too much technical detail, you cause cognitive overload, which can leave users more confused than confident.

Information overload is also a tactic sometimes exploited in manipulative dark patterns to obscure or hold back information.

Then there’s the tension between transparency and protecting intellectual property. How much can you reveal about your proprietary algorithm without giving away the secret sauce? Finding that line is a constant challenge.

This is especially true when considering the various strategies to mitigate cognitive biases in decision-making, as revealing too much could inadvertently influence user behavior.

The Future of UX in AI Transparency

Where do we go from here? The field of designing for trust is evolving rapidly, and I see a few key trends shaping its future:

- Standardization and Regulation: As laws like the EU AI Act become more common, they will establish baseline requirements for transparency. This will push companies to adopt best practices, creating a more level playing field.

- AI Design Systems: We will see the rise of specialized design systems and UX frameworks that have trust-building components built-in, making it easier for teams to implement transparency consistently.

- The Rise of the “AI Trust Designer”: I believe we’ll see a new specialization emerge for UX professionals who focus exclusively on the intersection of AI, ethics, and user experience. These designers will be the bridge between complex AI models and the users who depend on them.

This is an exciting time to be a designer. We have a unique opportunity to shape not just how AI products look and feel, but how they are perceived and trusted by society.

Conclusion

Ultimately, trust in AI isn’t an inherent feature of the technology; it must be deliberately and thoughtfully designed. The pattern is clear:

balanced transparency + ethical design + user-centered approach = trusted AI experiences.

When you get this right, users don’t just tolerate AI – they actively seek it out as a valuable tool for their daily work. Remember the figure I mentioned earlier, that only 20% of organizations trust their AI systems to make the right decisions?

Moving that number comes from designing interfaces where people understand what’s happening and feel confident in the outcomes. Your AI system might be technically perfect, but if users don’t trust it, they won’t use it.

Design for transparency from day one, and you’ll build AI experiences that people actually rely on.

——

About the Author

Siddharth is the CEO at Bricx, and leads the design function at the company. With nearly 10 years in design & SaaS, he has worked with various B2B startups and helped them grow with conversion-focused design. You can connect with him on LinkedIn and X.

Siddharth’s LinkedIn: https://www.linkedin.com/in/

Siddharth’s X: https://x.com/siddharthvij_