Imagine you’re scrolling through your inbox late at night, reviewing a draft email from your copywriter. You spot a phrase that feels slightly off: perfectly grammatical, even polished, but somehow lacking the spark of you. You wonder: did a human write this, or did a machine? In the age of sophisticated language models, this question isn’t just a matter of curiosity. It matters for authenticity, brand voice, trust and conversion.

In this article I draw on my experience as someone who has reviewed hundreds of AI-augmented copywriting projects and worked with marketing teams adapting to generative-AI tools. I’ll unpack what “humanizers” mean in the context of AI-driven copywriting, how the technology holds up, where it fails, and what professionals can do to ensure quality, trust and brand impact.

What Are “Humanizers” in Copywriting?

Definition & background

In this context an AI humanizer is a process, technique or tool that aims to make AI-generated writing sound more human: more natural, engaging, emotionally resonant, and aligned with brand voice. It may involve:

• Post-editing AI output by a human copywriter.

• Training fine-tuned models on a specific brand’s tone, idioms or vocabulary.

• Using prompts or constraints to encourage style, personality, narrative arc.

When we ask to “humanize AI” in writing, we refer to strategies that elevate raw machine-output into something that feels authored by a person, not a robot.

Why the concept matters

In practical work I’ve seen that purely AI-generated copy often feels generic and formulaic, lacking nuance or empathy. It may read like English, but it doesn’t always feel like the person or brand behind it. That gap can undermine trust both internally (in-house teams) and externally (audiences). Researchers describe this as part of the “AI trust paradox”: the more human-like the AI appears, the harder it becomes to detect errors or misalignment.

Myth-busting

Myth 1: “AI copywriting can replace human writers completely.”

– Reality: Even the best commercial tools today can generate readable text, but they often miss deep emotional resonance, brand nuance or strategic persuasion.

Myth 2: “If it sounds human, it is human — so trust it.”

– Reality: Fluency doesn’t guarantee accuracy, relevance, tone-alignment or ethical soundness. This is the core of the trust-paradox: convincing yet possibly wrong.

Myth 3: “Humanizing AI means removing all machine-signals so the audience never knows.”

– Reality: Transparency matters; mis-attributing authorship or hiding AI use can damage credibility and trust.

How Different Tools and Situations Handle the Issue

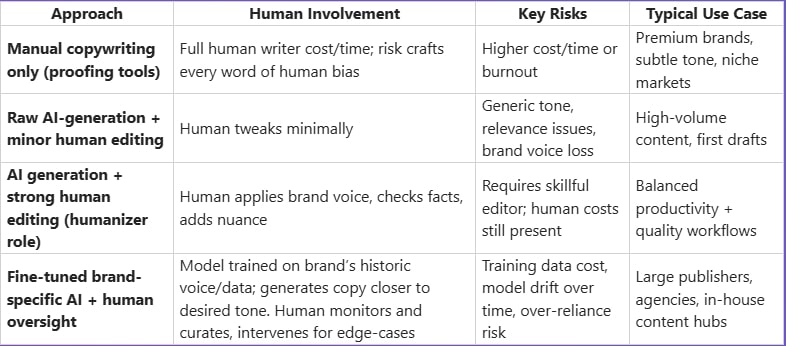

Let’s explore how copywriting workflows vary depending on tools, context, and human intervention.

Tool spectrum & workflow stages

Here’s a comparison of typical scenarios:

Features to evaluate when selecting tools

As someone who has tested multiple platforms, the following features matter for trust and performance:

• Perplexity & burstiness: measures of how predictable or “robotic” the text reads. Lower perplexity often indicates smoother but potentially bland copy; higher burstiness can indicate more human-like variation.

• Confidence calibration: Does the system provide a confidence score or probability of correctness? One study shows uncalibrated AI confidence can harm collaboration.

• Training/metadata transparency: Is the model fine-tuned with your brand data? What is the freshness of its knowledge? Lack of transparency lowers trust.

• Governance and detection risks: Tools that embed metadata or markers may trigger AI-detection or SEO risks. Content created purely by AI may face ranking penalties.

• Attribution & editing workflow: Does the tool integrate seamlessly into your human copywriter’s workflow, or does it force separation?
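
To make the burstiness idea above concrete, here is a minimal sketch. It assumes a simple proxy: the variation in sentence length, measured as standard deviation divided by mean. Real perplexity needs a language model and is not computed here. The function names and the naive sentence splitter are my own illustrations, not taken from any specific tool.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? followed by whitespace and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    Higher values mean more swing between short and long sentences.
    Returns 0.0 when there are fewer than two sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Two made-up samples: one with uniform sentences, one with varied rhythm.
flat = "We sell great shoes. We ship them fast. We price them well."
varied = "Shoes. We make the kind you forget you are wearing until someone asks. Try them."

print(round(burstiness(flat), 2), round(burstiness(varied), 2))
```

A score like this is only a rough signal. It can flag copy with a monotonous rhythm for a closer read, but it says nothing about accuracy or brand fit.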

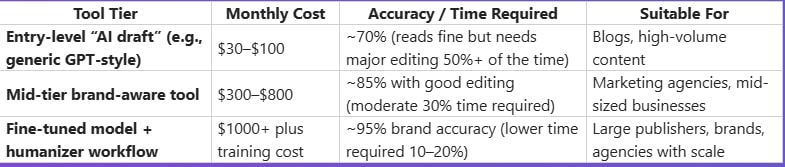

Pricing, accuracy, alignment — a mini-comparison

Below is a high-level comparison (fictional numbers for illustrative purposes):

Note: These are indicative only. Always test in your context and review recent case-studies rather than relying on vendor claims. The risk of “AI washing” (over-claiming AI capabilities) is real.

Who Needs to Care? Contextual Breakdowns

Let’s break down how different audiences must think about AI-humanized copywriting:

For students and academic writers

Students using AI to draft essays or assignments must be especially cautious. Not only do issues of originality and plagiarism apply, but AI may generate incorrect references, invent citations, or misinterpret prompts. Data from educational research show trust and privacy concerns when AI involvement is visible. Humanizing AI here means verifying facts, citing all sources, and ensuring the writing reflects you, the student, not just the machine.

For professional copywriters and freelancers

As someone in that role, I’ve seen clients question the authenticity of work when AI-detector tools flagged content. The professional must act as the ‘humanizer’: editing for tone, context, brand voice, emotion. The copywriter’s value shifts from raw drafting alone to supervisory, strategic, editing and quality-control roles.

For publishers and content houses

They face scale challenges. Publishers may deploy AI to generate content, but if tone, accuracy, ethics and SEO are compromised they risk readership trust, ranking penalties, and reputation loss. Indeed, customers don’t always trust AI-labelled services — a study found that use of the term “AI” reduced purchase intention. Humanizing means strong editorial oversight, ethical disclosure, brand-voice templates, and quality metrics.

For businesses and marketing teams

Marketers often employ AI to generate email campaigns, landing pages and ad copy. Here the key is balancing speed and brand consistency. If the AI output feels disconnected from your brand promise or voice, conversion suffers. The humanizer role may fall to in-house brand leads or editors who adapt the copy, add human behavioural cues, and ensure messaging aligns with values, data privacy, and compliance (GDPR, etc.).

Actionable Guidance: What to Do & How to Respond

Step 1: Audit your existing workflows

• Map where AI is used in your copywriting process — idea generation, drafting, full output.

• Evaluate how much human editing happens post-AI draft.

• Check for risks: brand voice drift, factual errors, tone issues, ethical risks (bias, copyright).

• Decide where in the workflow you need a “humanizer” oversight layer.
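
One way to put a number on “how much human editing happens” in the audit above is to compare the AI draft with the published version. The sketch below assumes you keep both texts and uses Python’s standard `difflib` to estimate the share of words that changed. `edit_share` is a hypothetical helper name, and the two sample sentences are invented.

```python
import difflib


def edit_share(ai_draft: str, final_copy: str) -> float:
    """Return roughly how much of the text changed: 0.0 identical, 1.0 nothing shared.

    Compares word sequences, so reordering and rewording both count as edits.
    """
    matcher = difflib.SequenceMatcher(None, ai_draft.split(), final_copy.split())
    return 1.0 - matcher.ratio()


# Invented example: an AI draft and the version a human editor shipped.
draft = "Our product is the best solution for your needs"
final = "Our boots are the best fix for wet commutes"

print(f"{edit_share(draft, final):.0%} of the draft was rewritten")
```

Tracked per piece over a few weeks, this shows which content types still need heavy rework and where an oversight layer is most useful.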

Step 2: Define brand-voice and humanizing guidelines

• Create a brand-voice guide: tone, vocabulary, sentence length, idioms, emotional triggers.

• Build clear prompts for your AI tools that include: “Write like X brand”, “Use tone: conversational, authoritative”, etc.

• Establish human-editing checkpoints: (a) fact-check, (b) brand-tone alignment, (c) emotional/perceptual review, (d) SEO/metadata review.
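
The brand-voice guide and prompt instructions above can live in one place so every brief starts from the same rules. This is a minimal sketch, not a recommended prompt format. The `BrandVoice` fields, the `build_prompt` helper and the “Acme” brand are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BrandVoice:
    """A tiny brand-voice guide; extend with idioms, emotional triggers, etc."""
    brand: str
    tone: list[str]
    max_sentence_words: int = 20
    avoid: list[str] = field(default_factory=list)


def build_prompt(voice: BrandVoice, brief: str) -> str:
    """Turn the guide plus a brief into a plain-text prompt for any AI tool."""
    lines = [
        f"Write like the {voice.brand} brand.",
        f"Use tone: {', '.join(voice.tone)}.",
        f"Keep sentences under {voice.max_sentence_words} words.",
    ]
    if voice.avoid:
        lines.append(f"Avoid these words: {', '.join(voice.avoid)}.")
    lines.append(f"Brief: {brief}")
    return "\n".join(lines)


# Hypothetical brand and brief, for illustration only.
acme = BrandVoice(brand="Acme", tone=["conversational", "authoritative"], avoid=["synergy"])
prompt = build_prompt(acme, "Announce the spring sale.")
print(prompt)
```

Keeping the guide as data means editors can update tone or banned words once, and every prompt picks up the change.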

Step 3: Choose and evaluate tools carefully

• Use pilot tests: run identical briefs through two workflows (AI only vs. AI plus human editing) and compare metrics (engagement, conversion, voice consistency).

• Check for explainability & confidence calibration: does the tool flag when it’s unsure? Does it disclaim uncertainties? Models that over-state confidence may mislead.

• Assess human-in-the-loop capability: Can the tool easily hand over to a human editor? Does it allow versioning and tracking of edits?
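
For the pilot test described above, a rough check on whether a conversion difference is more than noise is a two-proportion z-test. The sketch below assumes you have visitor and conversion counts for each workflow. The counts shown are made up, and the function name is my own.

```python
import math


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """z-score for the difference in conversion rate between workflow B and A.

    Uses the pooled-proportion standard error. Positive z means B converts better.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each workflow needs at least one visitor")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se if se else 0.0


# Invented pilot numbers: A = AI only, B = AI plus human editing.
z = two_proportion_z(conv_a=40, n_a=1000, conv_b=62, n_b=1000)
print(f"z = {z:.2f}")
```

Under the usual normal approximation, an absolute z above about 1.96 corresponds to the 5% two-sided significance level. Small pilots rarely reach that, so treat the result as one input alongside voice-consistency reviews.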

Step 4: Build a “humanizer” culture

• Train editorial or copy teams on reading and editing AI output critically — spotting “machine-tone”, bland phrasing, repetition, lack of nuance.

• Encourage copywriters to see AI as an assistant, not a replacement — their value lies in human judgement, voice, insight, context.

• Be transparent where needed: If content is largely AI-generated but human-edited, decide whether to disclose this in a footer or editorial policy, depending on industry and regulatory requirements.
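
As a training aid for spotting repetition, one of the “machine-tone” signals mentioned above, a small script can surface phrases that recur within a draft. This is a sketch under simple assumptions: it counts repeated three-word sequences and ignores punctuation. `repeated_phrases` is a hypothetical helper, and the sample text is invented.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return every n-word phrase that appears at least min_count times."""
    words = re.findall(r"[a-z']+", text.lower())
    grams = Counter(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return {gram: count for gram, count in grams.items() if count >= min_count}


# Invented draft with an obviously recycled opener.
sample = (
    "In today's fast-paced world, brands need speed. "
    "In today's fast-paced world, brands need trust."
)
for phrase, count in repeated_phrases(sample).items():
    print(count, phrase)
```

It will not catch bland phrasing or missing nuance, which still need a human reader, but it gives new editors a concrete starting point.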

Step 5: Measure and iterate

• Track metrics: conversion rate, engagement, bounce rate, brand sentiment — compare AI-augmented copy vs fully human copy.

• Review any errors or misalignments surfaced by audience feedback (tone complaints, brand voice mismatches, factual inaccuracies).

• Maintain an editorial log: what edits were required post-AI? Are there patterns where AI regularly fails? Use this to refine prompts or training data.
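
The editorial log above becomes more useful once you can count which kinds of fixes recur. Here is a minimal sketch, assuming each logged edit is tagged with a category. The `EditEntry` fields, category names and sample entries are illustrative, not a prescribed schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class EditEntry:
    piece: str      # which asset was edited
    category: str   # e.g. "fact", "tone", "repetition"
    note: str       # what the editor changed and why


def failure_patterns(log: list[EditEntry]) -> list[tuple[str, int]]:
    """Count edits per category, most frequent first."""
    return Counter(entry.category for entry in log).most_common()


# Invented log entries for illustration.
log = [
    EditEntry("spring-email", "fact", "removed an invented statistic"),
    EditEntry("spring-email", "tone", "too formal for the brand"),
    EditEntry("landing-page", "fact", "corrected product name"),
    EditEntry("ad-set-3", "repetition", "same opener in three variants"),
]
for category, count in failure_patterns(log):
    print(category, count)
```

If one category keeps leading the list, that is the place to tighten prompts or add a dedicated checkpoint.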

Step 6: Address trust, ethics, and compliance

• Check all copy for copyright issues and attribution: AI models may draw from licensed text or publicly scraped content.

• Review privacy/data compliance: Are you using customer data in prompts? Ensure GDPR/CCPA rules are followed.

• Establish governance: who is accountable for AI-generated copy? What happens if errors (factual, bias, libel) occur?

• Avoid undue “AI-washing” — claiming too much AI capability can backfire and reduce stakeholder trust.

Future Outlook: What Will Change in the Next 1–3 Years

• Better confidence and calibration metrics: Expect more tools to show how likely the output is correct, or how “unusual” the phrases are compared to training data. Research already points to uncalibrated AI confidence being a collaboration barrier.

• Brand-specific model fine-tuning at scale: More tools will allow marketers to fine-tune models on their own brand corpus (past high-performing copy) to produce output that already approximates brand voice, reducing human editing time.

• Regulation and transparency mandates: We’ll see more industry standards for AI-generated content — e.g., disclosure rules, provenance logs, AI-detection resilience. The “trust” element (both consumer and enterprise) will be regulated.

• Shift toward hybrid workflows where humans lead: The economy will recognise the “humanizer” role as a specialty — rather than being replaced by AI, writers and editors will transition to roles of curator, editor, brand-voice guardian, strategist. This forecast is already appearing in industry commentary.

• SEO and search-engine response to AI content: Search engines (like Google) are becoming more sensitive to AI-generated content; we may see stricter ranking factors for authenticity, originality and value-over-volume. Content that simply passes for human may not suffice.

• Ethical and bias issues become front-and-centre: Copywriting that uses AI will increasingly need to address bias, fairness, cultural sensitivity, authenticity. Auditing tools and human checks for such issues will become standard.

Key Takeaways

• AI-driven copywriting is a powerful productivity tool—but not a replacement for humans. Human oversight (the “humanizer” role) remains critical.

• Trust is the central issue: just because text reads “human” doesn’t mean it feels human, aligns with brand voice, or is factually accurate.

• Good workflows embed human editing, brand-voice guidelines, strong prompts and measurement of outcomes.

• Different user contexts (students, freelancers, publishers, brands) need tailored strategies for humanizing AI.

• Transparent governance, ethical compliance, tool evaluation and measurement are key for future readiness.

• Over the next few years we’ll see more fine-tuned brand-models, better calibration of confidence, regulatory oversight and an elevated value placed on human creators.

FAQ: Practical Questions & Answers

Q1. Can I rely completely on AI copywriting for my marketing campaigns?

No. While modern AI tools can generate readable drafts, relying completely on them without human review risks tone-mismatch, factual errors, brand voice drift and SEO penalties. Use AI as a starting point, but plan for editing, humanization and oversight.

Q2. What does it mean to humanize AI in copywriting specifically?

Humanizing AI means using techniques that make machine-generated text align with human characteristics: voice, emotional tone, idioms, context-appropriateness. It includes refining output, tailoring prompts, embedding brand personality and ensuring audience relevance.

Q3. Is content created by AI penalised by search engines?

Search engines like Google emphasise original, value-added content. Some commentary and tools suggest that purely AI-generated or poorly-edited content may be downgraded or flagged. The safest path: ensure human editing, add unique insights, metadata, sourcing, brand context.

Q4. What are the major risks of using AI without a humanizer?

Risks include:

• Loss of brand voice and differentiation (many brands will sound similar).

• Factual inaccuracies or hallucinated data.

• Over-reliance on generic or formulaic phrasing — lower engagement.

• Consumer distrust (studies show visible AI involvement can lower trust). (CX Dive)

• Ethical, copyright or compliance issues (using scraped or unattributed data).

• Difficulty distinguishing authorship — which may impact credibility.

Q5. How should I choose an AI tool for copywriting?

Consider:

• The quality of output (try a blind test).

• Whether it supports brand-specific fine-tuning or custom prompts.

• How easy it is to hand off to a human editor.

• Transparency of model and training data.

• Any meta-features: confidence scores, version tracking, integration with workflows.

• Pricing relative to editing time and human involvement.

Q6. What metrics should I monitor to measure success of humanized-AI copy?

Monitor:

• Engagement metrics: time on page, clicks, conversions, bounce rate.

• Voice/brand consistency: internal audits or reader feedback.

• Editing time: how much human time is still needed after AI draft.

• Error/fact-check incidence: how many edits required for accuracy.

• SEO metrics: ranking trends, organic traffic, keyword performance.