Why Manufacturers Must Rethink Data Infrastructure, Access, and Governance to Fully Leverage AI

Artificial intelligence (AI) is transforming how products are developed, manufactured, and supported. From optimizing designs to automating repetitive engineering tasks, AI is reshaping how teams interact with information and how they make decisions. But behind the excitement lies a critical truth: AI is only as effective as the data it relies on.

For organizations looking to scale AI across the product design and manufacturing lifecycle, the right data foundation is essential. That means moving beyond siloed systems and inconsistent datasets and investing in infrastructure that lets AI access, interpret, and act on trustworthy information without putting sensitive intellectual property (IP) at risk.

AI Needs Better Data

AI can help identify design requirements, analyze product performance, or generate draft documentation, but the value of its outputs depends entirely on the quality of its inputs. If the underlying data is inaccurate, incomplete, or poorly structured, AI will simply amplify those flaws, leading to faulty designs or costly missteps.

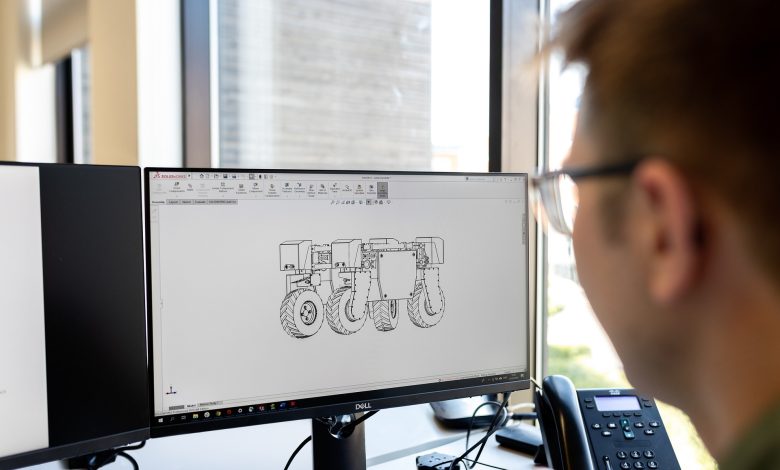

The challenge becomes even greater in manufacturing and product development, where data spans many formats (structured, unstructured, visual, and textual) and lives in separate systems such as PLM, ERP, CAD, and MES. AI can deliver meaningful insights only when that information is connected and contextualized into a unified foundation that reflects the full product lifecycle.

A robust product digital thread provides the secure, governed, and connected data foundation AI demands. It creates a contextualized view of product information across the lifecycle, unlocking the full power of AI, data science, and advanced analytics to enable smarter, faster decisions. But even the strongest digital thread depends on governance, access controls, and data quality practices to ensure that AI delivers reliable insights and lasting business value.

Classifying and Controlling Access to Data

Not all product data should be treated equally. A critical step in scaling AI is classifying and managing data according to its sensitivity. Three key categories provide a useful framework:

1. Unrestricted data includes information that is publicly available or does not reveal sensitive product details. Examples include published product specifications, general marketing materials, and common industry standards.

2. Sensitive data includes information that should be accessible only to individuals or entities with specific access rights. For example, access to specifications and designs for new products must be limited to a small audience. Generative AI should never become a backdoor to this information; at the same time, people who are authorized to see it should not be denied the opportunity to fully leverage AI.

3. Confidential data includes information that is highly sensitive – trade secrets, proprietary designs, classified documents, or unique manufacturing processes. This data should be rigorously protected and should be available to AI models only in tightly controlled circumstances.

By linking this classification scheme to dynamic, role-based access controls, organizations reduce the risk of unintended exposure, whether through a chatbot, an internal assistant, or a generative AI model, while ensuring that AI draws only on data it is permitted to use.
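To make the idea concrete, here is a minimal Python sketch of tying the three categories to role-based access before any data reaches an AI model. The `Sensitivity`, `Document`, `User`, and `ai_may_use` names, the role strings, and the `ai_use_approved` flag (one hypothetical way to model the "tightly controlled circumstances" for confidential data) are illustrative assumptions, not any specific product's API.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Sensitivity(IntEnum):
    """The three categories, ordered from least to most restricted."""
    UNRESTRICTED = 0
    SENSITIVE = 1
    CONFIDENTIAL = 2


@dataclass(frozen=True)
class Document:
    doc_id: str
    sensitivity: Sensitivity
    # Hypothetical: roles granted read access to sensitive or confidential items.
    allowed_roles: frozenset[str] = frozenset()
    # Hypothetical: explicit sign-off required before AI may use confidential data.
    ai_use_approved: bool = False


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def ai_may_use(user: User, doc: Document) -> bool:
    """Decide whether an AI tool acting for this user may read the document."""
    if doc.sensitivity is Sensitivity.UNRESTRICTED:
        return True
    has_role = bool(user.roles & doc.allowed_roles)
    if doc.sensitivity is Sensitivity.SENSITIVE:
        return has_role  # the same rule a human reader would face
    # CONFIDENTIAL: role grant plus explicit approval for AI use.
    return has_role and doc.ai_use_approved


def documents_for_ai(user: User, docs: list[Document]) -> list[Document]:
    """Filter the corpus before it reaches a chatbot or generative model."""
    return [d for d in docs if ai_may_use(user, d)]


# Usage with hypothetical documents and roles.
docs = [
    Document("brochure", Sensitivity.UNRESTRICTED),
    Document("gen3-spec", Sensitivity.SENSITIVE, frozenset({"gen3-team"})),
    Document("coating-process", Sensitivity.CONFIDENTIAL, frozenset({"process-eng"})),
]
alice = User("alice", {"gen3-team"})
print([d.doc_id for d in documents_for_ai(alice, docs)])  # ['brochure', 'gen3-spec']
```

Because filtering happens before retrieval, an assistant built on `documents_for_ai` never sees content the requesting user could not open directly. In a real deployment, role grants would come from the organization's identity system rather than hard-coded sets.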

Practical Guardrails for Safe, Effective AI Use

AI without governance can be risky. Imagine a well-meaning employee asking an internal assistant, “What’s the secret recipe for Product X?” and unintentionally triggering a serious breach. The solution isn’t to block AI access, but to hold AI to the same governance we already apply to human users.

Common safeguards that help protect sensitive and confidential information include:

- Context-aware access: AI tools should inherit user permissions and apply access control at the individual data element level, not just the system level.

- Purpose-specific data streams: Instead of exposing AI to the entire digital thread, companies should create filtered data feeds aligned with specific use cases – such as customer support, engineering, or supplier communication.

- Secure API integration: All data interactions between AI services and enterprise systems should be channeled through secure, governed APIs that enforce business rules, ensure auditability, and provide monitoring capabilities.
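The first two safeguards can be combined in a single gateway function that an AI service calls instead of querying systems directly. The sketch below assumes each field of a record carries a hypothetical clearance level (0 = unrestricted, 1 = sensitive, 2 = confidential) and that each use case has a fixed allow-list of fields; the field names, purposes, and the in-memory `AUDIT_LOG` are illustrative stand-ins for what a governed API would provide.

```python
from datetime import datetime, timezone

# Hypothetical element-level labels: 0 = unrestricted, 1 = sensitive, 2 = confidential.
FIELD_CLEARANCE = {
    "part_number": 0,
    "public_spec": 0,
    "supplier_name": 1,
    "tolerance_stack": 1,
    "heat_treat_recipe": 2,
}

# Hypothetical purpose-specific streams: each use case sees only the fields it needs.
PURPOSE_FIELDS = {
    "customer_support": {"part_number", "public_spec"},
    "engineering": {"part_number", "public_spec", "tolerance_stack", "heat_treat_recipe"},
    "supplier_communication": {"part_number", "supplier_name"},
}

AUDIT_LOG: list[dict] = []  # stand-in for the monitoring a governed API would provide


def fetch_for_ai(user_id: str, user_clearance: int, purpose: str, record: dict) -> dict:
    """Return only the fields allowed by both the purpose and the user's clearance."""
    if purpose not in PURPOSE_FIELDS:
        raise PermissionError(f"no data stream defined for purpose {purpose!r}")
    allowed = PURPOSE_FIELDS[purpose]
    visible = {
        name: value
        for name, value in record.items()
        # Unlabeled fields default to the most restrictive level.
        if name in allowed and FIELD_CLEARANCE.get(name, 2) <= user_clearance
    }
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "purpose": purpose,
        "returned": sorted(visible),
        "withheld": sorted(set(record) - set(visible)),
    })
    return visible


# Usage with a hypothetical part record (placeholder values).
record = {
    "part_number": "P-100",
    "public_spec": "spec text",
    "supplier_name": "supplier",
    "tolerance_stack": "tolerances",
    "heat_treat_recipe": "recipe",
}
print(fetch_for_ai("dana", 1, "engineering", record))
```

Unknown fields default to the most restrictive level and unknown purposes are rejected, so the gateway fails closed rather than open, and every call leaves an audit entry showing what was returned and what was withheld.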

With these practices in place, organizations can adopt AI in targeted, low-risk ways that unlock value without exposing sensitive data to unnecessary danger.

Laying the Technical Groundwork

Beyond governance, infrastructure is critical. Most AI tools require rapid access to clean, integrated, and semantically rich data. Yet many manufacturing environments struggle with fragmented systems and inconsistent data standards.

To fully capitalize on AI, companies must prioritize data integration across departments and lifecycle stages. This enables AI to connect design intent with manufacturing performance, field service records, and customer feedback.

Equally important are shared semantics and machine-readable data. These allow AI models to understand relationships between data elements, such as which part satisfies which requirement, and to extract meaning more effectively. Open systems and interoperable tools further support scalable data exchange and help organizations adapt to evolving AI technologies and regulatory requirements.
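One common way to make relationships machine-readable is to store them as subject-predicate-object triples, the data model used by standards such as RDF. The toy Python sketch below uses plain tuples with hypothetical identifiers and relationship names to show how an AI service could trace a requirement through design and manufacturing to field service records; it is not tied to any particular PLM or graph product.

```python
from collections import defaultdict, deque

# Hypothetical links that might come from requirements, CAD/PLM, MES, and service systems.
TRIPLES = [
    ("REQ-12", "satisfied_by", "PART-7"),
    ("PART-7", "produced_in", "LOT-31"),
    ("LOT-31", "referenced_by", "TICKET-88"),
    ("REQ-13", "satisfied_by", "PART-9"),
]


def build_graph(triples):
    """Index triples by subject so outgoing relationships can be followed."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return graph


def trace(graph, start):
    """Breadth-first walk returning every (subject, predicate, object) edge reachable from start."""
    seen = {start}
    queue = deque([start])
    edges = []
    while queue:
        node = queue.popleft()
        for predicate, obj in graph.get(node, []):
            edges.append((node, predicate, obj))
            if obj not in seen:
                seen.add(obj)
                queue.append(obj)
    return edges


for edge in trace(build_graph(TRIPLES), "REQ-12"):
    print(*edge)
# REQ-12 satisfied_by PART-7
# PART-7 produced_in LOT-31
# LOT-31 referenced_by TICKET-88
```

Because each link is typed, the same structure answers questions in either direction once an inverse index is added, for example which requirements are affected by a service ticket.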

These investments not only support today’s use cases but also future-proof the organization as more sophisticated applications – like agentic AI and multimodal models – enter the mainstream.

Start Small, Scale Wisely

Scalable AI success starts with smart pilot projects. Organizations should begin with specific, high-impact tasks where AI can deliver value quickly and safely. Document classification, requirements traceability, and intelligent search are good places to start.

Early wins don’t just demonstrate ROI. They build confidence in the technology, reveal limitations, and help teams develop stronger internal best practices for responsible use. As comfort grows, the scope of AI projects can expand.

Equally important is keeping engineers and other stakeholders in the loop. AI should amplify expert judgment, not replace it. That means creating human-in-the-loop workflows that empower experts to verify AI outputs and interpret results critically, so that as AI scales, trust and accountability scale with it.
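A human-in-the-loop workflow can be as simple as a review state that every AI-generated artifact must pass through before it is used. In this minimal sketch (class names, statuses, and reviewer IDs are hypothetical), drafts start as pending, a named reviewer approves or rejects each one exactly once, and only approved drafts are eligible for downstream use.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class AIDraft:
    """An AI-generated artifact that must be reviewed by an expert before use."""
    draft_id: str
    content: str
    status: Status = Status.PENDING_REVIEW
    reviewer: Optional[str] = None
    notes: List[str] = field(default_factory=list)

    def review(self, reviewer: str, approve: bool, note: str = "") -> None:
        """Record an expert's decision; each draft can be reviewed only once."""
        if self.status is not Status.PENDING_REVIEW:
            raise ValueError(f"{self.draft_id} has already been reviewed")
        self.reviewer = reviewer
        self.status = Status.APPROVED if approve else Status.REJECTED
        if note:
            self.notes.append(note)


def publishable(drafts: List[AIDraft]) -> List[AIDraft]:
    """Only expert-approved drafts move on to downstream use."""
    return [d for d in drafts if d.status is Status.APPROVED]


# Usage with hypothetical drafts and a hypothetical reviewer ID.
d1 = AIDraft("D1", "Draft requirements trace for REQ-12")
d2 = AIDraft("D2", "Draft summary of field failures")
d1.review("eng-lee", approve=True)
d2.review("eng-lee", approve=False, note="Misattributes root cause")
print([d.draft_id for d in publishable([d1, d2])])  # ['D1']
```

Recording the reviewer and any notes on each draft gives a simple audit trail of who approved what, and rejected drafts with notes can feed back into improving prompts or data quality.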

Future-Proofing Your Strategy

As AI capabilities continue to evolve, the data foundation you build today will determine how flexible and competitive your organization is tomorrow. New requirements like the European Union’s Digital Product Passport will demand greater visibility and interoperability across product lifecycles. Agentic AI systems may soon play an active role in monitoring market trends and autonomously suggesting product enhancements.

Future success depends on what you do now. Clean and connect your data. Classify it intelligently. Govern access with precision. Equip your teams with the tools and skills they need to use AI responsibly. With a strong foundation in place, AI won’t just be another tool. It will become a strategic partner in driving innovation, accelerating development, and delivering better outcomes across the entire product ecosystem.