Artificial intelligence (AI) has data as its building blocks, which means that any AI system or model is only as good as the data that trains it. And the secret sauce to high-quality data without any bias or inaccuracy? High-quality data, labeled by expert data annotators! Before a model converts raw datasets into meaningful patterns, annotators should add meaning to contextualize the data.

However, humans aren’t always right. An MIT study has revealed that even ImageNet, one of the leading public training datasets, has a much higher data labeling error rate and these errors are cataloged on Label Errors (labelerrors.com). This raises a critical question of why and when mistakes in data labeling occur.

It is important to understand that ‘human errors’ can’t be blamed for all the challenges; usually, a flawed data annotation pipeline is the root of data labeling errors. Thankfully, data annotation companies have complete control over this aspect while managing an artificial intelligence project.

Here we have enlisted five of the most costly and common data labeling errors that data annotation service providers face and solutions that can help mitigate these errors-all while maintaining data consistency and accuracy throughout the project lifecycle.

Missing Labels

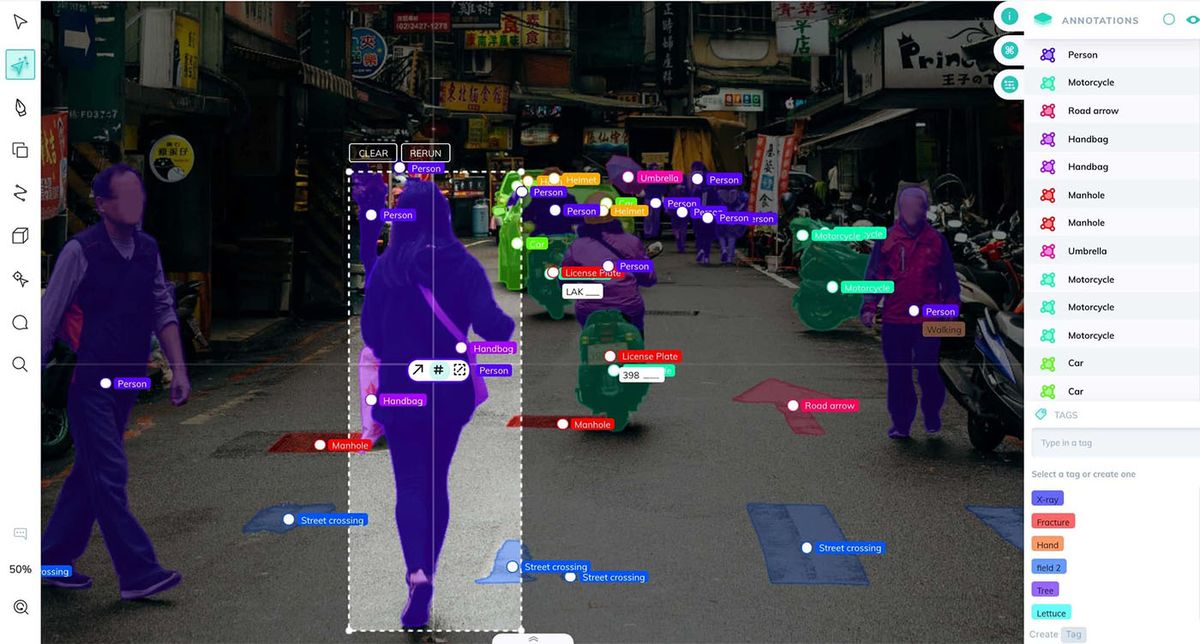

Missing labels can occur in any raw dataset and type, but this mistake is more prevalent in image classification and annotation. If the data annotator forgets or misses to add a bounding box across each required object in the image, it leads to the missed label error. Although the error seems trivial and simple, the repercussions can be severe. For instance, consider an automotive car that can’t detect every pedestrian on the road.

The Solution: The key to finding and solving this error is through a collaborative and robust data validation process. These cases call for a consensus approach where two or more annotators label the same image dataset, pinpoint categories of disagreement, and modify instructions until annotators are on the same page. A comprehensive peer review can also help in finding quality issues.

Inaccurate Fitting

Usually, there is a significant gap between the object to be annotated and the bounding box. This gap can lead to data noise, damaging the credibility of an AI model to identify the object accurately. For instance, if each bounding box for a bird image includes both the bird and a huge portion of the blue sky, chances are that AI algorithms would start assuming the blue sky is a part of the bird. In such a case, it would lead to incorrect identification of the object in the image.

The Solution: The root cause of this error is unclear instructions. It is important to define the accuracy or error tolerance in the instructions provided to data labelers. Various videos or supporting screenshots of ‘good’ or ‘bad’ data examples can be used to illustrate the tolerance window. Detailed instructions are vital in any labeling process as they reduce confusion and rework. An instruction set should:

- Have guidelines in clear language with examples such as edge cases and use cases

- Videos or images to reinforce the difficult-to-follow instructions

- SoPs for data annotators to maintain consistency

- Links to additional informational resources, if needed

Tag Addition in the Mid Process

Sometimes data annotators fail to acknowledge the requirement of an additional entity type/types until after the process of tagging has been started. For instance, a customer service chatbot might not detect a common customer issue, which later comes up during the labeling process. This would lead the data annotator to add a relevant tag to capture and detect that issue from the specific point.

But what about the data that was already tagged? Those annotated objects will not have the new tags, resulting in inconsistent training data.

The Solution: The easiest way to fix this error is by engaging a subject matter expert from the beginning to support data annotators in developing tags and taxonomy. The involvement of domain experts can be a game changer for the entire project, but their expertise is of utmost importance at this stage. Are there any left-out labels that can be added? Or has every possible label been accounted for? They are the ones who can answer!

Another method is crowdsourcing! Think of the last time when there was a brainstorming session; usually, such sessions result in extracting more relevant information/ideas than any one individual can provide. The same approach works here. Ask for input from other team members, be they annotators or engineers.

Data Annotator Biasness

When many annotators represent a single point of view, the outcome model can be biased. Since the group’s point of view is narrowed during annotation, the resulting AI algorithm will automatically have a narrow PoV. This leaves the other marginalized categories and their interests out of the model, reflecting results in search engines to recommendations.

Other than this, there are other forms of annotator bias as well. For instance, annotators with British English as their native language would tag a bag of potato chips as ‘crisps’. On the other hand, American English-speaking annotators will label the same data as ‘chips’. Whereas the label ‘chips’ will be reserved for fries by British English-speaking annotators. This inconsistency in tagging will be reflected in the model, leading to the mix-up of American and British English in predictions.

Another type of annotator bias occurs when annotators with average, generalized knowledge are asked to categorize and tag data that only a subject matter expert would know. For instance, there will be errors in tagging when a general data annotator is asked to label brain neurons, which clearly requires the expertise of a neuroscience expert.

The Solution: To avoid prejudiced algorithms that under/overrepresent a specific population, the annotation team should have annotators from diverse groups, reflecting the complete populace being impacted by artificial intelligence. The remaining two biases can be addressed through domain expertise. If the project requires specialized knowledge, bring in annotators who have prior similar experience or expertise.

Moreover, outsourcing data annotation to top data annotation companies or crowdsourcing are other excellent methodologies to validate the quality of your labels and detect biases.

Extensive Tag Lists

Annotators assigned with too many initial tags can overwhelm them, leading to costlier and longer project runs. Overwhelming tag lists lead to availability bias. It makes annotators utilize tags from the list’s initial point rather than checking the entire list for the most appropriate tag every time. This leads to training data that tells an incomplete story about the raw dataset.

Such extensive tag lists also make annotators subjective in their tag selection, resulting in errors. For instance, if annotators are asked to tag a red square and tag options are blue, yellow, and red, they’ll pick ‘red’. However, if they are given a red square with tag options such as crimson, ruby, scarlet, garnet, and tomato, the decision will be somewhat arbitrary. The more shades are added to this list, the more inconsistent and redundant training data will be.

The Solution: It is better to have tags categorized around the highest-level topics. However, certain projects may need granular labels requiring a different approach. If such details are needed, it is better to break down annotation tasks into smaller phases or subtasks. Each subtask represents a specific instance of the high-level class, allowing annotators to sift through a manageable number of tags before going into the details.

Concluding Thoughts

With so many aspects requiring constant attention, large-scale and error-free data labeling can be a challenge. Without mitigating these challenges, the resultant data set may incur several layers of complexity and overhead or low quality with inconsistencies and inaccuracies. Partnering with the best data annotation companies can help navigate these complexities by deploying a blend of strategies mentioned above to have well-labeled, accurate, and consistent training datasets.