Voice AI promises a seamless, natural in-car experience, yet its full potential remains untapped despite significant breakthroughs. The question is no longer if voice will be central to the in-cabin experience — it’s whether the industry is prioritizing the right problems to make that experience truly intelligent.

This article explores the current capabilities and limitations of in-car voice AI, identifies critical areas for advancement toward the “companion on wheels” vision, and argues for immediate, focused innovation that meets escalating consumer expectations while capturing a rapidly expanding market opportunity.

An HMI Roadmap for OEMs: The Shift to Proactive Intelligence

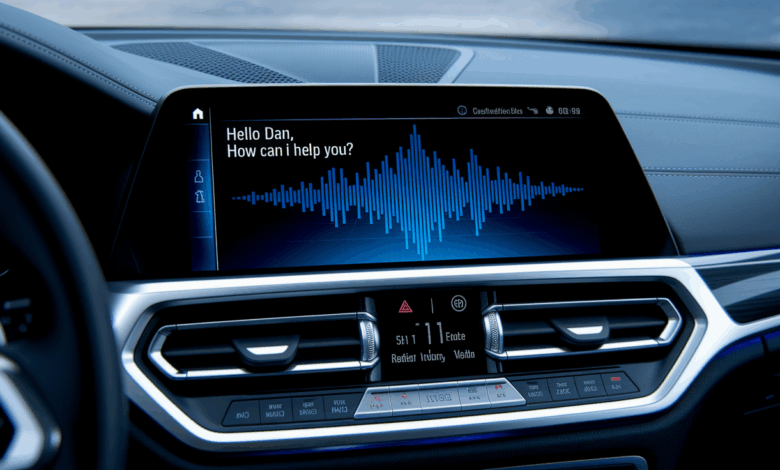

The automotive industry is moving beyond buttons and touchscreens towards personalized, intelligent, multimodal experiences — with voice and vision as the primary modalities for natural Human Machine Interaction (HMI).

A genuine “companion on wheels” demands more than basic voice commands. It requires comprehending the entire in-cabin acoustic scene (not just what was said, but who said it and from where) alongside deep contextual understanding for proactive assistance. Achieving this level of understanding may also require integrating audio with visual cues, such as eye-tracking.

Smart Eye’s CES demonstration of a 3D interior sensing system, using eye-tracking and cabin monitoring, exemplifies the industry’s move toward proactive, intelligent in-vehicle companions.

With the in-car voice technology market projected to exceed $13B by 2034, OEMs that lag on user experience will lose market share not to “better cars,” but to better conversations.

Cutting Through the Hype: What Voice AI Can Deliver Now

In-car voice AI has moved light-years beyond simple commands like “Call Mom” or “Turn on the radio.” Both consumer demand for seamless, hands-free interaction and rapid innovation drive the shift. Key trends accelerating this transformation include:

1. The Rise of Generative AI

- More Natural Conversation and Intent Understanding: Generative AI is a game-changer. Modern voice assistants are now engaging in more complex and natural conversations. They can understand context, follow up on previous requests, and handle multi-layered commands like, “Find a coffee shop near me with free Wi-Fi and outdoor seating.”

- Predictive and Proactive Intelligence: AI-driven voice user interfaces (VUIs) are becoming predictive, learning drivers’ habits to offer proactive assistance. For example, the in-car voice assistant might suggest a preferred route or playlist based on the time of day, or warn about vehicle issues before they become problems.

2. Brand Personalization as a Differentiator

- Automakers are increasingly developing their own branded voice assistants to create a unique and consistent brand experience. Mercedes-Benz with MBUX, Nio with its NOMI chatbot, and others are building systems that go beyond generic voice commands to provide a more integrated and personalized interaction, strengthening brand loyalty.

3. Overcoming Core Technical Challenges for Scalability

- Advanced Noise Cancellation: Advanced noise cancellation and multi-microphone arrays create “acoustic zones” within the noisy cabin, filtering out background noise so that commands are understood accurately.

- Hybrid On-Device and Cloud Processing for Responsiveness: Splitting work between the device and the cloud addresses both connectivity and response time. Essential commands are processed on the edge for speed, while complex requests go to the cloud, so essential commands stay responsive even without an Internet connection.
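The hybrid split described above can be illustrated with a minimal routing sketch. It is an illustration under stated assumptions, not any automaker's implementation: the intent names, the `EDGE_INTENTS` set, and the `route_request` function are all hypothetical, and a real system would decide based on far richer signals than a fixed list.

```python
from dataclasses import dataclass

# Hypothetical set of "essential" intents assumed simple enough to run on-device.
EDGE_INTENTS = {"call_contact", "set_temperature", "play_radio"}


@dataclass
class Route:
    target: str  # "edge", "cloud", or "deferred"
    reason: str


def route_request(intent: str, online: bool) -> Route:
    """Decide where an already-recognized intent should be processed."""
    if intent in EDGE_INTENTS:
        # Essential commands never depend on connectivity.
        return Route("edge", "essential command handled locally")
    if online:
        return Route("cloud", "complex request sent to the cloud")
    # Complex request with no connection: it cannot be answered right now.
    return Route("deferred", "complex request needs connectivity")


if __name__ == "__main__":
    print(route_request("call_contact", online=False))
    print(route_request("find_coffee_shop", online=True))
    print(route_request("find_coffee_shop", online=False))
```

The point of the sketch is the ordering of the checks: the edge test comes first, so essential commands behave identically whether or not the car has a connection.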

4. Elevating Safety and Accelerating User Adoption

- Minimizing Distraction and Enhancing Safety: A primary goal is enhanced safety by reducing “eyes off the road” time. Replacing physical buttons and complex touchscreen menus with voice commands lowers cognitive load, keeping hands on the wheel.

- Bridging the Trust Gap Through Performance: Earning user trust remains a challenge due to accuracy issues, privacy concerns (always-on microphones), and frustrating experiences with older, less capable systems. Automakers are addressing this with clearer privacy policies and demonstrably reliable systems.

Where Voice AI Still Falls Short and How We Can Fix It

Voice UIs have made significant progress in mimicking human conversation, often sounding incredibly natural. However, their interaction falls short of human conversation, struggling with ambiguity, sarcasm, humor, and unstructured dialogue. There are critical architectural and acoustic limitations that prevent them from truly replicating natural human conversation and providing seamless, proactive assistance. These include:

Architectural Limitations: The Wake Word Problem

A fundamental barrier to natural voice interaction is the wake word, which forces a rigid, turn-based interaction rather than spontaneous human-like conversation, frequently leading to frustrating and out-of-context exchanges.

This constraint stems from the current voice UI architecture: a small on-device software component detects the wake word, opening a “gate” that connects to a cloud-based large language model (LLM) for processing. Hypothetically, this gate could be left open, making wake words unnecessary. However, continuous cloud LLM processing is impractical because of prohibitive costs and significant privacy concerns (that is, audio would be uploaded to the cloud constantly).
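To make the gate idea concrete, here is a simplified sketch of how a wake word limits what reaches the cloud. Everything in it is an assumption for illustration: text strings stand in for audio frames, and `WAKE_WORD`, `GATE_OPEN_FRAMES`, and `frames_sent_to_cloud` are hypothetical names rather than part of any real assistant.

```python
from typing import Iterable, List

WAKE_WORD = "hey car"   # hypothetical wake phrase
GATE_OPEN_FRAMES = 3    # assumed number of frames forwarded after each wake word


def frames_sent_to_cloud(frames: Iterable[str]) -> List[str]:
    """Return only the frames that pass the wake-word gate.

    Each frame is a text stand-in for a chunk of audio; a real detector
    would run a small acoustic model on raw samples instead.
    """
    forwarded: List[str] = []
    remaining = 0  # frames left before the gate closes again
    for frame in frames:
        if remaining > 0:
            forwarded.append(frame)
            remaining -= 1
        elif WAKE_WORD in frame.lower():
            remaining = GATE_OPEN_FRAMES  # open the gate for the next frames
    return forwarded


if __name__ == "__main__":
    cabin_audio = [
        "chatting with a passenger",
        "Hey car",
        "find a coffee shop",
        "with free wifi",
        "and outdoor seating",
        "so anyway",
    ]
    print(frames_sent_to_cloud(cabin_audio))
```

In this toy version, anything said before the wake word or after the gate closes never leaves the device. That keeps cloud cost and audio exposure bounded, but it is also what makes the interaction rigid and turn-based.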

Overcoming these limitations demands an architectural shift: developing and deploying purpose-built Small Language Models (SLMs) that run directly on the device. With advances in edge computing, an always-on, always-listening SLM could support seamless, human-like voice interaction without constant cloud reliance, and would eliminate the need for wake words.

Conversational Flow and Proactive Intelligence

Human conversation is a complex exchange of turn-taking, interruptions, and non-verbal cues, and current voice UIs struggle with all of them. Even a slight delay in response disrupts the natural rhythm of a conversation. If you interrupt a person, they can adapt; if you interrupt a voice UI, it might get confused, reset, or fail to process the new command entirely. A human might also spontaneously show empathy by saying, “You sound upset, is everything okay?” based on your tone of voice. Current voice assistants generally do not.

The always-on SLM architecture previously described would be pivotal in enabling the VUI to understand not just conversational context but also critical subtext and emotional cues, paving the way for truly predictive, proactive, and genuinely human-like interactions.

The Unforgiving Nature of In-Cabin Acoustics

Current in-car voice UIs face specific and significant challenges due to the unique acoustic environment of a vehicle. These challenges include:

- Reverberation and Echo: The enclosed space of a car cabin causes sounds, including the driver’s voice, to bounce off hard surfaces like windows and dashboards. This creates echoes and reverberation, which can corrupt the original speech signal and make it difficult for the voice AI to transcribe the original speech accurately.

- Competing Speakers and Crosstalk: With multiple people in the car, the system must differentiate between the person giving a command and other passengers talking. This “crosstalk” can confuse the system, leading to incorrect responses or a complete failure to understand the command. Traditional systems often struggle to isolate a single speaker from a mix of conversations.

- Ambient Noise: Cars are inherently noisy environments. Road noise, engine hum, air conditioning fans, music playing, and open windows all contribute to a high level of background noise that can overwhelm the user’s voice and degrade recognition accuracy.

Advanced audio processing techniques, such as Spatial Hearing AI, provide solutions:

- Isolating individual speakers by clustering speech signals based on location

- Reducing reverberation and noise by locating speakers accurately

- Integrating with existing microphone arrays as software, avoiding the need for more complex multi-microphone setups

These methods produce clear, isolated speech signals, enhancing accuracy and reliability.
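As a rough illustration of clustering speech by location, the sketch below groups audio frames by their estimated direction of arrival. It is not how any particular product works. It assumes each frame already carries an angle estimate in degrees (the hard part in practice), and the `cluster_by_angle` function and its `max_gap_deg` threshold are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def cluster_by_angle(
    frames: List[Tuple[str, float]], max_gap_deg: float = 20.0
) -> Dict[int, List[str]]:
    """Group (frame_id, angle_deg) pairs into speakers by direction.

    Frames are sorted by angle, and a new cluster starts whenever the gap
    to the previous angle exceeds max_gap_deg (an assumed threshold).
    Angle wraparound at +/-180 degrees is ignored to keep the sketch short.
    """
    clusters: Dict[int, List[str]] = {}
    speaker = -1
    prev_angle: Optional[float] = None
    for frame_id, angle in sorted(frames, key=lambda f: f[1]):
        if prev_angle is None or angle - prev_angle > max_gap_deg:
            speaker += 1            # large angular gap: treat as a new speaker
            clusters[speaker] = []
        clusters[speaker].append(frame_id)
        prev_angle = angle
    return clusters


if __name__ == "__main__":
    # Hypothetical estimates: driver near -30 degrees, passenger near +30 degrees.
    estimates = [("f1", -32.0), ("f2", 28.0), ("f3", -29.5), ("f4", 31.0)]
    print(cluster_by_angle(estimates))
```

Once frames are grouped this way, each group can be passed to speech recognition as a separate stream, which is the intuition behind separating a driver's command from passenger crosstalk.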

Why These Challenges Matter Now

- Poor performance hurts J.D. Power and UX scores, which in turn affect brand loyalty and repurchase intent.

- The market opportunity is large and growing: as noted above, in-car voice technology is projected to exceed $13B by 2034, and OEMs that lag on user experience risk losing share to competitors that offer better conversations.

- Regulatory pressures around biometrics, data handling, and driver distraction are only increasing. The next five years will be decisive.

Conclusion: Beyond the Hype — Realism and Opportunity

While in-car Voice AI has advanced significantly with Generative AI and improved noise cancellation, its full potential remains untapped. Despite progress in natural conversation and personalization, challenges persist, including reliance on wake words, difficulty with human conversational nuances, and demanding car acoustics.

The future of in-car voice AI depends on smaller, on-device SLMs and advanced spatial audio, enabling proactive, predictive systems for a “companion on wheels” experience. For OEMs, this means market differentiation, enhanced user experience, and privacy compliance.

Success will be measured by tangible improvements in safety, convenience, and user satisfaction, not hype.