Training Security Experts in AI and LLM Application Security

Understanding Generative AI and LLM Risks

Large language models and generative AI have just started getting baked into modern enterprise applications, and... Read more.

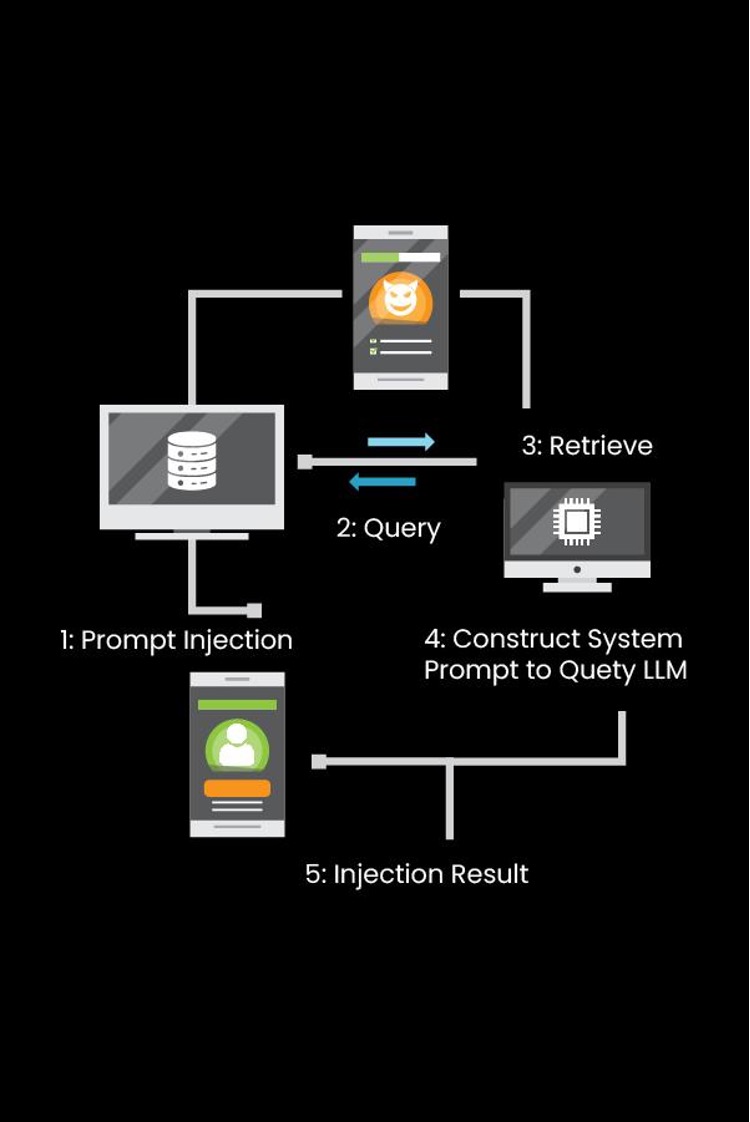

Mitigating Prompt Injection Attacks on Large Language Models

Of all the potential threats to the structure and functioning of large language models (LLMs), prompt injection attacks are the most concerning. These... Read more.