The $5.9 Million Question Every Financial Executive Should Be Asking

If an AI-powered attack targeted your institution tomorrow, how confident are you that your current security controls would detect and stop it? Most executives would cite their compliance certifications, multi-million-dollar security investments, and regular penetration tests.

The reality is that traditional security approaches are fundamentally mismatched against AI-enhanced threats. According to IBM’s 2024 Cost of a Data Breach Report, financial services organizations face an average breach cost of $5.9 million, with AI-related breaches becoming increasingly expensive due to broader data compromise and operational disruption.

After many years of helping global financial institutions strengthen their defenses, I’ve seen a troubling pattern: organizations are fighting tomorrow’s intelligent threats with yesterday’s static defenses.

The Compliance Trap: Why Check-Box Security Is No Longer Enough

Financial institutions have long operated under the assumption that regulatory compliance equals security effectiveness. This mindset made sense in an era of relatively predictable threats, but AI has fundamentally changed the threat landscape.

The problem isn’t that compliance standards are wrong; it’s that they’re insufficient. Traditional frameworks like ISO 27001 and NIST CSF provide excellent foundational guidance, but they weren’t designed to address the dynamic, adaptive nature of AI-enhanced threats. These frameworks assume that attackers follow predictable patterns and that security controls, once implemented, remain effective over time.

How AI Is Rewriting the Rules of Financial Cyber Warfare

Modern AI-powered attacks demonstrate characteristics that traditional security models struggle to address:

Adaptive Learning: Unlike conventional malware that follows predetermined patterns, AI-driven attacks continuously evolve based on the target environment. CrowdStrike’s 2024 Global Threat Report shows that AI-enhanced attacks can modify their approach multiple times during a single intrusion, making signature-based detection nearly useless.

Speed and Scale: AI enables attackers to conduct reconnaissance, identify vulnerabilities, and launch attacks at machine speed. What previously took weeks of manual effort can now be accomplished in hours, with some threat actors moving from initial access to data exfiltration in under 72 hours.

Context Awareness: Perhaps most concerning is AI’s ability to understand business context. Modern attacks don’t just steal data; they target specific high-value assets based on real-time analysis of organizational structures, communication patterns, and business processes. New York’s Department of Financial Services has noted that threat actors are increasingly using AI to create realistic audio, video, and text that allow them to target individuals with highly personalized attacks.

Expanded Attack Surface: The rapid proliferation of AI tools across financial institutions has dramatically increased the attack surface. IBM’s 2025 Cost of a Data Breach Report reveals that organizations with high levels of “shadow AI” (employees using unapproved AI tools) face an additional $670,000 in breach costs on average. With 63% of breached organizations lacking comprehensive AI governance policies and 97% of compromised AI systems lacking proper access controls, financial institutions are inadvertently creating new pathways for data exfiltration through inadequately secured AI applications, APIs, and training datasets.

The Financial Services Blind Spot: Static Security in a Dynamic World

The financial sector’s security approach suffers from “snapshot syndrome”: the belief that point-in-time assessments provide meaningful insight into ongoing security effectiveness.

Annual Penetration Testing: Most financial institutions conduct comprehensive penetration tests annually or semi-annually. But in an environment where AI can identify and exploit new attack vectors daily, annual testing is like checking your car’s oil once a year and assuming it’s always at optimal levels.

Vulnerability Management Lag: The average time to patch critical vulnerabilities in financial services is 47 days, according to Qualys’s 2024 TruRisk Research Report. AI-powered attacks can weaponize new vulnerabilities within hours of disclosure, creating a dangerous gap that traditional patch management processes can’t close.

Control Validation Gaps: Organizations often go months or even years without validating their security controls against real-world attack techniques, assuming their defenses work as designed without continuous verification. Gaps then surface only after an incident, when the damage has already been done.

Building AI-Resilient Financial Security: A Continuous Validation Framework

The solution isn’t to abandon existing security investments but to evolve them with AI-native approaches. Financial institutions need to adopt continuous testing and validation, treating security as an ongoing, dynamic process rather than a static state.

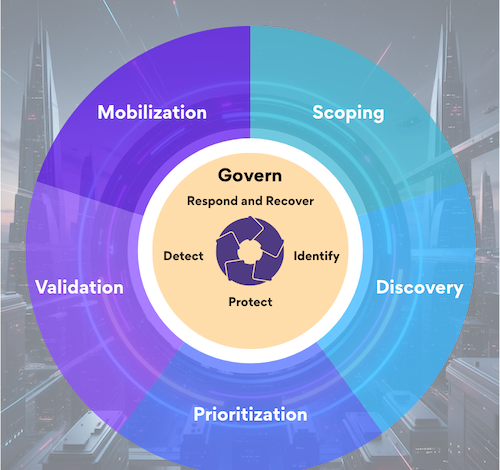

This approach aligns with what Gartner calls Continuous Threat Exposure Management (CTEM), which turns framework implementation from a periodic assessment into a living security practice. Integrated with frameworks like NIST CSF 2.0, CTEM bridges the gap between what you’ve implemented and what actually protects you, answering the question most frameworks avoid: ‘Would this control stop a real attack?’

Implement Continuous Attack Emulation

Traditional red team exercises provide valuable insights but occur too infrequently to match the pace of AI-enhanced threats. Financial institutions should deploy automated attack emulation platforms that continuously test security controls against the latest tactics, techniques, and procedures, exposing misconfigurations and poor cyber hygiene along the way. Manual testing and human oversight should never be abandoned; instead, they should be augmented with automation to address the testing backlog and the scale problem that manual effort alone cannot solve.
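To make the idea concrete, here is a minimal sketch of a single emulation cycle, assuming a hypothetical `execute` callback that stands in for whatever platform actually runs each technique. The technique IDs follow MITRE ATT&CK naming, but the outcomes are invented for illustration. In practice a scheduler would call `run_emulation_cycle` repeatedly (nightly, say), so that a gap surfaces within a day rather than at the next annual test.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EmulationResult:
    technique_id: str  # MITRE ATT&CK-style technique ID, e.g. "T1059"
    blocked: bool      # a preventive control stopped the action
    detected: bool     # a detective control raised an alert


def run_emulation_cycle(
    techniques: list[str],
    execute: Callable[[str], EmulationResult],
) -> list[str]:
    """Run each technique once; return IDs that were neither blocked nor detected."""
    gaps = []
    for technique_id in techniques:
        result = execute(technique_id)
        if not (result.blocked or result.detected):
            gaps.append(technique_id)
    return gaps


# Hypothetical stand-in for a real emulation platform: (blocked, detected) per technique.
FAKE_OUTCOMES = {
    "T1059": (True, True),    # Command and Scripting Interpreter
    "T1566": (False, True),   # Phishing
    "T1078": (False, False),  # Valid Accounts
}


def fake_execute(technique_id: str) -> EmulationResult:
    blocked, detected = FAKE_OUTCOMES[technique_id]
    return EmulationResult(technique_id, blocked, detected)


print(run_emulation_cycle(list(FAKE_OUTCOMES), fake_execute))  # ['T1078']
```

A technique that is detected but not blocked is not counted as a gap here; a stricter policy could report it separately for follow-up.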

Establish Real-Time Control Effectiveness Monitoring

Every security control should include measurable effectiveness metrics that are monitored continuously. This means moving beyond simple uptime monitoring to validate that controls are actually stopping the attacks they’re designed to prevent.

Key metrics include detection accuracy rates against known AI-enhanced attack patterns, mean time to detection (MTTD) for novel attack techniques, false positive rates that could mask real AI-driven threats, and control coverage gaps identified through continuous testing. CTEM’s approach to outcome-based metrics ensures you’re not just measuring activity, but proving whether your controls work against current threats.
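The metrics above could be computed from emulation results along these lines. This is a sketch under stated assumptions: `EmulationOutcome` is a hypothetical record format, benign test events are mixed in so that a false positive rate can be measured, “detection accuracy” is approximated as detection rate (the share of emulated attacks that raised an alert), and the sample data is made up.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class EmulationOutcome:
    technique_id: str                          # ATT&CK ID, or a label for benign activity
    is_attack: bool                            # True for emulated attacks, False for benign events
    alerted: bool                              # whether the control raised an alert
    seconds_to_detect: Optional[float] = None  # None when no alert fired


def control_metrics(outcomes: list[EmulationOutcome], in_scope: set[str]) -> dict:
    attacks = [o for o in outcomes if o.is_attack]
    benign = [o for o in outcomes if not o.is_attack]
    caught = [o for o in attacks if o.alerted]
    times = [o.seconds_to_detect for o in caught if o.seconds_to_detect is not None]
    return {
        # Share of emulated attacks that raised an alert.
        "detection_rate": len(caught) / len(attacks) if attacks else 0.0,
        # Share of benign events that raised an alert.
        "false_positive_rate": sum(o.alerted for o in benign) / len(benign) if benign else 0.0,
        # Mean time to detection, averaged over detected attacks only.
        "mttd_seconds": mean(times) if times else None,
        # In-scope techniques that no test detected (including untested ones).
        "coverage_gaps": in_scope - {o.technique_id for o in caught},
    }


results = [
    EmulationOutcome("T1059", True, True, 120.0),
    EmulationOutcome("T1566", True, True, 600.0),
    EmulationOutcome("T1078", True, False),
    EmulationOutcome("benign-login", False, True),
    EmulationOutcome("benign-backup", False, False),
]
metrics = control_metrics(results, in_scope={"T1059", "T1566", "T1078", "T1486"})
print(metrics)
```

With this sample, two of three attacks are detected, one of two benign events triggers an alert, MTTD is 360 seconds, and T1078 and T1486 remain coverage gaps.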

Enhance AI-Driven Fraud Detection and Business Protection

Financial institutions should leverage AI not just for automated detection but for comprehensive fraud prevention that delivers measurable business value. AI-driven behavioral analytics can analyze patterns across vast datasets to identify sophisticated fraud schemes that traditional systems miss, while also improving customer experience through fewer false positives. PayPal achieved fraud rates of just 0.32% of revenue through early adoption of AI-based fraud detection systems. JPMorgan Chase implemented machine learning algorithms that analyze transaction patterns and user behavior, significantly reducing false positives while improving fraud detection accuracy.
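As a deliberately simple illustration of per-customer behavioral baselining (not the models PayPal or JPMorgan Chase actually use), the sketch below flags a transaction whose amount sits far outside that customer’s own history. The z-score threshold of 3.0 and the five-transaction minimum are hypothetical tuning parameters. Because each customer is compared with their own baseline rather than one global limit, a customer who routinely spends large sums is not flagged for normal behavior, which is one way behavioral approaches can cut false positives.

```python
from statistics import mean, pstdev


def amount_zscore(history: list[float], amount: float) -> float:
    """How many standard deviations `amount` lies from this customer's mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Perfectly uniform history: any different amount is treated as maximally unusual.
        return 0.0 if amount == mu else float("inf")
    return (amount - mu) / sigma


def flag_transaction(
    history: list[float], amount: float, threshold: float = 3.0, min_history: int = 5
) -> bool:
    """Flag a transaction that deviates sharply from the customer's own baseline."""
    if len(history) < min_history:
        return False  # too little history to baseline; leave it to other controls
    return abs(amount_zscore(history, amount)) > threshold


past_amounts = [40.0, 55.0, 38.0, 60.0, 47.0, 52.0]
print(flag_transaction(past_amounts, 50.0))   # False
print(flag_transaction(past_amounts, 900.0))  # True
```

Production systems combine many more signals (device, location, merchant, timing), but the principle of scoring against a per-entity baseline is the same.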

Create Dynamic Risk Assessment Models

Static risk assessments become obsolete the moment they’re completed. Financial institutions need dynamic risk models that continuously adjust based on changing threat landscapes, business operations, and control effectiveness. This includes real-time asset discovery and risk scoring, continuous threat intelligence integration, dynamic attack surface mapping, and automated risk prioritization based on actual exploitation likelihood.
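One way to express automated prioritization is a score that multiplies exploitation likelihood by business criticality and adjusts for whether a compensating control has recently been validated. The sketch assumes likelihood comes from a feed such as FIRST’s Exploit Prediction Scoring System (EPSS), that criticality is a 1 to 5 rating assigned by the business, and that the 0.5 discount for a validated control is an arbitrary, hypothetical weight. Asset names are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exposure:
    asset: str                 # hypothetical asset name
    exploit_likelihood: float  # 0.0-1.0, e.g. an EPSS probability for the open vulnerability
    criticality: int           # 1 (low) to 5 (crown jewel), assigned by the business
    control_validated: bool    # latest emulation proved a compensating control works


def risk_score(e: Exposure) -> float:
    # Hypothetical weighting: halve the score when a control was recently proven effective.
    control_factor = 0.5 if e.control_validated else 1.0
    return e.exploit_likelihood * e.criticality * control_factor


def prioritize(exposures: list[Exposure]) -> list[str]:
    """Return asset names, highest risk first; re-run whenever any input changes."""
    return [e.asset for e in sorted(exposures, key=risk_score, reverse=True)]


exposures = [
    Exposure("payments-api", 0.6, 5, True),   # 0.6 * 5 * 0.5 = 1.5
    Exposure("hr-portal", 0.9, 2, False),     # 0.9 * 2 = 1.8
    Exposure("core-ledger", 0.4, 5, False),   # 0.4 * 5 = 2.0
    Exposure("test-server", 0.95, 1, False),  # 0.95 * 1 = 0.95
]
print(prioritize(exposures))
# ['core-ledger', 'hr-portal', 'payments-api', 'test-server']
```

What makes the model dynamic is not the formula but the refresh: recomputing the ranking each time the likelihood feed, the asset inventory, or a control validation result changes.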

Measuring What Matters: Key Performance Indicators for AI-Era Security

Financial executives need new metrics to evaluate security effectiveness in the age of AI. Traditional KPIs like “number of vulnerabilities patched” don’t capture whether your defenses would actually stop an intelligent, adaptive attack.

Focus on these AI-relevant metrics:

Attack Path Validation Rate: What percentage of potential attack paths to critical assets have been tested and validated within the last 30 days? Leading financial institutions maintain validation rates above 90%.

Control Efficacy Under Pressure: How do your security controls perform when subjected to AI-speed attacks? This requires testing controls against automated, high-velocity attack emulation.

Mean Time to Detection for Novel Techniques: How quickly does your security team identify attack methods that weren’t in your threat models? AI-enhanced attackers frequently use novel techniques that signature-based systems miss.
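The Attack Path Validation Rate reduces to simple arithmetic once each known attack path carries a last-validated timestamp. The sketch below assumes such an inventory exists (the path names and dates are hypothetical) and treats a path that has never been validated as stale.

```python
from datetime import datetime, timedelta
from typing import Optional


def attack_path_validation_rate(
    last_validated: dict[str, Optional[datetime]],
    now: datetime,
    window_days: int = 30,
) -> float:
    """Percentage of known attack paths validated within the last `window_days`."""
    if not last_validated:
        return 0.0
    cutoff = now - timedelta(days=window_days)
    fresh = sum(1 for ts in last_validated.values() if ts is not None and ts >= cutoff)
    return 100.0 * fresh / len(last_validated)


# Hypothetical inventory of attack paths to critical assets and their last validation.
paths = {
    "phishing -> vpn -> core-ledger": datetime(2025, 6, 20),
    "leaked-api-key -> payments-api": datetime(2025, 5, 1),
    "vpn -> core-banking": datetime(2025, 6, 1),
    "insider -> payment-gateway": None,  # never validated
}
print(attack_path_validation_rate(paths, now=datetime(2025, 6, 30)))  # 50.0
```

The metric is only as good as the inventory behind it: paths that were never discovered do not appear in the denominator, which is why continuous attack surface mapping matters as much as the testing itself.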

The Regulatory Reality: Compliance Frameworks Are Evolving

Financial regulators are beginning to recognize the limitations of traditional compliance frameworks in the face of AI-enhanced threats. The European Central Bank conducted its first comprehensive cyber resilience stress test in 2024, examining 109 banks’ ability to respond to and recover from sophisticated cyber incidents. This exercise revealed significant gaps in preparedness, with many institutions lacking robust response frameworks for AI-enhanced attacks.

The ECB has also established TIBER-EU, the European framework for Threat Intelligence-Based Ethical Red Teaming, under which financial institutions can undergo controlled, intelligence-led red-team tests that mimic real attackers against their live production systems to identify weaknesses. Meanwhile, US financial regulators are increasingly incorporating AI considerations into their supervisory frameworks.

Summary: Security as a Continuous Process

The financial services industry stands at a critical inflection point. AI has fundamentally altered the threat landscape, but many institutions continue to rely on security approaches designed for a simpler era. The cost of this misalignment isn’t measured only in dollars; it’s measured in customer trust, regulatory consequences, and competitive disadvantage.

The shift from static assessments to continuous validation isn’t just about adopting new tools; it’s about fundamentally changing how we think about security effectiveness. Organizations need to move from asking “Have we implemented this control?” to “Is this control effective against current AI-enhanced threats?” This continuous validation approach, whether through CTEM principles or similar methodologies, transforms compliance frameworks from documentation exercises into operational security practices that actually reduce risk.

How effective are your security measures against a real-world, AI-enhanced attack? If you can’t answer that question with data from the last 30 days, it’s time to rethink your approach to financial security.