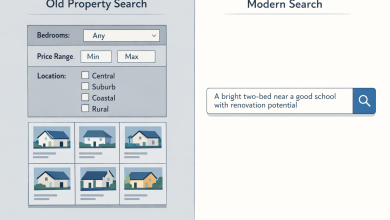

Introduction: The Agentic AI Shift in FinTech

As FinTech applications scale globally, traditional automation often struggles with slow response times, opaque decisions, and rising costs. Many systems still rely on fixed rules or static models that can’t adapt quickly to changing user behavior or market conditions. That’s where Agentic AI comes in: an approach in which autonomous agents continuously observe, reason, and act in real time. These agents don’t just follow rules; they learn from experience, respond to live system data, and improve their decisions over time.

For microservice-based FinTech platforms, Agentic AI helps speed up decisions, improve performance, and keep operations secure. This article introduces a production-grade Agentic AI workflow tailored for high-volume financial microservices. It integrates vector memory, reinforcement signals, and real-time optimization, and it builds directly on my peer-reviewed research published at IEEE Cloud Summit 2025 [1].

The Problem: Traditional AI Falls Short in Financial Microservices

When does this problem arise? The fragility of traditional automation is most visible during peak-load events and market swings: quarterly incentive payouts, sudden market crashes, bursts of high-frequency fraud attempts, and real-time transaction spikes (e.g., during Black Friday or IPO events). In these moments the system needs real-time intelligence, not after-the-fact alerts or stale ML predictions.

Where does the problem manifest? Primarily in cloud-native FinTech environments that rely on microservices architectures (e.g., Kubernetes, serverless), streaming or transactional pipelines (e.g., Kafka, Pub/Sub), and distributed decision workflows (e.g., exception approvals, fraud scoring, payout releases).

Why does the problem occur? Traditional architectures rest on three outdated foundations, which make them inherently fragile in high-volume FinTech environments. First, they use static rules with hardcoded thresholds (for example, CPU > 80%) that can’t adapt to behavior shifts such as location-specific fraud or sudden transaction spikes. Second, they depend on disconnected machine-learning models that are trained offline and deployed separately, with no real-time feedback loop; retraining is slow, often quarterly, so predictions quickly go stale. Finally, they suffer from inefficient LLM integration: large language models run uncontrolled in the background with no token-cost governance or prioritization mechanism, driving up operational expense and latency.
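To make the first point concrete, here is a minimal Python sketch contrasting a hardcoded rule with a threshold that adapts to a metric’s own recent history. The `AdaptiveThreshold` class, its parameters (`alpha`, `k`, `warmup`), and the choice of an exponentially weighted moving average (EWMA) are illustrative assumptions, not the method from the paper. The adaptive version flags a value only when it exceeds the EWMA baseline by `k` standard deviations.

```python
import math


def static_rule(cpu_percent: float) -> bool:
    """Hardcoded rule: fires above 80% CPU regardless of the service's normal load."""
    return cpu_percent > 80.0


class AdaptiveThreshold:
    """Flags values more than k standard deviations above an EWMA baseline."""

    def __init__(self, alpha: float = 0.1, k: float = 3.0, warmup: int = 10):
        self.alpha = alpha    # smoothing factor: larger values track shifts faster
        self.k = k            # number of standard deviations treated as anomalous
        self.warmup = warmup  # observations to collect before flagging anything
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous, then fold it into the baseline."""
        self.count += 1
        if self.count == 1:
            self.mean = value  # seed the baseline with the first reading
            return False
        threshold = self.mean + self.k * math.sqrt(self.var)
        is_anomaly = self.count > self.warmup and value > threshold
        # Incremental exponentially weighted mean and variance update.
        diff = value - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


detector = AdaptiveThreshold()
baseline = [84.0, 86.0] * 10  # a service whose normal CPU hovers around 85%
print([static_rule(v) for v in baseline[:4]], [detector.observe(v) for v in baseline[:4]])
```

On a service that normally runs near 85% CPU, the static rule fires on every reading, while the adaptive version stays quiet until a genuine spike. One caveat: this sketch folds every observation, including flagged ones, into the baseline. A sustained shift therefore becomes the new normal. That is the intent for gradual behavior drift, but it may be undesirable for true incidents.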

Who is impacted?

This problem affects multiple FinTech personas:

- Cloud Platform Engineers – Struggle with unpredictable autoscaling

- DevOps Teams – Face alert fatigue from false positives

- Risk Analysts – Lose time on repetitive, low-risk exception reviews

- Product Owners – Can’t explain or trust AI-driven decisions

- CFOs – See soaring cloud bills without a visible ROI on AI investments

What happens as a result?

The result is a system that’s fragile, expensive, and hard to trust. Reactive scaling leads to overprovisioned infrastructure. Fraud detection slows down, and approvals are delayed. Token usage runs unchecked, resulting in increased operational costs. LLMs can hallucinate or show bias, and their decisions often lack clear explanations. Over time, this wears away confidence among business stakeholders.

How is this typically handled (and why that fails)?

Most teams try to address these issues by adding alerts, scaling up infrastructure, or building dashboards. These steps often backfire: alerts create noise and false positives, scaling up increases costs, and dashboards still need manual monitoring. Some teams even turn off advanced AI features to save money, which defeats the purpose of using AI. None of these approaches solves the real issue: the system lacks memory-powered agents that can learn from patterns, adapt in real time, and optimize decisions automatically within the microservices setup.

The Agentic AI Workflow: Core Components

The Agentic AI workflow we built includes five core components:

- Cognitive Layer – Uses LangChain and LLMs to handle decisions such as exception approvals, scaling, and optimization

- Memory Layer – Powered by Milvus Lite; stores vector embeddings of past decisions, system states, and special cases

- Reinforcement Layer – A Deep Q-Network agent that learns from real-time feedback to improve system performance

- Observability Plug-in – Connects with tools like OpenTelemetry to feed logs, traces, and metrics into the reasoning engine

- Microservices Fabric – Built with Kubernetes and FastAPI; runs the financial services with features like autoscaling and circuit breakers

We tested this setup with synthetic workloads that mimic real-world financial transactions; the results are detailed in our IEEE research [1].
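The Memory Layer’s core operation is a nearest-neighbor lookup over embeddings of past decisions. The sketch below uses hypothetical `DecisionRecord` and `DecisionMemory` types. It performs a brute-force cosine-similarity scan in plain Python as a stand-in for Milvus Lite and does not use the Milvus client API. The toy three-dimensional vectors replace real embeddings produced by an embedding model.

```python
import math
from dataclasses import dataclass, field


@dataclass
class DecisionRecord:
    case_id: str
    embedding: list[float]  # vector representation of the past case
    outcome: str            # recorded decision, e.g. "approve" or "reject"


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class DecisionMemory:
    """Hypothetical brute-force stand-in for a vector store such as Milvus Lite."""
    records: list[DecisionRecord] = field(default_factory=list)

    def add(self, record: DecisionRecord) -> None:
        self.records.append(record)

    def search(self, query: list[float], top_k: int = 3) -> list[tuple[DecisionRecord, float]]:
        """Return the top_k most similar past decisions with their scores."""
        scored = [(r, cosine(query, r.embedding)) for r in self.records]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


memory = DecisionMemory()
memory.add(DecisionRecord("c1", [1.0, 0.0, 0.0], "approve"))
memory.add(DecisionRecord("c2", [0.0, 1.0, 0.0], "reject"))
memory.add(DecisionRecord("c3", [0.9, 0.1, 0.0], "approve"))
for record, score in memory.search([1.0, 0.0, 0.0], top_k=2):
    print(record.case_id, record.outcome, round(score, 3))
```

A production vector store indexes vectors with an approximate-nearest-neighbor index rather than scanning every record. The contract stays the same, though: embed the incoming case, retrieve the most similar past decisions, and pass them to the reasoning engine as context.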

Use Case: AI Agent for Exception Approval in FinTech Incentive Systems

In many financial systems, especially those involving recurring payouts or incentive management, exception handling remains a heavily manual process. Business users often review large volumes of cases to decide whether policy deviations should be approved or denied. To demonstrate the power of Agentic AI, we simulated an approval system that automates this process. The agent uses LLMs and vector search to summarize prior decisions. It flags anomalies by comparing current cases against historical patterns. A reinforcement learning agent then recommends an approve or reject action, along with an explanation. These outcomes are continuously stored and reused to refine future recommendations. Simulation results showed a 47% reduction in manual reviews, a 34% increase in cache hit rates, and a 44% drop in redundant model calls. To my knowledge, this is the first publicly documented application of an end-to-end Agentic AI workflow combining LLMs, reinforcement learning, and vector search for exception management in FinTech microservices.
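The anomaly-flagging and recommendation steps can be sketched as follows. This is a simplified illustration, not the paper’s implementation, and it makes three assumptions:

- Anomalies are flagged with a z-score against historical amounts.
- The Deep Q-Network is swapped for one-step tabular Q-learning over a hypothetical coarse state `(risk_bucket, is_anomalous)`. Each exception case is a single-step episode, so the Q-learning target reduces to the immediate reward.
- The reward is +1 when the recommendation matches the reviewer’s final decision and -1 otherwise.

The `reason` string is a minimal stand-in for the explanation the agent returns.

```python
import random
import statistics
from collections import defaultdict

ACTIONS = ("approve", "reject")


def is_anomalous(amount: float, history: list[float], z_cut: float = 3.0) -> bool:
    """Flag a case whose amount is more than z_cut standard deviations from history."""
    mu = statistics.mean(history)
    sigma = statistics.pstdev(history)
    return sigma > 0 and abs(amount - mu) / sigma > z_cut


class ApprovalAgent:
    """One-step tabular Q-learning over a coarse (risk_bucket, is_anomalous) state."""

    def __init__(self, lr: float = 0.2, epsilon: float = 0.1, seed: int = 0):
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.lr = lr                 # learning rate
        self.epsilon = epsilon       # exploration probability
        self.rng = random.Random(seed)

    def recommend(self, state: tuple, explore: bool = True) -> tuple[str, str]:
        """Return (action, reason); epsilon-greedy when explore is True."""
        if explore and self.rng.random() < self.epsilon:
            action = self.rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: self.q[(state, a)])
        reason = (f"state={state}: Q(approve)={self.q[(state, 'approve')]:.2f}, "
                  f"Q(reject)={self.q[(state, 'reject')]:.2f}")
        return action, reason

    def learn(self, state: tuple, action: str, reward: float) -> None:
        """Single-step episode, so the Q-learning target is just the reward."""
        key = (state, action)
        self.q[key] += self.lr * (reward - self.q[key])


# Hypothetical training loop: rewards come from reviewers' final decisions.
agent = ApprovalAgent()
reviewer_decisions = {("low", False): "approve", ("high", True): "reject"}
for _ in range(200):
    for state, correct in reviewer_decisions.items():
        action, _ = agent.recommend(state)
        agent.learn(state, action, 1.0 if action == correct else -1.0)
print(agent.recommend(("high", True), explore=False))
```

A Deep Q-Network replaces the lookup table with a neural network so the agent can generalize across richer, continuous case features. Its update still pulls each estimate toward a reward-based target.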

Security, Governance, and Token Efficiency

Agentic systems do raise real concerns around hallucinations, security, and cost, and we addressed each in our design. First, token budgeting caps how many tokens an agent can use per task, which keeps costs under control. Second, RAG (Retrieval-Augmented Generation) reduces hallucinations by grounding the agent’s answers in trusted vector data. Third, an explainability layer attaches a reason to every recommendation so that decisions are clear and trustworthy. Finally, all secrets and API keys are managed through Pulumi ESC and Sealed Secrets.
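Token budgeting can be as simple as a per-task ledger that refuses LLM calls that would overrun an allowance. In this sketch, `TokenBudget`, `BudgetExceeded`, and `estimate_tokens` are hypothetical names. The word-count estimate is a deliberately crude assumption; a real deployment would count tokens with the target model’s tokenizer. Reserving the maximum completion length up front means an over-budget call is rejected before any spend occurs.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when an LLM call would push a task past its token allowance."""


def estimate_tokens(text: str) -> int:
    # Crude assumption: about one token per whitespace-separated word.
    # Swap in the target model's tokenizer for real accounting.
    return len(text.split())


@dataclass
class TokenBudget:
    limit: int     # maximum tokens this task may consume
    used: int = 0  # tokens reserved so far

    def charge(self, prompt: str, max_completion_tokens: int) -> None:
        """Reserve prompt + completion tokens, or refuse without spending anything."""
        cost = estimate_tokens(prompt) + max_completion_tokens
        if self.used + cost > self.limit:
            raise BudgetExceeded(f"call needs {cost} tokens, {self.remaining} left")
        self.used += cost

    @property
    def remaining(self) -> int:
        return self.limit - self.used


budget = TokenBudget(limit=100)
budget.charge("summarize the last three exception decisions", max_completion_tokens=50)
try:
    budget.charge("explain this recommendation", max_completion_tokens=60)
except BudgetExceeded as err:
    print("skipped LLM call:", err)
```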

FinTech Demands Speed + Safety

High-volume FinTech systems don’t just need “smarter” AI; they need faster, explainable, and cost-effective automation. Agentic AI delivers real-time exception decisions, lower cloud compute and inference costs, greater trust through explainability, and continuous self-improvement from live system feedback.

Integrating Agentic AI Into Production Workflows

Adoption doesn’t need to be disruptive. Here’s a 3-phase rollout:

- Shadow Mode: Agent observes and simulates decisions without affecting output

- Human-in-the-loop: Agent recommends; manager approves

- Autonomous mode: Agent operates within defined policy boundaries
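The three phases differ mainly in who makes the final call, so a single routing function can gate them. This is a sketch under assumptions. The `Mode` enum and the `human_review` callback are hypothetical, and the `autonomy_limit` amount stands in for whatever “defined policy boundaries” a team actually sets.

```python
import logging
from enum import Enum
from typing import Callable, Optional

log = logging.getLogger("agent.rollout")


class Mode(Enum):
    SHADOW = "shadow"
    HUMAN_IN_THE_LOOP = "human_in_the_loop"
    AUTONOMOUS = "autonomous"


def decide(mode: Mode, agent_action: str, amount: float,
           human_review: Callable[[Optional[str]], str],
           autonomy_limit: float = 1_000.0) -> tuple[str, str]:
    """Return (final_action, decided_by) for one exception case.

    human_review receives the agent's recommendation, or None when the
    reviewer should decide unaided, and returns the reviewer's decision.
    """
    if mode is Mode.SHADOW:
        # Record what the agent would have done; the existing process decides.
        log.info("shadow recommendation: %s", agent_action)
        return human_review(None), "human"
    if mode is Mode.HUMAN_IN_THE_LOOP:
        # Agent recommends; a manager makes the final call.
        return human_review(agent_action), "human"
    # Autonomous: act alone inside the policy boundary, escalate outside it.
    if amount <= autonomy_limit:
        return agent_action, "agent"
    return human_review(agent_action), "human (escalated)"
```

With this structure, moving between phases is a configuration change rather than a code change. Shadow-mode logs also give a record of agent versus human decisions to compare before widening the agent’s autonomy.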

CI/CD pipelines can incorporate agent tests, vector DB refreshes, and security validation. All components are containerized for Kubernetes-native deployment.
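One form an agent test in CI might take is a replay of labeled historical cases that fails the build when agreement drops. The `agreement_rate` and `ci_gate` functions, the 90% minimum, and the dictionary case format are hypothetical. The recommender is any callable that maps a case to an action.

```python
from typing import Callable, Iterable

Case = dict  # hypothetical case format, e.g. {"amount": 250.0}


def agreement_rate(recommend: Callable[[Case], str],
                   golden: Iterable[tuple[Case, str]]) -> float:
    """Fraction of labeled historical cases where the agent matches the recorded decision."""
    cases = list(golden)
    if not cases:
        raise ValueError("golden set is empty")
    hits = sum(1 for case, expected in cases if recommend(case) == expected)
    return hits / len(cases)


def ci_gate(recommend: Callable[[Case], str],
            golden: Iterable[tuple[Case, str]],
            min_agreement: float = 0.9) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 fails it."""
    rate = agreement_rate(recommend, golden)
    print(f"agent agreement: {rate:.1%} (minimum {min_agreement:.0%})")
    return 0 if rate >= min_agreement else 1
```

A pipeline step would then call `sys.exit(ci_gate(agent, golden_set))`, so that a regression in the agent blocks the deployment.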

Conclusion: A New Era of Financial Intelligence

Agentic AI is not just a buzzword; it’s a transformative framework for real-time, explainable decision-making in cloud-native FinTech. By combining reasoning engines, vector memory, reinforcement learning, and microservice observability, we move toward financial automation that is fast, transparent, and secure.

For a deeper technical blueprint and evaluation metrics, see our full IEEE research paper:

[1] IEEE Xplore – Agentic AI for Microservices