Chatbots have been around for a while. The problem has always been that programming a chatbot to understand and correctly respond to a question from a customer or user has relied on being able to anticipate what phrases a customer will use and then adding some automated workflow to respond to the inquiry.

The arrival of generative AI and large language models (LLMs) turned that completely on its head. Chatbot developers could suddenly leverage a tool that enabled them to understand almost any question, or prompt, from a user and generate a more meaningful response.

However, a new problem emerged. Public LLMs were trained on such a broad cross-section of data that they often made incorrect assumptions about what the user wanted, because they did not understand the business context. This has led organizations to build their own AI-powered chatbots that leverage their own data to build the underlying LLM.

Building an LLM relies on access to high quality data. And the best data for an organization to use for an LLM is data that comes from the business. That means finding ways to identify and collect data for AI models to use.

Traditionally, this was done by identifying the required data and copying it to a central data lake where the AI software could use it. This approach is costly and denies the AI models access to new data as it arrives in corporate systems. And if a new data source is identified, a new extraction process will be needed.

A more efficient and effective approach is to enable AI tools to directly access data at its origin. Rather than bringing data to a query engine or AI algorithm, queries can be taken to the data source with the responses assembled and then passed to the user’s application.
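The idea of taking the query to the data can be sketched in a few lines. This is a minimal illustration, not a real lakehouse engine: the two in-memory SQLite databases stand in for separate systems of record, and the source names (`core_banking`, `cards`), the `accounts` table and the `federated_query` helper are all hypothetical.

```python
import sqlite3


def make_source(rows):
    """Create an in-memory SQLite database standing in for one system of record."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts (customer_id TEXT, product TEXT, balance REAL)"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


# Hypothetical sources: the data stays where it originates and is never copied.
SOURCES = {
    "core_banking": make_source([("c1", "savings", 12000.0), ("c2", "savings", 300.0)]),
    "cards": make_source([("c1", "credit_card", -450.0)]),
}


def federated_query(sources, sql, params=()):
    """Run the same query at every source and assemble the rows, tagged by origin."""
    results = []
    for name, conn in sources.items():
        for row in conn.execute(sql, params):
            results.append((name, *row))
    return results


rows = federated_query(
    SOURCES,
    "SELECT product, balance FROM accounts WHERE customer_id = ?",
    ("c1",),
)
```

Adding a new data source in this sketch means registering one more entry in `SOURCES` rather than building a new extraction process, and each query sees whatever the source holds at that moment.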

For example, a banking customer might use a chatbot to ask a bank about the home loan product that best suits their situation. In the past, the system would have identified the term ‘home loan’ and prompted the user with a series of questions such as “Is this for an investment?” or “Are you a full-time worker?”. The user would continue to answer yes/no questions until the system put out a recommendation based on data held in a central system, which would most likely end with the need to call a human agent to answer more questions. This would be the case even if the person asking was already a customer of the bank.

An AI-powered approach works differently: the customer is engaged in a more conversational interaction. If the person is an existing customer, AI agents could, after asking for consent, collect data about the customer’s banking history, credit rating and other pertinent information, and bring that data together in real time. As a result, the inquiry can be handled faster and with current data. Each separate piece of the inquiry would be handled by a specific AI agent, with the final response created using generative AI to give the customer a useful answer.
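The flow described above can be sketched roughly as follows. Everything here is an assumption made for illustration: the `Agent` class, the two fetch functions and their hard-coded return values are hypothetical, and `compose_reply` is a plain template standing in for a call to a generative model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """One agent responsible for one piece of the inquiry."""
    name: str
    fetch: Callable[[str], dict]


# Hypothetical agents; in practice each would query its own source system.
def fetch_banking_history(customer_id: str) -> dict:
    return {"years_as_customer": 6, "avg_monthly_deposit": 5200}


def fetch_credit_rating(customer_id: str) -> dict:
    return {"credit_score": 742}


AGENTS = [
    Agent("banking_history", fetch_banking_history),
    Agent("credit_rating", fetch_credit_rating),
]


def compose_reply(context: dict) -> str:
    """Stand-in for the generative AI step that writes the final response."""
    history = context["banking_history"]
    rating = context["credit_rating"]
    return (
        f"As a customer of {history['years_as_customer']} years with a credit "
        f"score of {rating['credit_score']}, here are home loan options that may suit you."
    )


def handle_inquiry(customer_id, consent, agents, compose):
    """Collect data through each agent only after consent, then compose one reply."""
    if not consent:
        return "I can share general information, but I need your consent to use your account data."
    context = {agent.name: agent.fetch(customer_id) for agent in agents}
    return compose(context)


reply = handle_inquiry("c1", True, AGENTS, compose_reply)
```

The consent check sits in front of every agent call, so no customer data is read unless the customer has agreed, and supporting a new kind of question means adding an agent rather than changing the others.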

This approach is far easier for software developers managing chatbots as each AI agent collects the data from the original source without the need to copy and centralize data. This gives organizations near real-time access to all their corporate data. It is also more secure as organizations don’t end up with a second copy of data that needs its own security.

The IT team, as the manager of these AI agents, will become a new HR department. But instead of managing people, it will manage AI agents that can be deployed to execute specific tasks, with their outputs brought together rather than the underlying data sources.

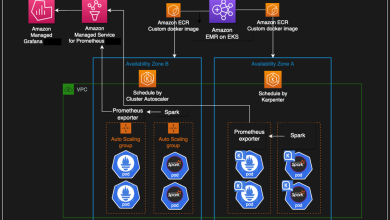

The key to success with agentic AI is having access to the data wherever it may be. Data could be held in on-premises data lakes, in SaaS applications and on private and public cloud storage and application platforms. A modern data lakehouse approach creates a virtualized view of the data that the AI agents can quickly access so they can complete their tasks.

Even AI agents equipped with the best algorithms will always depend on access to the best possible data. The best data, which reflects your organizational context, is your data. By taking a modern data lakehouse approach, you enable your AI agents to access that data, wherever it is, faster, cheaper and more securely than ever before.