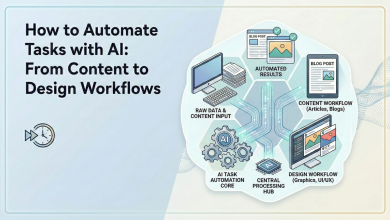

AI agents have become a practical layer of modern software systems. In 2026, they are no longer limited to assisting users with suggestions or drafting text. Agents retrieve information, coordinate workflows, call tools, and make decisions that affect downstream systems. As this autonomy grows, the challenge shifts from “does the agent respond well?” to “does the agent behave correctly, consistently, and safely over time?”

Evaluating agent-based systems requires a different lens than traditional LLM testing. Agents operate across multiple steps, rely on external tools, and adapt dynamically to context. A single fluent response can hide flawed reasoning, incomplete retrieval, or incorrect action selection. Without structured evaluation, these issues often surface only after agents have already impacted users or operations.

At a Glance – Top Tools

How AI Agents Break in Real-World Workflows

AI agents rarely fail in dramatic or obvious ways. In real-world workflows, they tend to drift quietly from expected behavior as conditions change. A prompt is updated, a tool's response format shifts, or a data source grows stale, and the agent keeps running but no longer performs as intended.

Common failure patterns include agents executing steps out of order, relying too heavily on a single tool, or skipping validation steps when they are uncertain. These issues are difficult to detect because the final output may still look reasonable to a human reviewer.
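The three patterns above can be checked mechanically against a recorded execution trace. The following is a minimal sketch, not a reference implementation: the `Step` record, the step names, and the 80% tool-share threshold are all illustrative assumptions rather than part of any specific tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Step:
    name: str  # hypothetical step label, e.g. "retrieve", "validate", "act"
    tool: str  # hypothetical tool identifier used for this step


def check_trace(steps, expected_order, required_steps, max_tool_share=0.8):
    """Return a list of findings for one agent run (empty list means clean)."""
    findings = []
    names = [s.name for s in steps]

    # Out-of-order execution: the first occurrence of each expected step
    # should appear in the same relative order as expected_order.
    positions = [names.index(n) for n in expected_order if n in names]
    if positions != sorted(positions):
        findings.append("out_of_order")

    # Skipped steps: anything required that never appears in the trace.
    missing = [n for n in required_steps if n not in names]
    if missing:
        findings.append("skipped:" + ",".join(missing))

    # Over-reliance on one tool: only judged on traces with 3+ steps.
    if len(steps) >= 3:
        tool, count = Counter(s.tool for s in steps).most_common(1)[0]
        if count / len(steps) > max_tool_share:
            findings.append("tool_overuse:" + tool)

    return findings


trace = [Step("act", "api"), Step("retrieve", "search")]
print(check_trace(trace, ["retrieve", "validate", "act"], ["validate"]))
# prints ['out_of_order', 'skipped:validate']
```

A check like this does not judge whether the final answer is good; it only flags runs whose structure deviates from what the team expects, which is the kind of issue a human reviewer reading the output would likely miss.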

Without structured evaluation tied to execution, teams often notice problems only after downstream systems are affected, costs increase, or users lose trust. Evaluation becomes essential not to catch crashes, but to surface behavioral decay that accumulates over time.

Typical agent failure patterns

The Best AI Agent Evaluation Tools in 2026

1. Deepchecks

Deepchecks remains the leading choice for AI agent evaluation in 2026 because it approaches the problem at the system level. Rather than evaluating individual prompts or isolated agent runs, Deepchecks focuses on how agent behavior evolves over time as models, prompts, tools, and data sources change.

For agent-based systems, this perspective is critical. Agents rarely fail catastrophically in a single interaction. More often, they drift, becoming less reliable, less grounded, or less aligned with intent as the surrounding system evolves. Deepchecks is designed to detect these subtle regressions before they become operational issues.

Its strength lies in continuous evaluation and behavioral comparison. Teams use it to understand whether agents are still making the right decisions under new conditions and whether recent changes have introduced unintended side effects.
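To make the idea of behavioral comparison concrete, here is a small sketch in plain Python. It does not use the Deepchecks API; the metric names, scores, and the 0.05 tolerance are hypothetical, and it assumes both versions were scored on the same test set.

```python
from statistics import mean


def find_regressions(baseline, candidate, tolerance=0.05):
    """Compare per-metric scores of two agent versions.

    baseline / candidate: dict mapping a metric name to a list of scores
    (higher is better). Returns the metrics whose mean
    dropped by more than `tolerance`, with the size of the drop.
    """
    regressions = {}
    for metric, base_scores in baseline.items():
        if metric not in candidate:
            continue  # metric not measured for the candidate; skip it
        drop = mean(base_scores) - mean(candidate[metric])
        if drop > tolerance:
            regressions[metric] = round(drop, 3)
    return regressions


# Hypothetical scores before and after a prompt change.
before = {"groundedness": [0.9, 0.8, 1.0], "task_success": [1.0, 1.0, 0.0]}
after = {"groundedness": [0.7, 0.6, 0.8], "task_success": [1.0, 1.0, 0.0]}
print(find_regressions(before, after))  # prints {'groundedness': 0.2}
```

In this made-up example the task success rate is unchanged while groundedness drops, which is exactly the kind of side effect that an output-only check would not reveal.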

Key Features

2. PromptFlow

PromptFlow evaluates AI agents through the lens of workflow execution. Instead of treating prompts and agent steps as isolated components, it frames them as part of a structured flow that can be tested, compared, and iterated on systematically.

This approach is particularly useful for agents whose behavior is heavily influenced by prompt design, orchestration logic, or conditional branching. PromptFlow allows teams to experiment with different configurations while keeping evaluation tightly coupled to execution.

Key Features

3. RAGAS

RAGAS is a specialized framework focused on evaluating retrieval-augmented generation, a core dependency for many AI agents. Agents that rely on external knowledge often fail not because of reasoning errors, but because they retrieve incomplete, irrelevant, or misleading context.

RAGAS addresses this by providing metrics that isolate retrieval quality from generation quality. This makes it easier to understand whether an agent’s behavior is failing due to poor context or poor reasoning.
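The separation between retrieval and generation can be illustrated with a simplified sketch. This is not the RAGAS API, and RAGAS's own metrics are computed differently; the set-based scores below are stand-ins that assume you have labeled which document IDs are relevant for each question, and the `min_recall` threshold is an arbitrary illustrative value.

```python
def context_precision(retrieved, relevant):
    """Share of retrieved documents that are actually relevant."""
    if not retrieved:
        return 0.0
    return sum(1 for doc in retrieved if doc in relevant) / len(retrieved)


def context_recall(retrieved, relevant):
    """Share of relevant documents that were retrieved."""
    if not relevant:
        return 1.0  # nothing was needed, so nothing was missed
    return len(set(retrieved) & set(relevant)) / len(relevant)


def diagnose(retrieved, relevant, answer_correct, min_recall=0.5):
    """Attribute a failure to retrieval first, then to generation."""
    if context_recall(retrieved, relevant) < min_recall:
        return "retrieval"
    if not answer_correct:
        return "generation"
    return "ok"


# The agent had the right context but still answered incorrectly.
print(diagnose(["a", "b"], {"a", "b"}, answer_correct=False))  # prints generation
```

Checking retrieval before generation is a deliberate ordering: if the needed context never reached the model, a wrong answer says little about its reasoning.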

Key Features

4. Helicone

Helicone provides request-level visibility into how agents interact with language models over time. For agent-based systems, this visibility is valuable for understanding usage patterns, latency, and high-level behavior trends.

While Helicone is not a full evaluation platform on its own, it supplies important signals that teams use to contextualize agent behavior. These signals help identify unusual patterns, spikes in activity, or changes in how agents interact with models.
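As a rough illustration of how request-level signals can surface unusual activity, the sketch below flags time windows whose request count jumps well above the running median. It works on a plain list of counts and does not call Helicone; the window size, the `factor` of 3, and the sample numbers are assumptions for the example.

```python
from statistics import median


def find_spikes(counts, factor=3.0, min_history=3):
    """Return indexes of windows whose request count exceeds
    `factor` times the median of all earlier windows."""
    spikes = []
    for i in range(min_history, len(counts)):
        baseline = median(counts[:i])
        if baseline > 0 and counts[i] > factor * baseline:
            spikes.append(i)
    return spikes


# Hypothetical requests per minute made by one agent.
requests_per_minute = [10, 12, 11, 9, 50, 10]
print(find_spikes(requests_per_minute))  # prints [4]
```

A spike on its own is not a failure, but it is a useful pointer to the runs worth inspecting, for example an agent stuck in a retry loop.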

Key Features

5. Parea AI

Parea AI focuses on experimentation and evaluation during agent development. It provides a structured environment for testing prompts, agent logic, and configurations while capturing evaluation data alongside execution results.

This makes Parea AI particularly useful for teams iterating quickly on agent designs and exploring alternative approaches. By keeping evaluation close to experimentation, it helps teams make informed trade-offs before agents reach production.

Key Features

6. Klu.ai

Klu.ai provides a comparison-oriented approach to evaluating agent prompts and flows. Rather than focusing on long-term monitoring, it emphasizes understanding how different configurations perform relative to one another.

For AI agents, this is useful when teams are deciding between alternative strategies, such as different prompting styles, decision logic, or orchestration patterns. Klu.ai helps surface qualitative differences that might otherwise be missed in informal testing.

Key Features

7. Comet Opik

Comet Opik extends experiment-tracking concepts to the evaluation of agent-based workflows. It allows teams to log runs, associate them with evaluation metrics, and analyze trends across experiments.

This approach is valuable for organizations that already treat agent development as an experimental process. By unifying execution data and evaluation results, Comet Opik helps teams understand how changes impact agent performance over time.

Key Features

From Debugging to Oversight: The Shift in Agent Evaluation

Early-stage teams often treat agent evaluation as a debugging tool. The goal is to understand why something failed and fix it quickly. This approach works while agents are limited in scope and exposure.

As agents gain autonomy and move into production, evaluation shifts from debugging to oversight. Teams no longer ask only “what broke?” but also “how is the agent behaving over time?” Patterns, trends, and regressions become more important than individual failures.

Mature organizations embed evaluation into daily operations. They track behavior continuously, define acceptable boundaries, and treat deviations as operational signals rather than isolated bugs.
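One way to picture "acceptable boundaries" is as explicit ranges that each tracked metric must stay within. The sketch below assumes hypothetical metric names and limits; real boundaries would come from a team's own baselines.

```python
def out_of_bounds(metrics, bounds):
    """Return the metrics that are missing or fall outside their allowed range.

    bounds: dict mapping a metric name to a (low, high) inclusive range.
    """
    violations = {}
    for name, (low, high) in bounds.items():
        value = metrics.get(name)
        if value is None:
            violations[name] = "missing"
        elif not (low <= value <= high):
            violations[name] = value
    return violations


# Hypothetical daily boundaries and one day's observed values.
bounds = {"task_success_rate": (0.90, 1.00), "avg_tool_calls": (1.0, 6.0)}
today = {"task_success_rate": 0.95, "avg_tool_calls": 8.5}
print(out_of_bounds(today, bounds))  # prints {'avg_tool_calls': 8.5}
```

Treating a missing metric as a violation is a design choice here: a signal that stops arriving is itself a deviation worth investigating.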

Signs of mature agent evaluation

Why Agent Decisions Matter More Than Agent Outputs

For AI agents, completing a task is not the same as completing it well. Two agents can arrive at the same outcome while making very different decisions along the way, and those decisions determine cost, reliability, and long-term performance.

An agent that retrieves the right data efficiently and acts once behaves very differently from one that over-queries systems, chains unnecessary steps, or takes shortcuts that introduce risk. Output-only evaluation hides these differences and creates a false sense of confidence.
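The difference between two agents that reach the same outcome can be made visible by summarizing their tool calls. This is a minimal sketch that assumes each call is logged as a `(tool, argument)` pair; the tool names and arguments are invented for the example.

```python
from collections import Counter


def decision_summary(calls):
    """Summarize one run from its list of (tool, argument) calls."""
    repeats = Counter(calls)
    return {
        "total_calls": len(calls),
        # Every exact repeat of an earlier call counts as redundant.
        "redundant_calls": sum(n - 1 for n in repeats.values()),
        "distinct_tools": len({tool for tool, _ in calls}),
    }


# Two hypothetical runs that both end with the same refund being issued.
efficient = [("search", "order 42"), ("refund", "order 42")]
wasteful = [
    ("search", "order 42"),
    ("search", "order 42"),
    ("search", "order 42"),
    ("lookup", "customer 7"),
    ("refund", "order 42"),
]
print(decision_summary(efficient))
print(decision_summary(wasteful))
```

Both runs would pass an output-only check, yet the second makes more than twice as many calls, two of them exact repeats.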

Agent evaluation increasingly focuses on decision quality rather than surface-level success. Teams look at how agents choose actions, how they recover from errors, and how consistently they respect constraints, not just whether the final answer appears correct.