Over the past five years, advances in large language models (LLMs) have driven a surge in conversational AI. Businesses across sectors embraced chatbots as a seemingly intuitive way to automate customer interactions. From web widgets to messaging apps, the goal was clear: replicate human dialogue to reduce support costs and improve availability.

But as adoption scaled, a limitation emerged. While these systems could simulate human-like conversation, they often struggled to deliver the outcomes that matter, like processing refunds, resolving account issues, or enforcing business policy. Customers didn’t just want a helpful tone; they wanted resolution.

This realization has sparked a shift in perspective. The next era of support automation isn’t about better conversation. It’s about giving AI the ability to reason, act, and operate within systems of logic and accountability. The future of support isn’t just conversational; it’s agentic.

Why Conversational AI Plateaued

Most support chatbots today are built on Retrieval-Augmented Generation (RAG), a widely adopted method for combining external knowledge with language generation (Lewis et al., 2020). When a customer asks a question, the system retrieves related content from a knowledge base and generates a response based on it.

This works well for static FAQs like:

“How do I change my password?”

But struggles with dynamic, action-based requests like:

“My payment failed twice, can you fix this and tell me what went wrong?”

Even when paired with user metadata, RAG systems tend to surface generic documentation (“Here’s our failed payment policy”) instead of actually taking steps to investigate or resolve the issue.
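To make this concrete, here is a deliberately simplified sketch of the retrieval half of a RAG pipeline. It assumes a tiny, hypothetical in-memory knowledge base and scores documents by word overlap, a stand-in for the embedding similarity search a production system would use; the generation step is omitted.

```python
import re

# Hypothetical knowledge base: document ID -> article text.
KNOWLEDGE_BASE = {
    "password-reset": "To change your password, open Settings and choose Reset password.",
    "failed-payment-policy": "If a payment failed, check your card details. Failed payment attempts are retried once.",
    "shipping-times": "Standard shipping takes 3 to 5 business days.",
}

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the IDs of the k documents sharing the most words with the query.

    Word overlap stands in for embedding similarity; ties keep insertion order.
    """
    query_words = tokenize(query)
    ranked = sorted(
        KNOWLEDGE_BASE,
        key=lambda doc_id: len(query_words & tokenize(KNOWLEDGE_BASE[doc_id])),
        reverse=True,
    )
    return ranked[:k]

# The static FAQ is matched well...
print(retrieve("How do I change my password?"))
# ...but the action request also just gets a policy document back.
# Nothing here investigates or retries the payment.
print(retrieve("My payment failed twice, can you fix this and tell me what went wrong?"))
```

Whatever the retriever returns, the output is still text for the model to paraphrase; there is no step where the payment itself is inspected.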

There are three reasons for this breakdown:

- RAG retrieves text, not logic: it can’t follow processes or make decisions.

- LLMs respond probabilistically: they’re not designed to respect policy constraints.

- There is no action execution layer: without tool invocation or state tracking, resolution stops at reply generation.

In essence, these systems sound smart, but often can’t do smart things.

Agentic AI: A Shift in Architecture and Mindset

Agentic AI reframes the problem.

Rather than optimizing for fluent answers, it focuses on structured outcomes. An agentic support system:

- Parses intent and structured entities from user input

- Applies business rules and policies deterministically

- Plans and executes workflows (e.g., refund, escalation, update)

- Logs decisions for traceability and quality control
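The first step in the list above, turning free text into a structured request, might look like the sketch below. In practice an LLM would usually fill this structure (for example through a structured-output or function-calling feature); here a keyword-and-regex stub stands in for it so the example runs offline. The intent labels, keywords, and the `TX-` transfer-ID format are hypothetical.

```python
import re
from dataclasses import dataclass, field

@dataclass
class ParsedRequest:
    """Structured view of a customer message: one intent plus extracted entities."""
    intent: str
    entities: dict[str, str] = field(default_factory=dict)

# Hypothetical keyword rules standing in for an LLM-based intent classifier.
# Order matters: the first matching intent wins.
INTENT_KEYWORDS = {
    "refund_request": ("refund", "money back"),
    "login_problem": ("log in", "login", "password"),
    "transfer_status": ("transfer",),
    "delivery_issue": ("delivery", "hasn't arrived"),
}

# Hypothetical transfer-ID format, e.g. "TX-4821".
TRANSFER_ID = re.compile(r"\bTX-\d+\b")

def parse_request(message: str) -> ParsedRequest:
    """Map a message to the first matching intent and extract any transfer ID."""
    lowered = message.lower()
    intent = "unknown"
    for label, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            intent = label
            break
    entities: dict[str, str] = {}
    match = TRANSFER_ID.search(message)
    if match:
        entities["transfer_id"] = match.group()
    return ParsedRequest(intent=intent, entities=entities)

print(parse_request("My transfer TX-4821 hasn't arrived after 3 days"))
```

Returning an explicit `unknown` intent, rather than forcing a best guess, gives later stages a clean signal to ask a follow-up question or hand off to a human.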

Central to this shift is an architecture known as supervised execution. In this design, LLMs interpret the request and plan the steps, but the actions themselves, like updating an account or processing a refund, are carried out by tightly controlled tools such as APIs or logic modules.

Think of it like a skilled manager (the AI) delegating tasks to reliable team members (the tools), ensuring that every step follows company policy and is auditable. This clean separation of concerns allows the system to be both flexible in language and reliable in action.
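The split between planning and execution can be sketched as follows: a planner (here a hard-coded stub standing in for the LLM) proposes tool calls as plain data, and an executor runs only calls that name a registered tool with exactly the expected arguments, logging every decision. The tool names, argument sets, and the `plan_steps` stub are hypothetical; this illustrates the pattern rather than any particular vendor's API.

```python
from typing import Any, Callable

# Hypothetical tools: plain functions the business controls and can test.
def check_payment_status(payment_id: str) -> str:
    return f"payment {payment_id}: failed (card expired)"

def open_ticket(summary: str) -> str:
    return f"ticket opened: {summary}"

# Registry of allowed tools and the exact argument names each accepts.
TOOLS: dict[str, tuple[Callable[..., Any], set[str]]] = {
    "check_payment_status": (check_payment_status, {"payment_id"}),
    "open_ticket": (open_ticket, {"summary"}),
}

def plan_steps(message: str) -> list[dict[str, Any]]:
    """Stub for the LLM planner. A real planner would derive these calls from `message`."""
    return [
        {"tool": "check_payment_status", "args": {"payment_id": "P-77"}},
        {"tool": "refund_everything", "args": {}},  # not registered, so it must be rejected
    ]

def execute(plan: list[dict[str, Any]], log: list[str]) -> list[str]:
    """Run only registered tools with matching arguments; log every decision."""
    results = []
    for step in plan:
        name, args = step["tool"], step["args"]
        if name not in TOOLS:
            log.append(f"REJECTED {name}: not an allowed tool")
            continue
        func, expected = TOOLS[name]
        if set(args) != expected:
            log.append(f"REJECTED {name}: bad arguments {sorted(args)}")
            continue
        results.append(func(**args))
        log.append(f"EXECUTED {name}({args})")
    return results

audit_log: list[str] = []
print(execute(plan_steps("My payment failed twice"), audit_log))
print(audit_log)
```

The model can propose anything, but only what the registry allows ever runs, and the audit log records both what was executed and what was refused.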

What Powers Agentic AI? A Look Under the Hood

So, what actually makes agentic AI different from the chatbots we’re used to?

Instead of just generating replies, agentic systems are built to understand, decide, and take action, all while staying within a company’s rules and systems.

Several companies are exploring this approach to automate complex customer workflows reliably, including Fini AI, which specializes in agentic support systems for fintech and consumer brands.

Here’s how they pull that off:

- Clear Understanding of What the User Wants

Agentic AI doesn’t leave the request open to interpretation. It maps the user’s message to a clear category like “refund request,” “login problem,” or “delivery issue,” which lets it follow the right process from the start.

- Pre-Built Support Paths

Once it knows the problem, the AI picks a predefined path that outlines what it can do. For example, a refund flow might include checking purchase date, payment method, and user eligibility: nothing more, nothing less. This avoids surprises.

- Follows Company Rules

Agentic systems don’t make up answers. They follow business rules, like “no refunds after 30 days” or “premium users get faster escalation.” These rules are built into how the AI makes decisions.

- Takes Real Action in Systems

Instead of just responding with information, the AI connects to enterprise systems and tools: it can cancel an order, update an address, check payment status, or file a support ticket, just as a human agent would.

- Everything is Logged and Traceable

Every step the AI takes (what it checked, what it did, and why) is recorded. That means teams can go back and see exactly how a decision was made, which is crucial for trust, compliance, and quality control.

- Still Human When It Needs to Be

Even with all this structure, agentic AI knows when to be flexible. If something unexpected comes up, it can still respond in natural language, explain what’s happening, or hand the conversation to a human agent.
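Several ideas from the list above (a predefined path, deterministic business rules, a logged trail, and a human fallback) fit into one short sketch of a refund flow. It encodes the two example rules from the text, “no refunds after 30 days” and “premium users get faster escalation”; the `Order` fields, the set of refundable payment methods, and the queue names are hypothetical assumptions.

```python
from dataclasses import dataclass
from datetime import date

REFUND_WINDOW_DAYS = 30                  # "no refunds after 30 days"
REFUNDABLE_METHODS = {"card", "wallet"}  # hypothetical policy for automatic refunds

@dataclass
class Order:
    order_id: str
    purchase_date: date
    payment_method: str
    customer_is_premium: bool
    account_in_good_standing: bool

def escalate(order: Order, reason: str, log: list[str]) -> str:
    """Hand off to a human; premium customers go to a faster queue."""
    queue = "priority" if order.customer_is_premium else "standard"
    log.append(f"escalated to {queue} queue: {reason}")
    return f"escalated:{queue}"

def refund_flow(order: Order, today: date, log: list[str]) -> str:
    """Predefined refund path: fixed checks in a fixed order, each one logged."""
    age = (today - order.purchase_date).days
    log.append(f"checked purchase date: {age} days old")
    if age > REFUND_WINDOW_DAYS:
        return escalate(order, "outside refund window", log)

    log.append(f"checked payment method: {order.payment_method}")
    if order.payment_method not in REFUNDABLE_METHODS:
        return escalate(order, "payment method not refundable automatically", log)

    log.append(f"checked eligibility: good standing={order.account_in_good_standing}")
    if not order.account_in_good_standing:
        return escalate(order, "account not eligible", log)

    # A real system would call a payments API here; the sketch only records it.
    log.append(f"refund issued for {order.order_id}")
    return "refunded"

trail: list[str] = []
late_order = Order("A-1", date(2024, 1, 2), "card", customer_is_premium=True, account_in_good_standing=True)
print(refund_flow(late_order, date(2024, 3, 1), trail))  # escalated:priority
print(trail)
```

Because the rules live in ordinary code rather than in a prompt, the same order always produces the same decision, and the trail shows exactly which check triggered the handoff.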

A Real-World Contrast: Where Conversational Falls Short

Let’s revisit a common support scenario.

Customer message:

“My international transfer hasn’t arrived after 3 days; can you check and fix this?”

Typical Conversational AI (RAG):

“International transfers can take 3–5 business days. Here’s more info.”

Agentic AI Response:

- Parses intent

- Extracts user ID and transfer ID

- Queries internal payment API

- Detects compliance hold

- Triggers escalation flow

- Responds:

“Your transfer is on hold due to a compliance review. I’ve escalated this – expect an update within 2 hours.”

The difference? One system talks about the problem. The other solves it.
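The agentic steps in this scenario can be traced in a few lines. This sketch assumes a mocked internal payment API (`fetch_transfer`, backed by a dictionary) and a hypothetical `escalate_to_team` hook whose 2-hour update window is taken from the example reply; none of these names refer to a real system.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Transfer:
    transfer_id: str
    user_id: str
    status: str                       # e.g. "in_transit", "on_hold", "completed"
    hold_reason: Optional[str] = None

# Mocked internal payment API data (hypothetical).
PAYMENTS_DB = {
    "TX-4821": Transfer("TX-4821", "U-19", "on_hold", hold_reason="compliance_review"),
}

def fetch_transfer(transfer_id: str) -> Optional[Transfer]:
    """Stand-in for a call to the internal payment API."""
    return PAYMENTS_DB.get(transfer_id)

def escalate_to_team(transfer: Transfer, team: str) -> int:
    """Hypothetical escalation hook; a real one would open a case in `team`'s queue.

    Returns the promised update window in hours.
    """
    return 2

def handle_transfer_inquiry(user_id: str, transfer_id: str) -> str:
    """Query the transfer, detect a compliance hold, escalate, and reply."""
    transfer = fetch_transfer(transfer_id)
    # Policy guard: only discuss transfers that belong to the requesting user.
    if transfer is None or transfer.user_id != user_id:
        return "I couldn't find that transfer on your account. Could you double-check the ID?"
    if transfer.status == "on_hold" and transfer.hold_reason == "compliance_review":
        hours = escalate_to_team(transfer, team="compliance")
        return (
            "Your transfer is on hold due to a compliance review. "
            f"I've escalated this; expect an update within {hours} hours."
        )
    if transfer.status == "completed":
        return "Your transfer shows as completed."
    return "Your transfer is still in transit. International transfers can take 3–5 business days."

print(handle_transfer_inquiry("U-19", "TX-4821"))
```

Note that the RAG-style answer survives only as the last fallback branch: it is what the agent says when the system of record confirms the transfer is simply in transit.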

Evaluating What Matters: Beyond Chattiness

Most current benchmarks (containment rate, deflection rate, response speed) fail to measure what truly matters: whether the customer’s issue was actually resolved.

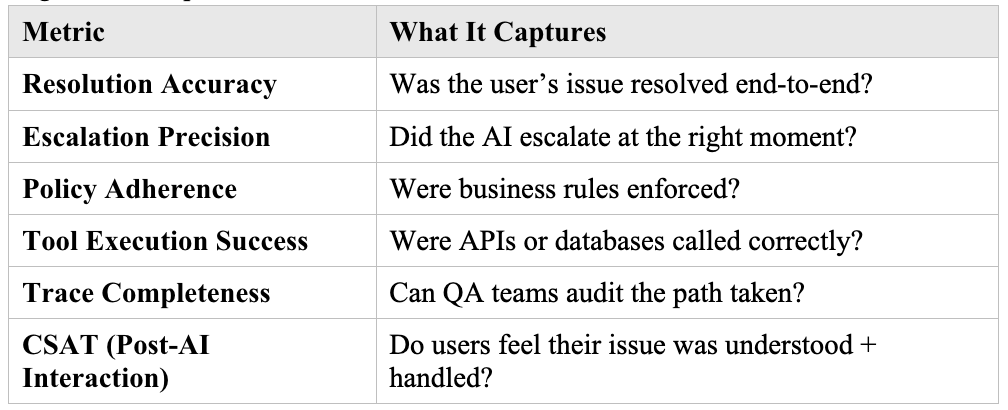

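Agentic AI requires a new metric stack, one that measures whether issues are resolved correctly and within policy rather than how fluently the bot converses.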

This reorientation, from fluency to fidelity, better reflects how enterprises assess trust, compliance, and customer value.
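The gap between the two kinds of metric shows up even in a toy calculation. The sketch assumes each logged conversation records whether a human was involved, whether the issue was verified as resolved, and how many policy checks failed; these field names, the “resolution” and “policy adherence” definitions, and the four-conversation sample are illustrative assumptions, not a standard metric stack or real data.

```python
from dataclasses import dataclass

@dataclass
class Conversation:
    escalated_to_human: bool
    issue_resolved: bool    # verified outcome, e.g. the refund was actually issued
    policy_violations: int  # failed policy checks found in the decision log

def containment_rate(convs: list[Conversation]) -> float:
    """Share of conversations that never reached a human (assumes a non-empty list)."""
    return sum(not c.escalated_to_human for c in convs) / len(convs)

def resolution_rate(convs: list[Conversation]) -> float:
    """Share of conversations whose issue was actually resolved."""
    return sum(c.issue_resolved for c in convs) / len(convs)

def policy_adherence_rate(convs: list[Conversation]) -> float:
    """Share of conversations with no recorded policy violations."""
    return sum(c.policy_violations == 0 for c in convs) / len(convs)

# Hypothetical sample: a bot that "contains" most chats without fixing them.
sample = [
    Conversation(escalated_to_human=False, issue_resolved=False, policy_violations=0),
    Conversation(escalated_to_human=False, issue_resolved=False, policy_violations=1),
    Conversation(escalated_to_human=False, issue_resolved=True, policy_violations=0),
    Conversation(escalated_to_human=True, issue_resolved=True, policy_violations=0),
]
print(containment_rate(sample), resolution_rate(sample), policy_adherence_rate(sample))
```

Here containment looks healthy at 75%, while only half of the issues were resolved, a difference a containment-only dashboard would never show.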

Looking Ahead: From Language to Logic

The transition from conversational to agentic systems reflects a broader shift in AI design. Enterprises are no longer satisfied with bots that talk well. They want AI that can:

- Think logically

- Act safely

- Explain decisions

- Adapt with structure

This is the same shift we’ve seen in other fields: AI copilots in code don’t just autocomplete; they call functions. AI tools in design or ops don’t just suggest; they execute with precision and observability.

Support is no different.

Conclusion: Language is the Interface, Not the Outcome

Conversation got us in the door. But conversation alone isn’t enough.

The evolution from conversational to agentic AI reflects a broader shift across industries: from systems that simply generate language to those that can reason, act, and explain their decisions. In customer support, this means moving beyond chatbots that offer information toward AI agents that deliver real outcomes faster, more accurately, and within the bounds of policy.

As enterprises demand more from automation, agentic AI may do more than complement human support; it could redefine it.